Mohamed A. Mabrok

X-VMamba: Explainable Vision Mamba

Nov 16, 2025

Abstract: State Space Models (SSMs), particularly the Mamba architecture, have recently emerged as powerful alternatives to Transformers for sequence modeling, offering linear computational complexity while achieving competitive performance. Yet, despite their effectiveness, understanding how these Vision SSMs process spatial information remains challenging due to the lack of transparent, attention-like mechanisms. To address this gap, we introduce a controllability-based interpretability framework that quantifies how different parts of the input sequence (tokens or patches) influence the internal state dynamics of SSMs. We propose two complementary formulations: a Jacobian-based method applicable to any SSM architecture that measures influence through the full chain of state propagation, and a Gramian-based approach for diagonal SSMs that achieves superior speed through closed-form analytical solutions. Both methods operate in a single forward pass with linear complexity, requiring no architectural modifications or hyperparameter tuning. We validate our framework through experiments on three diverse medical imaging modalities, demonstrating that SSMs naturally implement hierarchical feature refinement from diffuse low-level textures in early layers to focused, clinically meaningful patterns in deeper layers. Our analysis reveals domain-specific controllability signatures aligned with diagnostic criteria, progressive spatial selectivity across the network hierarchy, and the substantial influence of scanning strategies on attention patterns. Beyond medical imaging, we articulate applications spanning computer vision, natural language processing, and cross-domain tasks. Our framework establishes controllability analysis as a unified, foundational interpretability paradigm for SSMs across all domains. Code and analysis tools will be made available upon publication.
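As a rough illustration of the idea of token-level controllability in a diagonal SSM, the sketch below scores each token by how strongly its input can move the final hidden state. It is not the paper's implementation: the recurrence `x_t = a_t * x_{t-1} + b_t * u_t`, the function name `token_influence`, and the squared-norm score are all assumptions made for this example.

```python
import numpy as np

def token_influence(a, b):
    """Score each token's influence on the final state of a diagonal SSM.

    Assumed (hypothetical) recurrence, elementwise over the state dimension:
        x_t = a[t] * x_{t-1} + b[t] * u_t
    a, b: arrays of shape (T, N) holding per-token diagonal transitions
    and input vectors. Returns an array of T non-negative scores.
    """
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    T, N = a.shape

    # suffix[t] = product of a[s] for s = t+1 .. T-1 (all ones for the last token)
    suffix = np.ones((T, N))
    for t in range(T - 2, -1, -1):
        suffix[t] = suffix[t + 1] * a[t + 1]

    # d x_{T-1} / d u_t for each token: decayed input vector
    reach = suffix * b

    # Squared norm per token; summed over tokens this equals the trace
    # of the finite-horizon controllability Gramian of this recurrence.
    return np.sum(reach ** 2, axis=1)

scores = token_influence(np.full((3, 1), 0.5), np.ones((3, 1)))
```

The loop runs once over the sequence, so the cost is linear in the number of tokens, in the spirit of the single-pass claim in the abstract. With a constant transition of 0.5, earlier tokens receive smaller scores because their contribution decays before reaching the final state.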

RISCuer: A Reliable Multi-UAV Search and Rescue Testbed

Jun 12, 2020

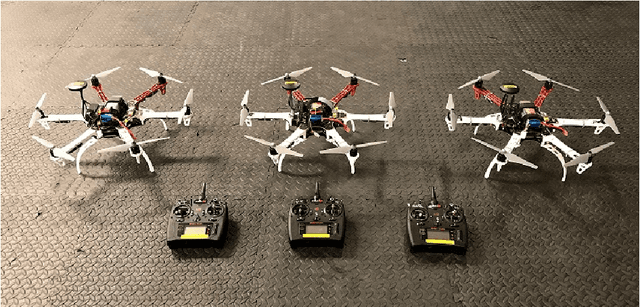

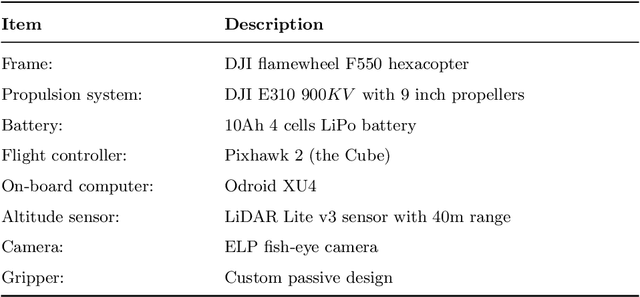

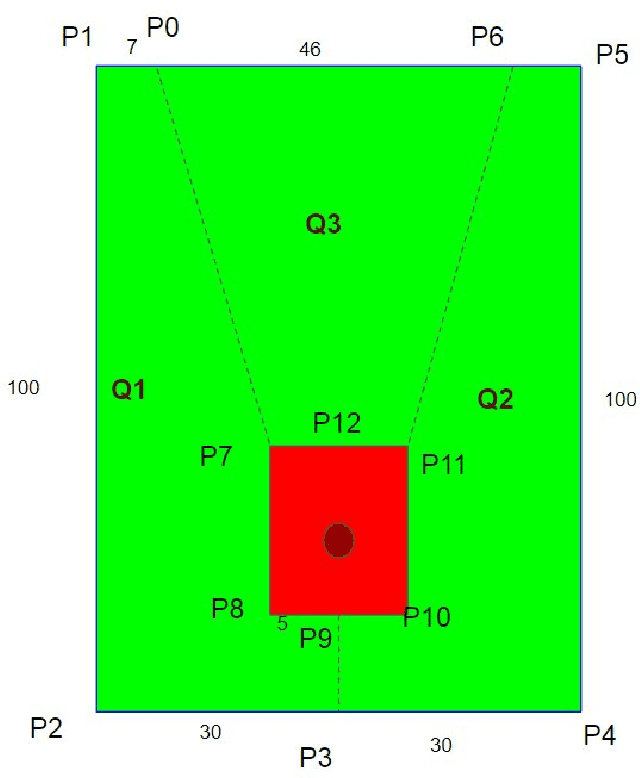

Abstract: We present the RISC Lab multi-agent testbed for reliable search and rescue and aerial transport in outdoor environments. The system consists of a team of three multi-rotor Unmanned Aerial Vehicles (UAVs), which are capable of autonomously searching, picking, and transporting randomly distributed objects in an outdoor field. The method involves vision-based object detection and localization, passive aerial grasping with our novel design, GPS-based UAV navigation, and safe release of the objects at the drop zone. Our cooperative strategy ensures safe spatial separation between UAVs at all times, and we prevent any conflicts at the drop zone using communication-enabled consensus. All computation is performed onboard each UAV. We describe the complete software and hardware architecture for the system and demonstrate its reliable performance using comprehensive outdoor experiments and by comparing our results with some recent, similar works.
