Michael Brauckmann

High-Performance Reinforcement Learning on Spot: Optimizing Simulation Parameters with Distributional Measures

Apr 29, 2025

Abstract:This work presents an overview of the technical details behind a high-performance reinforcement learning policy deployment with the Spot RL Researcher Development Kit for low-level motor access on Boston Dynamics Spot. This represents the first public demonstration of an end-to-end reinforcement learning policy deployed on Spot hardware, with training code publicly available through Nvidia IsaacLab and deployment code available through Boston Dynamics. We use the Wasserstein Distance and Maximum Mean Discrepancy to quantify the distributional dissimilarity between data collected on hardware and data collected in simulation, which gives us a measure of our sim2real gap. We use these measures as a scoring function for the Covariance Matrix Adaptation Evolution Strategy to optimize simulated parameters that are unknown or difficult to measure on Spot. Our procedure for modeling and training produces high-quality reinforcement learning policies capable of multiple gaits, including a flight phase. We deploy policies capable of over 5.2 m/s locomotion, more than triple the maximum speed of Spot's default controller, along with robustness to slippery surfaces, disturbance rejection, and overall agility previously unseen on Spot. We detail our method and release our code to support future work on Spot with the low-level API.
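The two distributional measures named in the abstract can be illustrated with a minimal sketch. It is not the authors' released code: the function names, the restriction to one-dimensional samples of equal size, the RBF kernel, and the `bandwidth` parameter are all assumptions made here for illustration. Either value could in principle be returned to an optimizer such as CMA-ES as the score of a candidate set of simulation parameters.

```python
import math


def wasserstein_1d(xs, ys):
    """1-Wasserstein distance between two 1-D samples of equal size.

    For equal-size empirical distributions this reduces to the mean
    absolute difference between the sorted samples.
    """
    if len(xs) != len(ys):
        raise ValueError("this sketch assumes equal sample sizes")
    return sum(abs(a - b) for a, b in zip(sorted(xs), sorted(ys))) / len(xs)


def rbf_kernel(a, b, bandwidth):
    """Gaussian (RBF) kernel between two scalars (kernel choice is assumed)."""
    return math.exp(-((a - b) ** 2) / (2.0 * bandwidth ** 2))


def mmd_squared(xs, ys, bandwidth=1.0):
    """Biased (V-statistic) estimate of the squared Maximum Mean Discrepancy."""
    kxx = sum(rbf_kernel(a, b, bandwidth) for a in xs for b in xs) / len(xs) ** 2
    kyy = sum(rbf_kernel(a, b, bandwidth) for a in ys for b in ys) / len(ys) ** 2
    kxy = sum(rbf_kernel(a, b, bandwidth) for a in xs for b in ys) / (len(xs) * len(ys))
    return kxx + kyy - 2.0 * kxy


# Hypothetical data: one logged signal from hardware and from simulation.
hardware = [0.0, 1.0, 2.0]
simulated = [3.0, 1.0, 2.0]
gap_wasserstein = wasserstein_1d(hardware, simulated)
gap_mmd = mmd_squared(hardware, simulated)
```

Both measures are zero when the two samples coincide and grow as the simulated data drifts away from the hardware data, which is what makes them usable as a minimization target.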

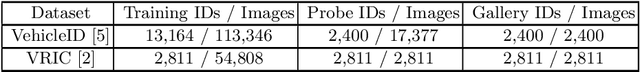

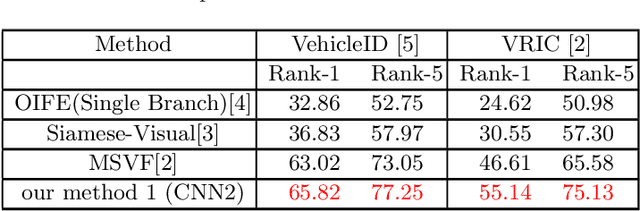

Methods of the Vehicle Re-identification

Sep 14, 2020

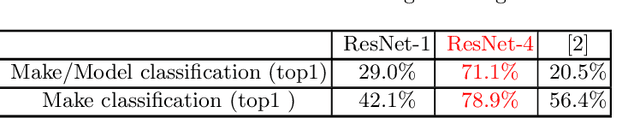

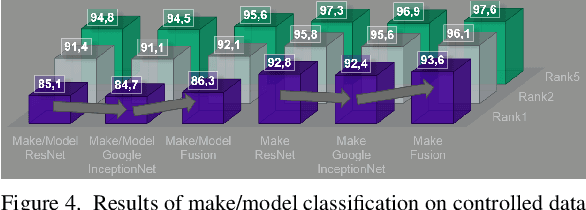

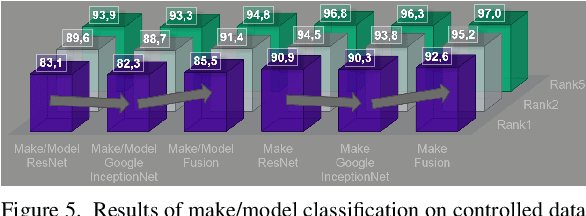

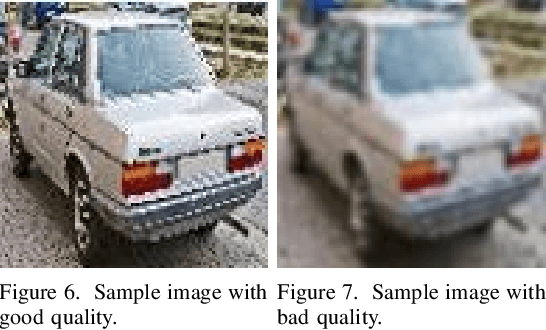

Abstract:Most researchers approach vehicle re-identification through classification, which requires an update whenever new vehicle models reach the market. In this paper, two types of vehicle re-identification are presented. The first is the standard method, which needs an image of the search vehicle. The VRIC and VehicleID data sets are suitable for training this module. We explain in detail how to improve the performance of this method using a trained network designed for classification. The second method takes as input a representative image of the search vehicle with a similar make/model, release year, and colour. It is very useful when an image of the search vehicle is not available. It produces shape and colour features as output, which can be matched across a database to re-identify vehicles that look similar to the search vehicle. To obtain a robust re-identification module, a fine-grained classification has been trained in which each class consists of four elements: the make, which refers to the vehicle's manufacturer, e.g. Mercedes-Benz; the model, which refers to the type of model within that manufacturer's portfolio, e.g. C Class; the year, which refers to the iteration of the model, which may receive progressive alterations and upgrades from its manufacturer; and the perspective of the vehicle. Thus, all four elements describe the vehicle at an increasing degree of specificity. The aim of the vehicle shape classification is to classify the combination of these four elements. The colour classification has been trained separately. The results of vehicle re-identification are shown. Using a developed tool, the re-identification of vehicles on video images and on a controlled data set is demonstrated. This work was partially funded under the grant.

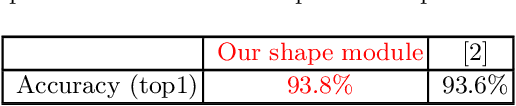

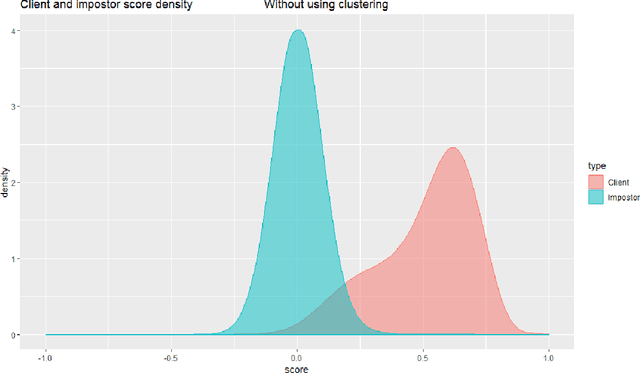

Data Augmentation and Clustering for Vehicle Make/Model Classification

Sep 14, 2020

Abstract:Vehicle shape information is very important in Intelligent Traffic Systems (ITS). In this paper we present a way to exploit a training data set of vehicles released in different years and captured from different perspectives. We also present the efficacy of clustering for enhancing make/model classification. Both steps led to improved classification results and greater robustness. A deeper convolutional neural network based on the ResNet architecture has been designed for training the vehicle make/model classification. The unequal class distribution of the training data produces an a priori probability. Eliminating it, by removing the bias and hard-normalizing the centroids in the classification layer, improves the classification results. A developed application has been used to manually test vehicle re-identification on video data based on make/model and color classification. This work was partially funded under the grant.
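One possible reading of "removing the bias and hard normalization of the centroids" is sketched below. It assumes that each row of the classification layer's weight matrix acts as a class centroid, that hard normalization means rescaling every centroid to unit L2 norm, and that the bias term is simply dropped. The function names are hypothetical and the abstract does not confirm that this matches the authors' implementation.

```python
import math


def l2_normalize(vec):
    """Rescale a vector to unit L2 norm (a zero vector is returned unchanged)."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm > 0 else list(vec)


def bias_free_logits(features, centroids):
    """Score each class as the dot product with its unit-norm centroid.

    No bias is added, so no class receives a constant head start, and
    normalization stops a large-norm centroid from dominating the scores.
    """
    return [
        sum(f * c for f, c in zip(features, l2_normalize(row)))
        for row in centroids
    ]


# Hypothetical example: the first centroid has a much larger norm,
# as might happen for a class that is over-represented in training.
centroids = [[10.0, 0.0], [0.0, 1.0]]
features = [1.0, 1.0]
logits = bias_free_logits(features, centroids)
```

Without normalization the first class would score 10 against 1 for the second. With unit-norm centroids and no bias the two classes tie, so the decision depends only on the direction of the feature vector.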

Vehicle Shape and Color Classification Using Convolutional Neural Network

May 15, 2019

Abstract:This paper presents a module for vehicle re-identification based on make/model and color classification. It could be used for Automated Vehicular Surveillance (AVS) or for fast analysis of video data. Many problems related to this topic had to be addressed. In order to facilitate and accelerate progress on this subject, we present our way of collecting and labeling a large-scale data set. We used deeper neural networks in our training, and they showed good classification accuracy. We show the results of make/model and color classification on a controlled data set and a video data set. With the help of a developed application, we demonstrate the re-identification of vehicles on video images based on make/model and color classification. This work was partially funded under the grant.
