Michael Arnold

Temporal Object Captioning for Street Scene Videos from LiDAR Tracks

May 22, 2025

Abstract: Video captioning models have seen notable advancements in recent years, especially with regard to their ability to capture temporal information. While many research efforts have focused on architectural advancements, such as temporal attention mechanisms, there remains a notable gap in understanding how models capture and utilize temporal semantics for effective temporal feature extraction, especially in the context of Advanced Driver Assistance Systems. We propose an automated LiDAR-based captioning procedure that focuses on the temporal dynamics of traffic participants. Our approach uses a rule-based system to extract essential details such as lane position and relative motion from object tracks, followed by a template-based caption generation. Our findings show that training SwinBERT, a video captioning model, using only front camera images and supervised with our template-based captions, specifically designed to encapsulate fine-grained temporal behavior, leads to improved temporal understanding consistently across three datasets. In conclusion, our results clearly demonstrate that integrating LiDAR-based caption supervision significantly enhances temporal understanding, effectively addressing and reducing the inherent visual/static biases prevalent in current state-of-the-art model architectures.
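The rule-based extraction and template-based caption generation described in this abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the `Track` structure, the 3.5 m lane width, the 0.5 m/s motion threshold, the caption template, and the assumption that the object is ahead of the ego vehicle are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple

LANE_WIDTH_M = 3.5   # assumed lane width, not taken from the paper
SPEED_EPS_MPS = 0.5  # assumed threshold below which distance counts as constant


@dataclass
class Track:
    """Hypothetical object track in the ego frame (x forward, y to the left)."""
    label: str
    # Each state is (time in s, longitudinal x in m, lateral y in m).
    states: List[Tuple[float, float, float]]


def lane_position(y: float) -> str:
    """Map a lateral offset to a coarse lane label."""
    if y > LANE_WIDTH_M / 2:
        return "left"
    if y < -LANE_WIDTH_M / 2:
        return "right"
    return "ego"


def relative_motion(track: Track) -> str:
    """Classify motion from the change in longitudinal distance.

    Assumes the object stays ahead of the ego vehicle (x > 0).
    """
    (t0, x0, _), (t1, x1, _) = track.states[0], track.states[-1]
    rate = (x1 - x0) / (t1 - t0)  # m/s, positive means the gap grows
    if rate > SPEED_EPS_MPS:
        return "moving away from"
    if rate < -SPEED_EPS_MPS:
        return "approaching"
    return "keeping its distance to"


def caption(track: Track) -> str:
    """Fill a fixed template with the extracted lane and motion attributes."""
    lane = lane_position(track.states[-1][2])
    motion = relative_motion(track)
    return f"A {track.label} in the {lane} lane is {motion} the ego vehicle."


if __name__ == "__main__":
    car = Track("car", [(0.0, 30.0, 0.2), (2.0, 20.0, 0.1)])
    print(caption(car))
```

Captions produced this way depend only on the track geometry, which is what lets them describe temporal behavior that a single camera frame does not reveal.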

Adverse Weather Conditions Augmentation of LiDAR Scenes with Latent Diffusion Models

Jan 03, 2025

Abstract: LiDAR scenes constitute a fundamental source for several autonomous driving applications. Despite the existence of several datasets, scenes from adverse weather conditions are rarely available. This limits the robustness of downstream machine learning models, and restrains the reliability of autonomous driving systems in particular locations and seasons. Collecting feature-diverse scenes under adverse weather conditions is challenging due to seasonal limitations. Generative models are therefore essential, especially for generating adverse weather conditions for specific driving scenarios. In our work, we propose a latent diffusion process constituted by an autoencoder and latent diffusion models. Moreover, we leverage the clear condition LiDAR scenes with a postprocessing step to improve the realism of the generated adverse weather condition scenes.

A Preprocessing and Postprocessing Voxel-based Method for LiDAR Semantic Segmentation Improvement in Long Distance

May 16, 2024

Abstract: In recent years, considerable research in LiDAR semantic segmentation has been conducted, introducing several new state-of-the-art models. However, most research focuses on single-scan point clouds, limiting performance especially in long-distance outdoor scenarios by omitting time-sequential information. Moreover, varying density and occlusions constitute significant challenges in single-scan approaches. In this paper, we propose a LiDAR point cloud preprocessing and postprocessing method. This multi-stage approach, in conjunction with state-of-the-art models in a multi-scan setting, aims to solve those challenges. We demonstrate the benefits of our method through quantitative evaluation with the given models in single-scan settings. In particular, we achieve significant improvements in mIoU performance of over 5 percentage points in medium range and over 10 percentage points in far range. This is essential for 3D semantic scene understanding in long distance as well as for applications where offline processing is permissible.
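The range-specific mIoU figures reported in this abstract can be made concrete with a small sketch of how mean intersection-over-union might be computed per distance bin. The function names, the bin boundaries, and the convention of skipping classes absent from both prediction and ground truth are assumptions for illustration; the abstract does not state how the medium and far ranges are defined.

```python
from typing import Dict, Sequence, Tuple


def mean_iou(pred: Sequence[int], gt: Sequence[int], num_classes: int) -> float:
    """Average per-class IoU over classes that occur in pred or gt."""
    ious = []
    for c in range(num_classes):
        inter = sum(1 for p, g in zip(pred, gt) if p == c and g == c)
        union = sum(1 for p, g in zip(pred, gt) if p == c or g == c)
        if union > 0:  # skip classes absent from both (assumed convention)
            ious.append(inter / union)
    return sum(ious) / len(ious) if ious else float("nan")


def miou_by_range(
    pred: Sequence[int],
    gt: Sequence[int],
    dist: Sequence[float],
    bins: Dict[str, Tuple[float, float]],
    num_classes: int,
) -> Dict[str, float]:
    """Compute mIoU separately for points whose distance falls in each bin."""
    result = {}
    for name, (lo, hi) in bins.items():
        idx = [i for i, d in enumerate(dist) if lo <= d < hi]
        result[name] = mean_iou(
            [pred[i] for i in idx], [gt[i] for i in idx], num_classes
        )
    return result


if __name__ == "__main__":
    # Hypothetical bin edges in meters, chosen only for this example.
    bins = {"near": (0.0, 30.0), "far": (30.0, 100.0)}
    scores = miou_by_range(
        pred=[0, 0, 1, 1], gt=[0, 1, 1, 1],
        dist=[5.0, 10.0, 40.0, 60.0], bins=bins, num_classes=2,
    )
    print(scores)
```

Reporting the metric per bin, rather than over the whole cloud, keeps the sparse far-range points from being swamped by the much denser near-range points.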

LMD: Light-weight Prediction Quality Estimation for Object Detection in Lidar Point Clouds

Jun 15, 2023

Abstract: Object detection on Lidar point cloud data is a promising technology for autonomous driving and robotics which has seen a significant rise in performance and accuracy during recent years. In particular, uncertainty estimation is a crucial component for downstream tasks, and deep neural networks remain error-prone even for predictions with high confidence. Previously proposed methods for quantifying prediction uncertainty tend to alter the training scheme of the detector or rely on prediction sampling, which results in vastly increased inference time. In order to address these two issues, we propose LidarMetaDetect (LMD), a light-weight post-processing scheme for prediction quality estimation. Our method can easily be added to any pre-trained Lidar object detector without altering anything about the base model and is purely based on post-processing, therefore leading to only a negligible computational overhead. Our experiments show a significant increase of statistical reliability in separating true from false predictions. We propose and evaluate an additional application of our method leading to the detection of annotation errors. Explicit samples and a conservative count of annotation error proposals indicate the viability of our method for large-scale datasets like KITTI and nuScenes. On the widely-used nuScenes test dataset, 43 out of the top 100 proposals of our method indicate, in fact, erroneous annotations.

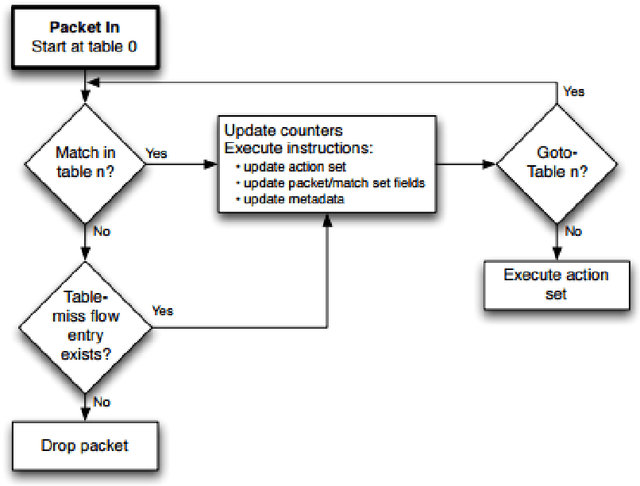

Predictive networking and optimization for flow-based networks

Jul 21, 2017

Abstract: Artificial Neural Networks (ANNs) were used to classify network flows by flow size. After training, a Feed Forward Neural Network was able to predict the size of a flow with 87% accuracy. This demonstrates that flow-based routers can prioritize candidate flows with a predicted large number of packets for priority insertion into hardware content-addressable memory.
