Mehran Yazdi

NeuroLingua: A Language-Inspired Hierarchical Framework for Multimodal Sleep Stage Classification Using EEG and EOG

Nov 12, 2025

Abstract:Automated sleep stage classification from polysomnography remains limited by the lack of expressive temporal hierarchies, challenges in multimodal EEG and EOG fusion, and the limited interpretability of deep learning models. We propose NeuroLingua, a language-inspired framework that conceptualizes sleep as a structured physiological language. Each 30-second epoch is decomposed into overlapping 3-second subwindows ("tokens") using a CNN-based tokenizer, enabling hierarchical temporal modeling through dual-level Transformers: intra-segment encoding of local dependencies and inter-segment integration across seven consecutive epochs (3.5 minutes) for extended context. Modality-specific embeddings from EEG and EOG channels are fused via a Graph Convolutional Network, facilitating robust multimodal integration. NeuroLingua is evaluated on the Sleep-EDF Expanded and ISRUC-Sleep datasets, achieving state-of-the-art results on Sleep-EDF (85.3% accuracy, 0.800 macro F1, and 0.796 Cohen's kappa) and competitive performance on ISRUC (81.9% accuracy, 0.802 macro F1, and 0.755 kappa), matching or exceeding published baselines in overall and per-class metrics. The architecture's attention mechanisms enhance the detection of clinically relevant sleep microevents, providing a principled foundation for future interpretability, explainability, and causal inference in sleep research. By framing sleep as a compositional language, NeuroLingua unifies hierarchical sequence modeling and multimodal fusion, advancing automated sleep staging toward more transparent and clinically meaningful applications.
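The two temporal levels described in this abstract can be illustrated with a minimal windowing sketch. It only shows how a 30-second epoch might be sliced into overlapping 3-second subwindows and how epochs might be grouped into seven-epoch contexts; it is not the paper's CNN tokenizer or Transformer. The sampling rate (100 Hz), the 1.5-second stride, and the function names `split_into_tokens` and `context_sequences` are assumptions made for illustration, since the abstract does not state the overlap.

```python
import numpy as np

def split_into_tokens(epoch, fs=100, win_s=3.0, stride_s=1.5):
    """Slice one 1-D epoch into overlapping subwindows.

    fs and stride_s are assumed values; the abstract gives only the
    3-second window length.
    """
    win = int(round(win_s * fs))
    stride = int(round(stride_s * fs))
    n_tokens = 1 + (len(epoch) - win) // stride
    return np.stack([epoch[i * stride : i * stride + win] for i in range(n_tokens)])

def context_sequences(epochs, context=7):
    """Group consecutive epochs into sliding sequences of `context` epochs."""
    n = len(epochs) - context + 1
    return np.stack([epochs[i : i + context] for i in range(n)])

fs = 100
rng = np.random.default_rng(0)
epochs = rng.standard_normal((10, 30 * fs))   # 10 synthetic 30-second epochs

tokens = split_into_tokens(epochs[0], fs=fs)  # subwindows of the first epoch
sequences = context_sequences(epochs)         # seven-epoch contexts
context_minutes = 7 * 30 / 60                 # 3.5 minutes, as in the abstract
```

With these assumed settings each epoch yields 19 subwindows of 300 samples, and consecutive subwindows share half of their samples.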

Urban Change Detection by Fully Convolutional Siamese Concatenate Network with Attention

Jan 31, 2021

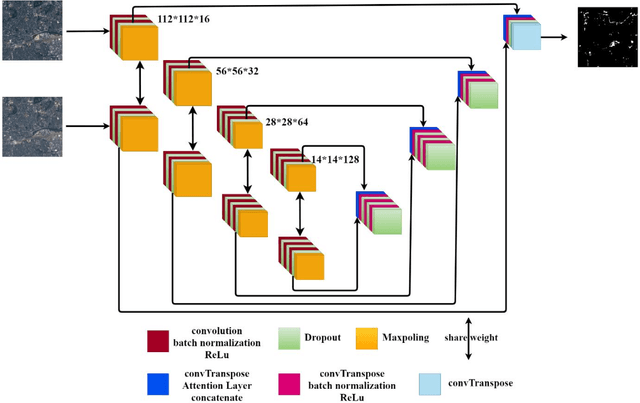

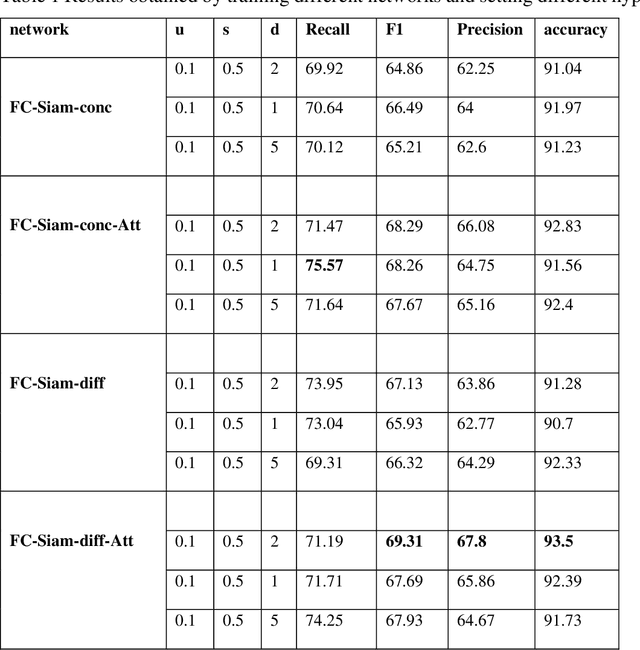

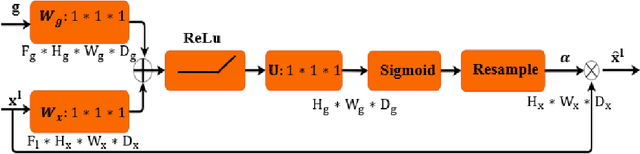

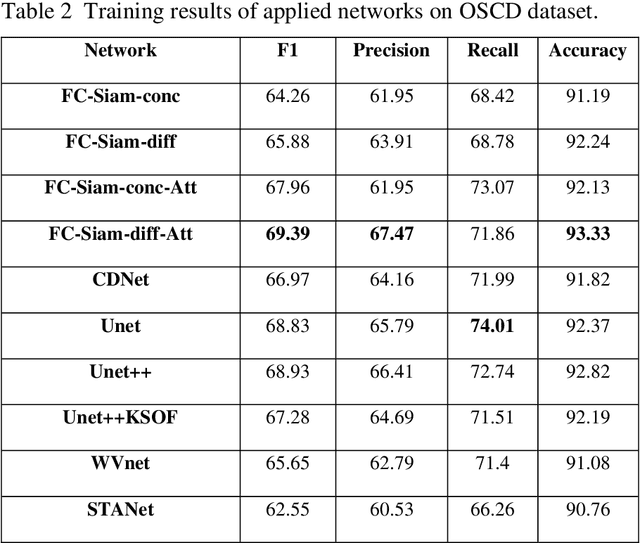

Abstract:Change detection (CD) is an important problem in remote sensing, especially for urban management during disasters. Most traditional change-detection methods are either pixel-based or object-based. Object-based models are preferred over pixel-based methods for handling very high-resolution remote sensing (VHR RS) images, and such methods can benefit from ongoing research on deep learning. In this paper, a fully automatic change-detection algorithm for VHR RS images is proposed that deploys Fully Convolutional Siamese Concatenate networks (FC-Siam-Conc). The proposed method uses preprocessing and an attention gate layer to improve accuracy. Gaussian attention (GA), a soft visual attention mechanism, is used for preprocessing; it helps the network handle feature maps in a way similar to biological visual systems. Since the GA parameters cannot be adjusted during network training, an attention gate layer is introduced to play the role of GA with parameters that are tuned along with the other network parameters. Experimental results on the Onera Satellite Change Detection (OSCD) and RIVER-CD datasets confirm the superiority of the proposed architecture over state-of-the-art algorithms.
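As a rough illustration of Gaussian attention used as a fixed preprocessing step, the sketch below weights an image with a 2-D Gaussian mask. The abstract does not give the mask's form, so the centering at the image midpoint, the choice of sigma as a quarter of each dimension, and the function names `gaussian_attention_mask` and `apply_attention` are all assumptions. The learnable attention gate layer is not reproduced here.

```python
import numpy as np

def gaussian_attention_mask(height, width, center=None, sigma=None):
    """Build a 2-D Gaussian weighting mask with peak value 1.

    Default center and sigma are assumed values, not taken from the paper.
    """
    if center is None:
        center = ((height - 1) / 2.0, (width - 1) / 2.0)
    if sigma is None:
        sigma = (height / 4.0, width / 4.0)
    ys = np.arange(height)[:, None]
    xs = np.arange(width)[None, :]
    exponent = ((ys - center[0]) / sigma[0]) ** 2 + ((xs - center[1]) / sigma[1]) ** 2
    return np.exp(-0.5 * exponent)

def apply_attention(image, mask):
    """Weight an (H, W) or (H, W, C) image by the mask; the mask stays fixed."""
    return image * mask[..., None] if image.ndim == 3 else image * mask

image = np.ones((65, 65, 3))            # synthetic stand-in for an image patch
mask = gaussian_attention_mask(65, 65)
attended = apply_attention(image, mask)
```

Because the mask parameters here are constants chosen before training, nothing in this step can adapt to the data, which is the limitation the abstract cites as motivation for the attention gate layer.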

A New Approach for Automatic Segmentation and Evaluation of Pigmentation Lesion by using Active Contour Model and Speeded Up Robust Features

Jan 18, 2021

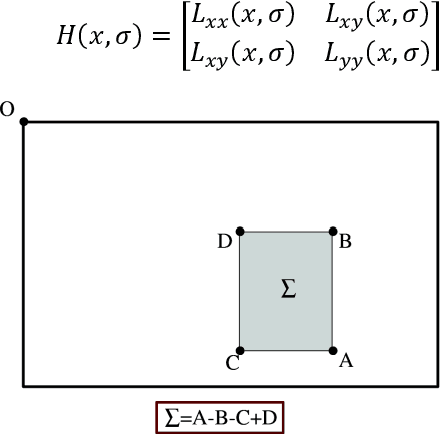

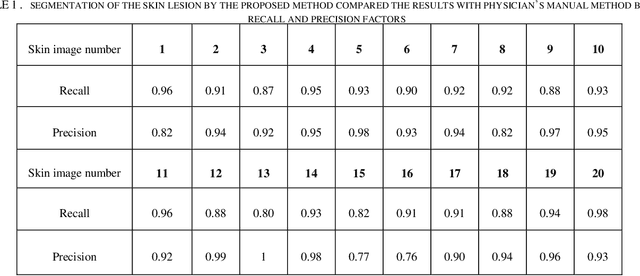

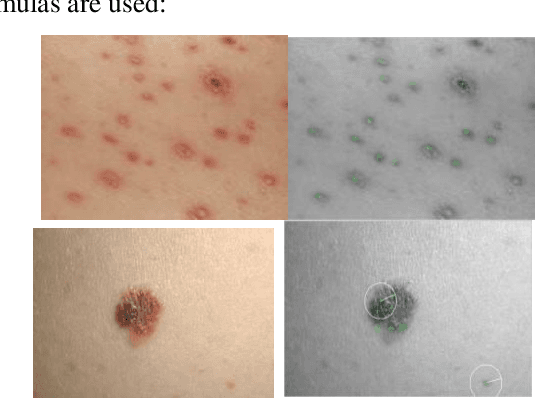

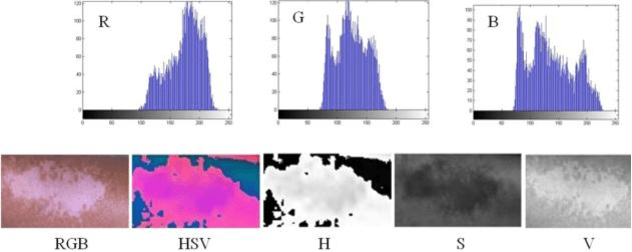

Abstract:Digital image processing techniques have wide applications in different scientific fields, including medicine. With the help of image processing algorithms, physicians have been more successful in diagnosing different diseases and have achieved much better treatment results. In this paper, we propose an automatic method for segmenting skin lesions and extracting features associated with them. To this end, a combination of Speeded-Up Robust Features (SURF) and an Active Contour Model (ACM) is used. In the suggested method, the skin lesion region is first segmented from the whole skin image, and then features such as the mean, variance, and RGB and HSV parameters are extracted from the segmented region. The segmentations produced by the proposed method and by Otsu thresholding are each compared with the physician's manual segmentation. The comparison shows that the proposed method, the combination of SURF and ACM, outperforms Otsu thresholding and gives the best result. For empirical evaluation, we applied the method to twenty different skin lesion images. The results confirm the high performance, speed, and accuracy of our method.
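The Otsu baseline and the region statistics mentioned in this abstract can be sketched in a few lines. This is a minimal NumPy illustration of Otsu thresholding followed by per-channel mean and variance over the segmented region; it is not the SURF and ACM pipeline the paper proposes, and HSV features are left out. The synthetic image, the assumption that the lesion is darker than the surrounding skin, and the names `otsu_threshold` and `region_features` are illustrative only.

```python
import numpy as np

def otsu_threshold(gray):
    """Return the 8-bit threshold that maximizes between-class variance."""
    hist = np.bincount(gray.ravel(), minlength=256).astype(float)
    prob = hist / hist.sum()
    omega = np.cumsum(prob)                  # probability of class 0 (values <= t)
    mu = np.cumsum(prob * np.arange(256))    # cumulative mean up to t
    mu_total = mu[-1]
    denom = omega * (1.0 - omega)
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b = np.where(denom > 0, (mu_total * omega - mu) ** 2 / denom, 0.0)
    return int(np.argmax(sigma_b))

def region_features(image, mask):
    """Per-channel mean and variance of the pixels selected by the mask."""
    pixels = image[mask].astype(float)       # shape (n_pixels, 3)
    return {"mean_rgb": pixels.mean(axis=0), "var_rgb": pixels.var(axis=0)}

# Synthetic example: a dark 10x10 "lesion" on lighter "skin".
image = np.empty((32, 32, 3), dtype=np.uint8)
image[:] = (200, 170, 150)
image[10:20, 10:20] = (80, 50, 40)

gray = image.mean(axis=2).astype(np.uint8)
t = otsu_threshold(gray)
lesion_mask = gray <= t                      # assumes the lesion is the darker class
features = region_features(image, lesion_mask)
```

On real photographs a single global threshold is sensitive to uneven lighting and hair, which is one plausible reason a contour-based method could compare favorably against it.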

Combining Real-Valued and Binary Gabor-Radon Features for Classification and Search in Medical Imaging Archives

Sep 27, 2017

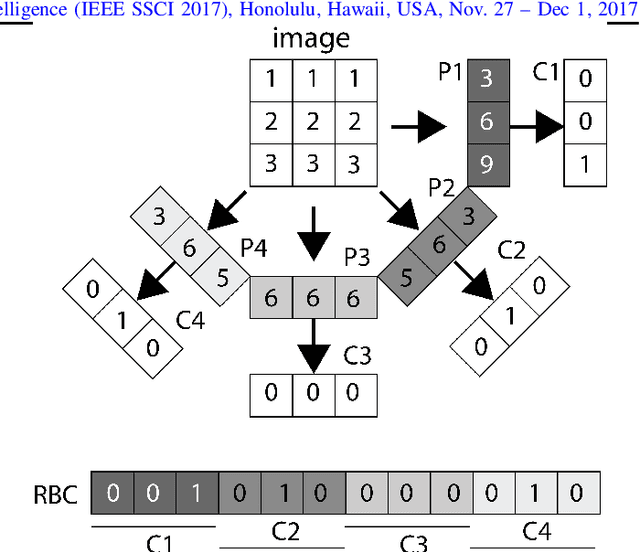

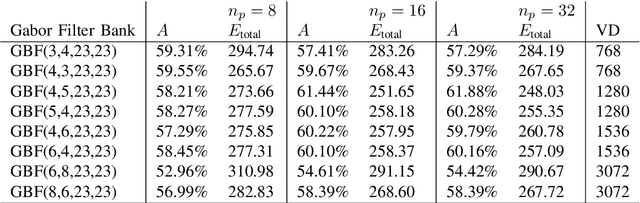

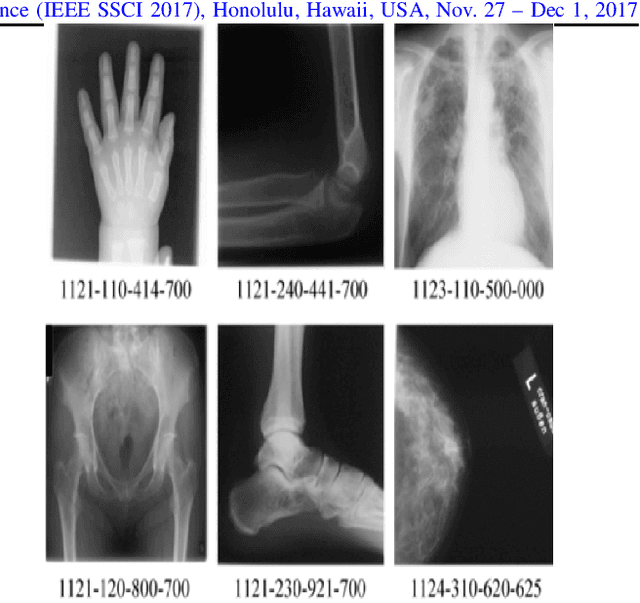

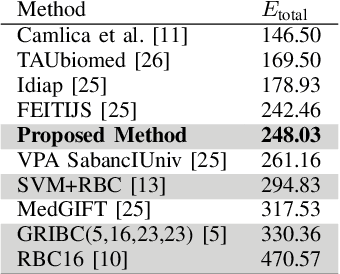

Abstract:Content-based image retrieval (CBIR) of medical images, which identifies similar images in large datasets given a query image, can be very useful for improving the diagnostic decisions of clinical experts as well as in educational scenarios. In this paper, we use a two-stage classification and retrieval approach to retrieve similar images. First, Gabor filters are applied to Radon-transformed images to extract features and train a multi-class SVM. Then, based on the classification results and using an extracted Gabor barcode, similar images are retrieved. The proposed method was tested on the IRMA dataset, which contains more than 14,000 images. Experimental results show the efficiency of our approach in retrieving similar images compared to other Gabor-Radon-oriented methods.
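The second stage of this approach, searching with a binary barcode inside the predicted class, can be sketched as follows. The abstract does not say how the Gabor barcode is derived, so binarizing at the median is an assumed rule, and the random feature vectors stand in for real Gabor-Radon features. The class labels are given directly here rather than predicted by an SVM, and the names `to_barcode` and `retrieve` are hypothetical.

```python
import numpy as np

def to_barcode(features):
    """Binarize a real-valued feature vector at its median (assumed rule)."""
    return (features > np.median(features)).astype(np.uint8)

def retrieve(query_code, query_class, codes, classes, k=3):
    """Return the k nearest barcodes by Hamming distance within one class."""
    candidates = np.flatnonzero(classes == query_class)
    dists = np.count_nonzero(codes[candidates] != query_code, axis=1)
    order = np.argsort(dists, kind="stable")[:k]
    return candidates[order], dists[order]

rng = np.random.default_rng(0)
features = rng.standard_normal((6, 16))      # stand-in feature vectors
codes = np.stack([to_barcode(f) for f in features])
classes = np.array([0, 0, 1, 1, 0, 1])       # stand-in for classifier output

idx, dist = retrieve(codes[0], 0, codes, classes, k=2)
```

Restricting the Hamming search to the predicted class keeps the comparison set small, which matters for an archive of more than 14,000 images.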
