Md. Ashraful Amin

BD Open LULC Map: High-resolution land use land cover mapping & benchmarking for urban development in Dhaka, Bangladesh

May 28, 2025

Abstract: Land Use Land Cover (LULC) mapping using deep learning significantly enhances the reliability of LULC classification, aiding in understanding geography, socioeconomic conditions, poverty levels, and urban sprawl. However, the scarcity of annotated satellite data, especially in South/East Asian developing countries, poses a major challenge due to limited funding, diverse infrastructures, and dense populations. In this work, we introduce the BD Open LULC Map (BOLM), providing pixel-wise LULC annotations across eleven classes (e.g., Farmland, Water, Forest, Urban Structure, Rural Built-Up) for Dhaka metropolitan city and its surroundings using high-resolution Bing satellite imagery (2.22 m/pixel). BOLM spans 4,392 sq km (891 million pixels), with ground truth validated through a three-stage process involving GIS experts. We benchmark LULC segmentation using DeepLab V3+ across five major classes and compare performance on Bing and Sentinel-2A imagery. BOLM aims to support reliable deep models and domain adaptation tasks, addressing critical LULC dataset gaps in South/East Asia.
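The stated coverage and pixel count can be cross-checked from the resolution. The snippet below is only a back-of-the-envelope sketch: it assumes square pixels with a uniform 2.22 m ground sampling distance, and `pixel_count` is a hypothetical helper name, not something taken from the paper.

```python
def pixel_count(area_sq_km: float, gsd_m: float) -> float:
    """Approximate number of pixels needed to cover an area.

    Assumes square pixels of side `gsd_m` metres (an assumption,
    not a detail confirmed by the abstract).
    """
    area_sq_m = area_sq_km * 1_000_000  # 1 sq km = 1,000,000 sq m
    return area_sq_m / (gsd_m ** 2)     # each pixel covers gsd_m x gsd_m


# Figures quoted in the abstract: 4,392 sq km at 2.22 m/pixel.
pixels = pixel_count(4392, 2.22)
print(f"{pixels / 1e6:.0f} million pixels")  # prints "891 million pixels"
```

Under these assumptions the result agrees with the 891 million pixels reported above.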

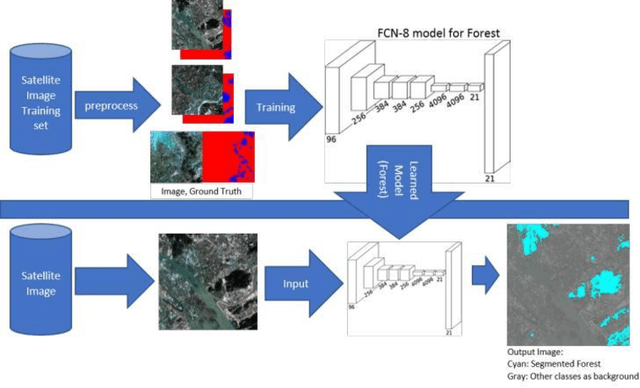

LULC Segmentation of RGB Satellite Image Using FCN-8

Aug 24, 2020

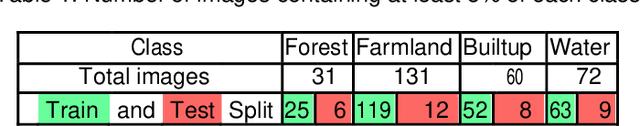

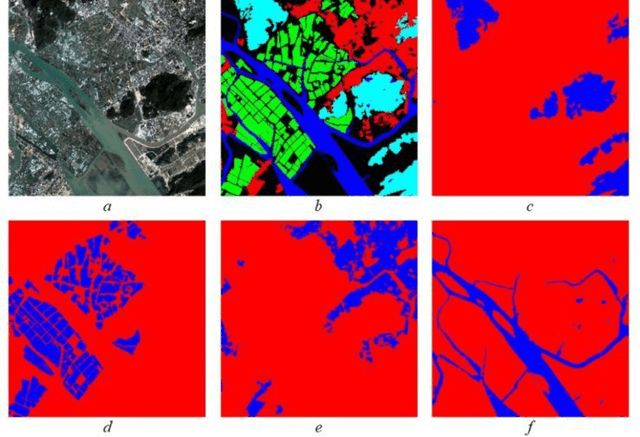

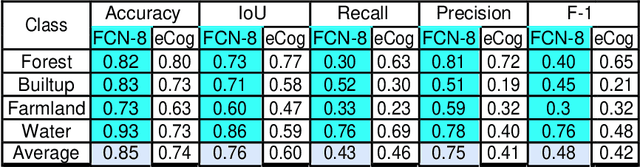

Abstract: This work presents the use of a Fully Convolutional Network (FCN-8) for semantic segmentation of high-resolution RGB earth surface satellite images into land use land cover (LULC) categories. Specifically, we propose a non-overlapping grid-based approach to train a Fully Convolutional Network (FCN-8) with VGG-16 weights to segment satellite images into four classes (forest, built-up, farmland, and water). The FCN-8 semantically projects the discriminating features learned by the encoder at lower resolution onto the pixel space at higher resolution to obtain a dense classification. We evaluated the proposed system on the Gaofen-2 image dataset, which contains 150 images of over 60 different cities in China. For comparison, we used the available ground truth along with images segmented using a widely used commercial GIS software called eCognition. With the proposed non-overlapping grid-based approach, FCN-8 obtains significantly better performance than the eCognition software. Our model achieves an average accuracy of 91.0% and an average Intersection over Union (IoU) of 0.84. In contrast, eCognition's average accuracy is 74.0% and its IoU is 0.60. This paper also reports a detailed analysis of errors occurring at the LULC boundary.
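To make the two technical ingredients of this abstract concrete, here is a minimal sketch of non-overlapping grid tiling and of a per-class mean IoU. It is not the authors' implementation: the tile size, the choice to drop edge remainders, averaging only over classes that actually occur, and the names `grid_tiles` and `mean_iou` are all assumptions made for illustration.

```python
from typing import Iterator, Sequence, Tuple


def grid_tiles(height: int, width: int, tile: int) -> Iterator[Tuple[int, int, int, int]]:
    """Yield (top, left, bottom, right) boxes of non-overlapping square tiles.

    Edge strips smaller than `tile` are dropped (an assumption; the
    abstract does not say how image borders are handled).
    """
    for top in range(0, height - tile + 1, tile):
        for left in range(0, width - tile + 1, tile):
            yield top, left, top + tile, left + tile


def mean_iou(truth: Sequence[int], pred: Sequence[int], num_classes: int) -> float:
    """Mean Intersection over Union over flattened per-pixel labels.

    Classes absent from both truth and prediction are skipped.
    """
    ious = []
    for c in range(num_classes):
        inter = sum(1 for t, p in zip(truth, pred) if t == c and p == c)
        union = sum(1 for t, p in zip(truth, pred) if t == c or p == c)
        if union:
            ious.append(inter / union)
    return sum(ious) / len(ious) if ious else 0.0


# A 5 x 4 image cut into 2 x 2 tiles gives four tiles; the last row is dropped.
tiles = list(grid_tiles(5, 4, 2))

# Toy labels: class 0 has IoU 1/2, class 1 has IoU 2/3.
score = mean_iou([0, 0, 1, 1], [0, 1, 1, 1], num_classes=2)
```

Because the tiles do not overlap, each pixel contributes to exactly one training patch, which is the property the grid-based approach relies on.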
