Matteo Sonza Reorda

Evaluating Different Fault Injection Abstractions on the Assessment of DNN SW Hardening Strategies

Dec 11, 2024

Abstract: The reliability of Neural Networks has gained significant attention, prompting efforts to develop SW-based hardening techniques for safety-critical scenarios. However, evaluating hardening techniques using application-level fault injection (FI) strategies, which are commonly hardware-agnostic, may yield misleading results. This study compares, for the first time, two FI approaches, at the application level (APP) and at the instruction level (ISA), to evaluate deep neural network SW hardening strategies. Results show that injecting permanent faults at the ISA level (a more detailed abstraction level than APP) completely changes the ranking of SW hardening techniques in terms of both reliability and accuracy. These results highlight the importance of using an adequate abstraction level when evaluating such techniques.

* 6 pages, 2 figures, 1 table, Asian Test Symposium

Reliability Assessment of Neural Networks in GPUs: A Framework For Permanent Faults Injections

May 24, 2022

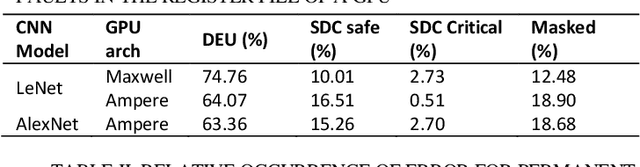

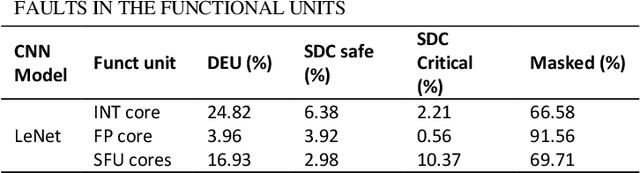

Abstract: Deep learning, and especially Convolutional Neural Networks (CNNs), has become a fundamental computational approach applied in a wide range of domains, including some safety-critical applications (e.g., automotive, robotics, and healthcare equipment). Therefore, evaluating the reliability of those computational systems is mandatory. The reliability of CNNs is evaluated through fault injection campaigns at different levels of abstraction, from the application level down to the hardware level. Many works have focused on evaluating the reliability of neural networks in the presence of transient faults. However, the effects of permanent faults have been investigated only at the application level, e.g., by targeting the parameters of the network. This paper proposes a framework that relies on a binary instrumentation tool to perform fault injection campaigns targeting different components inside the GPU, such as the register files and the functional units. This environment allows, for the first time, assessing the reliability of CNNs deployed on a GPU in the presence of permanent faults.
