Manu Mathew Thomas

CGVQM+D: Computer Graphics Video Quality Metric and Dataset

Jun 13, 2025

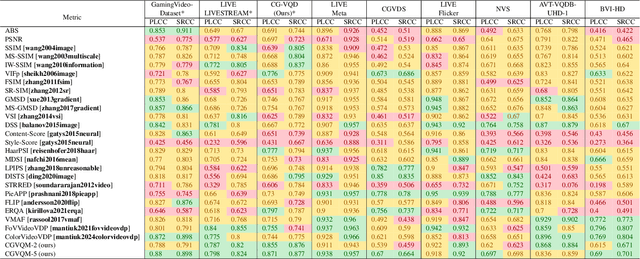

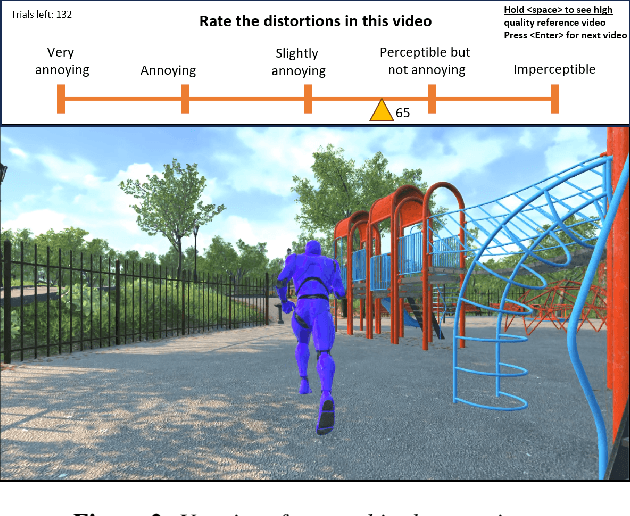

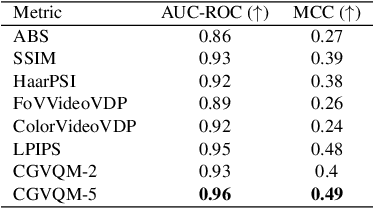

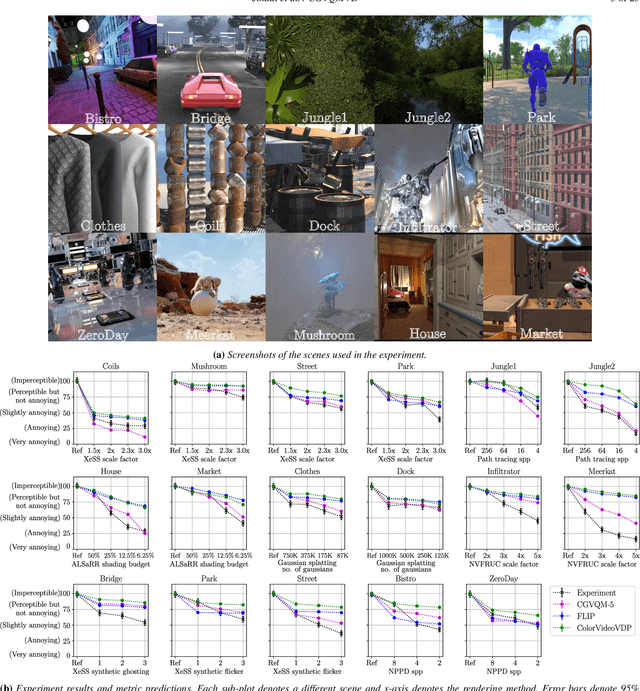

Abstract: While existing video and image quality datasets have extensively studied natural videos and traditional distortions, the perception of synthetic content and modern rendering artifacts remains underexplored. We present a novel video quality dataset focused on distortions introduced by advanced rendering techniques, including neural supersampling, novel-view synthesis, path tracing, neural denoising, frame interpolation, and variable rate shading. Our evaluations show that existing full-reference quality metrics perform sub-optimally on these distortions, with a maximum Pearson correlation of 0.78. Additionally, we find that the feature space of pre-trained 3D CNNs aligns strongly with human perception of visual quality. We propose CGVQM, a full-reference video quality metric that significantly outperforms existing metrics while generating both per-pixel error maps and global quality scores. Our dataset and metric implementation are available at https://github.com/IntelLabs/CGVQM.
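The abstract reports metric performance as a Pearson correlation with human judgments. As a minimal sketch of how such a figure is typically computed, the snippet below correlates a metric's per-video scores with subjective ratings. The function name `pearson` and the two score lists are hypothetical illustrations, not data or code from the CGVQM repository, and the abstract does not say whether any fitted mapping was applied to the metric outputs before correlating.

```python
import math


def pearson(xs, ys):
    """Pearson linear correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of co-deviations and sums of squared deviations from the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical values for illustration only (not taken from the dataset):
# predicted error per video (higher = worse) and human quality ratings
# for the same videos (higher = better).
metric_scores = [0.10, 0.35, 0.20, 0.80, 0.55]
human_scores = [85.0, 60.0, 70.0, 20.0, 45.0]

r = pearson(metric_scores, human_scores)
print(f"Pearson correlation: {r:.3f}")
```

Because the hypothetical metric here measures error while the ratings measure quality, the coefficient comes out negative; in that situation the magnitude of the correlation is what gets compared across metrics.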
