Maksims Ivanovs

AI-Augmented Pollen Recognition in Optical and Holographic Microscopy for Veterinary Imaging

Dec 13, 2025

Abstract: We present a comprehensive study on fully automated pollen recognition across both conventional optical and digital in-line holographic microscopy (DIHM) images of sample slides. Visually recognizing pollen in unreconstructed holographic images remains challenging due to speckle noise, twin-image artifacts, and substantial divergence from bright-field appearance. We establish a performance baseline by training YOLOv8s for object detection and MobileNetV3L for classification on a dual-modality dataset of automatically annotated optical images and affinely aligned DIHM images. On optical data, detection mAP50 reaches 91.3% and classification accuracy reaches 97%, whereas on DIHM data we achieve only 8.15% detection mAP50 and 50% classification accuracy. Expanding the pollen bounding boxes in DIHM images beyond those obtained from the aligned optical images raises detection mAP50 to 13.3% and classification accuracy to 54%. To improve object detection in DIHM images, we employ a Wasserstein GAN with spectral normalization (WGAN-SN) to create synthetic DIHM images, yielding an FID score of 58.246. Mixing real-world and synthetic DIHM data at a 1.0 : 1.5 ratio improves object detection to as much as 15.4%. These results demonstrate that GAN-based augmentation can reduce the performance divide, bringing fully automated DIHM workflows for veterinary imaging a small but important step closer to practice.
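The 1.0 : 1.5 mixing of real and synthetic DIHM images described in the abstract can be illustrated with a minimal sketch. It is not the authors' pipeline: the function name `mix_real_and_synthetic`, the file names, and the choice to sample synthetic images without replacement are all assumptions made for illustration.

```python
import random


def mix_real_and_synthetic(real_paths, synthetic_paths, synthetic_per_real=1.5, seed=0):
    """Build a shuffled training list with `synthetic_per_real` synthetic
    images for every real image (hypothetical helper, not from the paper)."""
    rng = random.Random(seed)
    # Number of synthetic images needed to hit the requested ratio.
    n_synthetic = round(len(real_paths) * synthetic_per_real)
    if n_synthetic > len(synthetic_paths):
        raise ValueError("not enough synthetic images for the requested ratio")
    # Sample without replacement so that no synthetic image is repeated.
    chosen = rng.sample(list(synthetic_paths), n_synthetic)
    mixed = list(real_paths) + chosen
    rng.shuffle(mixed)
    return mixed


# Hypothetical file names, used only to show the call.
real = [f"real_{i}.png" for i in range(4)]
synthetic = [f"gan_{i}.png" for i in range(10)]
train_list = mix_real_and_synthetic(real, synthetic)
```

With 4 real images, this selects 6 synthetic ones, giving a training list of 10 images in the 1.0 : 1.5 proportion.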

Automated Pollen Recognition in Optical and Holographic Microscopy Images

Dec 09, 2025

Abstract: This study explores the application of deep learning to improve and automate pollen grain detection and classification in both optical and holographic microscopy images, with a particular focus on veterinary cytology use cases. We used YOLOv8s for object detection and MobileNetV3L for classification, evaluating their performance across imaging modalities. The models achieved 91.3% mAP50 for detection and 97% overall accuracy for classification on optical images, whereas the initial performance on greyscale holographic images was substantially lower. We addressed this performance gap through dataset expansion using automated labeling and bounding box area enlargement. These techniques, applied to holographic images, improved detection performance from 2.49% to 13.3% mAP50 and classification performance from 42% to 54%. Our work demonstrates that, at least for image classification tasks, it is possible to pair deep learning techniques with cost-effective lensless digital holographic microscopy devices.

* 8 pages, 10 figures, 4 tables, 20 references. Date of Conference: 13-14 June 2025. Date Added to IEEE Xplore: 10 July 2025. Electronic ISBN: 979-8-3315-0969-9. Print on Demand (PoD) ISBN: 979-8-3315-0970-5. DOI: 10.1109/AICCONF64766.2025.11064260. Conference Location: Prague, Czech Republic. Online Access: https://ieeexplore.ieee.org/document/11064260
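The bounding box area enlargement mentioned in the abstract above can be sketched as follows. This is an illustrative assumption rather than the authors' code: it presumes labels in the normalized YOLO format `(x_center, y_center, width, height)`, and both the helper name `enlarge_yolo_box` and the scale factor of 1.5 are hypothetical, since the abstract does not state how much the boxes were enlarged.

```python
def enlarge_yolo_box(box, scale=1.5):
    """Enlarge a normalized YOLO box about its center and clip it to the image.

    `box` is (x_center, y_center, width, height), all in [0, 1].
    `scale` is a hypothetical enlargement factor, not a value from the paper.
    """
    xc, yc, w, h = box
    new_w, new_h = w * scale, h * scale
    # Convert to corner coordinates and clip to the image borders.
    x_min = max(0.0, xc - new_w / 2)
    y_min = max(0.0, yc - new_h / 2)
    x_max = min(1.0, xc + new_w / 2)
    y_max = min(1.0, yc + new_h / 2)
    # Convert back to center format; the center shifts if clipping occurred.
    return (
        (x_min + x_max) / 2,
        (y_min + y_max) / 2,
        x_max - x_min,
        y_max - y_min,
    )


# A centered box grows symmetrically; a box near the border is clipped.
centered = enlarge_yolo_box((0.5, 0.5, 0.2, 0.2))
near_edge = enlarge_yolo_box((0.05, 0.5, 0.1, 0.1), scale=2.0)
```

Clipping in corner coordinates before converting back keeps every enlarged box inside the image, which normalized YOLO labels require.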

Automated Quality Assessment of Hand Washing Using Deep Learning

Dec 01, 2020

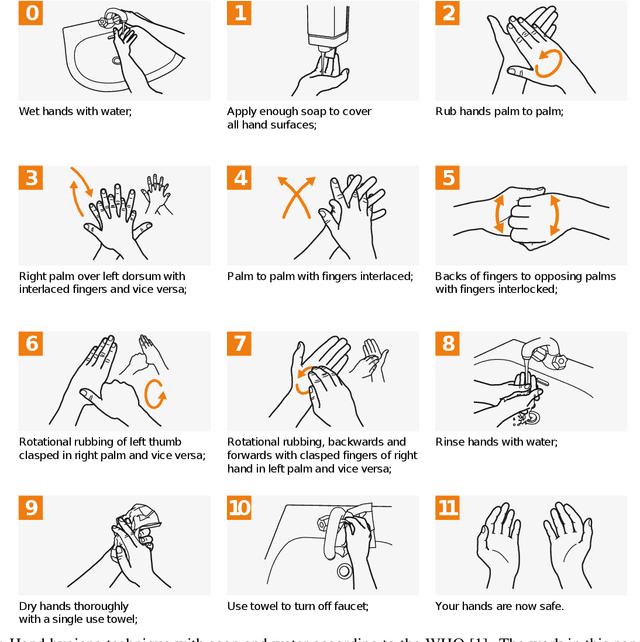

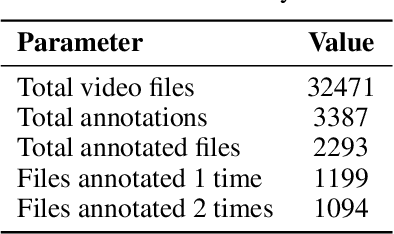

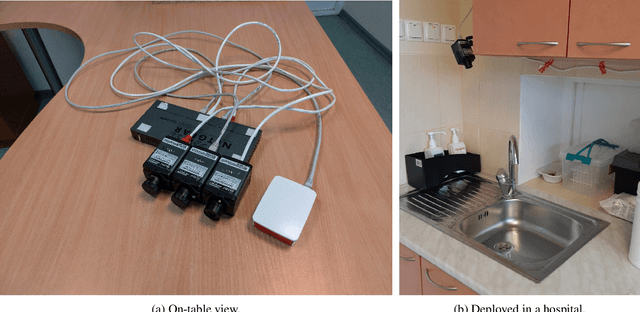

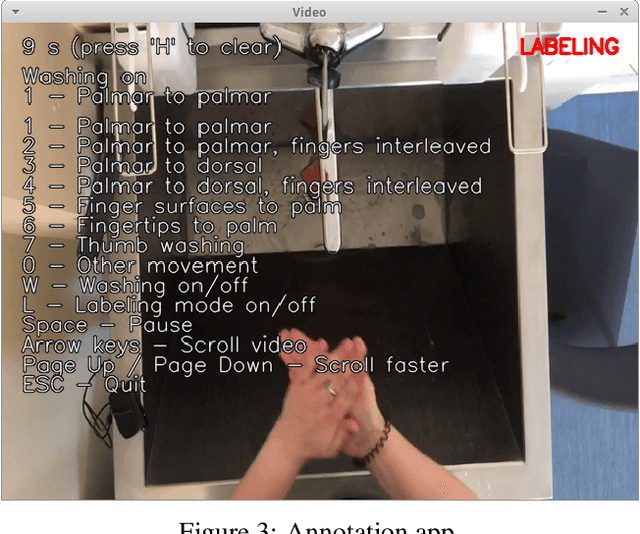

Abstract: Washing hands is one of the most important ways to prevent infectious diseases, including COVID-19. Unfortunately, medical staff do not always follow the World Health Organization (WHO) hand washing guidelines in their everyday work. To address this, we present neural networks for automatically recognizing the different washing movements defined by the WHO. We train the neural networks on part of a large (2000+ videos) real-world dataset labeled with the different washing movements. The preliminary results show that, using pre-trained neural network models such as MobileNetV2 and Xception, it is possible to achieve >64% accuracy in recognizing the different washing movements. We also describe the collection and structure of this open-access dataset, which was created as part of this work. Finally, we describe how the neural networks can be used to construct a mobile phone application for automatic quality control and real-time feedback for medical professionals.
