Maitreya Sonawane

Token-free Models for Sarcasm Detection

May 02, 2025

Abstract: Tokenization is a foundational step in most natural language processing (NLP) pipelines, yet it introduces challenges such as vocabulary mismatch and out-of-vocabulary issues. Recent work has shown that models operating directly on raw text at the byte or character level can mitigate these limitations. In this paper, we evaluate two token-free models, ByT5 and CANINE, on the task of sarcasm detection in both social media (Twitter) and non-social media (news headlines) domains. We fine-tune and benchmark these models against token-based baselines and state-of-the-art approaches. Our results show that ByT5-small and CANINE outperform token-based counterparts and achieve new state-of-the-art performance, improving accuracy by 0.77% and 0.49% on the News Headlines and Twitter Sarcasm datasets, respectively. These findings underscore the potential of token-free models for robust NLP in noisy and informal domains such as social media.
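To make "operating directly on raw text at the byte or character level" concrete, the sketch below maps a string to integer IDs without any learned vocabulary. It is an illustration only, not the paper's preprocessing code: the function names are hypothetical, and the byte offset of 3 is an assumption that mirrors the three reserved special IDs in ByT5's published tokenizer.

```python
def byte_ids(text: str, offset: int = 3) -> list[int]:
    """Byte-level IDs in the style of ByT5: one ID per UTF-8 byte.

    The offset (assumed to be 3 here) leaves room for special
    tokens such as padding and end-of-sequence.
    """
    return [b + offset for b in text.encode("utf-8")]


def codepoint_ids(text: str) -> list[int]:
    """Character-level IDs in the style of CANINE: one ID per Unicode code point."""
    return [ord(ch) for ch in text]


if __name__ == "__main__":
    sample = "sure, great idea 🙃"
    # Misspellings, hashtags, and emoji never fall out of vocabulary:
    # every string maps to a valid ID sequence.
    print(len(byte_ids(sample)), len(codepoint_ids(sample)))
```

Because an emoji occupies several UTF-8 bytes but a single code point, byte-level sequences are at least as long as character-level ones for the same text, which is one practical trade-off between the two model families.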

UNet with Axial Transformer : A Neural Weather Model for Precipitation Nowcasting

Apr 28, 2025

Abstract: Making accurate weather predictions can be particularly challenging for localized storms or events that evolve on hourly timescales, such as thunderstorms. Our goal for this project was therefore to model weather nowcasting: making highly localized, accurate predictions for the immediate future, and replacing current numerical weather models and data assimilation systems with deep learning approaches. A significant advantage of machine learning is that inference is computationally cheap given an already-trained model, allowing forecasts that are nearly instantaneous and in the native high resolution of the input data. In this work we developed a novel method that employs Transformer-based machine learning models to forecast precipitation. This approach works by leveraging axial attention mechanisms to learn complex patterns and dynamics from time series frames. Moreover, it is a generic framework and can be applied to univariate and multivariate time series data, as well as time series embeddings data. This paper presents initial research on the dataset used, in the domain of next-frame prediction, and we demonstrate state-of-the-art results on the metrics used for that dataset (PSNR = 47.67, SSIM = 0.9943) using UNet with Axial Transformer.
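As a rough illustration of the axial attention idea mentioned in the abstract, the NumPy sketch below attends along the width of each row and then along the height of each column, instead of over all pixels at once. It is a simplified sketch under stated assumptions, not the paper's architecture: queries, keys, and values are all the raw input (no learned projections, heads, or positional encodings), and the function names are hypothetical.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def attend_sequence(x: np.ndarray) -> np.ndarray:
    """Scaled dot-product self-attention over axis -2 of x, shape (..., L, C).

    Assumption: Q = K = V = x, i.e. no learned projection matrices.
    """
    scores = x @ np.swapaxes(x, -1, -2) / np.sqrt(x.shape[-1])  # (..., L, L)
    return softmax(scores, axis=-1) @ x  # (..., L, C)


def axial_attention(frame: np.ndarray) -> np.ndarray:
    """Apply attention along the width axis, then the height axis.

    frame has shape (H, W, C); the output has the same shape.
    """
    # Each of the H rows is treated as a sequence of W positions.
    rows = attend_sequence(frame)
    # Swap to (W, H, C) so each column becomes a sequence of H positions.
    cols = attend_sequence(np.swapaxes(rows, 0, 1))
    return np.swapaxes(cols, 0, 1)


if __name__ == "__main__":
    frame = np.random.default_rng(0).normal(size=(8, 8, 4))
    print(axial_attention(frame).shape)
```

The motivation for factorizing this way is cost: full self-attention over an H × W frame compares every pixel with every other pixel, on the order of (HW)² score entries, whereas the two axial passes need on the order of HW(H + W).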

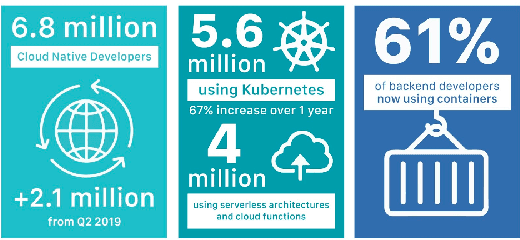

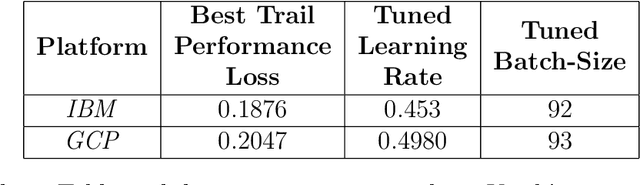

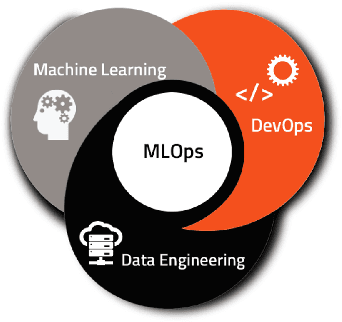

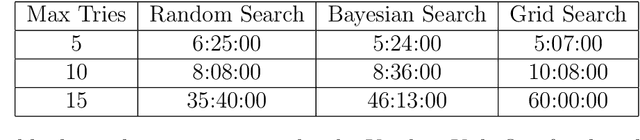

Deployment of ML Models using Kubeflow on Different Cloud Providers

Jun 27, 2022

Abstract: This project explores the process of deploying machine learning models on Kubernetes using Kubeflow [1], an open-source, end-to-end ML stack orchestration toolkit. We build end-to-end machine learning models on Kubeflow in the form of pipelines and analyze the tool's ease of setup, deployment models, performance, limitations, and features. We hope that our project acts almost like a seminar/introductory report that can help vanilla cloud/Kubernetes users with zero knowledge of Kubeflow use it to deploy ML models. From setup on different clouds to serving our trained model over the internet, we give details and metrics on the performance of Kubeflow.
