Mahdi Torabzadehkashi

HyperTune: Dynamic Hyperparameter Tuning For Efficient Distribution of DNN Training Over Heterogeneous Systems

Jul 16, 2020

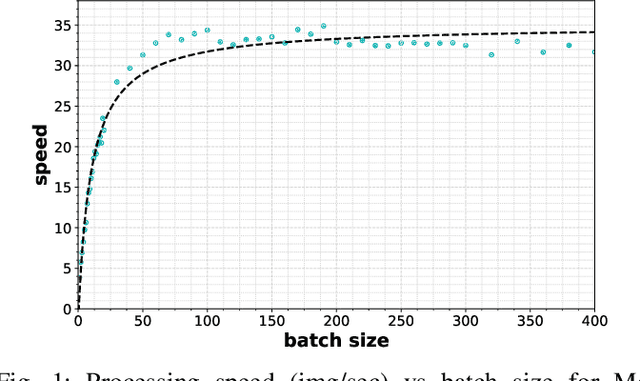

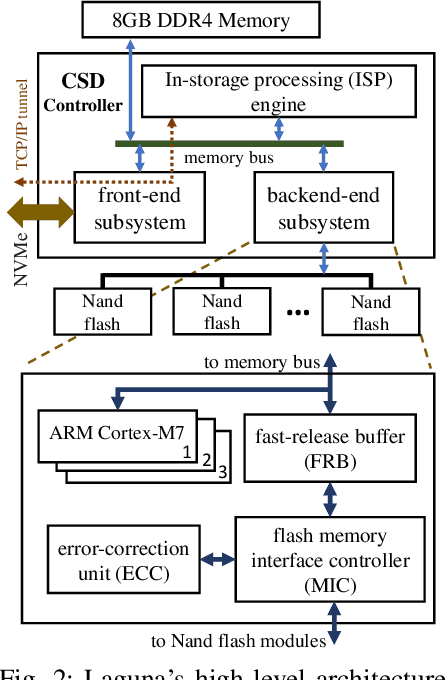

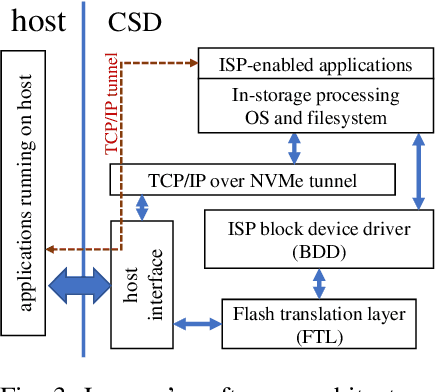

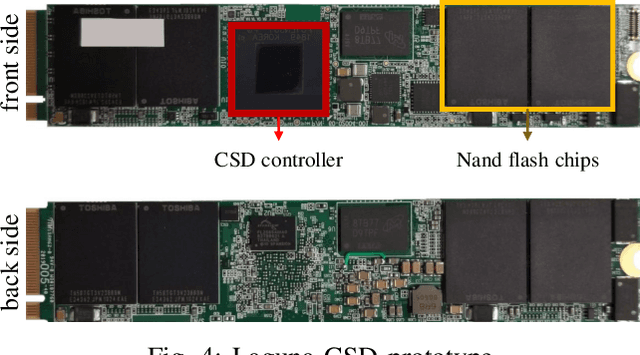

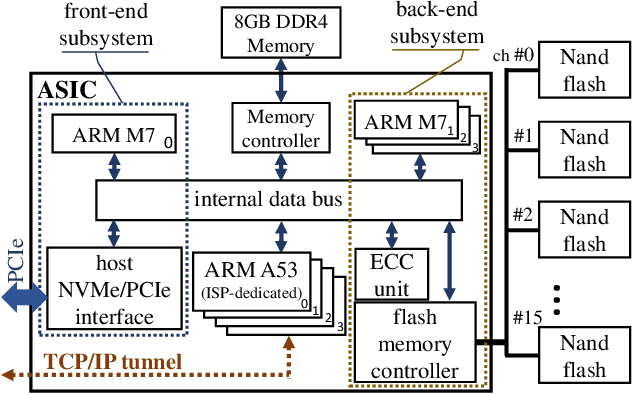

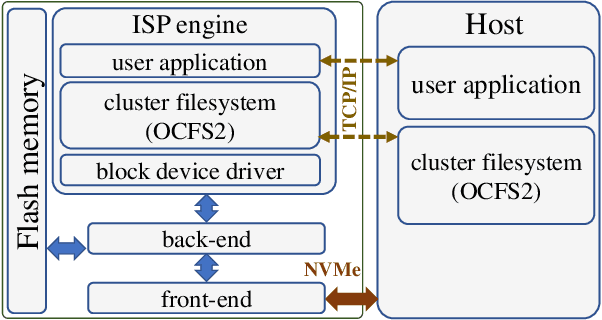

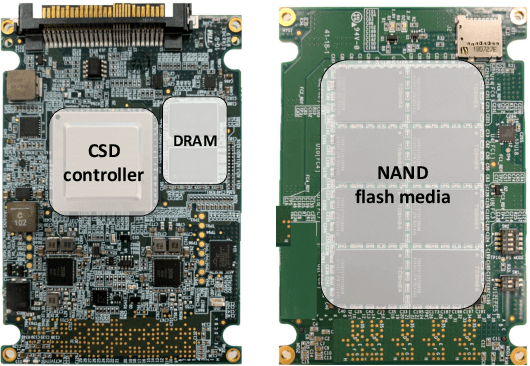

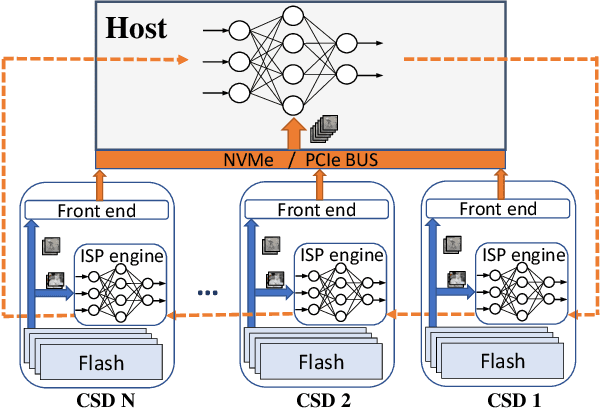

Abstract: Distributed training is a novel approach to accelerating Deep Neural Network (DNN) training, but common training libraries fall short of addressing distributed cases with heterogeneous processors or cases where the processing nodes are interrupted by other workloads. This paper describes distributed training of DNNs on computational storage devices (CSDs), which are NAND flash-based, high-capacity data storage devices with internal processing engines. A CSD-based distributed architecture incorporates the advantages of federated learning in terms of performance scalability, resiliency, and data privacy by eliminating unnecessary data movement between the storage device and the host processor. The paper also describes Stannis, a DNN training framework that addresses the shortcomings of existing distributed training frameworks by dynamically tuning the training hyperparameters in heterogeneous systems to maintain the maximum overall processing speed, in terms of processed images per second, and energy efficiency. Experimental results on image classification training benchmarks show up to a 3.1x improvement in performance and a 2.45x reduction in energy consumption when using Stannis with CSDs compared to generic systems.

STANNIS: Low-Power Acceleration of Deep Neural Network Training Using Computational Storage

Feb 19, 2020

Abstract: This paper proposes a framework for distributed, in-storage training of neural networks on clusters of computational storage devices. Such devices not only contain hardware accelerators but also eliminate data movement between the host and storage, resulting in both improved performance and power savings. More importantly, this in-storage processing style of training ensures that private data never leaves the storage while fully controlling the sharing of public data. Experimental results show up to 2.7x speedup and 69% reduction in energy consumption and no significant loss in accuracy.
