Luiz Manella Pereira

Optimal Transport-based Domain Alignment as a Preprocessing Step for Federated Learning

Jun 04, 2025

Abstract:Federated learning (FL) is a subfield of machine learning that avoids sharing local data with a central server, which can enhance privacy and scalability. The inability to consolidate data leads to a unique problem called dataset imbalance, where agents in a network do not have equal representation of the labels one is trying to learn to predict. In FL, fusing locally-trained models with unbalanced datasets may deteriorate the performance of global model aggregation, and reduce the quality of updated local models and the accuracy of the distributed agents' decisions. In this work, we introduce an Optimal Transport-based preprocessing algorithm that aligns the datasets by minimizing the distributional discrepancy of data along the edge devices. We accomplish this by leveraging Wasserstein barycenters when computing channel-wise averages. These barycenters are collected in a trusted central server where they collectively generate a target RGB space. By projecting our dataset towards this target space, we minimize the distributional discrepancy on a global level, which facilitates the learning process due to a minimization of variance across the samples. We demonstrate the capabilities of the proposed approach over the CIFAR-10 dataset, where we show its capability of reaching higher degrees of generalization in fewer communication rounds.
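To make the idea of a channel-wise Wasserstein barycenter concrete, here is a minimal sketch for the one-dimensional case. It is not the paper's algorithm: it relies on the known fact that the 2-Wasserstein barycenter of 1-D empirical distributions with equal sample counts can be obtained by averaging their sorted samples (quantile averaging). The function name `barycenter_1d` and the assumption that every client holds the same number of intensity values for a single colour channel are hypothetical, chosen only for illustration.

```python
def barycenter_1d(samples_per_client, weights=None):
    """2-Wasserstein barycenter of 1-D empirical distributions.

    Assumption (illustrative only): every client contributes the same
    number of scalar samples, e.g. pixel intensities of one channel.
    """
    n = len(samples_per_client[0])
    if any(len(s) != n for s in samples_per_client):
        raise ValueError("all clients must hold the same number of samples")

    k = len(samples_per_client)
    if weights is None:
        weights = [1.0 / k] * k  # uniform weighting across clients

    # In 1-D, optimal transport matches sorted samples to each other,
    # so the barycenter is the weighted average of the order statistics.
    sorted_samples = [sorted(s) for s in samples_per_client]
    return [
        sum(w * s[i] for w, s in zip(weights, sorted_samples))
        for i in range(n)
    ]


# Two hypothetical clients, one channel, two intensity values each.
red_barycenter = barycenter_1d([[0.0, 2.0], [4.0, 2.0]])
```

Each client's samples could then be mapped onto the barycenter by sending its i-th smallest value to the barycenter's i-th value, which is one simple way to read "projecting towards the target space" in one dimension.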

Towards Characterizing Cyber Networks with Large Language Models

Nov 11, 2024

Abstract:Threat hunting analyzes large, noisy, high-dimensional data to find sparse adversarial behavior. We believe adversarial activities, however they are disguised, are extremely difficult to completely obscure in high-dimensional space. In this paper, we employ these latent features of cyber data to find anomalies via a prototype tool called Cyber Log Embeddings Model (CLEM). CLEM was trained on Zeek network traffic logs from both a real-world production network and from an Internet of Things (IoT) cybersecurity testbed. The model is deliberately overtrained on a sliding window of data to characterize each window closely. We use the Adjusted Rand Index (ARI) to compare the k-means clustering of CLEM output to expert labeling of the embeddings. Our approach demonstrates that there is promise in using natural language modeling to understand cyber data.
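For readers unfamiliar with the Adjusted Rand Index, the following is a small self-contained sketch of the standard pair-counting formula. It is not CLEM's evaluation code; the function name `adjusted_rand_index` and the toy label lists are assumptions made for illustration. The score is 1.0 when two labelings induce the same partition (regardless of label names) and is close to 0 for agreement at chance level.

```python
from collections import Counter
from math import comb


def adjusted_rand_index(labels_a, labels_b):
    """Adjusted Rand Index between two labelings of the same items."""
    if len(labels_a) != len(labels_b):
        raise ValueError("labelings must have the same length")
    n = len(labels_a)
    if n < 2:
        return 1.0  # no pairs to disagree on

    # Pairs of items placed together in both labelings.
    together_both = sum(
        comb(c, 2) for c in Counter(zip(labels_a, labels_b)).values()
    )
    # Pairs placed together in each labeling separately.
    together_a = sum(comb(c, 2) for c in Counter(labels_a).values())
    together_b = sum(comb(c, 2) for c in Counter(labels_b).values())

    expected = together_a * together_b / comb(n, 2)  # chance agreement
    max_index = (together_a + together_b) / 2
    if max_index == expected:
        return 1.0  # degenerate case, e.g. a single cluster in both
    return (together_both - expected) / (max_index - expected)


# Hypothetical example: cluster ids vs. analyst labels for four items.
same_partition = adjusted_rand_index([0, 0, 1, 1], ["b", "b", "a", "a"])
```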

A Survey on Optimal Transport for Machine Learning: Theory and Applications

Jun 03, 2021

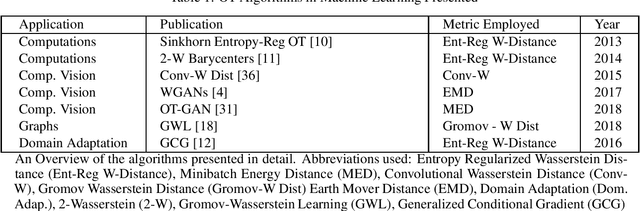

Abstract:Optimal Transport (OT) theory has seen an increasing amount of attention from the computer science community due to its potency and relevance in modeling and machine learning. It introduces means that serve as powerful ways to compare probability distributions with each other, as well as producing optimal mappings to minimize cost functions. In this survey, we present a brief introduction and history, a survey of previous work and propose directions of future study. We will begin by looking at the history of optimal transport and introducing the founders of this field. We then give a brief glance into the algorithms related to OT. Then, we will follow up with a mathematical formulation and the prerequisites to understand OT. These include Kantorovich duality, entropic regularization, KL Divergence, and Wasserstein barycenters. Since OT is a computationally expensive problem, we then introduce the entropy-regularized version of computing optimal mappings, which allowed OT problems to become applicable in a wide range of machine learning problems. In fact, the methods generated from OT theory are competitive with the current state-of-the-art methods. We follow this up by breaking down research papers that focus on image processing, graph learning, neural architecture search, document representation, and domain adaptation. We close the paper with a small section on future research. Of the recommendations presented, three main problems are fundamental to allow OT to become widely applicable but rely strongly on its mathematical formulation and thus are hardest to answer. Since OT is a novel method, there is plenty of space for new research, and with more and more competitive methods (either on an accuracy level or computational speed level) being created, the future of applied optimal transport is bright as it has become pervasive in machine learning.
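The entropy-regularized formulation mentioned in the abstract is commonly solved with Sinkhorn iterations. The sketch below is a plain-Python illustration of that general technique, not code from the survey; the function name `sinkhorn`, the regularization value `reg=0.1`, the iteration count, and the toy marginals and cost matrix are all assumptions chosen for readability.

```python
import math


def sinkhorn(a, b, cost, reg=0.1, n_iters=500):
    """Approximate entropy-regularized OT plan between histograms a and b.

    a, b : lists of non-negative weights, each summing to 1
    cost : len(a) x len(b) matrix (list of lists) of transport costs
    reg  : entropic regularization strength (smaller = closer to exact OT)
    """
    rows, cols = len(a), len(b)
    # Gibbs kernel K_ij = exp(-C_ij / reg).
    kernel = [[math.exp(-cost[i][j] / reg) for j in range(cols)]
              for i in range(rows)]
    u = [1.0] * rows
    v = [1.0] * cols
    for _ in range(n_iters):
        # Alternately rescale so row sums match a, then column sums match b.
        u = [a[i] / sum(kernel[i][j] * v[j] for j in range(cols))
             for i in range(rows)]
        v = [b[j] / sum(kernel[i][j] * u[i] for i in range(rows))
             for j in range(cols)]
    # Transport plan P = diag(u) K diag(v).
    return [[u[i] * kernel[i][j] * v[j] for j in range(cols)]
            for i in range(rows)]


# Toy problem: two bins on each side, moving mass across bins costs 1.
plan = sinkhorn([0.5, 0.5], [0.5, 0.5], [[0.0, 1.0], [1.0, 0.0]])
```

For very small `reg` the kernel entries can underflow to zero; practical implementations usually work in the log domain to avoid this, which this sketch omits for brevity.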
