Leonardo Militano

ROSBag MCP Server: Analyzing Robot Data with LLMs for Agentic Embodied AI Applications

Nov 05, 2025

Abstract: Agentic AI systems and Physical or Embodied AI systems have been two key research verticals at the forefront of Artificial Intelligence and Robotics, with the Model Context Protocol (MCP) increasingly becoming a key component and enabler of agentic applications. However, the literature at the intersection of these verticals, i.e., Agentic Embodied AI, remains scarce. This paper introduces an MCP server for analyzing ROS and ROS 2 bags, which allows robot data to be analyzed, visualized and processed with natural language through LLMs and VLMs. We describe specific tooling built with robotics domain knowledge, with our initial release focused on mobile robotics and natively supporting the analysis of trajectories, laser scan data, transforms, or time series data. This is in addition to providing an interface to standard ROS 2 CLI tools ("ros2 bag list" or "ros2 bag info"), as well as the ability to filter bags to a subset of topics or trim them in time. Coupled with the MCP server, we provide a lightweight UI that allows the benchmarking of the tooling with different LLMs, both proprietary (Anthropic, OpenAI) and open-source (through Groq). Our experimental results include the analysis of the tool calling capabilities of eight different state-of-the-art LLMs and VLMs, both proprietary and open-source, large and small. Our experiments indicate that there is a large divide in tool calling capabilities, with Kimi K2 and Claude Sonnet 4 demonstrating clearly superior performance. We also conclude that there are multiple factors affecting the success rates, from the tool description schema to the number of arguments, as well as the number of tools available to the models. The code is available under a permissive license at https://github.com/binabik-ai/mcp-rosbags.

Towards Embodied Agentic AI: Review and Classification of LLM- and VLM-Driven Robot Autonomy and Interaction

Aug 07, 2025

Abstract: Foundation models, including large language models (LLMs) and vision-language models (VLMs), have recently enabled novel approaches to robot autonomy and human-robot interfaces. In parallel, vision-language-action models (VLAs) or large behavior models (LBMs) are increasing the dexterity and capabilities of robotic systems. This survey paper focuses on those works advancing towards agentic applications and architectures. This includes initial efforts exploring GPT-style interfaces to tooling, as well as more complex systems where AI agents are coordinators, planners, perception actors, or generalist interfaces. Such agentic architectures allow robots to reason over natural language instructions, invoke APIs, plan task sequences, or assist in operations and diagnostics. In addition to peer-reviewed research, due to the fast-evolving nature of the field, we highlight and include community-driven projects, ROS packages, and industrial frameworks that show emerging trends. We propose a taxonomy for classifying model integration approaches and present a comparative analysis of the role that agents play in different solutions in today's literature.

Efficient delivery of Robotics Programming educational content using Cloud Robotics

Oct 19, 2022

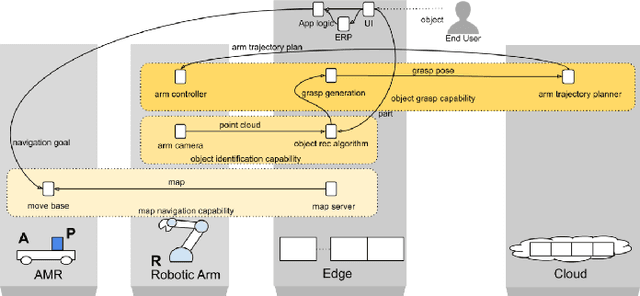

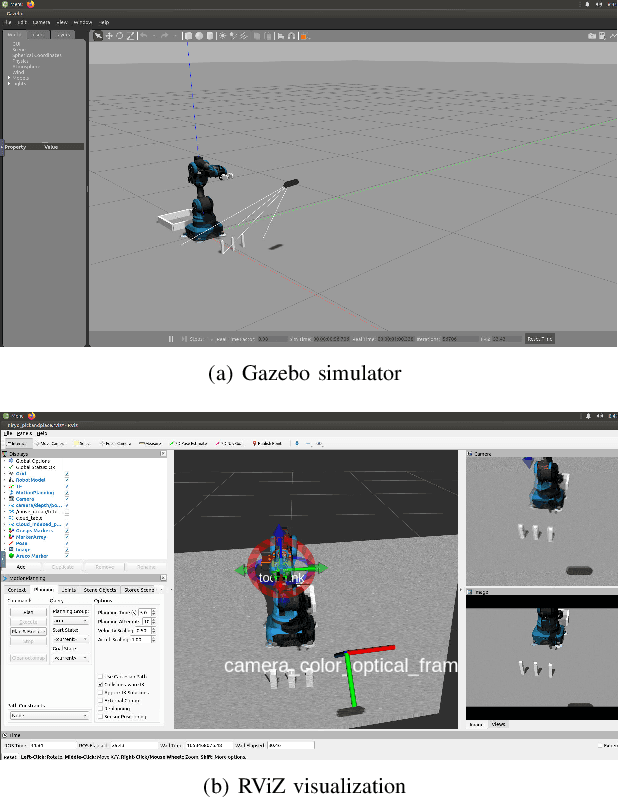

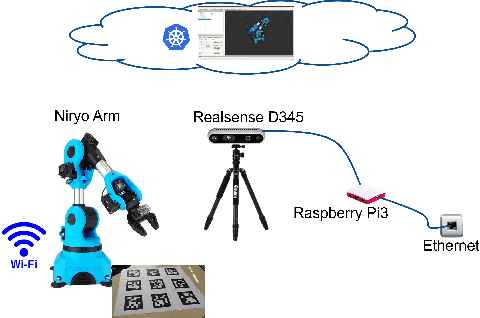

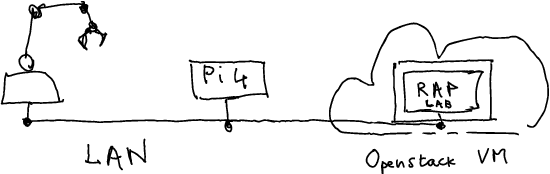

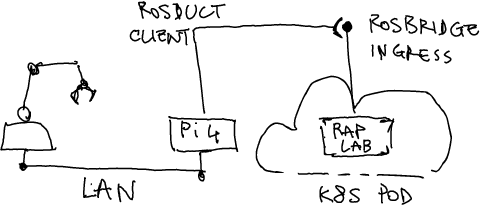

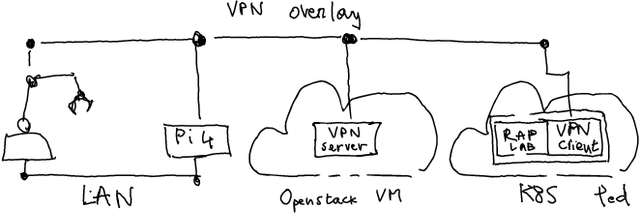

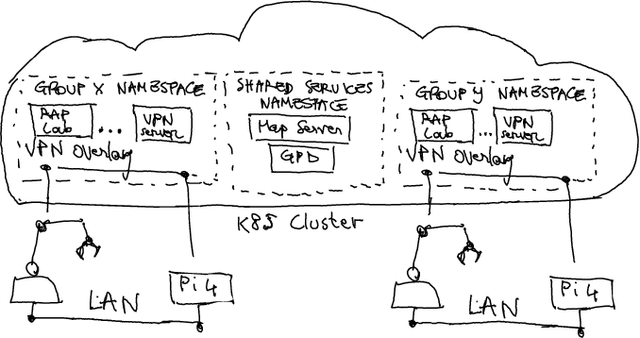

Abstract: In this paper, we report on our use of cloud-robotics solutions to teach a Robotics Applications Programming course at Zurich University of Applied Sciences (ZHAW). The use of a Kubernetes-based cloud computing environment combined with real robots (TurtleBots and Niryo arms) allowed us to: 1) minimize the setup time required to provide a Robot Operating System (ROS) simulation and development environment to all students independently of their laptop architecture and OS; 2) provide a seamless "simulation to real" experience, preserving the excitement of writing software that interacts with the physical world; and 3) share GPUs across multiple student groups, thus using resources efficiently. We describe our requirements, solution design, experience working with the solution in the educational context, and areas where it can be further improved. This may be of interest to other educators who may want to replicate our experience.

Cloud Native Robotic Applications with GPU Sharing on Kubernetes

Oct 08, 2022

Abstract: In this paper we discuss our experience in teaching the Robotic Applications Programming course at ZHAW combining the use of a Kubernetes (k8s) cluster and real, heterogeneous, robotic hardware. We discuss the main advantages of our solutions in terms of seamless "simulation to real" experience for students and the main shortcomings we encountered with networking and sharing GPUs to support deep learning workloads. We describe the current and foreseen alternatives to avoid these drawbacks in future course editions and propose a more cloud-native approach to deploying multiple robotics applications on a k8s cluster.