Lennart M. Reimann

Optimizing Binary and Ternary Neural Network Inference on RRAM Crossbars using CIM-Explorer

May 20, 2025

Abstract: Using Resistive Random Access Memory (RRAM) crossbars in Computing-in-Memory (CIM) architectures offers a promising solution to overcome the von Neumann bottleneck. Due to non-idealities like cell variability, RRAM crossbars are often operated in binary mode, utilizing only two states: Low Resistive State (LRS) and High Resistive State (HRS). Binary Neural Networks (BNNs) and Ternary Neural Networks (TNNs) are well-suited for this hardware due to their efficient mapping. Existing software projects for RRAM-based CIM typically focus on only one aspect: compilation, simulation, or Design Space Exploration (DSE). Moreover, they often rely on classical 8-bit quantization. To address these limitations, we introduce CIM-Explorer, a modular toolkit for optimizing BNN and TNN inference on RRAM crossbars. CIM-Explorer includes an end-to-end compiler stack, multiple mapping options, and simulators, enabling a DSE flow for accuracy estimation across different crossbar parameters and mappings. CIM-Explorer can accompany the entire design process, from early accuracy estimation for specific crossbar parameters, to selecting an appropriate mapping, and compiling BNNs and TNNs for a finalized crossbar chip. In DSE case studies, we demonstrate the expected accuracy for various mappings and crossbar parameters. CIM-Explorer can be found on GitHub.
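To make the idea of mapping ternary weights onto two-state cells concrete, here is a minimal sketch of one common differential scheme, in which each weight occupies a pair of cells. The abstract does not specify CIM-Explorer's mapping options, so the pairing below, the function names `map_ternary_weights` and `ideal_outputs`, and the idealized conductance values are all assumptions made for illustration, not the toolkit's API.

```python
LRS, HRS = "LRS", "HRS"

# Assumed differential mapping: one ternary weight -> (positive cell, negative cell).
CELL_PAIR = {+1: (LRS, HRS), -1: (HRS, LRS), 0: (HRS, HRS)}


def map_ternary_weights(weights):
    """Translate a matrix of weights in {-1, 0, +1} into pairs of cell states."""
    return [[CELL_PAIR[w] for w in row] for row in weights]


def ideal_outputs(cells, inputs, g_lrs=1.0, g_hrs=0.0):
    """Idealized readout: per row, accumulate input * (G_pos - G_neg).

    The default conductances are hypothetical and ignore all non-idealities
    such as cell variability.
    """
    g = {LRS: g_lrs, HRS: g_hrs}
    return [
        sum(x * (g[pos] - g[neg]) for x, (pos, neg) in zip(inputs, row))
        for row in cells
    ]


weights = [[+1, -1, 0], [-1, -1, +1]]
cells = map_ternary_weights(weights)
print(ideal_outputs(cells, [1, 1, 1]))
```

With ideal conductances the readout reproduces the ternary dot product. Setting `g_hrs` above zero in this sketch shows how a finite HRS conductance would shrink the difference between the two cells of a pair.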

Introducing Instruction-Accurate Simulators for Performance Estimation of Autotuning Workloads

May 19, 2025

Abstract: Accelerating Machine Learning (ML) workloads requires efficient methods due to their large optimization space. Autotuning has emerged as an effective approach for systematically evaluating variations of implementations. Traditionally, autotuning requires the workloads to be executed on the target hardware (HW). We present an interface that allows executing autotuning workloads on simulators. This approach offers high scalability when the availability of the target HW is limited, as many simulations can be run in parallel on any accessible HW. Additionally, we evaluate the feasibility of using fast instruction-accurate simulators for autotuning. We train various predictors to forecast the performance of ML workload implementations on the target HW based on simulation statistics. Our results demonstrate that the tuned predictors are highly effective. The best workload implementation in terms of actual run time on the target HW is always within the top 3% of predictions for the tested x86, ARM, and RISC-V-based architectures. In the best case, this approach outperforms native execution on the target HW for embedded architectures when running as few as three samples on three simulators in parallel.
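The "top 3% of predictions" criterion can be read as a ranking check: sort the candidate implementations by predicted run time and test whether the one with the lowest measured run time falls inside the leading 3%. The sketch below shows one way to compute that check. The function name `best_within_top_fraction`, the rounding of the cutoff, and the sample data are assumptions for illustration and are not taken from the paper.

```python
import math


def best_within_top_fraction(predicted, measured, fraction=0.03):
    """Return True if the implementation with the lowest measured run time
    is ranked within the top `fraction` of candidates by predicted run time."""
    if len(predicted) != len(measured) or not predicted:
        raise ValueError("need two non-empty lists of equal length")
    # Index of the truly fastest implementation on the target hardware.
    best = min(range(len(measured)), key=measured.__getitem__)
    # Candidate indices ordered from best to worst predicted run time.
    ranking = sorted(range(len(predicted)), key=predicted.__getitem__)
    # Assumed convention: round the cutoff up and keep at least one candidate.
    cutoff = max(1, math.ceil(fraction * len(predicted)))
    return best in ranking[:cutoff]


# Hypothetical data: 100 candidates, the truly fastest one is predicted second best.
predicted = [float(i) for i in range(100)]
measured = [10.0] * 100
measured[1] = 1.0
print(best_within_top_fraction(predicted, measured))
```

Under this reading, a tuner would only need to run the top-ranked 3% of candidates on the real target to be sure of finding the fastest implementation.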

Logic Locking at the Frontiers of Machine Learning: A Survey on Developments and Opportunities

Jul 21, 2021

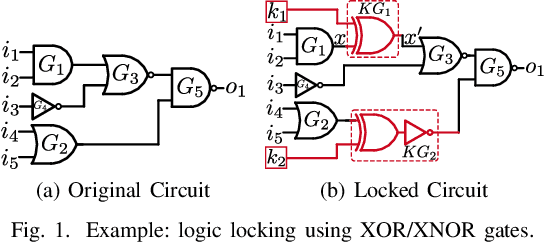

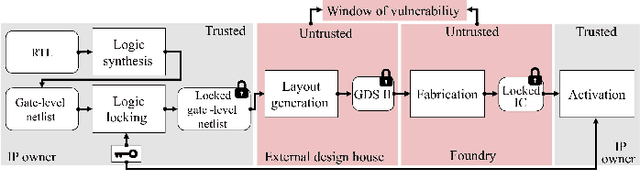

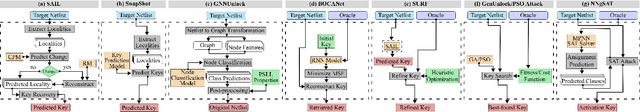

Abstract: In the past decade, considerable progress has been made in the design and evaluation of logic locking, a premier technique to safeguard the integrity of integrated circuits throughout the electronics supply chain. However, the widespread proliferation of machine learning has recently introduced a new pathway to evaluating logic locking schemes. This paper summarizes the recent developments in logic locking attacks and countermeasures at the frontiers of contemporary machine learning models. Based on the presented work, the key takeaways, opportunities, and challenges are highlighted to offer recommendations for the design of next-generation logic locking.

Deceptive Logic Locking for Hardware Integrity Protection against Machine Learning Attacks

Jul 19, 2021

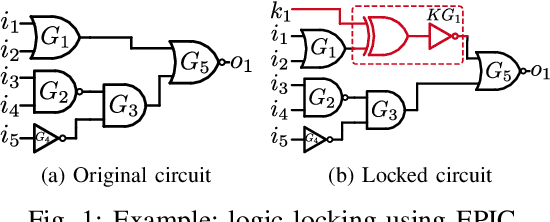

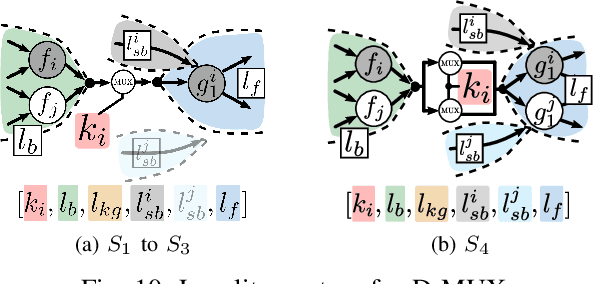

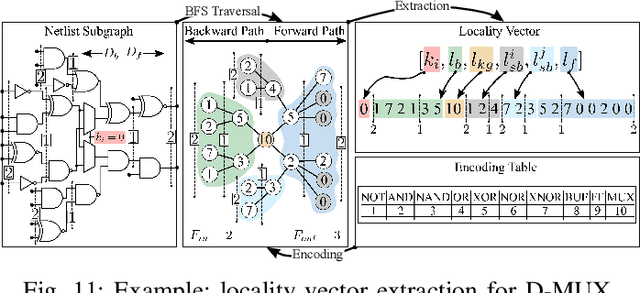

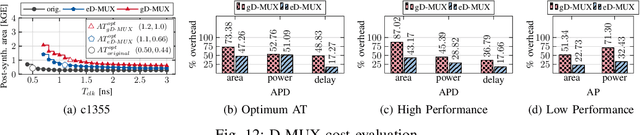

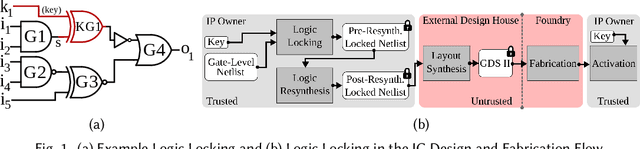

Abstract: Logic locking has emerged as a prominent key-driven technique to protect the integrity of integrated circuits. However, novel machine-learning-based attacks have recently been introduced to challenge the security foundations of locking schemes. These attacks are able to recover a significant percentage of the key without having access to an activated circuit. This paper addresses this issue through two focal points. First, we present a theoretical model to test locking schemes for key-related structural leakage that can be exploited by machine learning. Second, based on the theoretical model, we introduce D-MUX: a deceptive multiplexer-based logic-locking scheme that is resilient against structure-exploiting machine learning attacks. Through the design of D-MUX, we uncover a major fallacy in existing multiplexer-based locking schemes in the form of a structural-analysis attack. Finally, an extensive cost evaluation of D-MUX is presented. To the best of our knowledge, D-MUX is the first machine-learning-resilient locking scheme capable of protecting against all known learning-based attacks. Hereby, the presented work offers a starting point for the design and evaluation of future-generation logic locking in the era of machine learning.

Challenging the Security of Logic Locking Schemes in the Era of Deep Learning: A Neuroevolutionary Approach

Nov 30, 2020

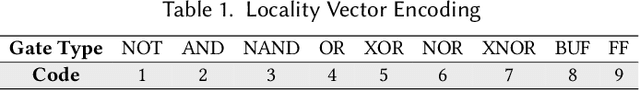

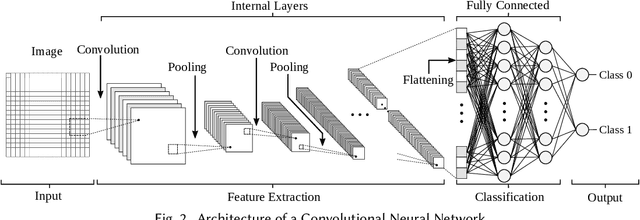

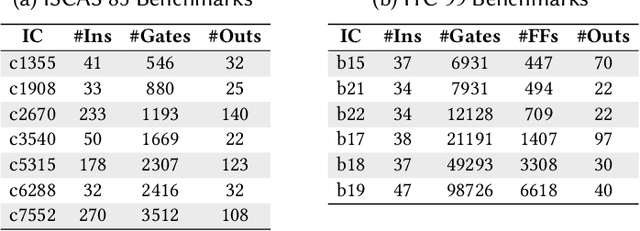

Abstract: Logic locking is a prominent technique to protect the integrity of hardware designs throughout the integrated circuit design and fabrication flow. However, in recent years, the security of locking schemes has been thoroughly challenged by the introduction of various deobfuscation attacks. As in most research branches, deep learning is being introduced in the domain of logic locking as well. Therefore, in this paper we present SnapShot: a novel attack on logic locking that is the first of its kind to utilize artificial neural networks to directly predict a key bit value from a locked synthesized gate-level netlist without using a golden reference. Hereby, the attack uses a simpler yet more flexible learning model compared to existing work. Two different approaches are evaluated. The first approach is based on a simple feedforward fully connected neural network. The second approach utilizes genetic algorithms to evolve more complex convolutional neural network architectures specialized for the given task. The attack flow offers a generic and customizable framework for attacking locking schemes using machine learning techniques. We perform an extensive evaluation of SnapShot for two realistic attack scenarios, comprising both reference benchmark circuits as well as silicon-proven RISC-V core modules. The evaluation results show that SnapShot achieves an average key prediction accuracy of 82.60% for the selected attack scenario, with a significant performance increase of 10.49 percentage points compared to the state of the art. Moreover, SnapShot outperforms the existing technique on all evaluated benchmarks. The results indicate that the security foundation of common logic locking schemes is built on questionable assumptions. The conclusions of the evaluation offer insights into the challenges of designing future logic locking schemes that are resilient to machine learning attacks.
