Kok Yew Ng

Design and Development of a Robust Tolerance Optimisation Framework for Automated Optical Inspection in Semiconductor Manufacturing

May 06, 2025

Abstract: Automated Optical Inspection (AOI) is widely used across various industries, including surface mount technology in semiconductor manufacturing. One of the key challenges in AOI is optimising inspection tolerances. Traditionally, this process relies heavily on the expertise and intuition of engineers, making it subjective and prone to inconsistency. To address this, we are developing an intelligent, data-driven approach to optimise inspection tolerances in a more objective and consistent manner. Most existing research in this area focuses primarily on minimising false calls, often at the risk of allowing actual defects to go undetected. This oversight can compromise product quality, especially in critical sectors such as medical, defence, and automotive industries. Our approach introduces the use of percentile rank, amongst other logical strategies, to ensure that genuine defects are not overlooked. With continued refinement, our method aims to reach a point where every flagged item is a true defect, thereby eliminating the need for manual inspection. Our proof of concept achieved an 18% reduction in false calls at the 80th percentile rank, while maintaining a 100% recall rate. This makes the system both efficient and reliable, offering significant time and cost savings.
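The abstract does not spell out how the percentile rank is turned into a tolerance, so the following is only a minimal sketch of one plausible reading, not the authors' method. It assumes a single scalar deviation measurement per inspected item, sets the tolerance at a chosen percentile of the deviations seen on known-good items, and caps it at the smallest deviation seen on a known defect so that recall on known defects stays at 100%. The names `percentile`, `choose_tolerance`, and `evaluate`, and the flagging rule `deviation >= tolerance`, are hypothetical.

```python
def percentile(values, p):
    """Linear-interpolation percentile (p in [0, 100]) of a non-empty list."""
    ordered = sorted(values)
    if len(ordered) == 1:
        return ordered[0]
    pos = (len(ordered) - 1) * p / 100.0
    lo = int(pos)
    hi = min(lo + 1, len(ordered) - 1)
    return ordered[lo] + (ordered[hi] - ordered[lo]) * (pos - lo)


def choose_tolerance(good_deviations, defect_deviations, p=80.0):
    """Pick a tolerance; items with deviation >= tolerance are flagged.

    Assumption: the tolerance is the p-th percentile of known-good
    deviations, capped at the smallest known-defect deviation so that
    every known defect is still flagged.
    """
    candidate = percentile(good_deviations, p)
    if defect_deviations:
        candidate = min(candidate, min(defect_deviations))
    return candidate


def evaluate(good_deviations, defect_deviations, tolerance):
    """Return (false_calls, recall) for a given tolerance."""
    false_calls = sum(1 for d in good_deviations if d >= tolerance)
    caught = sum(1 for d in defect_deviations if d >= tolerance)
    recall = caught / len(defect_deviations) if defect_deviations else 1.0
    return false_calls, recall
```

Raising `p` loosens the tolerance and reduces false calls, while the cap keeps known defects flagged; whether the published framework enforces recall in this way is not stated in the abstract.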

Learning to Predict Grip Quality from Simulation: Establishing a Digital Twin to Generate Simulated Data for a Grip Stability Metric

Feb 06, 2023

Abstract: A robust grip is key to successful manipulation and joining of work pieces involved in any industrial assembly process. Stability of a grip depends on geometric and physical properties of the object as well as the gripper itself. Current state-of-the-art algorithms can usually predict if a grip would fail. However, they are not able to predict the force at which the gripped object starts to slip, which is critical as the object might be subjected to external forces, e.g. when joining it with another object. This research project aims to develop an AI-based approach for a grip metric based on tactile sensor data capturing the physical interactions between gripper and object. The goal is to predict, before the object is manipulated, the maximum force that can be applied to it before it begins to slip. The RGB image of the contact surface between the object and gripper jaws, obtained from GelSight tactile sensors during the initial phase of the grip, serves as the training input for the grip metric. To generate such a data set, a pull experiment is designed using a UR 5 robot. Performing these experiments in real life to populate the data set is time-consuming, since different object classes, geometries, material properties and surface textures need to be considered to enhance the robustness of the prediction algorithm. Hence, a simulation model of the experimental setup has been developed to both speed up and automate the data generation process. In this paper, the design of this digital twin and the accuracy of the synthetic data are presented. State-of-the-art image comparison algorithms show that the simulated RGB images of the contact surface match the experimental data. In addition, the maximum pull forces can be reproduced for different object classes and grip scenarios. As a result, the synthetically generated data can be further used to train the neural grip metric network.

Seizure Classification Using Parallel Genetic Naive Bayes Classifiers

Oct 06, 2021

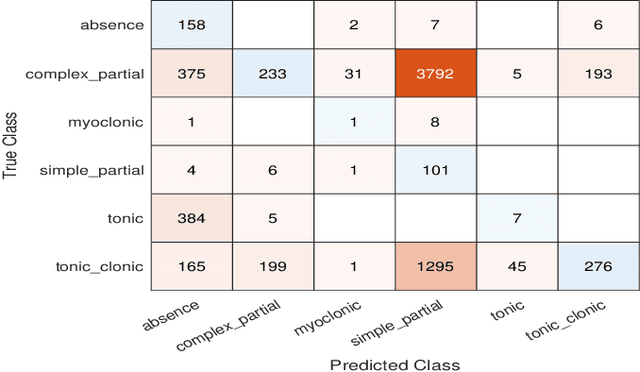

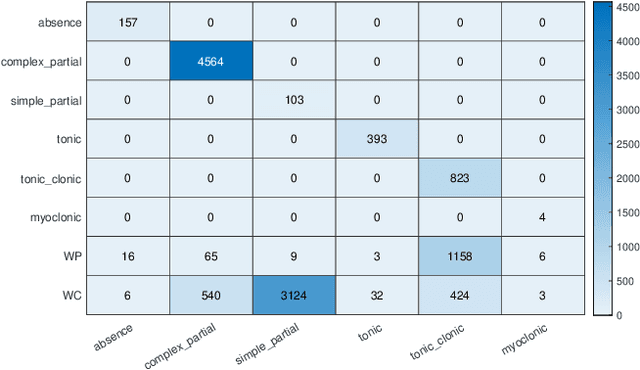

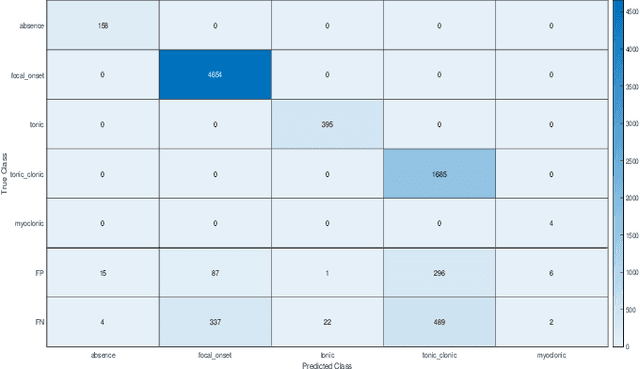

Abstract: Epilepsy affects 50 million people worldwide and is one of the most common serious brain disorders. Seizure detection and classification is a valuable tool for managing the condition. An automated detection algorithm will allow for accurate diagnosis. This study proposes a method using unique features with a novel parallel classifier trained using a genetic algorithm. Ictal states from the EEG are segmented into 1.8 s windows, and each epoch is then decomposed into 13 different features extracted from the first intrinsic mode function (IMF). All of the features are fed into a genetic algorithm (Binary Grey Wolf Optimisation Option 1) with a Naive Bayes classifier. Combining the simple partial and complex partial seizures provides the highest accuracy of all the models tested.
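The segmentation step can be illustrated with a short sketch. The abstract gives only the 1.8 s window length, so the sampling rate, the use of non-overlapping windows, and the dropping of a trailing partial window are all assumptions here, and `segment_epochs` is a hypothetical helper name rather than anything from the paper.

```python
def segment_epochs(signal, fs_hz, window_s=1.8):
    """Split a 1-D sequence of samples into consecutive fixed-length epochs.

    Assumptions: windows do not overlap, and any trailing samples that
    do not fill a whole window are discarded.
    """
    samples_per_window = int(round(fs_hz * window_s))
    if samples_per_window <= 0:
        raise ValueError("window must contain at least one sample")
    n_windows = len(signal) // samples_per_window
    return [
        signal[i * samples_per_window:(i + 1) * samples_per_window]
        for i in range(n_windows)
    ]
```

For example, at an assumed sampling rate of 250 Hz each 1.8 s epoch holds 450 samples, so a 1000-sample ictal segment yields two epochs and the last 100 samples are dropped. Feature extraction and classification would then operate on each epoch separately.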
