Kaustubh Yadav

A Comprehensive Study on Optimization Strategies for Gradient Descent In Deep Learning

Jan 07, 2021

Abstract: One of the most important parts of Artificial Neural Networks is minimizing the loss function, which tells us how good or bad our model is. To minimize the loss, we need to tune the weights and biases. Finding the minimum of a function requires its gradient, and updating the weights requires gradient descent. Regular gradient descent has some problems, however: it is quite slow and not that accurate. This article aims to give an introduction to optimization strategies for gradient descent. In addition, we discuss the architecture of these algorithms and the further optimization of neural networks in general.

A Comprehensive Survey on Aspect Based Sentiment Analysis

Jun 08, 2020

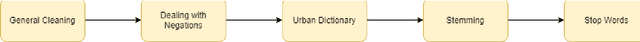

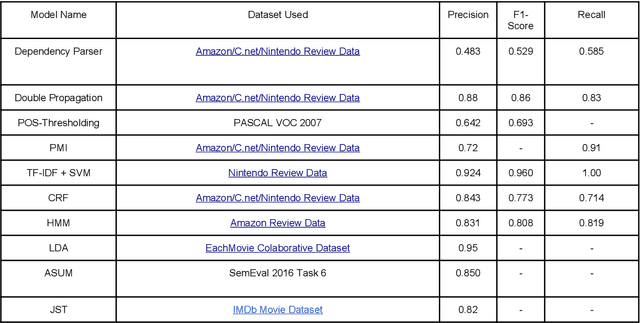

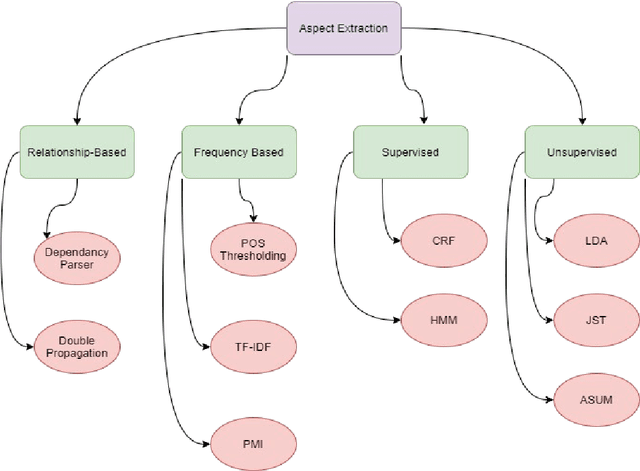

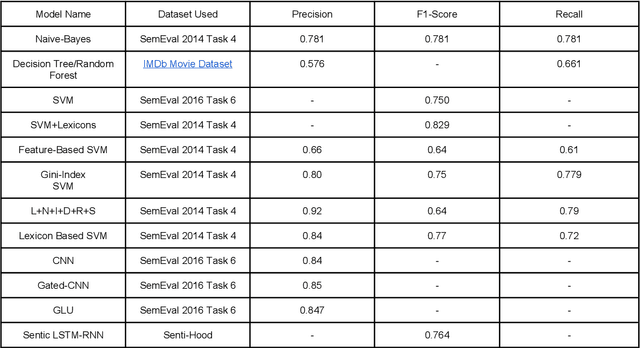

Abstract: Aspect Based Sentiment Analysis (ABSA) is the sub-field of Natural Language Processing that deals with splitting data into aspects and then extracting the sentiment information. ABSA is known to provide more information about the context than general sentiment analysis. In this study, our aim is to explore the various methodologies practiced while performing ABSA and to provide a comparative study. This survey paper discusses various solutions in depth and gives a comparison between them. It is divided into sections to give a holistic view of the process.
