Juliano Henrique Foleiss

Segment Relevance Estimation for Audio Analysis and Weakly-Labelled Classification

Nov 12, 2019

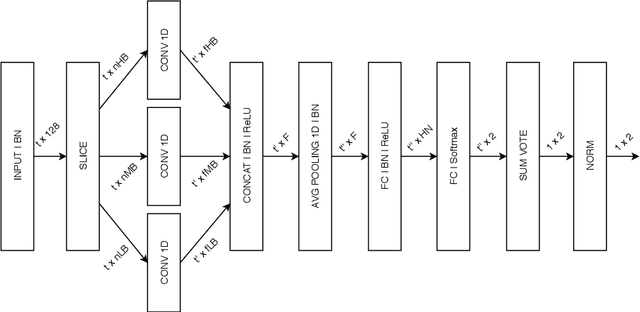

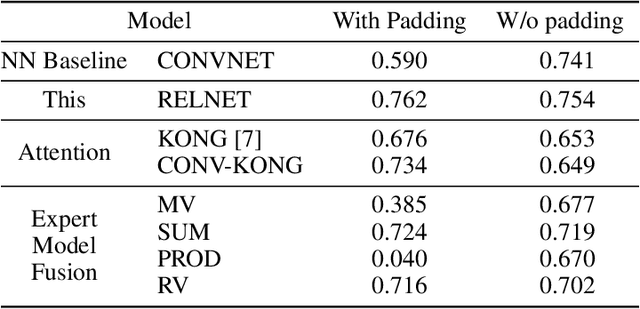

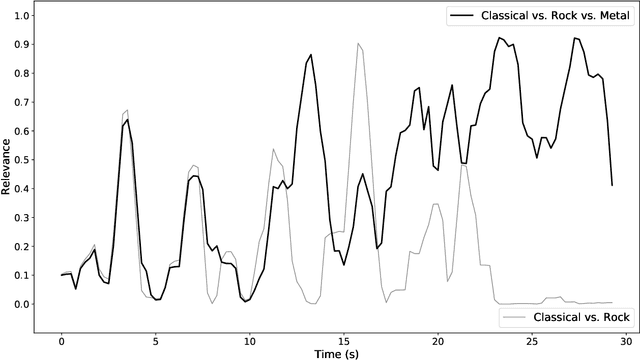

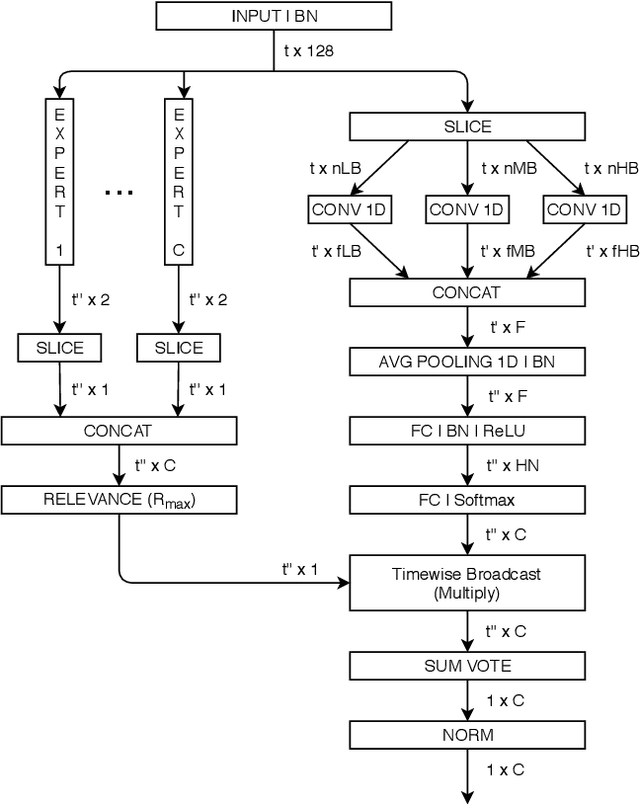

Abstract: We propose a method that quantifies the importance, namely relevance, of audio segments for classification in weakly-labelled problems. It works by drawing information from a set of class-wise one-vs-all classifiers. By selecting the classifiers used in each specific classification problem, the relevance measure adapts to different user-defined viewpoints without requiring additional neural network training. This characteristic allows the relevance measure to adapt quickly to user-defined criteria when highlighting audio segments. Such functionality can be used for computer-assisted audio analysis. We also propose a neural network architecture, namely RELNET, that leverages the relevance measure for weakly-labelled audio classification problems. RELNET was evaluated on the DCASE2018 dataset and achieved competitive classification results when compared to previous attention-based proposals.

Random Projections of Mel-Spectrograms as Low-Level Features for Automatic Music Genre Classification

Nov 12, 2019

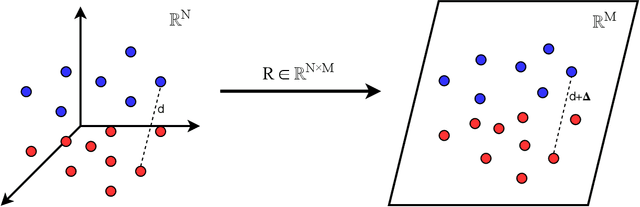

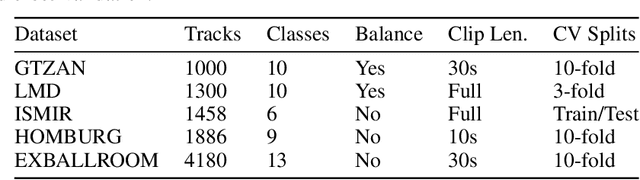

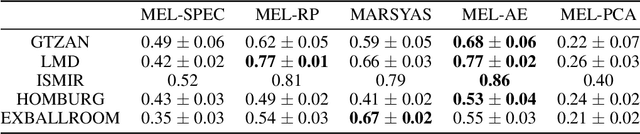

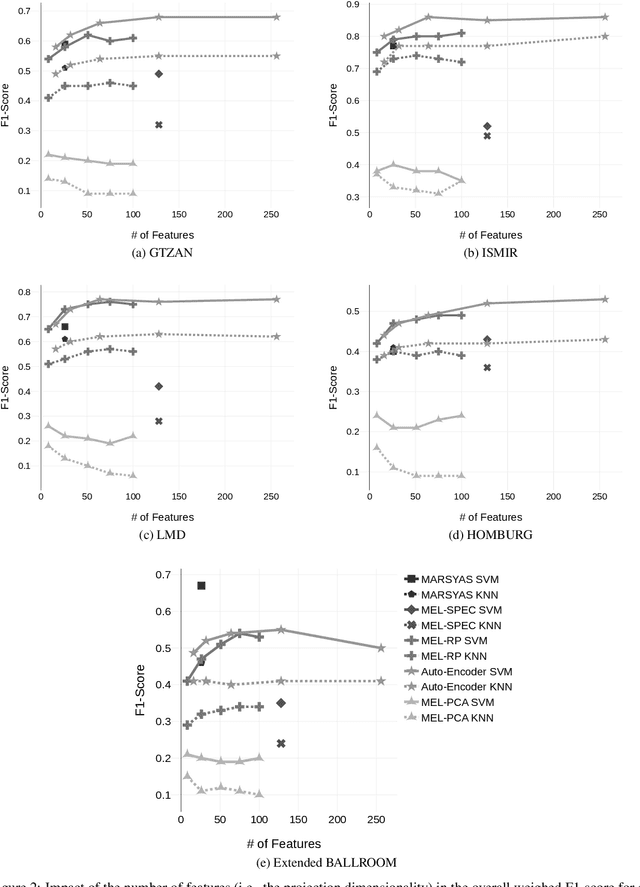

Abstract: In this work, we analyse the random projections of Mel-spectrograms as low-level features for music genre classification. This approach was compared to handcrafted features, features learned using an auto-encoder, and features obtained from a transfer learning setting. Tests on five different well-known, publicly available datasets show that random projections lead to results comparable to learned features and outperform features obtained via transfer learning in a shallow learning scenario. Random projections do not require extensive specialist knowledge and, at the same time, require less computational power for training than other projection-based low-level features. Therefore, they are a viable choice for shallow learning content-based music genre classification.
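
As a rough illustration of the idea in this abstract, the sketch below projects each frame of a Mel-spectrogram onto a lower-dimensional space using a fixed Gaussian random matrix. It is a minimal sketch under stated assumptions, not the authors' implementation: the function names, the 128 Mel bands, the 32 output dimensions, the scaling factor, and the synthetic input (random values standing in for a real Mel-spectrogram) are all hypothetical choices made for the example.

```python
import numpy as np


def random_projection_matrix(n_mels, n_components, seed=0):
    """Build a fixed Gaussian random projection matrix (hypothetical helper).

    Entries are drawn from N(0, 1) and scaled by 1/sqrt(n_components),
    a common convention for random projections. The abstract does not
    state which distribution or scaling the authors used.
    """
    rng = np.random.default_rng(seed)
    return rng.standard_normal((n_mels, n_components)) / np.sqrt(n_components)


def project_frames(mel_spec, proj):
    """Project each Mel-spectrogram frame.

    mel_spec has shape (n_frames, n_mels); proj has shape
    (n_mels, n_components). The result has shape (n_frames, n_components).
    """
    return mel_spec @ proj


# Synthetic stand-in for a Mel-spectrogram: 200 frames, 128 Mel bands.
# A real pipeline would compute this from audio instead.
data_rng = np.random.default_rng(42)
mel_spec = data_rng.random((200, 128))

proj = random_projection_matrix(n_mels=128, n_components=32)
features = project_frames(mel_spec, proj)
print(features.shape)
```

The projection matrix is drawn once and never trained, which is consistent with the abstract's point about low training cost. The projected frames would then feed whatever shallow classifier is used downstream.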
