Julian Gaal

Point Cloud Recombination: Systematic Real Data Augmentation Using Robotic Targets for LiDAR Perception Validation

May 05, 2025

Abstract: The validation of LiDAR-based perception of intelligent mobile systems operating in open-world applications remains a challenge due to the variability of real environmental conditions. Virtual simulations allow the generation of arbitrary scenes under controlled conditions but lack physical sensor characteristics, such as intensity responses or material-dependent effects. In contrast, real-world data offers true sensor realism but provides less control over influencing factors, hindering sufficient validation. Existing approaches address this problem with augmentation of real-world point cloud data by transferring objects between scenes. However, these methods do not consider validation and remain limited in controllability because they rely on empirical data. We address these limitations by proposing Point Cloud Recombination, which systematically augments captured point cloud scenes by integrating point clouds acquired from physical target objects measured in controlled laboratory environments. This enables the creation of vast amounts and varieties of repeatable, physically accurate test scenes with respect to phenomena-aware occlusions with registered 3D meshes. Using the Ouster OS1-128 Rev7 sensor, we demonstrate the augmentation of real-world urban and rural scenes with humanoid targets featuring varied clothing and poses, for repeatable positioning. We show that the recombined scenes closely match real sensor outputs, enabling targeted testing, scalable failure analysis, and improved system safety. By providing controlled yet sensor-realistic data, our method enables trustworthy conclusions about the limitations of specific sensors in combination with their algorithms, e.g., object detection.

FeatSense -- A Feature-based Registration Algorithm with GPU-accelerated TSDF-Mapping Backend for NVIDIA Jetson Boards

Oct 09, 2023

Abstract: This paper presents FeatSense, a feature-based GPU-accelerated SLAM system for high-resolution LiDARs, combined with a map generation algorithm for real-time generation of large Truncated Signed Distance Fields (TSDFs) on embedded hardware. FeatSense uses LiDAR point cloud features for odometry estimation and point cloud registration. The registered point clouds are integrated into a global TSDF representation. FeatSense is intended to run on embedded systems with an integrated GPU accelerator, such as NVIDIA Jetson boards. In this paper, we present a real-time capable TSDF-SLAM system specially tailored for closely coupled CPU/GPU systems. The implementation is evaluated in various structured and unstructured environments and benchmarked against existing reference datasets. The main contribution of this paper is the ability to register up to 128 scan lines of an Ouster OS1-128 LiDAR at 10 Hz on an NVIDIA AGX Xavier while achieving a TSDF map generation speedup by a factor of 100 compared to previous work on the same power budget.

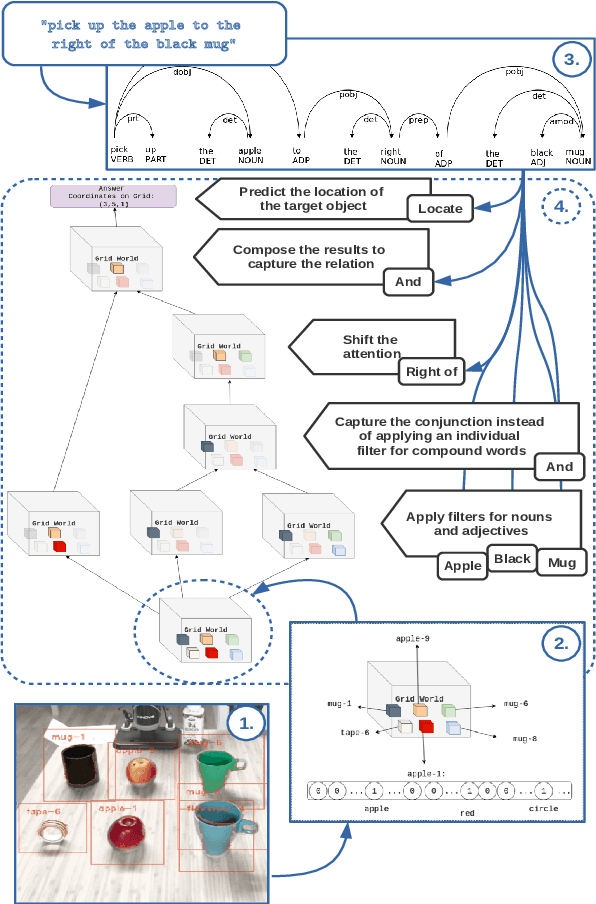

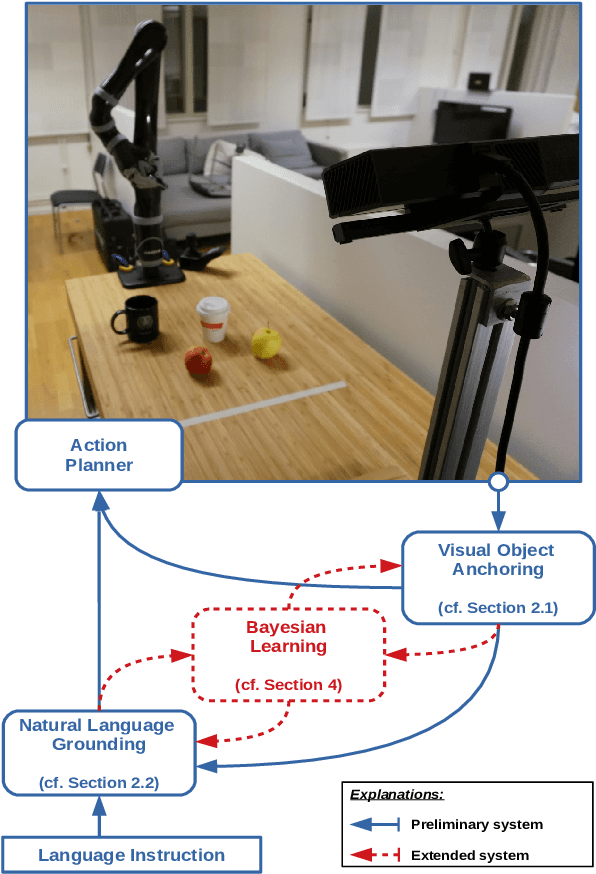

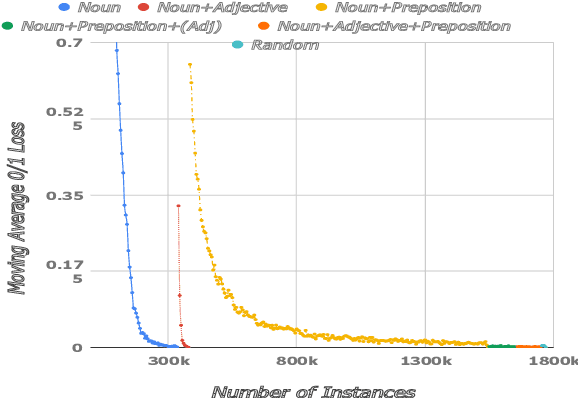

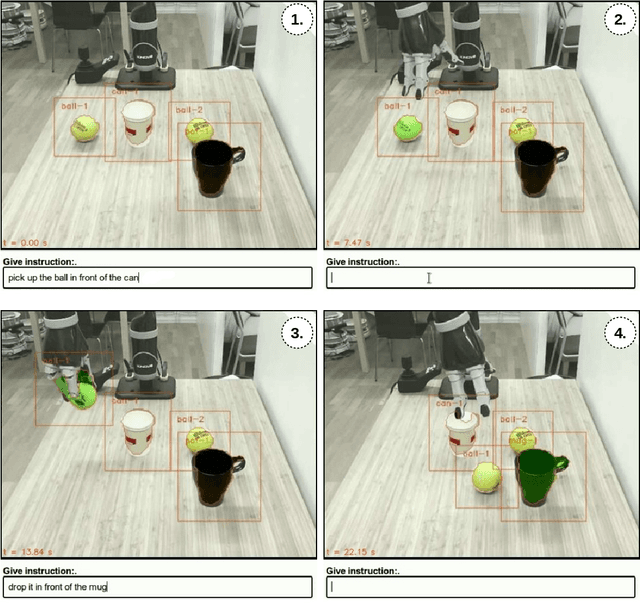

Learning from Implicit Information in Natural Language Instructions for Robotic Manipulations

Apr 30, 2019

Abstract: Human-robot interaction often occurs in the form of instructions given from a human to a robot. For a robot to successfully follow instructions, a common representation of the world and objects in it should be shared between humans and the robot so that the instructions can be grounded. Achieving this representation can be done via learning, where both the world representation and the language grounding are learned simultaneously. However, in robotics this can be a difficult task due to the cost and scarcity of data. In this paper, we tackle the problem by separately learning the world representation of the robot and the language grounding. While this approach can address the challenges in getting sufficient data, it may give rise to inconsistencies between both learned components. Therefore, we further propose Bayesian learning to resolve such inconsistencies between the natural language grounding and a robot's world representation by exploiting spatio-relational information that is implicitly present in instructions given by a human. Moreover, we demonstrate the feasibility of our approach on a scenario involving a robotic arm in the physical world.
