José M. Amigó

Applications of Entropy in Data Analysis and Machine Learning: A Review

Mar 04, 2025

Abstract: Since its origin in the thermodynamics of the 19th century, the concept of entropy has also permeated other fields of physics and mathematics, such as Classical and Quantum Statistical Mechanics, Information Theory, Probability Theory, Ergodic Theory and the Theory of Dynamical Systems. Specifically, we are referring to the classical entropies: the Boltzmann-Gibbs, von Neumann, Shannon, Kolmogorov-Sinai and topological entropies. In addition to their common name, which is historically justified (as we briefly describe in this review), another commonality of the classical entropies is the important role that they have played and are still playing in the theory and applications of their respective fields and beyond. Therefore, it is not surprising that, in the course of time, many other instances of the overarching concept of entropy have been proposed, most of them tailored to specific purposes. Following current usage, we will refer to all of them, whether classical or new, simply as entropies. The subject of this review is precisely their applications in data analysis and machine learning. The reason for focusing on these particular applications is that entropies are very well suited to characterizing probability mass distributions, typically generated by finite-state processes or symbolized signals. Therefore, we will focus on entropies defined as positive functionals on probability mass distributions and provide an axiomatic characterization that goes back to Shannon and Khinchin. Given the plethora of entropies in the literature, we have selected a representative group, including the classical ones. The applications summarized in this review finely illustrate the power and versatility of entropy in data analysis and machine learning.

* 39 pages, 3 figures, 282 references
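
As a concrete illustration of an entropy defined on a probability mass distribution, the following minimal sketch estimates the Shannon entropy (in bits) of a symbolized signal from its empirical symbol frequencies. It is not taken from the review; the function name `shannon_entropy` and the plug-in (frequency-count) estimator are assumptions made for illustration.

```python
import math
from collections import Counter


def shannon_entropy(symbols):
    """Plug-in estimate of Shannon entropy, in bits, of a symbol sequence.

    Hypothetical helper for illustration: probabilities are estimated as
    relative frequencies of the observed symbols.
    """
    counts = Counter(symbols)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("need at least one symbol")
    entropy = 0.0
    for count in counts.values():
        p = count / total
        entropy -= p * math.log2(p)  # each observed symbol has p > 0
    return entropy


if __name__ == "__main__":
    print(shannon_entropy("aabb"))  # two equally likely symbols
    print(shannon_entropy("aaaa"))  # a single symbol, no uncertainty
```

A sequence with two equally frequent symbols carries one bit per symbol, while a constant sequence carries none.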

Differentiating patients with obstructive sleep apnea from healthy controls based on heart rate - blood pressure coupling quantified by entropy-based indices

Nov 04, 2023

Abstract: We introduce an entropy-based classification method for pairs of sequences (ECPS) for quantifying mutual dependencies in heart rate and beat-to-beat blood pressure recordings. The purpose of the method is to build a classifier for data in which each item consists of two intertwined data series recorded for each subject. The method is based on ordinal patterns and uses entropy-like indices. Machine learning is used to select the subset of indices most suitable for our classification problem, in order to build an optimal yet simple model for distinguishing between patients suffering from obstructive sleep apnea and a control group.

* 7 figures
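
For readers unfamiliar with ordinal patterns, the sketch below shows the standard single-series construction (permutation entropy in the sense of Bandt and Pompe): each sliding window is replaced by the permutation that sorts it, and the Shannon entropy of the resulting pattern frequencies is computed. This is not the ECPS method, which operates on pairs of sequences and whose indices are not specified in the abstract; the function names and the tie-breaking rule are assumptions for illustration.

```python
import math
from collections import Counter


def ordinal_pattern(window):
    """Return the permutation of indices that sorts the window.

    Ties are broken by position (an assumed convention).
    """
    return tuple(sorted(range(len(window)), key=lambda i: (window[i], i)))


def permutation_entropy(series, order=3):
    """Shannon entropy, in bits, of the ordinal patterns of length `order`."""
    if order < 2 or len(series) < order:
        raise ValueError("need order >= 2 and at least `order` samples")
    patterns = Counter(
        ordinal_pattern(series[i:i + order])
        for i in range(len(series) - order + 1)
    )
    total = sum(patterns.values())
    return -sum((c / total) * math.log2(c / total) for c in patterns.values())


if __name__ == "__main__":
    print(ordinal_pattern([3, 1, 2]))
    print(permutation_entropy([1, 3, 2, 4], order=2))
```

A monotone series produces a single ordinal pattern and therefore zero permutation entropy; more irregular series spread their mass over more patterns.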

Differentiable programming: Generalization, characterization and limitations of deep learning

May 13, 2022

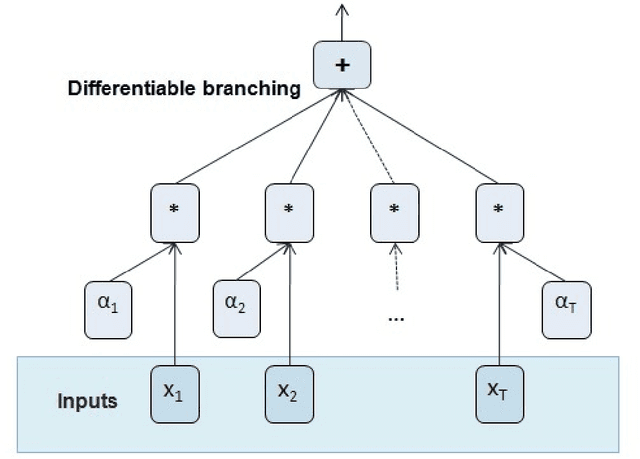

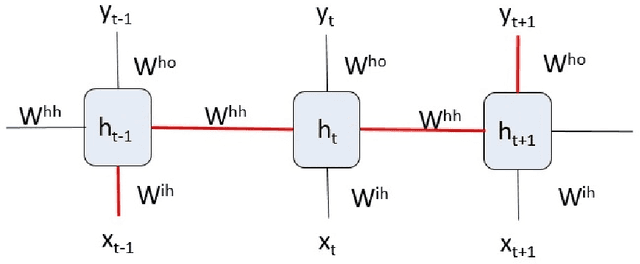

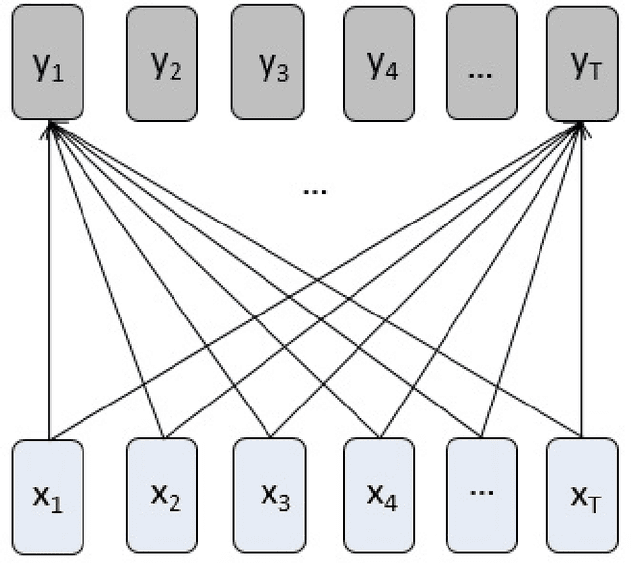

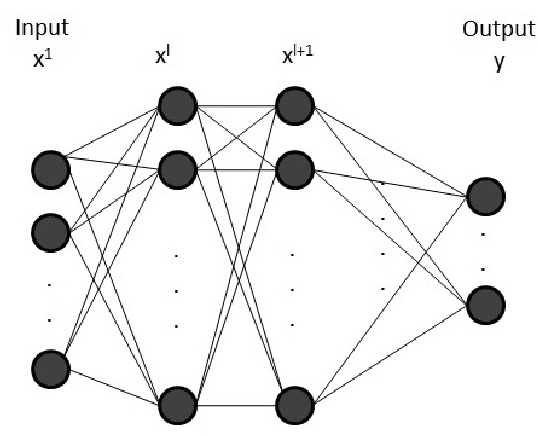

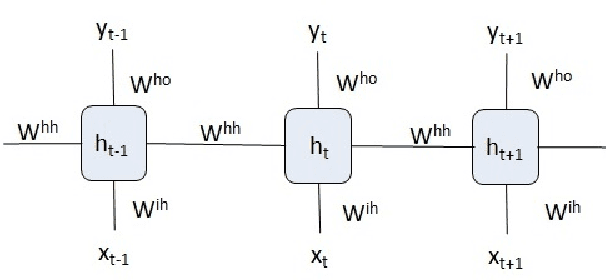

Abstract: In recent years, deep learning models have been successfully applied to several cognitive tasks. Originally inspired by neuroscience, these models are specific examples of differentiable programs. In this paper we define and motivate differentiable programming, and specify some program characteristics that allow us to incorporate the structure of the problem in a differentiable program. We analyze different types of differentiable programs, from more general to more specific, and evaluate, for a specific problem with a graph dataset, its structure and knowledge with several differentiable programs using those characteristics. Finally, we discuss some inherent limitations of deep learning and differentiable programs, which are key challenges in advancing artificial intelligence, and then analyze possible solutions.

Differentiable programming and its applications to dynamical systems

Dec 17, 2019

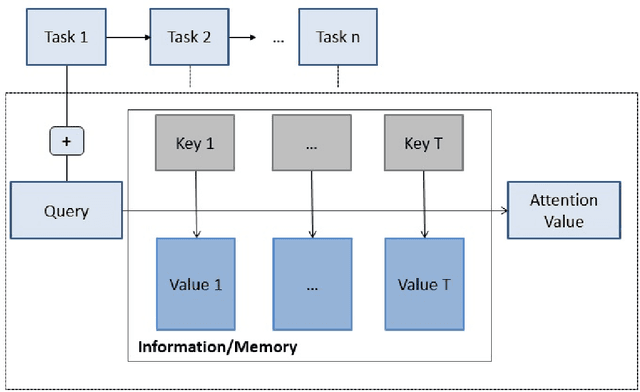

Abstract: Differentiable programming is the combination of classical neural network modules with algorithmic ones in an end-to-end differentiable model. These new models, which use automatic differentiation to calculate gradients, have new learning capabilities (reasoning, attention and memory). In this tutorial, aimed at researchers in nonlinear systems with prior knowledge of deep learning, we present this new programming paradigm, describe some of its new features such as attention mechanisms, and highlight the benefits they bring. Then, we analyse the uses and limitations of traditional deep learning models in the modeling and prediction of dynamical systems. Here, a dynamical system is meant to be a set of state variables that evolve in time under general internal and external interactions. Finally, we review the advantages and applications of differentiable programming to dynamical systems.
