Joonsung Kang

Robust Causal Directionality Inference in Quantum Inference under MNAR Observation and High-Dimensional Noise

Dec 18, 2025

Abstract: In quantum mechanics, observation actively shapes the system, paralleling the statistical notion of Missing Not At Random (MNAR). This study introduces a unified framework for robust causal directionality inference in quantum engineering, determining whether relations are system→observation, observation→system, or bidirectional. The method integrates CVAE-based latent constraints, MNAR-aware selection models, GEE-stabilized regression, penalized empirical likelihood, and Bayesian optimization. It jointly addresses quantum and classical noise while uncovering causal directionality, with theoretical guarantees for double robustness, perturbation stability, and oracle inequalities. Simulation and real-data analyses (TCGA gene expression, proteomics) show that the proposed MNAR-stabilized CVAE+GEE+AIPW+PEL framework achieves lower bias and variance, near-nominal coverage, and superior quantum-specific diagnostics. This establishes robust causal directionality inference as a key methodological advance for reliable quantum engineering.
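The MNAR analogy in this abstract can be illustrated with a small simulation. This is a minimal sketch, not the paper's method: it assumes a hypothetical logistic selection model in which the chance of observing a value depends on that value itself, shows that the complete-case mean is then biased, and shows that inverse-probability weighting recovers the full-data mean when the selection model is known. In real MNAR problems that model is unknown and can only be estimated under additional assumptions, which is the role the abstract assigns to MNAR-aware selection models. The names `logistic_selection`, `simulate_mnar`, `ipw_mean` and the `strength` parameter are illustrative.

```python
import numpy as np


def logistic_selection(v, strength=2.0):
    """Hypothetical selection model P(R = 1 | Y = v).

    It depends on the value v itself, which is what makes the missingness MNAR.
    """
    return 1.0 / (1.0 + np.exp(-strength * v))


def simulate_mnar(n=200_000, strength=2.0, seed=0):
    """Draw true values Y ~ N(0, 1) and an observation indicator R."""
    rng = np.random.default_rng(seed)
    y = rng.normal(0.0, 1.0, size=n)
    r = rng.random(n) < logistic_selection(y, strength)  # larger values are seen more often
    return y, r


def ipw_mean(y_obs, selection_prob):
    """Hajek inverse-probability-weighted mean.

    Only observed values are needed, because the weights are evaluated at y_obs.
    """
    w = 1.0 / selection_prob(y_obs)
    return float(np.sum(w * y_obs) / np.sum(w))


y, r = simulate_mnar()
y_obs = y[r]
print("full-data mean:", round(float(y.mean()), 3))
print("complete-case mean:", round(float(y_obs.mean()), 3))  # biased upward under MNAR
print("IPW mean (known selection model):", round(ipw_mean(y_obs, logistic_selection), 3))
```

Because units with large values are over-represented among the observed ones, the complete-case mean drifts upward; reweighting each observed unit by the inverse of its selection probability undoes that tilt.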

Doubly robust outlier resistant inference on causal treatment effect

Jul 23, 2025

Abstract: Outliers can severely distort causal effect estimation in observational studies, yet this issue has received limited attention in the literature. Their influence is especially pronounced in small samples, where detecting and removing outliers becomes increasingly difficult. Therefore, it is essential to estimate treatment effects robustly without excluding these influential data points. To address this, we propose a doubly robust point estimator for the average treatment effect under a contaminated model that includes outliers. Robustness in outcome regression is achieved through a robust estimating equation, while covariate balancing propensity scores (CBPS) ensure resilience in propensity score modeling. To prevent overfitting due to the inclusion of numerous parameters, we incorporate variable selection. All these components are unified under a penalized empirical likelihood framework. For confidence interval estimation, most existing approaches rely on asymptotic properties, which may be unreliable in finite samples. We derive an optimal finite-sample confidence interval for the average treatment effect using our proposed estimating equation, ensuring that the interval bounds remain unaffected by outliers. Through simulations and a real-world application involving hypertension data with outliers, we demonstrate that our method consistently outperforms existing approaches in both accuracy and robustness.
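A doubly robust ATE estimator combines an outcome regression with a propensity score model, so that it remains consistent if either one is correctly specified. The following is a minimal sketch of the standard augmented inverse-probability-weighted (AIPW) estimator, assuming linear outcome models fitted with a Huber M-estimator and a plain logistic propensity model. It does not reproduce the paper's CBPS propensity scores, variable selection, penalized empirical likelihood, or finite-sample confidence interval; the function names and tuning constants (c = 1.345, propensity clipping at 0.01) are illustrative choices. Two caveats: the Huber fit targets the conditional mean only when errors are symmetric, and the augmentation term still uses raw residuals, so this sketch is only partially resistant to outliers.

```python
import numpy as np


def _design(X):
    """Prepend an intercept column."""
    return np.column_stack([np.ones(len(X)), X])


def fit_propensity(X, t, iters=25):
    """Logistic regression via Newton-Raphson; returns estimated P(T = 1 | X)."""
    Xd = _design(X)
    beta = np.zeros(Xd.shape[1])
    for _ in range(iters):
        p = 1.0 / (1.0 + np.exp(-Xd @ beta))
        # Hessian of the negative log-likelihood, with a tiny ridge for stability
        H = Xd.T @ (Xd * (p * (1 - p))[:, None]) + 1e-8 * np.eye(Xd.shape[1])
        beta = beta + np.linalg.solve(H, Xd.T @ (t - p))
    return 1.0 / (1.0 + np.exp(-Xd @ beta))


def fit_huber(X, y, c=1.345, iters=50):
    """Huber M-estimator for a linear model via IRLS with a MAD scale estimate."""
    Xd = _design(X)
    beta = np.linalg.lstsq(Xd, y, rcond=None)[0]  # start from OLS
    for _ in range(iters):
        r = y - Xd @ beta
        s = max(np.median(np.abs(r - np.median(r))) / 0.6745, 1e-12)
        u = np.abs(r) / s
        w = np.where(u <= c, 1.0, c / np.maximum(u, 1e-12))  # downweight large residuals
        sw = np.sqrt(w)
        beta = np.linalg.lstsq(Xd * sw[:, None], y * sw, rcond=None)[0]
    return beta


def aipw_ate(X, t, y, clip=0.01):
    """AIPW estimate of the average treatment effect with Huber outcome models."""
    e = np.clip(fit_propensity(X, t), clip, 1 - clip)
    Xd = _design(X)
    m1 = Xd @ fit_huber(X[t == 1], y[t == 1])  # predicted outcome under treatment
    m0 = Xd @ fit_huber(X[t == 0], y[t == 0])  # predicted outcome under control
    psi = m1 - m0 + t * (y - m1) / e - (1 - t) * (y - m0) / (1 - e)
    return float(psi.mean())


# Usage on simulated data with a true ATE of 2
rng = np.random.default_rng(1)
n = 5000
X = rng.normal(size=(n, 2))
t = (rng.random(n) < 1 / (1 + np.exp(-(0.5 * X[:, 0] - 0.5 * X[:, 1])))).astype(float)
y = 1 + X[:, 0] + 0.5 * X[:, 1] + 2.0 * t + rng.normal(size=n)
print("AIPW ATE estimate:", round(aipw_ate(X, t, y), 3))
```

The Huber loss behaves like least squares for small residuals and like absolute loss for large ones, which bounds the influence of a single gross outlier on the outcome model coefficients.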

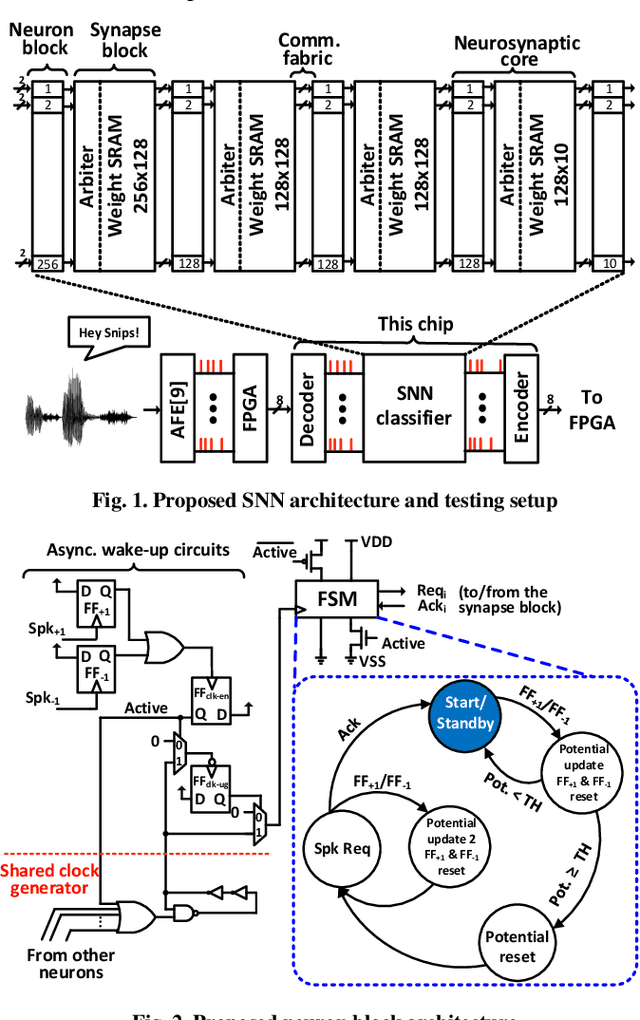

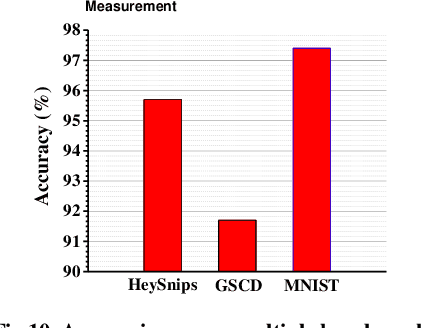

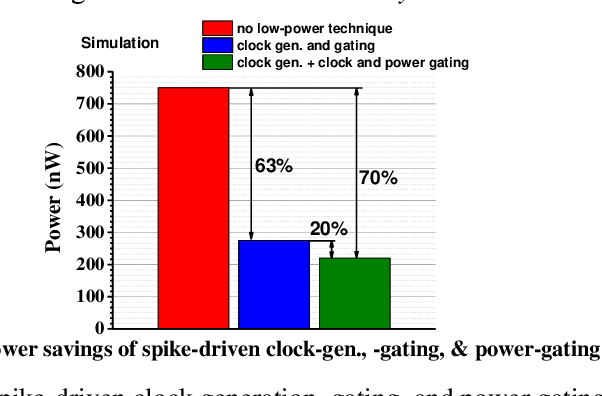

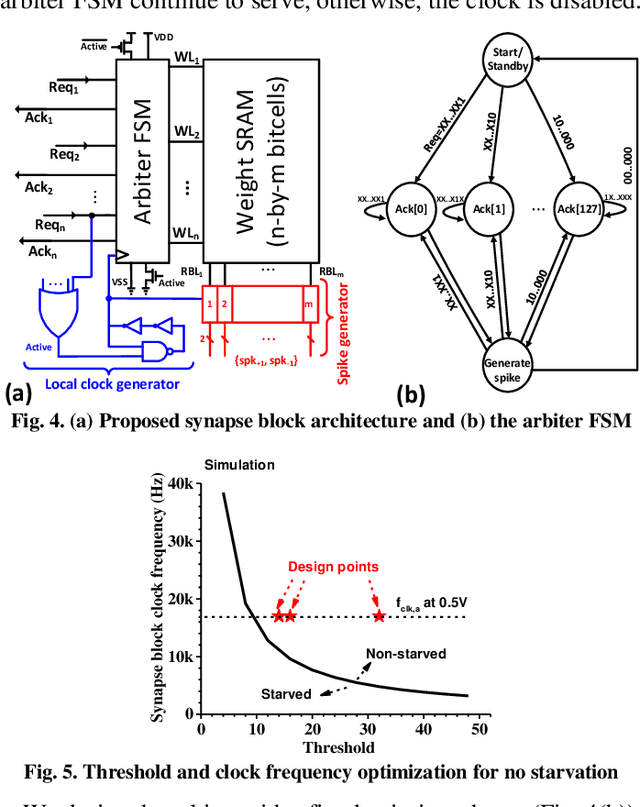

Always-On, Sub-300-nW, Event-Driven Spiking Neural Network based on Spike-Driven Clock-Generation and Clock- and Power-Gating for an Ultra-Low-Power Intelligent Device

Jun 23, 2020

Abstract: Always-on artificial intelligence (AI) functions such as keyword spotting (KWS) and visual wake-up tend to dominate total power consumption in ultra-low-power devices. A key observation is that the input signals of an always-on function are sparse in time, which a spiking neural network (SNN) classifier can leverage for power savings, because the switching activity and power consumption of SNNs tend to scale with spike rate. Toward this goal, we present a novel SNN classifier architecture for always-on functions, demonstrating sub-300-nW power consumption at competitive inference accuracy for KWS and other always-on classification workloads.
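The claim that an SNN's switching activity scales with spike rate can be illustrated in software. This sketch assumes a single leaky integrate-and-fire neuron with hypothetical weight, leak, and threshold values, and uses the number of state updates as a rough stand-in for switching activity. The event-driven version touches the neuron only when an input spike arrives and applies the leak over idle ticks in closed form, whereas the clock-driven version updates on every tick; both emit the same output spikes for distinct integer event times. It is only a software analogy to spike-driven clock generation and clock/power gating, not a model of the paper's circuit.

```python
def event_driven_lif(event_times, w=0.4, leak=0.9, threshold=1.0):
    """Leaky integrate-and-fire neuron updated only when an input spike arrives.

    Returns (output spike times, number of state updates performed).
    """
    v, last_t = 0.0, None
    out, ops = [], 0
    for t in sorted(event_times):
        if last_t is not None:
            v *= leak ** (t - last_t)  # apply the leak for all idle ticks at once
        v += w                         # integrate the incoming spike
        ops += 1
        last_t = t
        if v >= threshold:             # fire and reset
            out.append(t)
            v = 0.0
    return out, ops


def clock_driven_lif(event_times, T, w=0.4, leak=0.9, threshold=1.0):
    """Same neuron, but updated on every clock tick regardless of input activity."""
    events = set(event_times)
    v, out, ops = 0.0, [], 0
    for t in range(T):
        v = leak * v + (w if t in events else 0.0)
        ops += 1
        if v >= threshold:
            out.append(t)
            v = 0.0
    return out, ops


events = [10, 11, 12, 500, 900, 901, 902, 903]  # sparse input over 1,000 ticks
print(event_driven_lif(events))        # ([12, 902], 8)
print(clock_driven_lif(events, 1000))  # ([12, 902], 1000)
```

With 8 input events over 1,000 ticks, the event-driven neuron performs 8 updates instead of 1,000 while emitting the same spikes at ticks 12 and 902, so its work grows with input spike count rather than with elapsed time.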
