Johannes Schneider

Foundation models in brief: A historical, socio-technical focus

Dec 17, 2022

Abstract:Foundation models can be disruptive for future AI development by scaling up deep learning in terms of model size and the breadth and size of training data. These models achieve state-of-the-art performance (often through further adaptation) on a variety of tasks in domains such as natural language processing and computer vision. Foundation models exhibit a novel emergent behavior: in-context learning enables users to provide a query and a few examples from which a model derives an answer without being trained on such queries. Additionally, homogenization of models might replace a myriad of task-specific models with fewer very large models controlled by few corporations, leading to a shift in power and control over AI. This paper provides a short introduction to foundation models. It contributes by crafting a crisp distinction between foundation models and prior deep learning models, providing a history of machine learning leading to foundation models, elaborating on socio-technical aspects, i.e., organizational issues and end-user interaction, and discussing future research.
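The in-context learning described in the abstract can be illustrated with a small prompt-building sketch. It is not taken from the paper: the function name `build_few_shot_prompt`, the `Input:`/`Output:` layout, and the translation examples are all assumptions chosen for illustration. The sketch only assembles the text a user would send to a model and calls no model API.

```python
def build_few_shot_prompt(examples, query, instruction=""):
    """Assemble a few-shot prompt from (input, output) pairs and a new query.

    The "Input:"/"Output:" layout is a hypothetical convention, not one
    prescribed by the paper.
    """
    lines = []
    if instruction:
        lines.append(instruction)
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
    # The final "Output:" is left open so the model completes it.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


# Hypothetical demonstrations: the model is never trained on them,
# it only sees them as part of the prompt.
examples = [("cheese", "fromage"), ("house", "maison")]
prompt = build_few_shot_prompt(examples, "book", "Translate English to French.")
print(prompt)
```

The point of the sketch is that the "training signal" lives entirely in the prompt: changing the examples changes the task without touching model weights.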

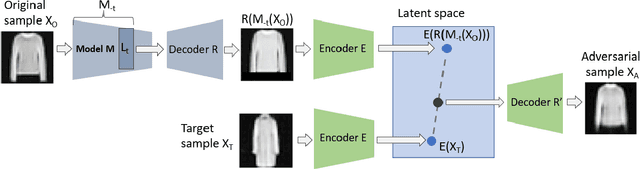

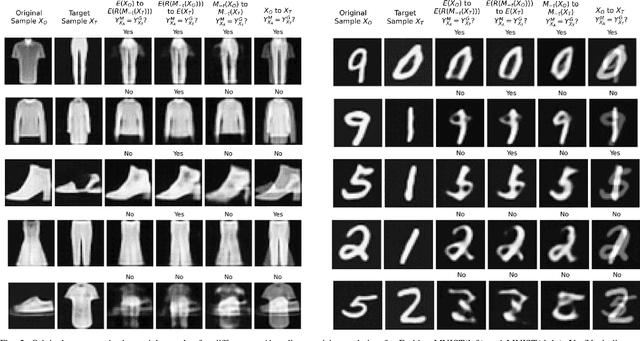

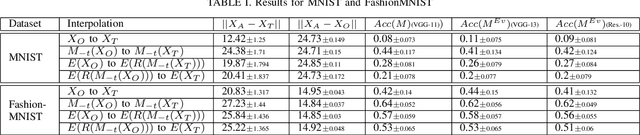

Concept-based Adversarial Attacks: Tricking Humans and Classifiers Alike

Mar 18, 2022

Abstract:We propose to generate adversarial samples by modifying activations of upper layers encoding semantically meaningful concepts. The original sample is shifted towards a target sample, yielding an adversarial sample, by using the modified activations to reconstruct the original sample. A human might (and possibly should) notice differences between the original and the adversarial sample. Depending on the attacker-provided constraints, an adversarial sample can exhibit subtle differences or appear like a "forged" sample from another class. Our approach and goal are in stark contrast to common attacks involving perturbations of single pixels that are not recognizable by humans. Our approach is relevant in, e.g., multi-stage processing of inputs, where both humans and machines are involved in decision-making because invisible perturbations will not fool a human. Our evaluation focuses on deep neural networks. We also show the transferability of our adversarial examples among networks.

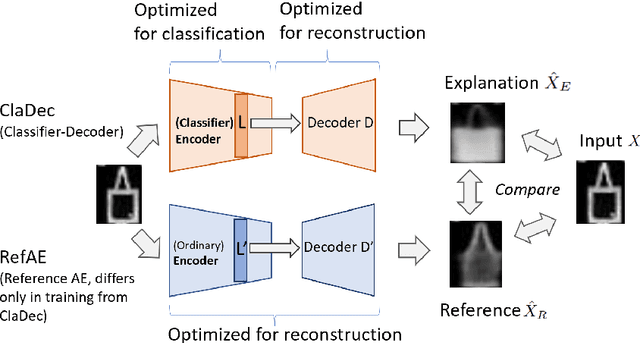

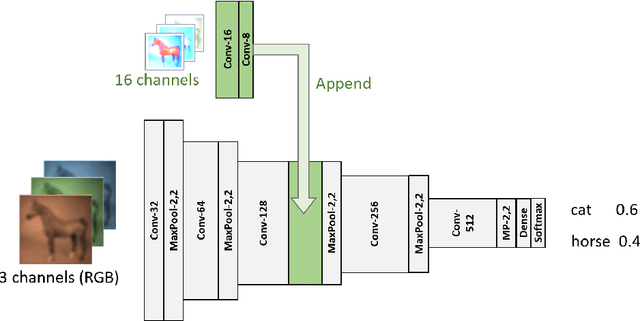

Explaining Classifiers by Constructing Familiar Concepts

Mar 07, 2022

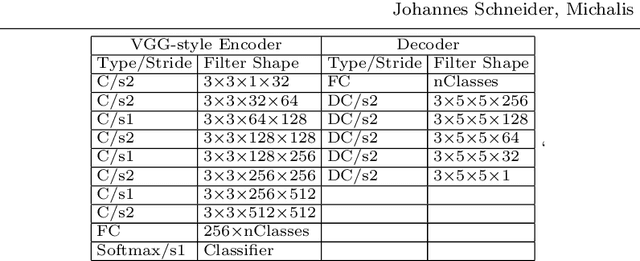

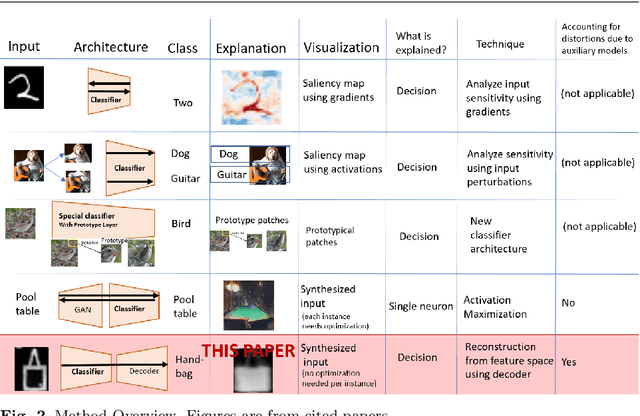

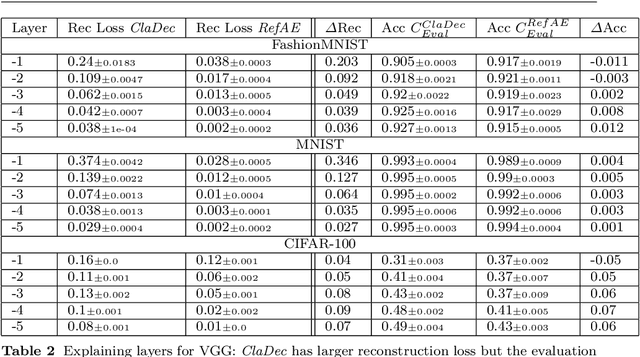

Abstract:Interpreting a large number of neurons in deep learning is difficult. Our proposed "CLAssifier-DECoder" architecture (ClaDec) facilitates the understanding of the output of an arbitrary layer of neurons or subsets thereof. It uses a decoder that transforms the incomprehensible representation of the given neurons to a representation that is more similar to the domain a human is familiar with. In an image recognition problem, one can recognize what information (or concepts) a layer maintains by contrasting reconstructed images of ClaDec with those of a conventional auto-encoder (AE) serving as reference. An extension of ClaDec allows trading comprehensibility and fidelity. We evaluate our approach for image classification using convolutional neural networks. We show that reconstructed visualizations using encodings from a classifier capture more relevant classification information than conventional AEs. This holds although AEs contain more information on the original input. Our user study highlights that even non-experts can identify a diverse set of concepts contained in images that are relevant (or irrelevant) for the classifier. We also compare against saliency-based methods that focus on pixel relevance rather than concepts. We show that ClaDec tends to highlight input areas more relevant to classification, though outcomes depend on the classifier architecture. Code is at https://github.com/JohnTailor/ClaDec

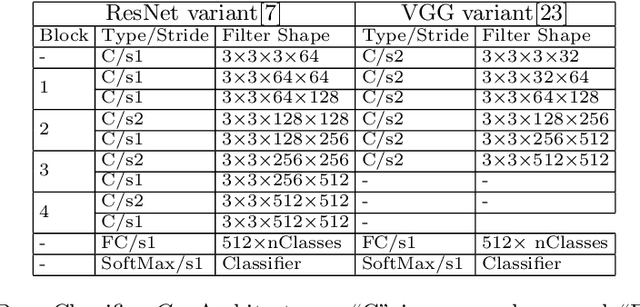

The learning phases in NN: From Fitting the Majority to Fitting a Few

Feb 16, 2022

Abstract:The learning dynamics of deep neural networks are subject to controversy. Using the information bottleneck (IB) theory, separate fitting and compression phases have been put forward but have since been heavily debated. We approach learning dynamics by analyzing a layer's reconstruction ability of the input and prediction performance based on the evolution of parameters during training. We show that, under mild assumptions on the data, a prototyping phase that initially decreases reconstruction loss is followed by a phase that reduces the classification loss of a few samples and thereby increases reconstruction loss. Aside from providing a mathematical analysis of single-layer classification networks, we also assess the behavior using common datasets and architectures from computer vision such as ResNet and VGG.

Towards Trustworthy AutoGrading of Short, Multi-lingual, Multi-type Answers

Jan 02, 2022

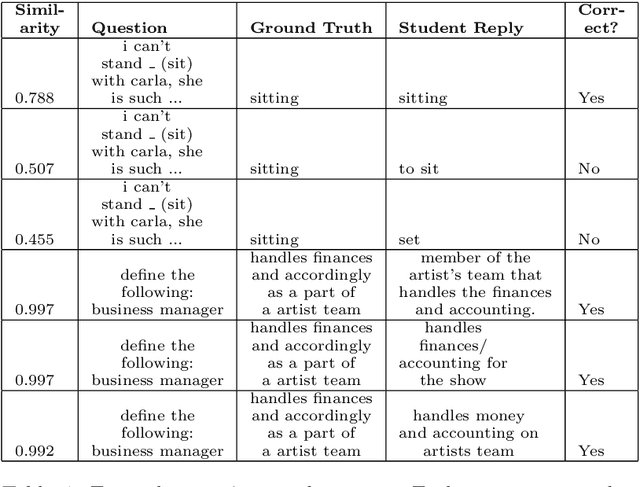

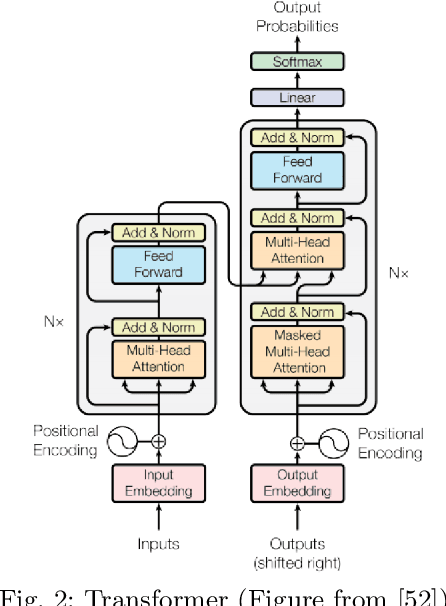

Abstract:Autograding short textual answers has become much more feasible due to the rise of NLP and the increased availability of question-answer pairs brought about by a shift to online education. Autograding performance is still inferior to human grading. The statistical and black-box nature of state-of-the-art machine learning models makes them untrustworthy, raising ethical concerns and limiting their practical utility. Furthermore, the evaluation of autograding is typically confined to small, monolingual datasets for a specific question type. This study uses a large dataset consisting of about 10 million question-answer pairs from multiple languages covering diverse fields such as math and language, and strong variation in question and answer syntax. We demonstrate the effectiveness of fine-tuning transformer models for autograding for such complex datasets. Our best hyperparameter-tuned model yields an accuracy of about 86.5%, comparable to the state-of-the-art models that are less general and more tuned to a specific type of question, subject, and language. More importantly, we address trust and ethical concerns. By involving humans in the autograding process, we show how to improve the accuracy of automatically graded answers, achieving accuracy equivalent to that of teaching assistants. We also show how teachers can effectively control the type of errors made by the system and how they can validate efficiently that the autograder's performance on individual exams is close to the expected performance.
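One generic way to involve humans in an autograding process is to auto-grade only the answers on which the model is confident and route the rest to a person. The sketch below illustrates that idea only; the abstract does not say that the paper uses confidence thresholding, so this is an assumed, swapped-in technique. The function names, the threshold, and all data are hypothetical.

```python
def split_by_confidence(predictions, threshold):
    """Split (answer_id, predicted_label, confidence) triples into
    an auto-graded list and a list deferred to human review."""
    auto, review = [], []
    for item in predictions:
        if item[2] >= threshold:
            auto.append(item)
        else:
            review.append(item)
    return auto, review


def accuracy(graded, true_labels):
    """Fraction of graded answers whose predicted label matches the truth."""
    if not graded:
        return None
    correct = sum(1 for answer_id, label, _ in graded
                  if true_labels[answer_id] == label)
    return correct / len(graded)


# Made-up model outputs and ground truth, for illustration only.
predictions = [
    ("a1", "correct", 0.97),
    ("a2", "wrong", 0.55),
    ("a3", "correct", 0.91),
    ("a4", "wrong", 0.62),
    ("a5", "wrong", 0.95),
]
true_labels = {"a1": "correct", "a2": "correct", "a3": "correct",
               "a4": "wrong", "a5": "wrong"}

auto, review = split_by_confidence(predictions, threshold=0.9)
print("deferred to humans:", [answer_id for answer_id, _, _ in review])
print("accuracy on all answers:", accuracy(predictions, true_labels))
print("accuracy on auto-graded answers:", accuracy(auto, true_labels))
```

In this toy data, the single model error falls among the low-confidence answers, so the auto-graded subset is more accurate than the full set. Raising the threshold trades more human effort for fewer automatic errors, which is one way a teacher could control the error rate.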

Domain Transformer: Predicting Samples of Unseen, Future Domains

Jun 10, 2021

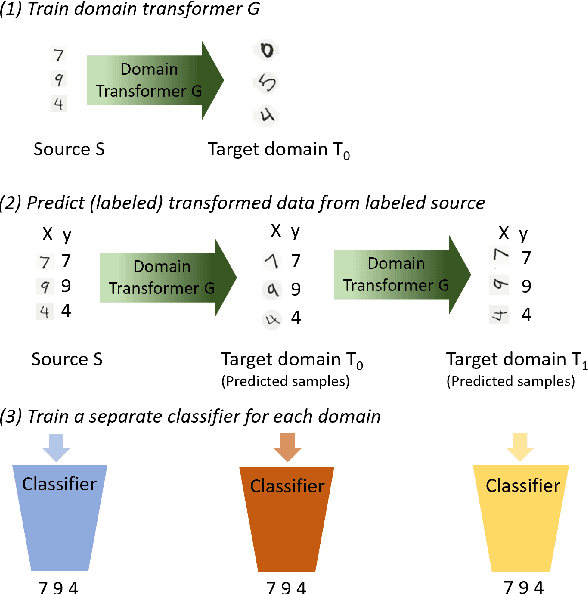

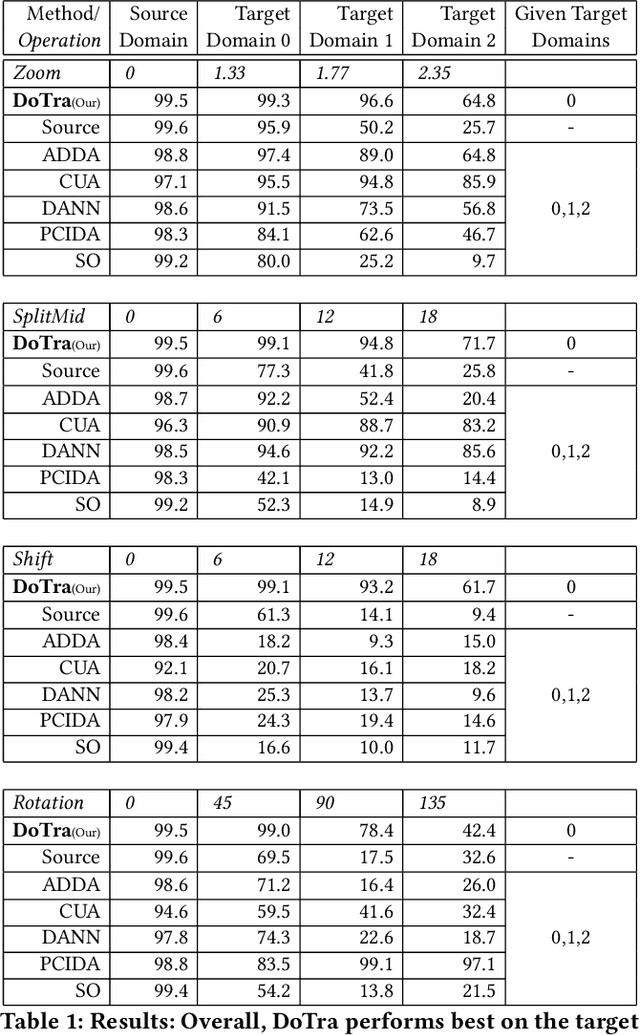

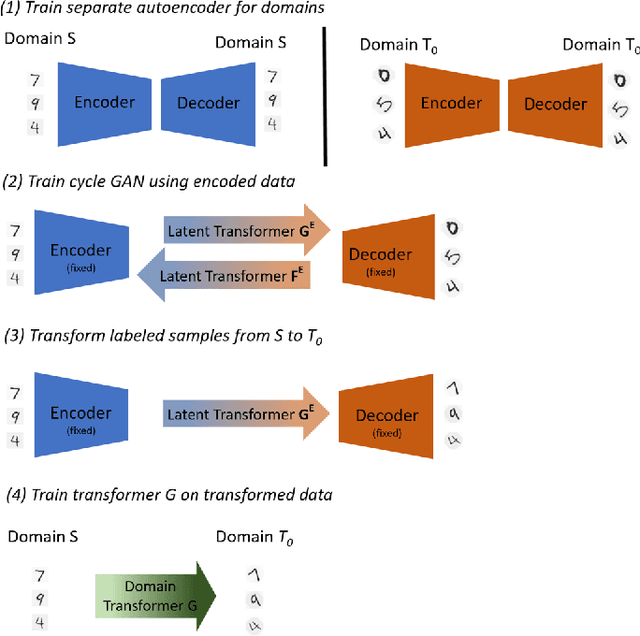

Abstract:The data distribution commonly evolves over time, leading to problems such as concept drift that often decrease classifier performance. We seek to predict unseen data (and their labels), allowing us to tackle challenges due to a non-constant data distribution in a proactive manner rather than detecting and reacting to already existing changes that might already have led to errors. To this end, we learn a domain transformer in an unsupervised manner that allows generating data of unseen domains. Our approach first matches independently learned latent representations of two given domains obtained from an auto-encoder using a Cycle-GAN. In turn, a transformation of the original samples can be learned that can be applied iteratively to extrapolate to unseen domains. Our evaluation on CNNs on image data confirms the usefulness of the approach. It also achieves very good results on the well-known problem of unsupervised domain adaptation, where labels but not samples have to be predicted.
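The idea of learning a transformation between two observed domains and applying it iteratively to reach unseen ones can be shown on a toy scale. The sketch below is not the paper's method: it replaces the auto-encoder and Cycle-GAN with simple moment matching on one-dimensional, unpaired samples, and all names and numbers are hypothetical.

```python
from statistics import mean, pstdev


def fit_affine_map(source, target):
    """Fit x -> a * x + b so the mapped source matches the target's mean
    and standard deviation. No pairing between samples is required."""
    a = pstdev(target) / pstdev(source)
    b = mean(target) - a * mean(source)
    return a, b


def extrapolate(samples, a, b, steps):
    """Apply the learned map `steps` times to move beyond the seen domains."""
    for _ in range(steps):
        samples = [a * x + b for x in samples]
    return samples


# Toy, unpaired observations from two consecutive domains (made-up values).
domain_0 = [0.0, 1.0, 2.0]
domain_1 = [6.0, 2.0, 4.0]

a, b = fit_affine_map(domain_0, domain_1)
predicted_domain_2 = extrapolate(domain_0, a, b, steps=2)
print(a, b, predicted_domain_2)
```

One step maps `domain_0` onto the statistics of `domain_1`; a second step yields a guess for a domain that was never observed. The guess is only as good as the assumption that the drift between consecutive domains stays the same.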

Creativity of Deep Learning: Conceptualization and Assessment

Dec 03, 2020

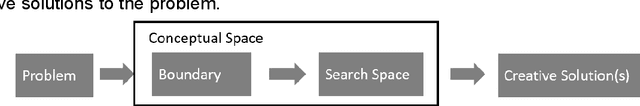

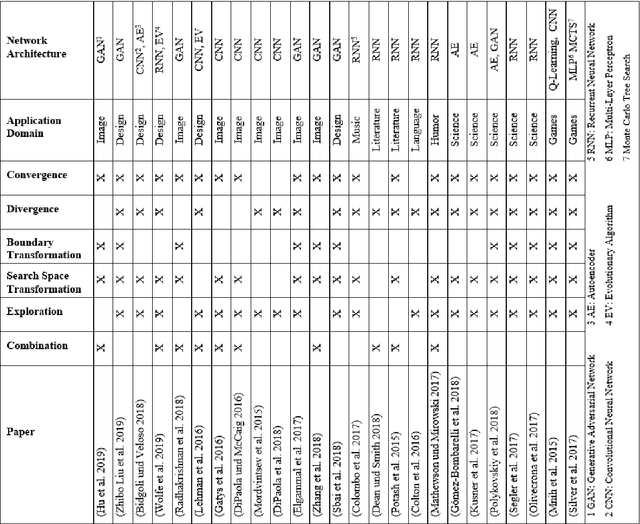

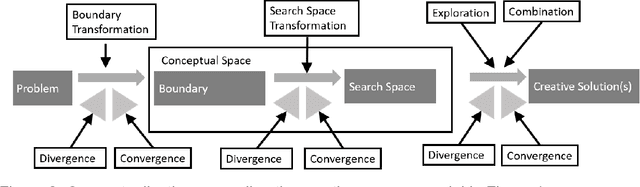

Abstract:While the potential of deep learning (DL) for automating simple tasks is already well explored, recent research has started investigating the use of deep learning for creative design, both for complete artifact creation and for supporting humans in the creation process. In this paper, we use insights from computational creativity to conceptualize and assess current applications of generative deep learning in creative domains identified in a literature review. We highlight parallels between current systems and different models of human creativity as well as their shortcomings. While deep learning yields results of high value, such as high-quality images, their novelty is typically limited for multiple reasons, such as being tied to a conceptual space defined by training data and humans. Current DL methods also do not allow for changes in the internal problem representation, and they lack the capability to identify connections across highly different domains, both of which are seen as major drivers of human creativity.

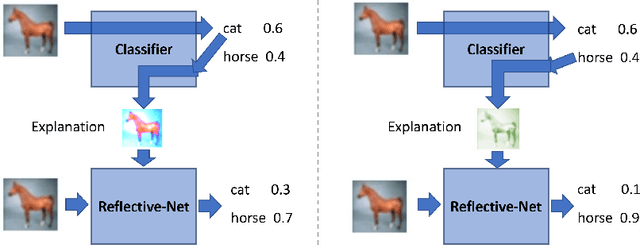

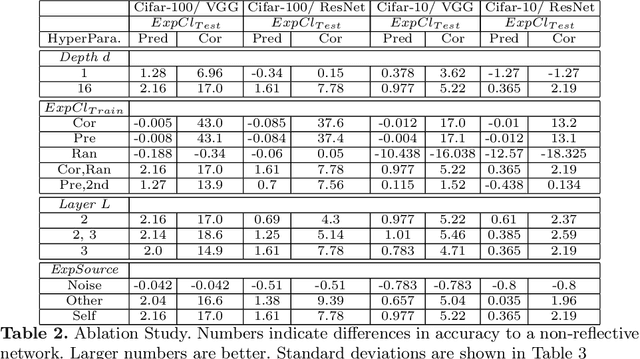

Reflective-Net: Learning from Explanations

Nov 27, 2020

Abstract:Humans possess a remarkable capability to make fast, intuitive decisions, but also to self-reflect, i.e., to explain to oneself, and to efficiently learn from explanations by others. This work provides the first steps toward mimicking this process by capitalizing on the explanations generated based on existing explanation methods, i.e., Grad-CAM. Learning from explanations combined with conventional labeled data yields significant improvements for classification in terms of accuracy and training time.

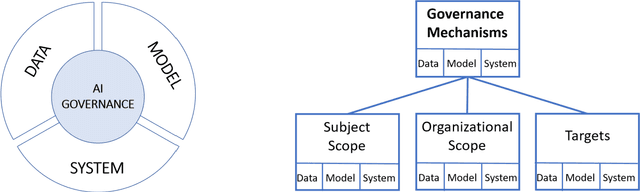

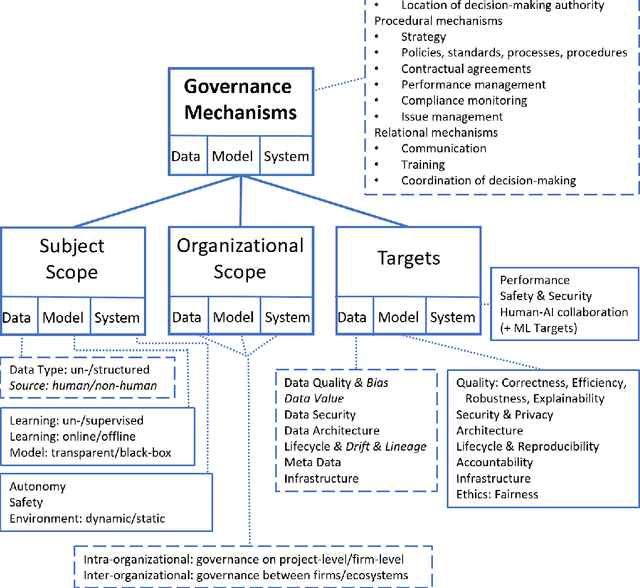

AI Governance for Businesses

Nov 20, 2020

Abstract:Artificial Intelligence (AI) governance regulates the exercise of authority and control over the management of AI. It aims at leveraging AI through effective use of data and minimization of AI-related cost and risk. While topics such as AI governance and AI ethics are thoroughly discussed on a theoretical, philosophical, societal and regulatory level, there is limited work on AI governance targeted to companies and corporations. This work views AI products as systems, where key functionality is delivered by machine learning (ML) models leveraging (training) data. We derive a conceptual framework by synthesizing literature on AI and related fields such as ML. Our framework decomposes AI governance into governance of data, (ML) models and (AI) systems along four dimensions. It relates to existing IT and data governance frameworks and practices. It can be adopted by practitioners and academics alike. For practitioners the synthesis of mainly research papers, but also practitioner publications and publications of regulatory bodies provides a valuable starting point to implement AI governance, while for academics the paper highlights a number of areas of AI governance that deserve more attention.

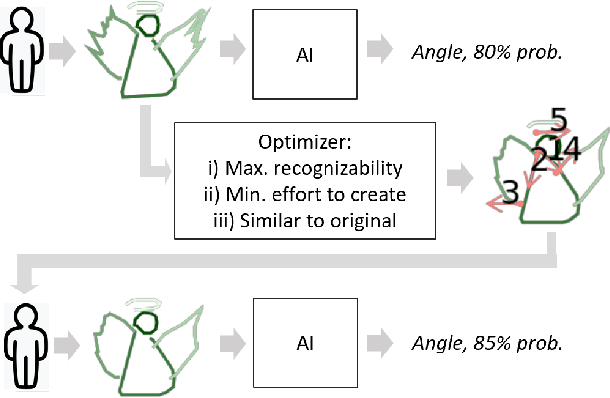

Humans learn too: Better Human-AI Interaction using Optimized Human Inputs

Sep 19, 2020

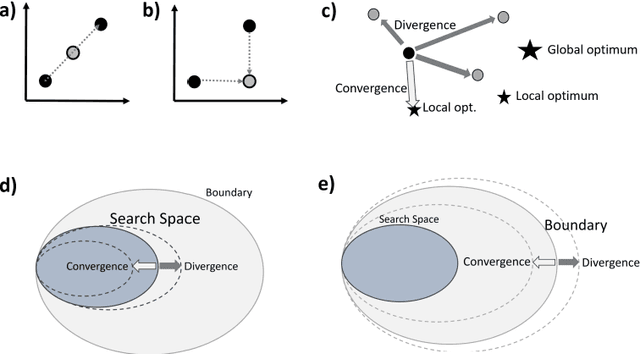

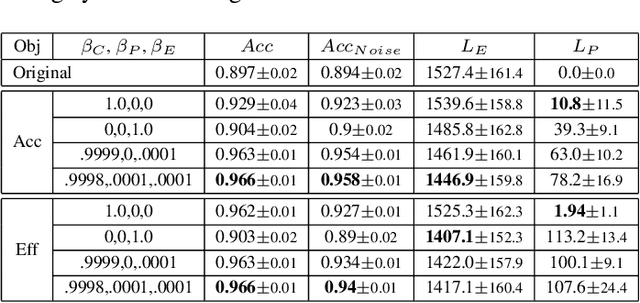

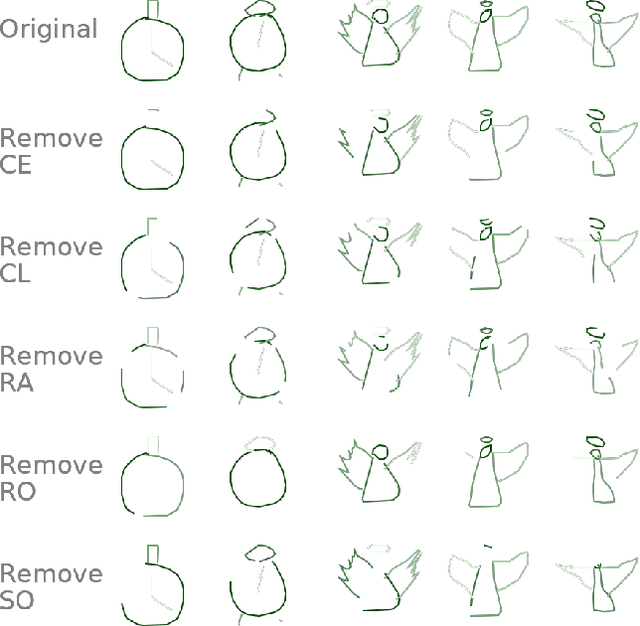

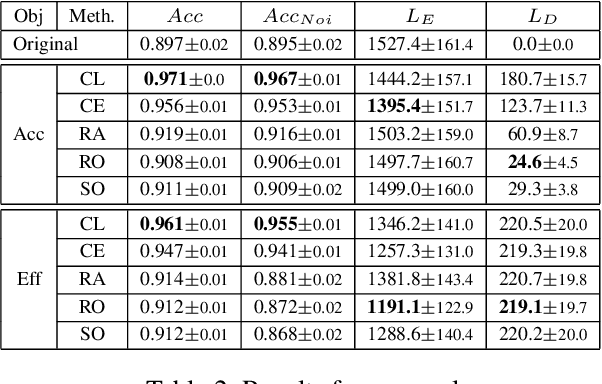

Abstract:Humans rely more and more on systems with AI components. The AI community typically treats human inputs as a given and optimizes AI models only. This thinking is one-sided and neglects the fact that humans can learn, too. In this work, human inputs are optimized for better interaction with an AI model while keeping the model fixed. The optimized inputs are accompanied by instructions on how to create them. They allow humans to save time and cut down on errors, while keeping required changes to original inputs limited. We propose continuous and discrete optimization methods that modify samples in an iterative fashion. Our quantitative and qualitative evaluation, including a human study on different hand-generated inputs, shows that the generated proposals lead to lower error rates, require less effort to create, and differ only modestly from the original samples.