Jochen Cremer

Residual Power Flow for Neural Solvers

Jan 14, 2026

Abstract: The energy transition challenges operational tasks based on simulations and optimisation. These computations need to be fast and flexible, as the grid is ever-expanding and the uncertainty of renewables requires a flexible operational environment. Learned approximations, proxies or surrogates (we refer to them as Neural Solvers) excel in terms of evaluation speed, but are inflexible with respect to adjusting to changing tasks. Hence, neural solvers are usually applicable only to highly specific tasks, which limits their usefulness in practice; a widely reusable, foundational neural solver is required. Therefore, this work proposes the Residual Power Flow (RPF) formulation. RPF formulates residual functions based on Kirchhoff's laws to quantify the infeasibility of an operating condition. The minimisation of the residuals determines the voltage solution; an additional slack variable is needed to achieve AC-feasibility. RPF forms a natural, foundational subtask of tasks subject to power flow constraints. We propose to learn RPF with neural solvers to exploit their speed. Furthermore, RPF improves learning performance compared to common power flow formulations. To solve operational tasks, we integrate the neural solver in a Predict-then-Optimise (PO) approach to combine speed and flexibility. The case study investigates the IEEE 9-bus system and three tasks (AC Optimal Power Flow (OPF), power flow, and quasi-steady-state power flow) solved by PO. The results demonstrate the accuracy and flexibility of learning with RPF.
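The idea of a residual that quantifies infeasibility, and whose minimisation yields the voltage solution, can be illustrated with a minimal sketch. This is not the paper's RPF formulation: it uses the textbook power-mismatch residual on a hypothetical 2-bus network, omits the additional slack variable the abstract mentions, and replaces the neural solver with a plain Gauss-Newton iteration. The network data and all function names are assumptions made for illustration.

```python
import numpy as np

# Hypothetical 2-bus network: bus 0 is the reference, bus 1 is a load bus.
y_line = 1.0 / (0.01 + 0.1j)  # series admittance of the single line (p.u.)
Y_BUS = np.array([[y_line, -y_line],
                  [-y_line, y_line]])

def injections(v):
    """Complex power injected at every bus for complex voltages v: S = V * conj(Y V)."""
    return v * np.conj(Y_BUS @ v)

def residual(x, s_spec):
    """Active/reactive power mismatch at bus 1 for x = [|V1|, angle1].

    The reference bus is fixed at 1.0 p.u. and zero angle; a zero residual
    means the specified injection at bus 1 is met by the network equations.
    """
    v = np.array([1.0 + 0.0j, x[0] * np.exp(1j * x[1])])
    mismatch = injections(v)[1] - s_spec
    return np.array([mismatch.real, mismatch.imag])

def solve(s_spec, x0=(1.0, 0.0), iters=20, eps=1e-6):
    """Gauss-Newton minimisation of the residual with a finite-difference Jacobian."""
    x = np.array(x0, dtype=float)
    for _ in range(iters):
        r = residual(x, s_spec)
        jac = np.empty((2, 2))
        for k in range(2):
            step = np.zeros(2)
            step[k] = eps
            jac[:, k] = (residual(x + step, s_spec) - r) / eps
        x = x - np.linalg.lstsq(jac, r, rcond=None)[0]
    return x

# Build a consistent test case from a known voltage, then recover it.
x_true = np.array([0.95, -0.05])
v_true = np.array([1.0 + 0.0j, x_true[0] * np.exp(1j * x_true[1])])
s_spec = injections(v_true)[1]

x_flat = np.array([1.0, 0.0])  # flat start: infeasible for this load
x_hat = solve(s_spec)          # voltage magnitude and angle that zero the residual
```

Here `np.linalg.norm(residual(x_flat, s_spec))` measures how far the flat start is from satisfying the power balance, while the same norm at `x_hat` is close to zero. In the setting the abstract describes, a learned model would take the place of `solve`.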

Learning a Reward Function for User-Preferred Appliance Scheduling

Oct 11, 2023

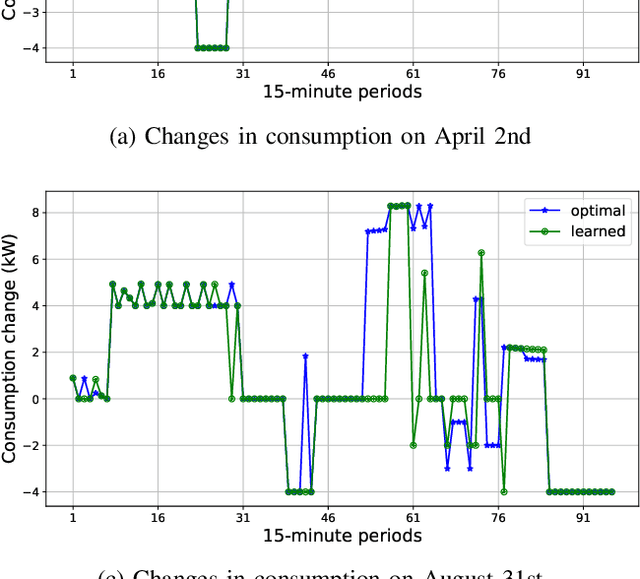

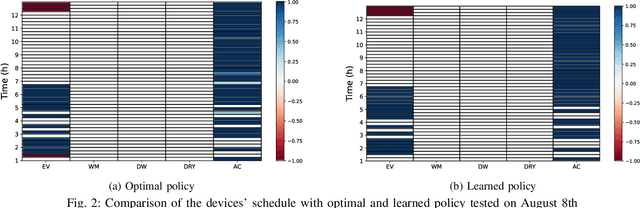

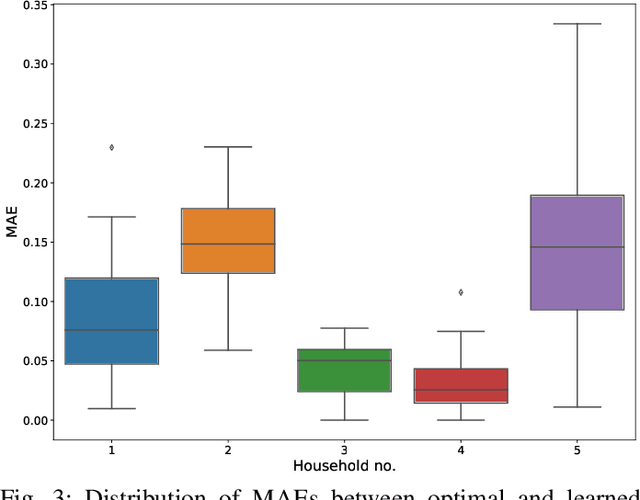

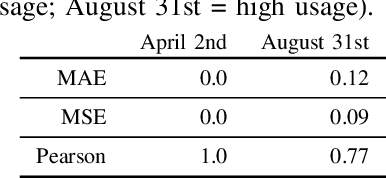

Abstract: Accelerated development of demand response service provision by the residential sector is crucial for reducing carbon emissions in the power sector. Along with infrastructure advancement, encouraging end users to participate is essential. End users highly value their privacy and control, and want to be included in the service design and decision-making process when their daily appliance operation schedules are created. Furthermore, unless they are financially or environmentally motivated, they are generally not prepared to sacrifice their comfort to help balance the power system. In this paper, we present an inverse-reinforcement-learning-based model that helps create end users' daily appliance schedules without asking them to explicitly state their needs and wishes. Through their past consumption data, end consumers implicitly participate in the creation of those decisions and will thus be motivated to continue participating in the provision of demand response services.
