Joachim Meyer

Finding Patterns in Visualized Data by Adding Redundant Visual Information

May 27, 2022

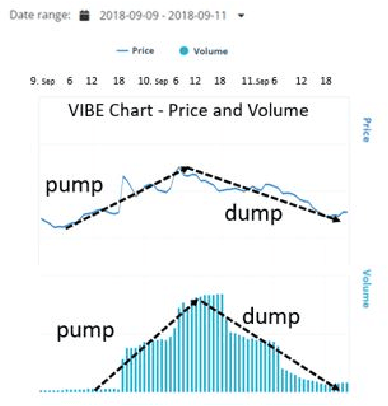

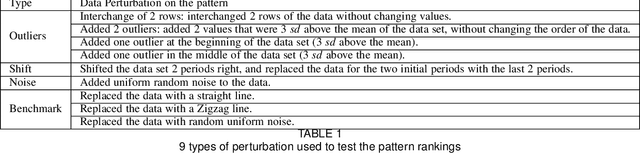

Abstract: We present "PATRED", a technique that adds redundant information to facilitate the detection of specific, generally described patterns in line charts during visual exploration. We compared different versions of this technique, which differed in how redundancy was added, using nine distance metrics (such as Euclidean, Pearson, Mutual Information and Jaccard), with judgments from data scientists serving as the "ground truth". Results were analyzed with correlations (R²), F1 scores and Mutual Information against the average ranking by the data scientists. Some distance metrics consistently benefit from the addition of redundant information, while others are only enhanced for specific types of data perturbations. The results demonstrate the value of adding redundancy to improve the identification of patterns in time-series data during visual exploration.
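As a rough illustration of what comparing distance metrics can look like, the sketch below ranks candidate series by their distance to a target pattern using two of the metrics named in the abstract, Euclidean and Pearson. It does not implement PATRED or its redundancy step; the function names, the `1 - r` form of the Pearson distance, and the sample data are all assumptions made for this example.

```python
import math

def euclidean_distance(a, b):
    """Straight-line distance between two equal-length series."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def pearson_distance(a, b):
    """1 - Pearson correlation: 0 for identical shape, 2 for opposite shape.

    Assumes neither series is constant (non-zero variance).
    """
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return 1.0 - cov / math.sqrt(var_a * var_b)

def rank_by_distance(pattern, candidates, metric):
    """Return candidate indices ordered from closest to farthest."""
    distances = [metric(pattern, c) for c in candidates]
    return sorted(range(len(candidates)), key=lambda i: distances[i])

# Hypothetical data: a rising pattern and three candidate series.
pattern = [0.0, 1.0, 2.0, 3.0]
candidates = [
    [3.0, 2.0, 1.0, 0.0],      # same values, reversed trend
    [0.0, 1.0, 2.0, 3.5],      # nearly identical
    [10.0, 11.0, 12.0, 13.0],  # same shape, shifted upward
]

euclidean_ranking = rank_by_distance(pattern, candidates, euclidean_distance)
pearson_ranking = rank_by_distance(pattern, candidates, pearson_distance)
print("Euclidean ranking:", euclidean_ranking)
print("Pearson ranking:", pearson_ranking)
```

The two metrics order the same candidates differently: Euclidean distance penalizes the vertical shift, while the Pearson-based distance ignores it and penalizes the reversed trend instead. Disagreements of this kind are one reason rankings from each metric would need to be checked against human judgments.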

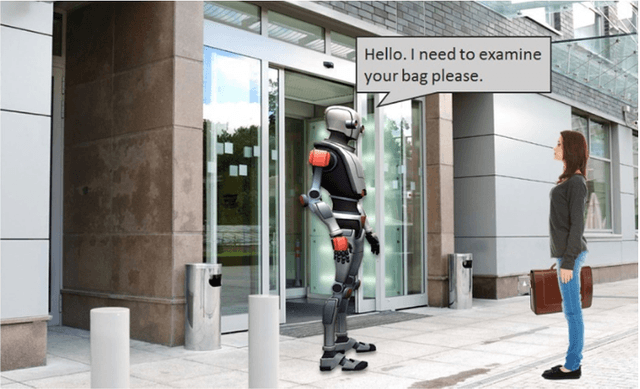

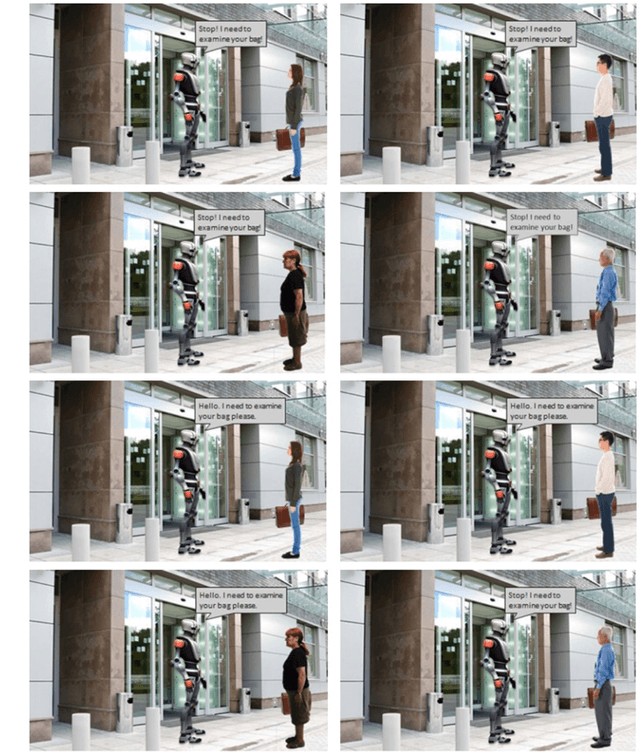

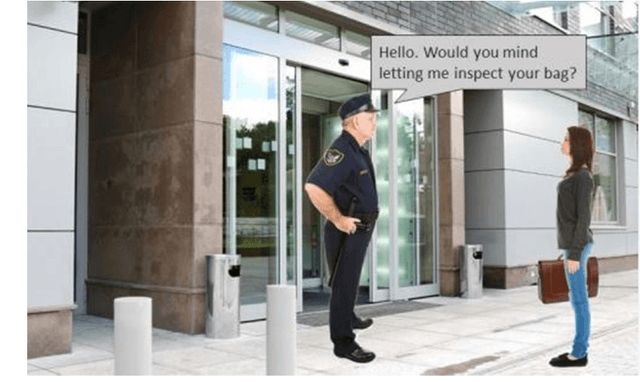

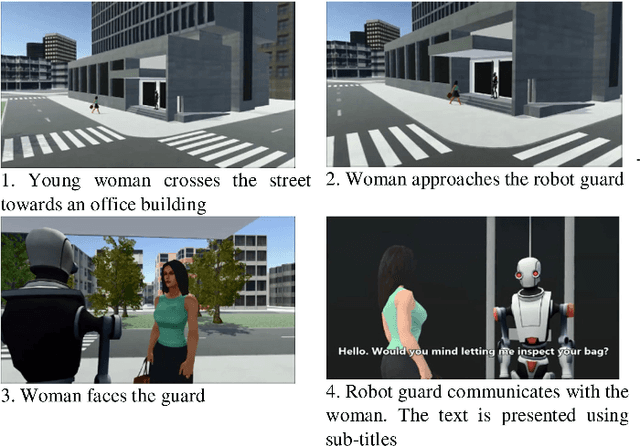

Politeness Counts: Perceptions of Peacekeeping Robots

May 19, 2022

Abstract: The 'intuitive' trust people feel when encountering robots in public spaces is a key determinant of their willingness to cooperate with these robots. We conducted four experiments to study this topic in the context of peacekeeping robots. Participants viewed scenarios, presented as static images or animations, involving a robot or a human guard performing an access-control task. The guards interacted more or less politely with younger and older male and female people. Our results show strong effects of the guard's politeness. Age and sex of the people interacting with the guard had no significant effect on participants' impressions of its attributes. There were no differences between responses to robot and human guards. This study advances the notion that politeness is a crucial determinant of people's perception of peacekeeping robots.

Visual Analytics and Human Involvement in Machine Learning

May 12, 2020

Abstract: Rapidly developing AI systems and applications still require human involvement in practically all parts of the analytics process. Human decisions are largely based on visualizations, which give data scientists details of data properties and the results of analytical procedures. Different visualizations are used in the different steps of the Machine Learning (ML) process. The choice of visualization depends on factors such as the data domain, the data model and the step in the ML process. In this chapter, we describe the seven steps in the ML process and review visualization techniques that are relevant to each step for different types of data, models and purposes.

The Responsibility Quantification (ResQu) Model of Human Interaction with Automation

Oct 30, 2018

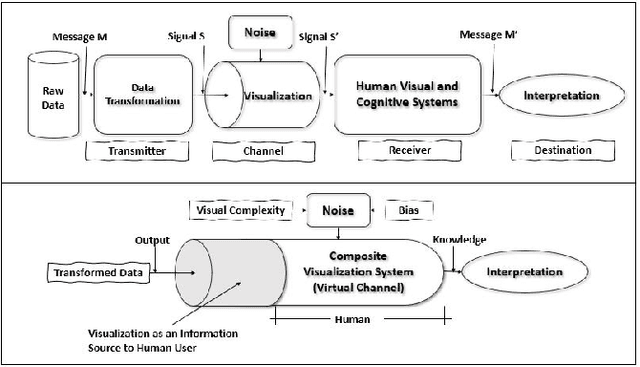

Abstract: Advanced automation is involved in information collection and evaluation, in decision-making and in the implementation of chosen actions. In such systems, human responsibility becomes equivocal, and there may exist a responsibility gap. Understanding human responsibility is particularly important when systems can harm people, as with autonomous vehicles or, most notably, with Autonomous Weapon Systems (AWS). Using Information Theory, we develop a responsibility quantification (ResQu) model of human interaction in automated systems and demonstrate its applications on decisions involving AWS. The analysis reveals that human comparative responsibility is often low, even when major functions are allocated to the human. Thus, broadly stated policies of keeping humans in the loop and having meaningful human control are misleading and cannot truly direct decisions on how to involve humans in advanced automation. Our responsibility model can guide system design decisions and can aid policy and legal decisions regarding human responsibility in highly automated systems.
