Jiaxuan Zhang

PITE: Multi-Prototype Alignment for Individual Treatment Effect Estimation

Nov 13, 2025

Abstract: Estimating Individual Treatment Effects (ITE) from observational data is challenging due to confounding bias. Most studies tackle this bias by balancing distributions globally, but ignore individual heterogeneity and fail to capture the local structure that represents the natural clustering among individuals, which ultimately compromises ITE estimation. While instance-level alignment methods consider heterogeneity, they similarly overlook local structure information. To address these issues, we propose an end-to-end Multi-Prototype alignment method for ITE estimation (PITE). PITE captures local structure within groups and enforces cross-group alignment, thereby achieving robust ITE estimation. Specifically, we first define prototypes as cluster centroids based on similar individuals under the same treatment. To capture local similarity and distribution consistency, we perform instance-to-prototype matching to assign individuals to the nearest prototype within groups, and design a multi-prototype alignment strategy to encourage the matched prototypes to be close across treatment arms in the latent space. PITE not only reduces distribution shift through fine-grained, prototype-level alignment, but also preserves the local structures of treated and control groups, which provides meaningful constraints for ITE estimation. Extensive evaluations on benchmark datasets demonstrate that PITE outperforms 13 state-of-the-art methods, achieving more accurate and robust ITE estimation.
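The two steps named in the abstract above, instance-to-prototype matching within a treatment group and alignment of matched prototypes across arms, can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function names `kmeans_prototypes` and `prototype_alignment_loss`, the use of plain k-means to obtain centroids, nearest-neighbour matching between arms, and the squared Euclidean distance are all assumptions made for illustration. The abstract does not specify these details.

```python
import numpy as np


def kmeans_prototypes(z, k, n_iter=20, seed=0):
    """Hypothetical helper: k-means centroids as prototypes for one treatment group.

    z: (n, d) latent representations of individuals under the same treatment.
    Returns (prototypes of shape (k, d), nearest-prototype index per individual).
    """
    rng = np.random.default_rng(seed)
    # Initialise prototypes from k distinct individuals.
    centers = z[rng.choice(len(z), size=k, replace=False)].copy()
    for _ in range(n_iter):
        # Squared distances from every individual to every prototype.
        d = ((z[:, None, :] - centers[None, :, :]) ** 2).sum(-1)
        assign = d.argmin(1)
        for j in range(k):
            members = z[assign == j]
            if len(members) > 0:  # keep the old centre if a cluster is empty
                centers[j] = members.mean(0)
    # Final instance-to-prototype matching against the updated centroids.
    d = ((z[:, None, :] - centers[None, :, :]) ** 2).sum(-1)
    return centers, d.argmin(1)


def prototype_alignment_loss(protos_t, protos_c):
    """Hypothetical loss: match each treated prototype to its nearest control
    prototype and average the squared distances of the matched pairs."""
    d = ((protos_t[:, None, :] - protos_c[None, :, :]) ** 2).sum(-1)
    match = d.argmin(1)
    loss = d[np.arange(len(protos_t)), match].mean()
    return loss, match


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    z_treated = rng.normal(loc=0.5, size=(200, 8))  # synthetic latent codes
    z_control = rng.normal(loc=0.0, size=(300, 8))
    p_t, _ = kmeans_prototypes(z_treated, k=5)
    p_c, _ = kmeans_prototypes(z_control, k=5)
    loss, match = prototype_alignment_loss(p_t, p_c)
    print("alignment loss:", loss, "matches:", match)
```

In an end-to-end setting, a term like this would presumably be added to the outcome-prediction loss and minimised with respect to the encoder that produces the latent codes; how PITE combines the terms is not stated in the abstract.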

A Bidirectional Conversion Network for Cross-Spectral Face Recognition

May 03, 2022

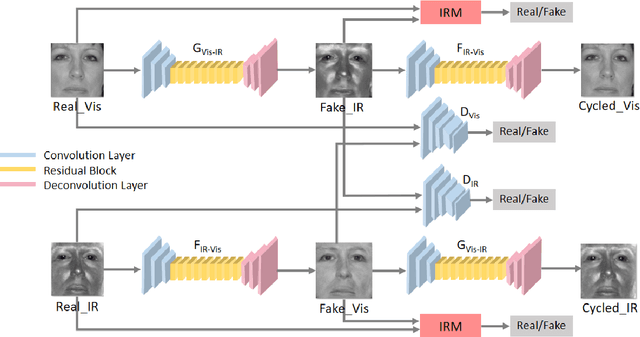

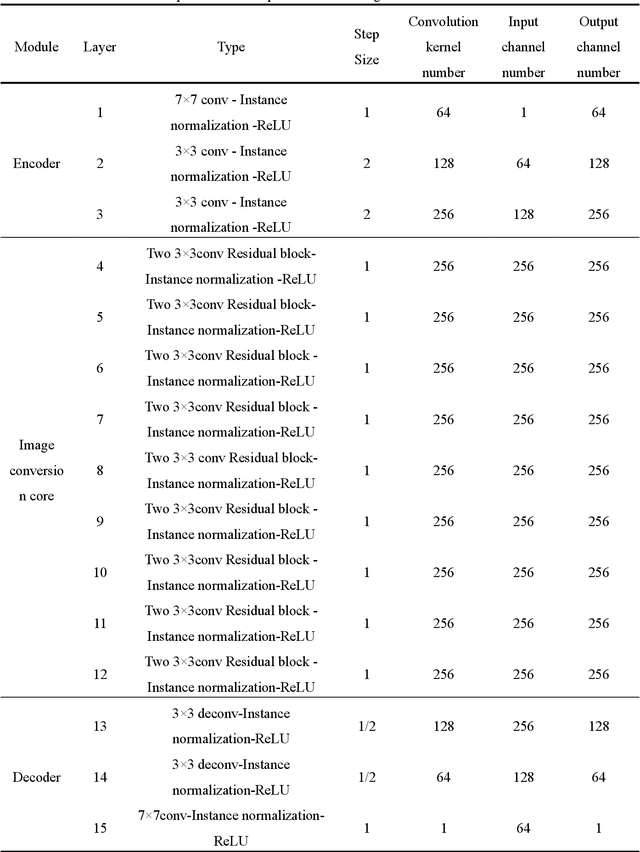

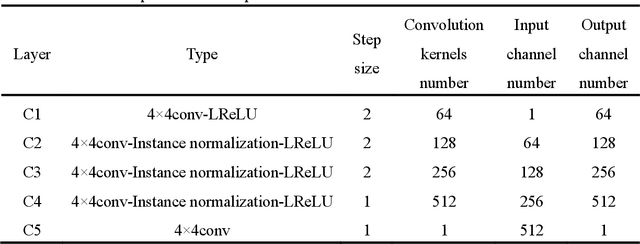

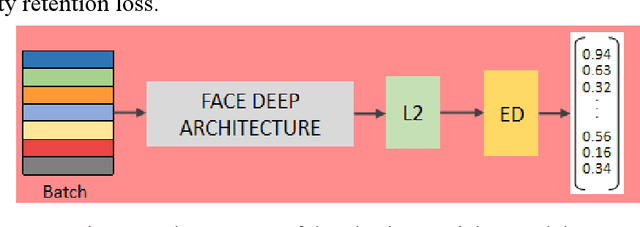

Abstract: Face recognition in the infrared (IR) band has become an important supplement to visible light face recognition due to its independence from background lighting, strong penetration, and ability to image under harsh conditions such as nighttime, rain, and fog. However, cross-spectral face recognition (i.e., VIS to IR) is very challenging due to the dramatic difference between visible light and IR imagery as well as the lack of paired training data. This paper proposes a framework for bidirectional cross-spectral conversion (BCSC-GAN) between heterogeneous face images, and designs an adaptive weighted fusion mechanism based on information fusion theory. The network reduces the cross-spectral recognition problem to an intra-spectral problem, and improves performance by fusing bidirectional information. Specifically, a face identity retaining module (IRM) is introduced to preserve identity features, and a new composite loss function is designed to overcome the modal differences caused by different spectral characteristics. Experiments were conducted on two datasets, TINDERS and CASIA, comparing FID, recognition rate, equal error rate, and normalized distance. Results show that the proposed network is superior to other state-of-the-art methods. Additionally, the proposed Self Adaptive Weighted Fusion (SAWF) rule yields better recognition results than the unfused case and other commonly used traditional fusion rules, which further supports the effectiveness of the proposed bidirectional conversion approach.
