Jacob Steeves

Incentivizing Permissionless Distributed Learning of LLMs

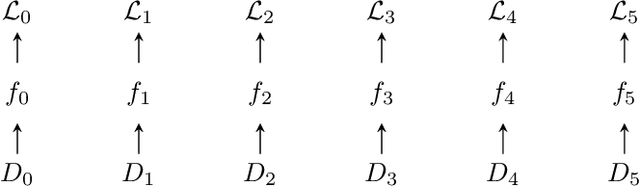

May 27, 2025

Abstract: We describe an incentive system for distributed deep learning of foundational models where peers are rewarded for contributions. The incentive system, Gauntlet, has been deployed on the Bittensor blockchain and used to train a 1.2B LLM with completely permissionless contributions of pseudo-gradients: no control over the users that can register or their hardware. Gauntlet can be applied to any synchronous distributed training scheme that relies on aggregating updates or pseudo-gradients. We rely on a two-stage mechanism for fast filtering of peer uptime, reliability, and synchronization, combined with the core component that estimates the loss before and after individual pseudo-gradient contributions. We use an OpenSkill rating system to track the competitiveness of pseudo-gradient scores across time. Finally, we introduce a novel mechanism to ensure peers on the network perform unique computations. Our live 1.2B run, which has paid out real-valued tokens to participants based on the value of their contributions, yielded a competitive (on a per-iteration basis) 1.2B model that demonstrates the utility of our incentive system.

BitTensor: An Intermodel Intelligence Measure

Mar 09, 2020

Abstract: A purely inter-model version of a machine intelligence benchmark would allow us to measure intelligence directly as information without projecting that information onto labeled datasets. We propose a framework in which other learners measure the informational significance of their peers across a network and use a digital ledger to negotiate the scores. However, the main benefits of measuring intelligence with other learners are lost if the underlying scores are dishonest. As a solution, we show how competition for connectivity in the network can be used to force honest bidding. We first prove that selecting inter-model scores using gradient descent is a regret-free strategy: one which generates the best subjective outcome regardless of the behavior of others. We then empirically show that when nodes apply this strategy, the network converges to a ranking that correlates with the one found in a fully coordinated and centralized setting. The result is a fair mechanism for training an internet-wide, decentralized and incentivized machine learning system, one which produces a continually hardening and expanding benchmark at the generalized intersection of the participants.
