Jacob L Jaremko

Unsupervised multi-latent space reinforcement learning framework for video summarization in ultrasound imaging

Sep 03, 2021

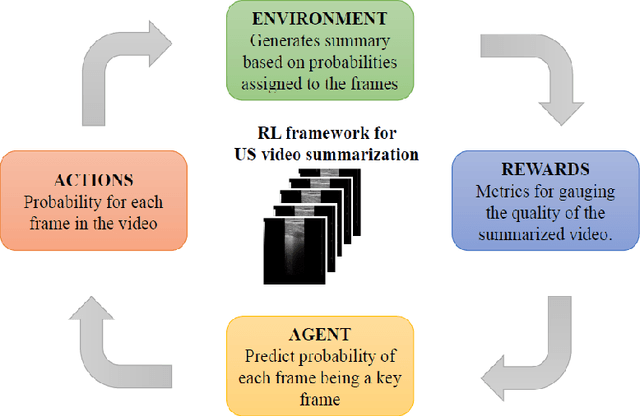

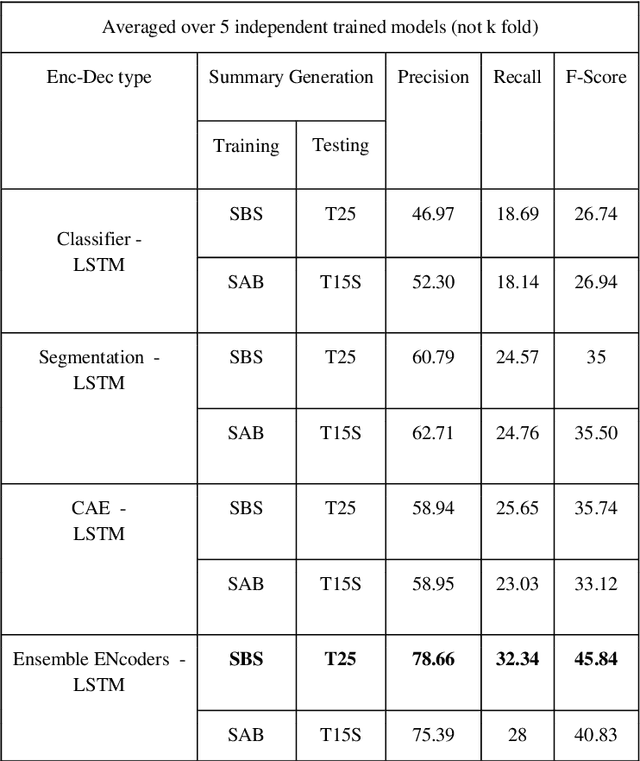

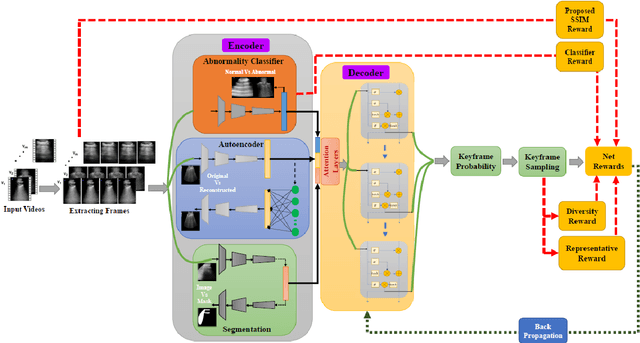

Abstract: The COVID-19 pandemic has highlighted the need for a tool to speed up triage in ultrasound scans and provide clinicians with fast access to relevant information. The proposed video-summarization technique is a step in this direction: it gives clinicians access to relevant key-frames from a given ultrasound scan (such as lung ultrasound) while reducing resource, storage and bandwidth requirements. We propose a new unsupervised reinforcement learning (RL) framework with novel rewards for summarizing ultrasound videos. Because it learns without supervision, it avoids tedious and impractical manual labelling, which enhances its utility as a triage tool in the emergency department (ED) and for use in telemedicine. Using an attention ensemble of encoders, the high-dimensional image is projected into a low-dimensional latent space in terms of: a) reduced distance to a normal or abnormal class (classifier encoder), b) following a topology of landmarks (segmentation encoder), and c) a distance- or topology-agnostic latent representation (convolutional autoencoders). The decoder is implemented using a bi-directional long short-term memory (Bi-LSTM) network, which uses the latent space representation from the encoder. Our new paradigm for video summarization is capable of delivering classification labels and segmentation of key landmarks for each of the summarized keyframes. Validation is performed on a lung ultrasound (LUS) dataset that represents potential use cases in telemedicine and ED triage, acquired from different medical centers across geographies (India, Spain and Canada).
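The abstract above describes an attention ensemble that combines latent representations from three encoders. As a rough illustration only, the sketch below fuses three per-frame latent vectors with softmax attention weights. The abstract does not specify the attention mechanism, so the scaled dot-product scoring, the `query` vector, the latent size `d`, and the function name `fuse_latents` are all assumptions made for this example, and random vectors stand in for real encoder outputs.

```python
import numpy as np

def softmax(x):
    # Numerically stable softmax over a 1-D array.
    x = x - np.max(x)
    e = np.exp(x)
    return e / e.sum()

def fuse_latents(latents, query):
    """Fuse encoder latents with attention weights (hypothetical scheme).

    latents: array of shape (n_encoders, d), one latent vector per encoder.
    query:   array of shape (d,), an assumed learned attention vector.
    Returns the fused latent of shape (d,) and the weights of shape (n_encoders,).
    """
    # Scaled dot-product score for each encoder's latent (an assumption,
    # not the scoring rule from the paper).
    scores = latents @ query / np.sqrt(latents.shape[1])
    weights = softmax(scores)
    # Weighted sum of the encoder latents.
    fused = weights @ latents
    return fused, weights

rng = np.random.default_rng(0)
d = 8  # assumed latent dimension, chosen only for illustration

# Random stand-ins for the classifier, segmentation and autoencoder latents.
z_cls = rng.normal(size=d)
z_seg = rng.normal(size=d)
z_ae = rng.normal(size=d)

latents = np.stack([z_cls, z_seg, z_ae])
query = rng.normal(size=d)

fused, weights = fuse_latents(latents, query)
print("attention weights:", weights)
print("fused latent shape:", fused.shape)
```

In a pipeline like the one described, a fused vector of this kind would be computed per frame and the resulting sequence passed to the Bi-LSTM decoder; that wiring is not shown here.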

Learning the Imaging Landmarks: Unsupervised Key point Detection in Lung Ultrasound Videos

Jun 13, 2021

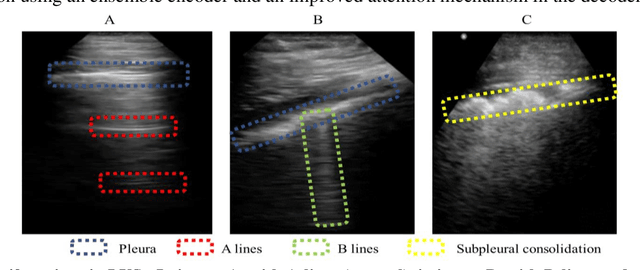

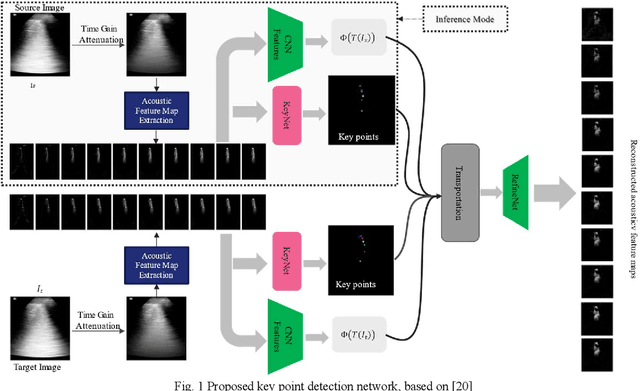

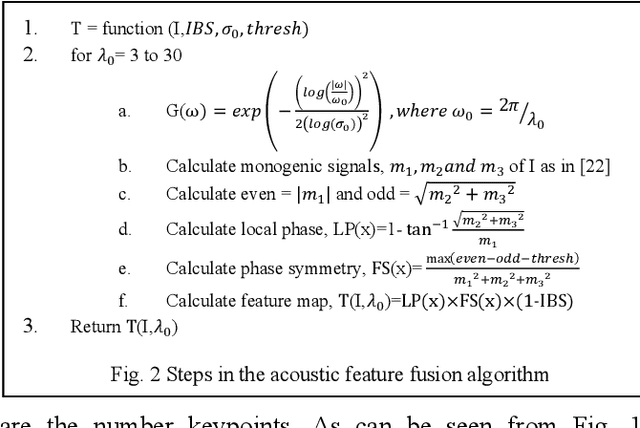

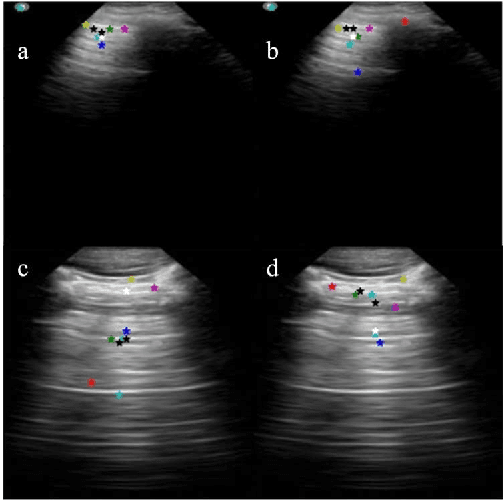

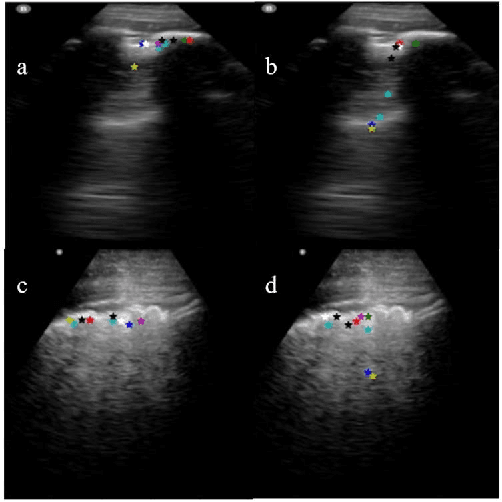

Abstract: Lung ultrasound (LUS) is an increasingly popular diagnostic imaging modality for continuous and periodic monitoring of lung infection, given its advantages of non-invasiveness, non-ionizing nature, portability and easy disinfection. The major landmarks assessed by clinicians for triaging using LUS are the pleura, A-lines and B-lines. There have been many efforts towards the automatic detection of these landmarks. However, restricting detection to a few pre-defined landmarks may not reveal the actual imaging biomarkers, particularly in the case of new pathologies like COVID-19. Rather, the identification of key landmarks should be driven by data, given the availability of a plethora of neural network algorithms. This work is a first-of-its-kind attempt towards unsupervised detection of the key LUS landmarks in LUS videos of COVID-19 subjects during various stages of infection. We adapted the relatively new approach of transporter neural networks to automatically mark and track the pleura, A-lines and B-lines based on their periodic motion and relatively stable appearance in the videos. Initial results on unsupervised pleura detection show an accuracy of 91.8% on 1081 LUS video frames.

A systematic review on the role of artificial intelligence in sonographic diagnosis of thyroid cancer: Past, present and future

Jun 10, 2020

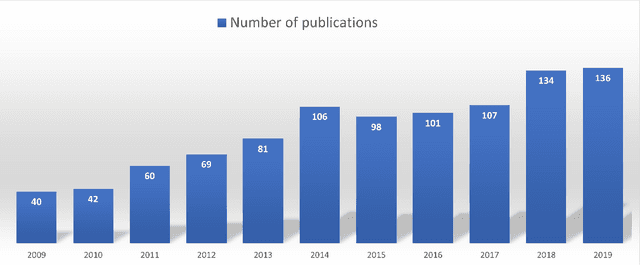

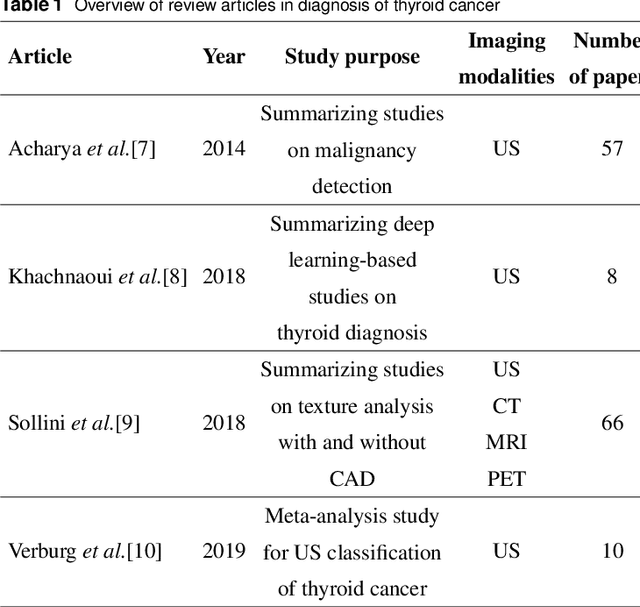

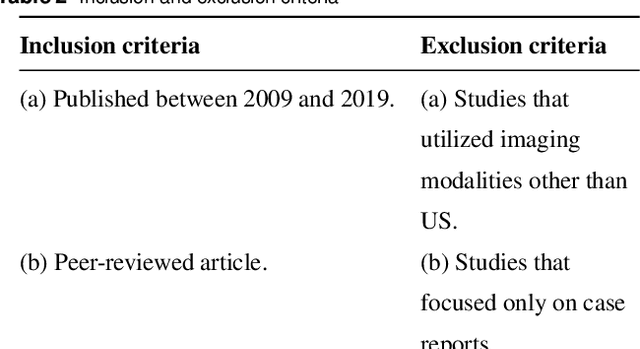

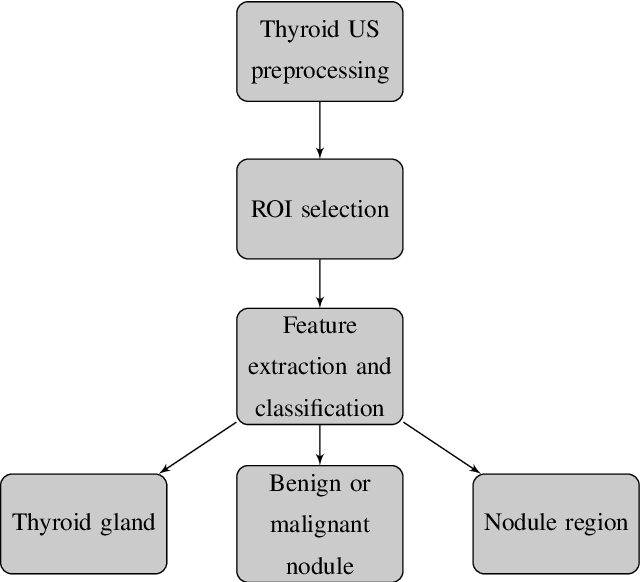

Abstract: Thyroid cancer is common worldwide, with a rapid increase in prevalence across North America in recent years. While most patients present with palpable nodules found through physical examination, a large number of small and medium-sized nodules are detected by ultrasound examination. Suspicious nodules are then sent for biopsy through fine needle aspiration. Since biopsies are invasive and sometimes inconclusive, various research groups have tried to develop computer-aided diagnosis systems. Earlier approaches along these lines relied on clinically relevant features that were manually identified by radiologists. With the recent success of artificial intelligence (AI), various new methods are being developed to identify these features in thyroid ultrasound automatically. In this paper, we present a systematic review of the state of the art in AI applications for the sonographic diagnosis of thyroid cancer. This review follows a methodology-based classification of the different techniques available for thyroid cancer diagnosis. With more than 50 papers included in this review, we reflect on the trends and challenges in the field of sonographic diagnosis of thyroid malignancies and on the potential of computer-aided diagnosis to increase the impact of ultrasound applications on the future of thyroid cancer diagnosis. Machine learning will continue to play a fundamental role in the development of future thyroid cancer diagnosis frameworks.
