Iyad Dayoub

Deep Reinforcement Learning for Joint Time and Power Management in SWIPT-EH CIoT

Dec 17, 2025

Abstract: This letter presents a novel deep reinforcement learning (DRL) approach for joint time allocation and power control in a cognitive Internet of Things (CIoT) system with simultaneous wireless information and power transfer (SWIPT). The CIoT transmitter autonomously manages energy harvesting (EH) and transmissions using a learnable time switching factor while optimizing power to enhance throughput and lifetime. The joint optimization is modeled as a Markov decision process under small-scale fading, realistic EH, and interference constraints. We develop a double deep Q-network (DDQN) enhanced with an upper confidence bound. Simulations benchmark our approach, showing superior performance over existing DRL methods.

* Published in IEEE Communications Letters, 2025. This arXiv version is the authors' accepted manuscript.
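The abstract names the agent's two main ingredients but not its exact update rule. As a rough illustration only, the sketch below shows the standard double DQN target, where the online network selects the next action and the target network evaluates it, together with a UCB-style bonus used for action selection in place of ε-greedy. The discrete action grid pairing a time-switching factor with a transmit power level, the function names, and every numeric value are hypothetical and not taken from the paper.

```python
import numpy as np

# Hypothetical discrete action grid: each action pairs a time-switching
# factor (fraction of the slot spent harvesting energy) with a transmit
# power level. These values are illustrative, not taken from the paper.
ALPHAS = np.array([0.2, 0.4, 0.6, 0.8])
POWERS_DBM = np.array([0.0, 5.0, 10.0])
N_ACTIONS = len(ALPHAS) * len(POWERS_DBM)


def decode_action(idx):
    """Map a flat action index to (time-switching factor, power in dBm)."""
    return float(ALPHAS[idx // len(POWERS_DBM)]), float(POWERS_DBM[idx % len(POWERS_DBM)])


def ddqn_target(reward, q_next_online, q_next_target, gamma, done):
    """Double DQN target: the online network selects the next action and
    the target network evaluates it, which reduces overestimation bias."""
    a_star = int(np.argmax(q_next_online))
    bootstrap = 0.0 if done else gamma * float(q_next_target[a_star])
    return reward + bootstrap


def ucb_action(q_values, counts, t, c=2.0):
    """UCB-style selection: argmax_a Q(s, a) + c * sqrt(ln t / N(a)).
    Untried actions (N(a) = 0) are taken first; t is the step count (t >= 1)."""
    counts = np.asarray(counts, dtype=float)
    untried = np.flatnonzero(counts == 0)
    if untried.size > 0:
        return int(untried[0])
    bonus = c * np.sqrt(np.log(t) / counts)
    return int(np.argmax(np.asarray(q_values, dtype=float) + bonus))


# Usage with made-up Q-values for a 3-action slice of the grid:
q_online = np.array([0.5, 2.0, 1.0])
q_target = np.array([3.0, 0.75, 5.0])
y = ddqn_target(1.0, q_online, q_target, gamma=0.5, done=False)
print(y)  # 1.375: online net picks action 1, target net scores it 0.75
print(decode_action(5))  # (0.4, 10.0)
```

A plain DQN target would instead bootstrap from the target network's maximum (5.0 here, giving 3.5), which is the overestimation that the double estimator is meant to curb.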

A Survey of Applied Machine Learning Techniques for Optical OFDM based Networks

May 07, 2021

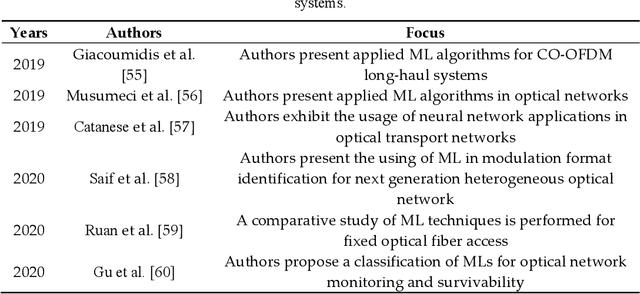

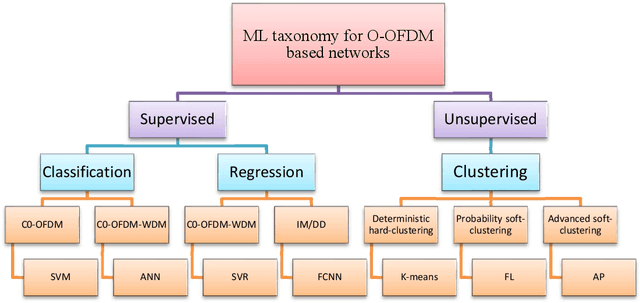

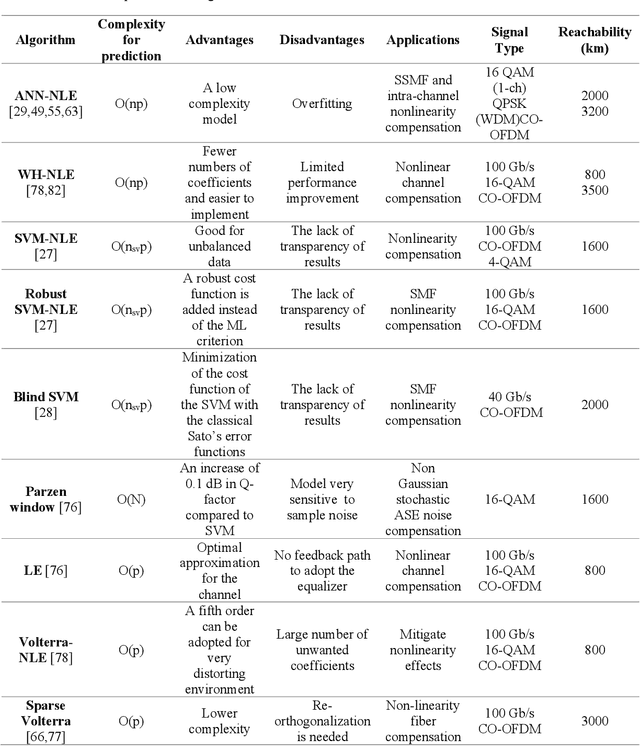

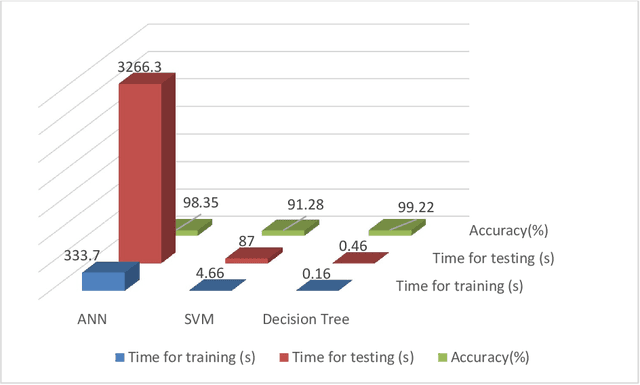

Abstract: In this survey, we analyze the latest machine learning (ML) techniques for optical orthogonal frequency division multiplexing (O-OFDM)-based optical communications. ML has been proposed to mitigate channel and transceiver imperfections. For instance, ML can improve the signal quality under a low modulation extinction ratio, or can tackle both deterministic and stochastically induced nonlinearities, such as parametric noise amplification in long-haul transmission. In particular, the proposed ML algorithms for O-OFDM can tackle inter-subcarrier nonlinear effects such as four-wave mixing and cross-phase modulation. In essence, these ML techniques could be beneficial for any multi-carrier approach (e.g., filter bank modulation). Supervised and unsupervised ML techniques are analyzed in terms of both O-OFDM transmission performance and computational complexity for potential real-time implementation. We indicate the strict conditions under which an ML algorithm should perform classification, regression, or clustering. The survey also discusses open research issues and future directions towards ML implementation.
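The abstract lists clustering among the ML tasks. As an illustration under our own assumptions, not an algorithm taken from the survey, the sketch below runs k-means on the demodulated symbols of a single subcarrier so that the decision regions follow a distorted constellation instead of the fixed ideal grid. The function name `kmeans_demap`, the QPSK format, the 0.6 rad rotation standing in for a nonlinear phase shift, and the noise level are all hypothetical choices.

```python
import numpy as np


def kmeans_demap(rx, init_centroids, n_iter=20):
    """Lloyd's k-means on complex received symbols.

    Centroids start at the ideal constellation points, so cluster k keeps
    the label of ideal symbol k and no relabeling step is needed."""
    centroids = np.asarray(init_centroids, dtype=complex).copy()
    for _ in range(n_iter):
        # Assignment step: nearest centroid in the complex plane.
        labels = np.argmin(np.abs(rx[:, None] - centroids[None, :]), axis=1)
        # Update step: move each centroid to the mean of its members.
        for k in range(len(centroids)):
            members = rx[labels == k]
            if members.size:
                centroids[k] = members.mean()
    labels = np.argmin(np.abs(rx[:, None] - centroids[None, :]), axis=1)
    return labels, centroids


rng = np.random.default_rng(0)
ideal = np.exp(1j * (np.pi / 4 + np.pi / 2 * np.arange(4)))  # QPSK points
tx = rng.integers(0, 4, size=2000)
# Hypothetical impairment: a fixed 0.6 rad phase rotation (a crude stand-in
# for nonlinear phase distortion) plus additive Gaussian noise.
noise = 0.1 * (rng.standard_normal(2000) + 1j * rng.standard_normal(2000))
rx = ideal[tx] * np.exp(1j * 0.6) + noise

naive = np.argmin(np.abs(rx[:, None] - ideal[None, :]), axis=1)  # fixed decision regions
labels, centroids = kmeans_demap(rx, ideal)
naive_ser = np.mean(naive != tx)
kmeans_ser = np.mean(labels != tx)
print(f"fixed-grid SER: {naive_ser:.4f}, k-means SER: {kmeans_ser:.4f}")
```

Initializing the centroids at the ideal points avoids the label-permutation ambiguity of plain k-means; it only works while the distortion leaves each received cluster closer to its own ideal point than to any other.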
