Ioannis Fudos

Deep and Fast Approximate Order Independent Transparency

May 17, 2023

Abstract: We present a machine learning approach for efficiently computing order independent transparency (OIT). Our method is fast, requires a small, constant amount of memory (dependent only on the screen resolution, not on the number of triangles or transparent layers), is more accurate than previous approximate methods, works for every scene without setup, and is portable to all platforms, running even on commodity GPUs. Our method requires a rendering pass to extract all features, which are subsequently used to predict the overall OIT pixel color with a pre-trained neural network. We provide a comparative experimental evaluation and shader source code for all methods so that the experiments can be reproduced.
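
For context, the exact result that approximate OIT methods aim to match can be obtained by sorting the transparent fragments of a pixel by depth and blending them back to front. The sketch below shows only that sorted reference, not the neural method described in the abstract; the fragment layout `(depth, rgb, alpha)` and the function name are assumptions made for illustration.

```python
def composite_sorted(fragments, background=(0.0, 0.0, 0.0)):
    """Exact transparency reference: sort by depth, then blend back to front.

    Each fragment is a hypothetical (depth, (r, g, b), alpha) tuple, where a
    larger depth means farther from the camera.
    """
    color = background
    # Farthest fragment first, so nearer fragments are blended on top.
    for _depth, rgb, alpha in sorted(fragments, key=lambda f: f[0], reverse=True):
        color = tuple(alpha * src + (1.0 - alpha) * dst
                      for src, dst in zip(rgb, color))
    return color


# Two half-transparent fragments covering the same pixel (illustrative values).
red_far = (2.0, (1.0, 0.0, 0.0), 0.5)
blue_near = (1.0, (0.0, 0.0, 1.0), 0.5)

# Submission order does not matter because the fragments are sorted first.
result_a = composite_sorted([red_far, blue_near])
result_b = composite_sorted([blue_near, red_far])
print(result_a, result_b)
```

Storing and sorting every fragment per pixel is what makes the exact approach memory-hungry as the number of layers grows, which is the cost that approximate methods try to avoid.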

Temporal Parameter-free Deep Skinning of Animated Meshes

Sep 15, 2021

Abstract: In computer graphics, animation compression is essential for efficient storage, streaming and reproduction of animated meshes. Previous work has presented efficient compression techniques that derive skinning transformations and weights by clustering vertices based on their geometric features over time. In this work we present a novel approach that assigns vertices to bone-influenced clusters and derives weights using deep learning, through a training set that consists of pairs of vertex trajectories (temporal vertex sequences) and the corresponding weights drawn from fully rigged animated characters. The approximation error of the resulting linear blend skinning scheme is significantly lower than that of competing previous methods, while at the same time producing a minimal number of bones. Furthermore, the optimal set of transformations and vertices is derived in fewer iterations due to the better initial positioning in the multidimensional variable space. Our method requires no parameters to be determined or tuned by the user during the entire process of compressing a mesh animation sequence.
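
As a reminder of what a linear blend skinning scheme computes, each deformed vertex is the weighted sum of the rest-pose vertex transformed by every bone that influences it. The following is a minimal sketch of that standard formula only, not of the learning method in the abstract; the 3x4 matrix layout, the function names, and the sample values are assumptions made for illustration.

```python
def transform_point(matrix, point):
    """Apply a 3x4 affine matrix (linear part | translation) to a 3D point."""
    x, y, z = point
    return tuple(row[0] * x + row[1] * y + row[2] * z + row[3] for row in matrix)


def linear_blend_skinning(rest_vertex, bone_matrices, weights):
    """Blend the per-bone transformed positions using the skinning weights."""
    if len(bone_matrices) != len(weights):
        raise ValueError("one weight is required per bone matrix")
    blended = [0.0, 0.0, 0.0]
    for matrix, weight in zip(bone_matrices, weights):
        moved = transform_point(matrix, rest_vertex)
        for axis in range(3):
            blended[axis] += weight * moved[axis]
    return tuple(blended)


# Hypothetical two-bone setup: one bone stays put, the other shifts x by 2.
IDENTITY = ((1, 0, 0, 0), (0, 1, 0, 0), (0, 0, 1, 0))
SHIFT_X_BY_2 = ((1, 0, 0, 2), (0, 1, 0, 0), (0, 0, 1, 0))

skinned = linear_blend_skinning((1.0, 0.0, 0.0),
                                [IDENTITY, SHIFT_X_BY_2],
                                [0.25, 0.75])
print(skinned)
```

Compressing an animation in this form means storing one set of weights per vertex and one transformation per bone per frame, instead of every vertex position in every frame.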

Deep Tiling: Texture Tile Synthesis Using a Deep Learning Approach

Mar 14, 2021

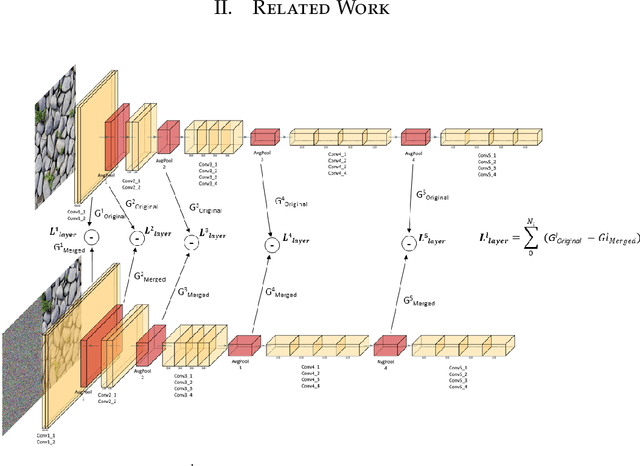

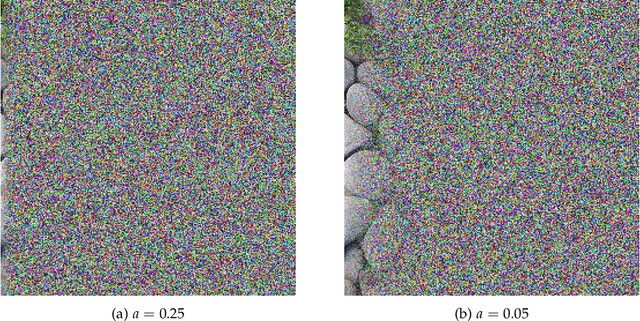

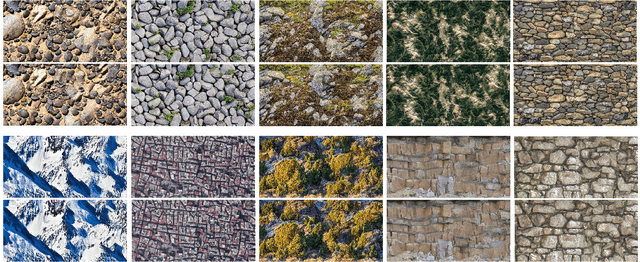

Abstract: Texturing is a fundamental process in computer graphics. Textures are used to enhance the visual result of a 3D scene. In many cases a texture image cannot cover a large 3D model surface because of its low resolution. Conventional techniques like repeating, mirror repeating or clamp to edge do not yield visually acceptable results. Deep learning based texture synthesis has proven to be very effective in such cases. All deep texture synthesis methods that attempt to create higher-resolution textures are limited by GPU memory. In this paper, we propose a novel approach to example-based texture synthesis that uses a robust deep learning process to create tiles of arbitrary resolutions that resemble the structural components of an input texture. As a result, our method is, first, much less memory-limited, because a small new texture tile is synthesized and merged with the original texture, and second, able to easily produce missing parts of a large texture.
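
The three conventional techniques named in the abstract differ only in how an out-of-range texel index is mapped back into the texture. The sketch below illustrates those mappings for integer texel indices along one axis; the function names are hypothetical and the code is independent of the synthesis method described in the abstract.

```python
def wrap_repeat(index, size):
    """Tile the texture: indices wrap around modulo the texture size."""
    return index % size


def wrap_mirrored_repeat(index, size):
    """Tile the texture, flipping every other copy."""
    folded = index % (2 * size)
    return folded if folded < size else 2 * size - 1 - folded


def wrap_clamp_to_edge(index, size):
    """Reuse the border texel for every out-of-range index."""
    return max(0, min(size - 1, index))


# Map indices -2..9 onto a texture that is 4 texels wide (illustrative size).
SIZE = 4
indices = list(range(-2, 10))
repeat_row = [wrap_repeat(i, SIZE) for i in indices]
mirror_row = [wrap_mirrored_repeat(i, SIZE) for i in indices]
clamp_row = [wrap_clamp_to_edge(i, SIZE) for i in indices]

print(repeat_row)
print(mirror_row)
print(clamp_row)
```

All three modes only reuse existing texels, which is why they tend to produce visible repetition, seams, or stretched borders on large surfaces.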
