Inci Ayhan

Predictive Event Segmentation and Representation with Neural Networks: A Self-Supervised Model Assessed by Psychological Experiments

Oct 04, 2022

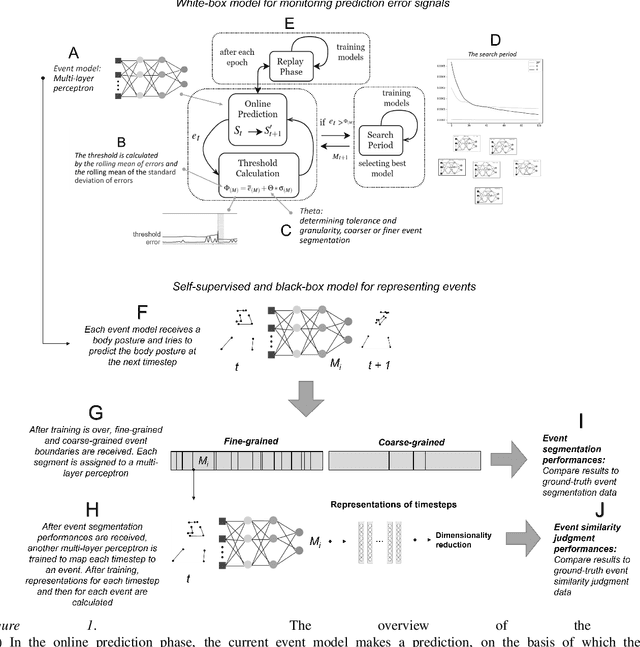

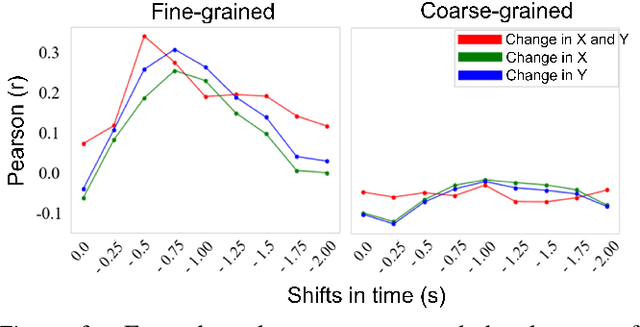

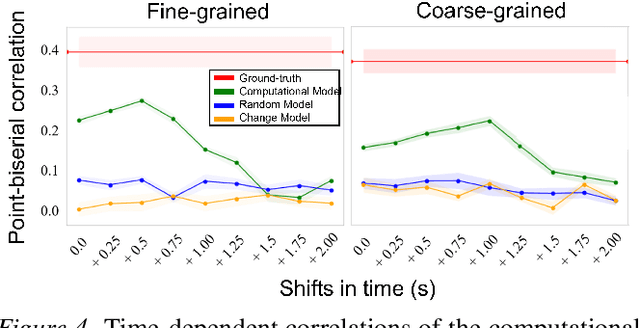

Abstract: People segment complex, ever-changing, and continuous experience into basic, stable, and discrete spatio-temporal units called events. The event segmentation literature investigates the mechanisms that allow people to extract events. Event segmentation theory proposes that people predict ongoing activities and monitor prediction error signals to find the event boundaries that separate events. In this study, we investigated the mechanism giving rise to this ability using a computational model and accompanying psychological experiments. Inspired by event segmentation theory and predictive processing, we introduce a self-supervised model of event segmentation. The model consists of neural networks that predict the sensory signal at the next time-step to represent different events, and a cognitive model that regulates these networks on the basis of their prediction errors. To verify the model's ability to segment events, learn them during passive observation, and represent them in its internal representational space, we prepared a video that depicts human behaviors represented by point-light displays. We compared the event segmentation behavior of participants and our model on this video at two hierarchical event segmentation levels. Using the point-biserial correlation technique, we demonstrated that the event segmentation decisions of our model correlated with the responses of participants. Moreover, by approximating the representation space of participants with a similarity-based technique, we showed that our model formed a representation space similar to that of participants. The results suggest that our model, which tracks prediction error signals, can produce human-like event boundaries and event representations. Finally, we discuss our contribution to the event cognition literature and to our understanding of how event segmentation is implemented in the brain.
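As a rough illustration of two ideas named in the abstract, the sketch below flags event boundaries where a prediction error exceeds a threshold and then scores the agreement between those binary decisions and a continuous participant measure using the point-biserial correlation. This is not the authors' model: the fixed threshold, the function names `boundaries_from_errors` and `point_biserial`, and the toy numbers are all assumptions made for illustration, and the paper's networks and cognitive model are considerably richer than a single threshold.

```python
import math


def boundaries_from_errors(errors, threshold):
    """Mark a time-step as a boundary (1) when its prediction error
    exceeds a fixed threshold (hypothetical simplification)."""
    return [1 if e > threshold else 0 for e in errors]


def point_biserial(binary, continuous):
    """Point-biserial correlation between a 0/1 variable and a
    continuous variable: (M1 - M0) / s_n * sqrt(n1 * n0 / n^2),
    where s_n is the population standard deviation of `continuous`."""
    if len(binary) != len(continuous):
        raise ValueError("inputs must have the same length")
    n = len(binary)
    group1 = [c for b, c in zip(binary, continuous) if b == 1]
    group0 = [c for b, c in zip(binary, continuous) if b == 0]
    if not group1 or not group0:
        raise ValueError("both binary categories must be present")
    mean_all = sum(continuous) / n
    s_n = math.sqrt(sum((c - mean_all) ** 2 for c in continuous) / n)
    if s_n == 0:
        raise ValueError("continuous variable has zero variance")
    m1 = sum(group1) / len(group1)
    m0 = sum(group0) / len(group0)
    return (m1 - m0) / s_n * math.sqrt(len(group1) * len(group0) / n ** 2)


# Toy data (invented): per-time-bin prediction errors of a model and the
# fraction of participants who marked a boundary in the same bin.
model_errors = [0.1, 0.2, 0.9, 0.1, 0.8, 0.2]
participant_agreement = [0.0, 0.1, 0.7, 0.1, 0.6, 0.0]

model_boundaries = boundaries_from_errors(model_errors, threshold=0.5)
r_pb = point_biserial(model_boundaries, participant_agreement)
```

A value of `r_pb` near 1 would indicate that the bins the toy model marks as boundaries are also the bins where participants tended to respond. The point-biserial coefficient is numerically the Pearson correlation computed with one binary variable, which is why it suits a comparison between yes/no boundary decisions and graded response data.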

Time Perception: A Review on Psychological, Computational and Robotic Models

Aug 01, 2020

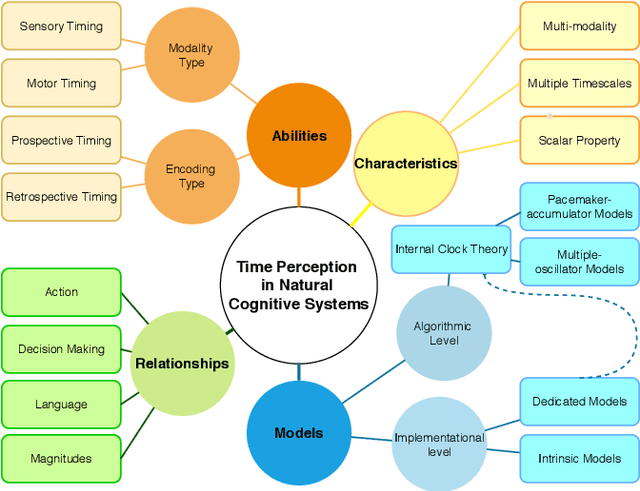

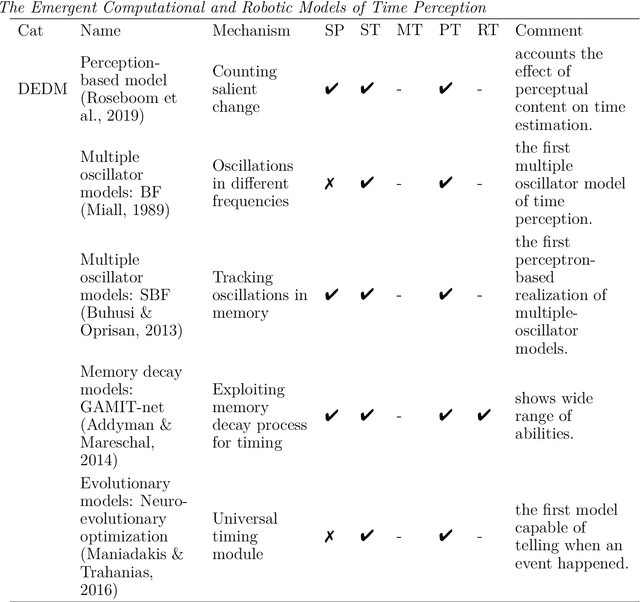

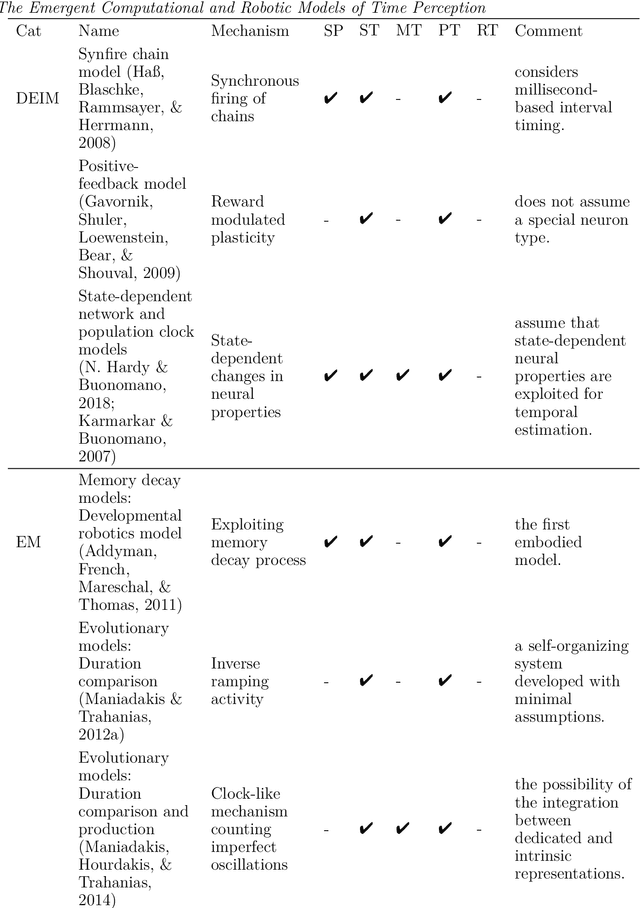

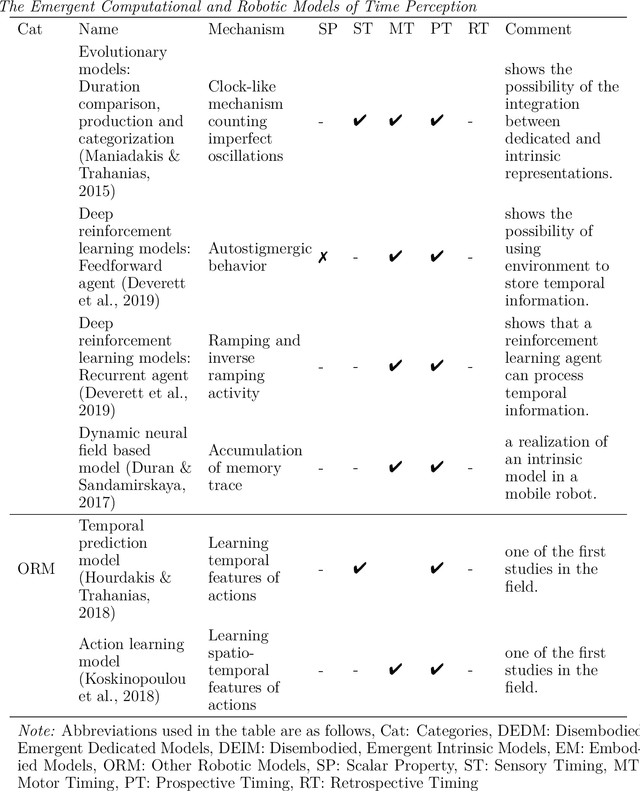

Abstract: Animals exploit time to survive in the world. Temporal information is required for higher-level cognitive abilities such as planning, decision making, communication, and effective cooperation. Since time is an inseparable part of cognition, there is growing interest in artificial intelligence approaches to subjective time, which have the potential to advance the field. This survey aims to provide researchers with an interdisciplinary perspective on time perception. First, we give a brief background from the psychology and neuroscience literature, covering the characteristics and models of time perception and related abilities. Second, we summarize the emergent computational and robotic models of time perception. A general overview of the literature reveals that a substantial number of timing models are based on dedicated time processing, such as the emergence of a clock-like mechanism from neural network dynamics, and reveal a relationship between embodiment and time perception. We also note that most models of timing are developed for either sensory timing (i.e., the ability to assess an interval) or motor timing (i.e., the ability to reproduce an interval). Few timing models are capable of retrospective timing, which is the ability to track time without paying attention. In this light, we discuss possible research directions to promote interdisciplinary collaboration on time perception.
