Ilker Bozcan

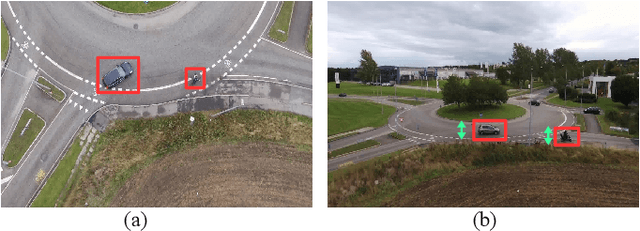

Context-Dependent Anomaly Detection for Low Altitude Traffic Surveillance

Apr 14, 2021

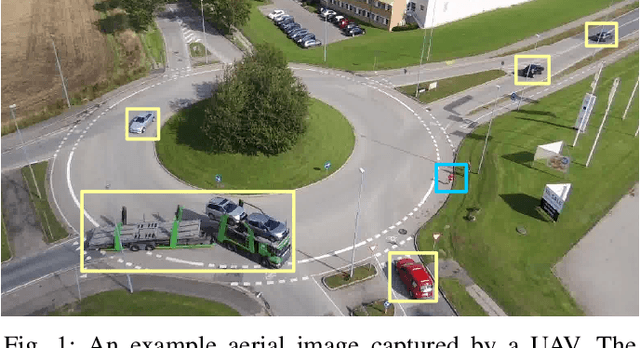

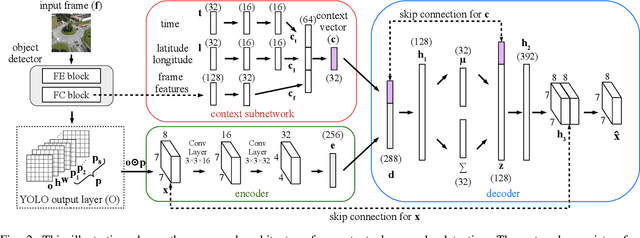

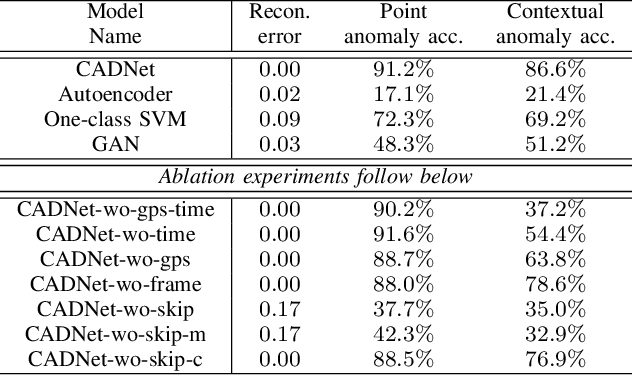

Abstract: The detection of contextual anomalies is a challenging task for surveillance, since the same observation can be considered anomalous or normal depending on the environmental context. An unmanned aerial vehicle (UAV) can utilize its aerial monitoring capability and employ multiple sensors to gather contextual information about the environment and perform contextual anomaly detection. In this work, we introduce a deep neural network-based method (CADNet) to find point anomalies (i.e., single instance anomalous data) and contextual anomalies (i.e., context-specific abnormality) in an environment using a UAV. The method is based on a variational autoencoder (VAE) with a context sub-network. The context sub-network extracts contextual information regarding the environment using GPS and time data, then feeds it to the VAE to predict anomalies conditioned on the context. To the best of our knowledge, our method is the first contextual anomaly detection method for UAV-assisted aerial surveillance. We evaluate our method on the AU-AIR dataset in a traffic surveillance scenario. Quantitative comparisons against several baselines demonstrate the superiority of our approach in the anomaly detection tasks. The code and data will be available at https://bozcani.github.io/cadnet.

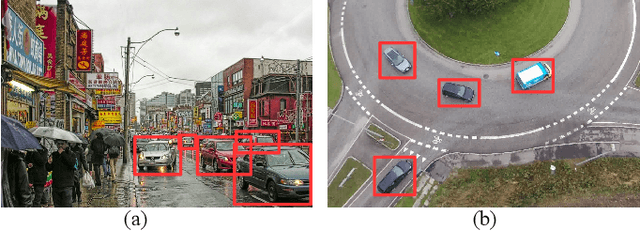

UAV-AdNet: Unsupervised Anomaly Detection using Deep Neural Networks for Aerial Surveillance

Nov 05, 2020

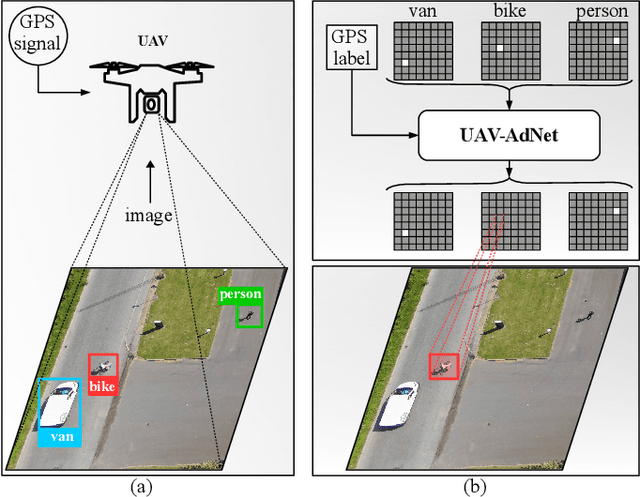

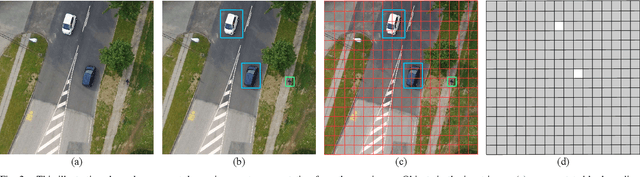

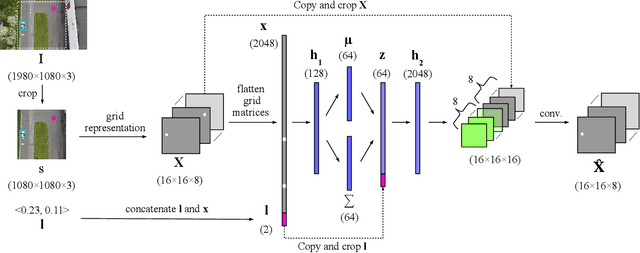

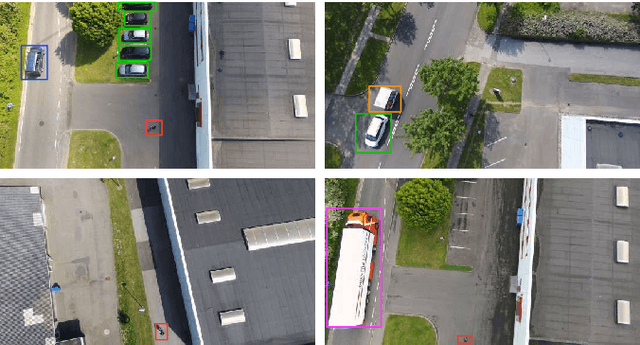

Abstract: Anomaly detection is a key goal of autonomous surveillance systems, which should be able to raise alerts on unusual observations. In this paper, we propose a holistic anomaly detection system using deep neural networks for surveillance of critical infrastructures (e.g., airports, harbors, warehouses) using an unmanned aerial vehicle (UAV). First, we present a heuristic method for the explicit representation of spatial layouts of objects in bird-view images. Then, we propose a deep neural network architecture for unsupervised anomaly detection (UAV-AdNet), which is trained jointly on environment representations and GPS labels of bird-view images. Unlike studies in the literature, we combine GPS and image data to predict abnormal observations. We evaluate our model against several baselines on our aerial surveillance dataset and show that it performs better in scene reconstruction and several anomaly detection tasks. The code, trained models, dataset, and video will be available at https://bozcani.github.io/uavadnet.

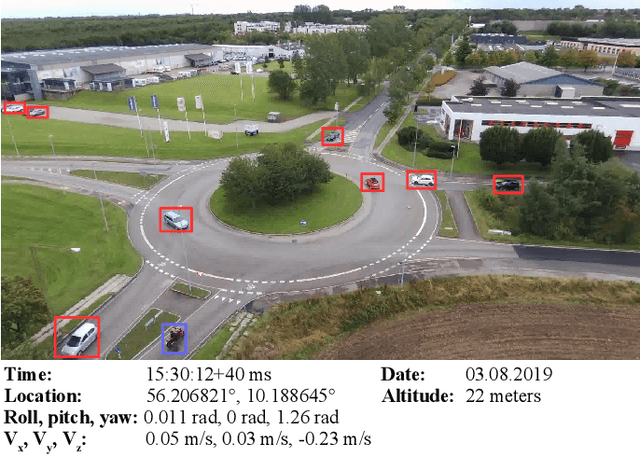

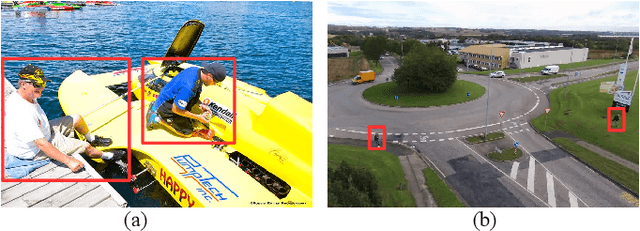

AU-AIR: A Multi-modal Unmanned Aerial Vehicle Dataset for Low Altitude Traffic Surveillance

Feb 03, 2020

Abstract: Unmanned aerial vehicles (UAVs) with mounted cameras have the advantage of capturing aerial (bird-view) images. The availability of aerial visual data and the recent advances in object detection algorithms led the computer vision community to focus on object detection tasks on aerial images. As a result, several aerial datasets have been introduced, including visual data with object annotations. UAVs are used solely as flying cameras in these datasets, discarding different data types regarding the flight (e.g., time, location, internal sensors). In this work, we propose a multi-purpose aerial dataset (AU-AIR) that has multi-modal sensor data (i.e., visual, time, location, altitude, IMU, velocity) collected in real-world outdoor environments. The AU-AIR dataset includes meta-data for extracted frames (i.e., bounding box annotations for traffic-related object categories) from recorded RGB videos. Moreover, we emphasize the differences between natural and aerial images in the context of the object detection task. To this end, we train and test mobile object detectors (including YOLOv3-Tiny and MobileNetv2-SSDLite) on the AU-AIR dataset, which are applicable for real-time object detection using on-board computers on UAVs. Since our dataset has diversity in recorded data types, it contributes to filling the gap between computer vision and robotics. The dataset is available at https://bozcani.github.io/auairdataset.

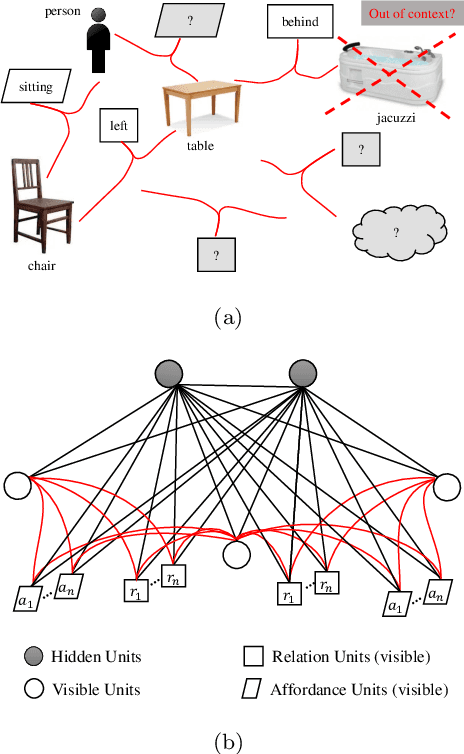

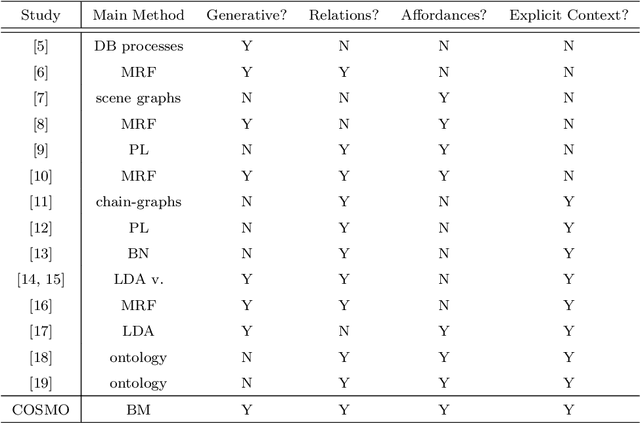

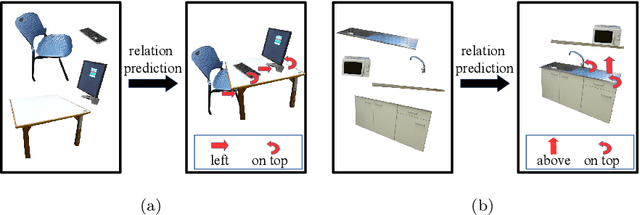

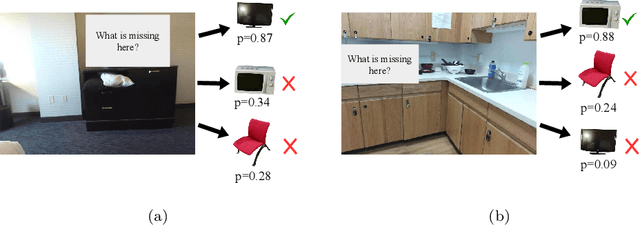

COSMO: Contextualized Scene Modeling with Boltzmann Machines

Dec 19, 2018

Abstract: Scene modeling is crucial for robots that need to perceive, reason about, and manipulate the objects in their environments. In this paper, we adapt and extend Boltzmann Machines (BMs) for contextualized scene modeling. Although there are many models on the subject, ours is the first to bring together objects, relations, and affordances in a highly capable generative model. To this end, we introduce a hybrid version of BMs where relations and affordances are introduced with shared, tri-way connections into the model. Moreover, we contribute a dataset for relation estimation and modeling studies. We evaluate our method in comparison with several baselines on object estimation, out-of-context object detection, relation estimation, and affordance estimation tasks. Finally, to illustrate the generative capability of the model, we show several example scenes that the model is able to generate.
