Hyunil Kim

Do All Autoregressive Transformers Remember Facts the Same Way? A Cross-Architecture Analysis of Recall Mechanisms

Sep 10, 2025

Abstract: Understanding how Transformer-based language models store and retrieve factual associations is critical for improving interpretability and enabling targeted model editing. Prior work, primarily on GPT-style models, has identified MLP modules in early layers as key contributors to factual recall. However, it remains unclear whether these findings generalize across different autoregressive architectures. To address this, we conduct a comprehensive evaluation of factual recall across several models, including GPT, LLaMA, Qwen, and DeepSeek, analyzing where and how factual information is encoded and accessed. We find that Qwen-based models deviate from the previously reported pattern: in these models, attention modules in the earliest layers contribute more to factual recall than MLP modules. Our findings suggest that even within the autoregressive Transformer family, architectural variations can lead to fundamentally different mechanisms of factual recall.

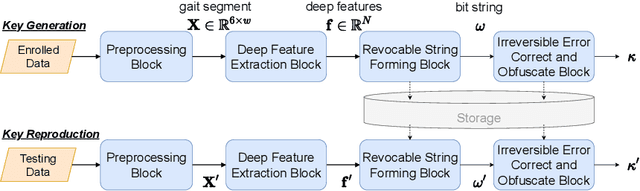

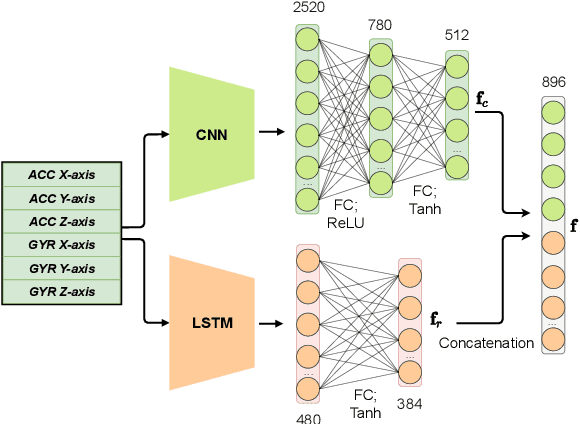

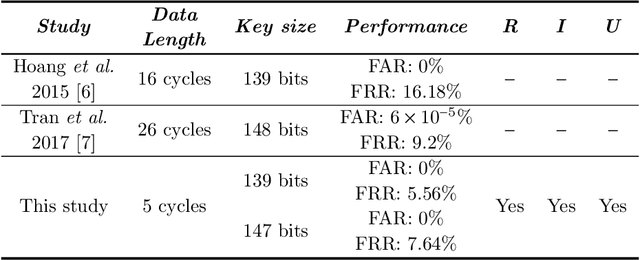

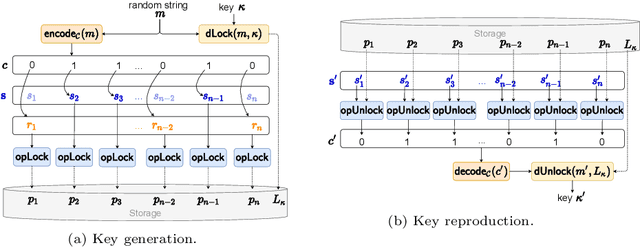

Security and Privacy Enhanced Gait Authentication with Random Representation Learning and Digital Lockers

Aug 05, 2021

Abstract: Gait data captured by inertial sensors have demonstrated promising results for user authentication. However, most existing approaches store the enrolled gait pattern insecurely for matching against the validating pattern, which poses critical security and privacy issues. In this study, we present a gait cryptosystem that generates a random key for user authentication from gait data while securing the gait pattern. First, we propose a revocable and random binary string extraction method using a deep neural network followed by feature-wise binarization. We also design a novel loss function for network optimization that addresses both intra-user stability and inter-user randomness. Second, we propose a new biometric key generation scheme, Irreversible Error Correct and Obfuscate (IECO), which improves on the Error Correct and Obfuscate (ECO) scheme, to securely generate a random and irreversible key from the binary string. The model was evaluated on two benchmark datasets, OU-ISIR and whuGAIT. We showed that our model could generate a 139-bit key from a 5-second data sequence with zero False Acceptance Rate (FAR) and a False Rejection Rate (FRR) smaller than 5.441%. In addition, the security and user privacy analyses showed that our model was secure against existing attacks on biometric template protection and fulfilled irreversibility and unlinkability.
