Hyungjoon Kim

KoTaP: A Panel Dataset for Corporate Tax Avoidance, Performance, and Governance in Korea

Nov 06, 2025

Abstract: This study introduces the Korean Tax Avoidance Panel (KoTaP), a long-term panel dataset of non-financial firms listed on KOSPI and KOSDAQ between 2011 and 2024. After excluding financial firms, firms with non-December fiscal year ends, capital impairment, and negative pre-tax income, the final dataset consists of 12,653 firm-year observations from 1,754 firms. KoTaP is designed to treat corporate tax avoidance as a predictor variable and link it to multiple domains, including earnings management (accrual- and activity-based), profitability (ROA, ROE, CFO, LOSS), stability (LEV, CUR, SIZE, PPE, AGE, INVREC), growth (GRW, MB, TQ), and governance (BIG4, FORN, OWN). Tax avoidance itself is measured using complementary indicators: cash effective tax rate (CETR), GAAP effective tax rate (GETR), and book-tax difference measures (TSTA, TSDA), with adjustments to ensure interpretability. A key strength of KoTaP is its balanced panel structure with standardized variables and its consistency with international literature on the distribution and correlation of core indicators. At the same time, it reflects distinctive institutional features of Korean firms, such as concentrated ownership, high foreign shareholding, and elevated liquidity ratios, providing both international comparability and contextual uniqueness. KoTaP enables applications in benchmarking econometric and deep learning models, external validity checks, and explainable AI analyses. It further supports policy evaluation, audit planning, and investment analysis, making it a critical open resource for accounting, finance, and interdisciplinary research.
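The sample-selection rules stated in the abstract can be sketched as a simple filter. This is only an illustration: the `FirmYear` record, its field names, and the reading of "capital impairment" as non-positive total equity are assumptions made here, not the dataset's actual schema or the authors' exact definition.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class FirmYear:
    # Hypothetical record layout; KoTaP's real column names may differ.
    firm_id: str
    year: int
    is_financial: bool
    fiscal_month_end: int   # 12 means a December fiscal year end
    total_equity: float
    pretax_income: float


def keep_observation(obs: FirmYear) -> bool:
    """Apply the exclusion rules listed in the abstract to one firm-year."""
    return (
        2011 <= obs.year <= 2024          # sample period
        and not obs.is_financial          # exclude financial firms
        and obs.fiscal_month_end == 12    # exclude non-December year ends
        and obs.total_equity > 0          # assumed proxy for "no capital impairment"
        and obs.pretax_income >= 0        # exclude negative pre-tax income
    )


def select_sample(observations: Iterable[FirmYear]) -> List[FirmYear]:
    """Return the firm-years that survive every exclusion rule."""
    return [obs for obs in observations if keep_observation(obs)]
```

Each rule is a separate condition so that a different definition (for example, a stricter capital-impairment test) can be swapped in without touching the others.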

Real-Time Shape Tracking of Facial Landmarks

Jul 14, 2018

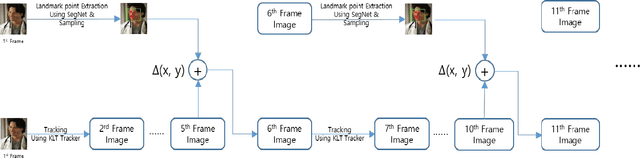

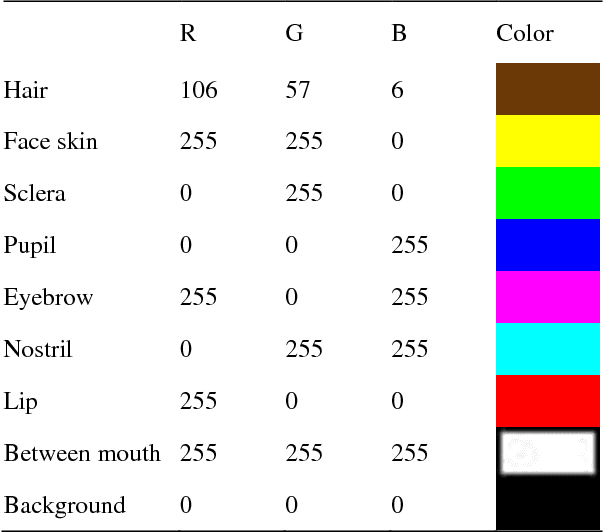

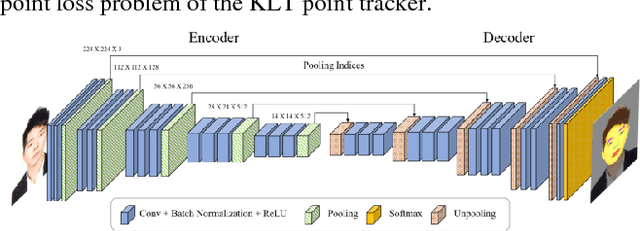

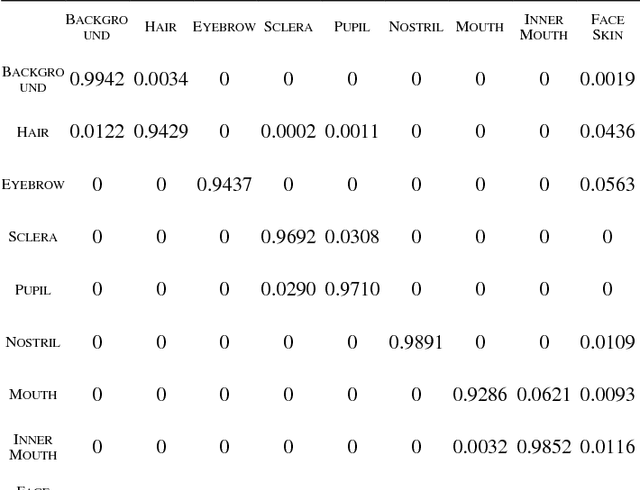

Abstract: Detection of facial landmarks and accurate tracking of their shape are essential in real-time virtual makeup applications, where users can see the makeup's effect by moving their face in different directions. Typical face tracking techniques detect diverse facial landmarks and track them using a point tracker such as the Kanade-Lucas-Tomasi (KLT) point tracker. Typically, 5 or 64 points are used for tracking a face. Even though these points are sufficient to track the approximate locations of facial landmarks, they are not sufficient to track the exact shape of facial landmarks. In this paper, we propose a method that can track the exact shape of facial landmarks in real time by combining a deep learning technique and a point tracker. We detect facial landmarks accurately using SegNet, which performs semantic segmentation based on deep learning. Edge points of detected landmarks are tracked using the KLT point tracker. In spite of its popularity, the KLT point tracker suffers from the point loss problem. We solve this problem by executing SegNet periodically to recalculate the shape of facial landmarks. That is, by combining the two techniques, we can avoid both the computational overhead of running SegNet on every frame for real-time shape tracking and the point loss problem of the KLT point tracker. We performed several experiments to evaluate the performance of our method and report some of the results herein.
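The alternation between periodic segmentation and frame-to-frame point tracking described in the abstract might be sketched as follows. This is a minimal illustration, not the paper's implementation: `track_shapes`, `segment`, `track`, and `refresh_every` are hypothetical names, and the refresh interval and the fallback on total point loss are assumptions. In practice `segment` would wrap a SegNet forward pass plus edge extraction, and `track` would wrap a KLT step such as OpenCV's `cv2.calcOpticalFlowPyrLK`.

```python
from typing import Any, Callable, List, Sequence, Tuple

Point = Tuple[float, float]
Segmenter = Callable[[Any], List[Point]]
Tracker = Callable[[Any, Any, List[Point]], List[Point]]


def track_shapes(
    frames: Sequence[Any],
    segment: Segmenter,
    track: Tracker,
    refresh_every: int = 10,  # assumed interval; the abstract gives no value
) -> List[List[Point]]:
    """Return the landmark edge points for each frame.

    The expensive segmenter runs on the first frame and then every
    `refresh_every` frames; the cheap tracker handles frames in between.
    """
    results: List[List[Point]] = []
    points: List[Point] = []
    prev = None
    for i, frame in enumerate(frames):
        if prev is None or i % refresh_every == 0:
            # Periodic refresh: recompute the shape from scratch.
            points = segment(frame)
        else:
            # Propagate the previous edge points to the current frame.
            points = track(prev, frame, points)
            if not points:
                # Assumed fallback: every point was lost, so re-segment now.
                points = segment(frame)
        results.append(list(points))
        prev = frame
    return results
```

Passing the segmenter and tracker as callables keeps the scheduling logic independent of any particular model or vision library.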
