Hung-Wei Tseng

GPTPU: Accelerating Applications using Edge Tensor Processing Units

Jul 13, 2021

Abstract:Neural network (NN) accelerators have been integrated into a wide-spectrum of computer systems to accommodate the rapidly growing demands for artificial intelligence (AI) and machine learning (ML) applications. NN accelerators share the idea of providing native hardware support for operations on multidimensional tensor data. Therefore, NN accelerators are theoretically tensor processors that can improve system performance for any problem that uses tensors as inputs/outputs. Unfortunately, commercially available NN accelerators only expose computation capabilities through AI/ML-specific interfaces. Furthermore, NN accelerators reveal very few hardware design details, so applications cannot easily leverage the tensor operations NN accelerators provide. This paper introduces General-Purpose Computing on Edge Tensor Processing Units (GPTPU), an open-source, open-architecture framework that allows the developer and research communities to discover opportunities that NN accelerators enable for applications. GPTPU includes a powerful programming interface with efficient runtime system-level support -- similar to that of CUDA/OpenCL in GPGPU computing -- to bridge the gap between application demands and mismatched hardware/software interfaces. We built GPTPU machine uses Edge Tensor Processing Units (Edge TPUs), which are widely available and representative of many commercial NN accelerators. We identified several novel use cases and revisited the algorithms. By leveraging the underlying Edge TPUs to perform tensor-algorithm-based compute kernels, our results reveal that GPTPU can achieve a 2.46x speedup over high-end CPUs and reduce energy consumption by 40%.

Computational Intractability of Dictionary Learning for Sparse Representation

Nov 05, 2015

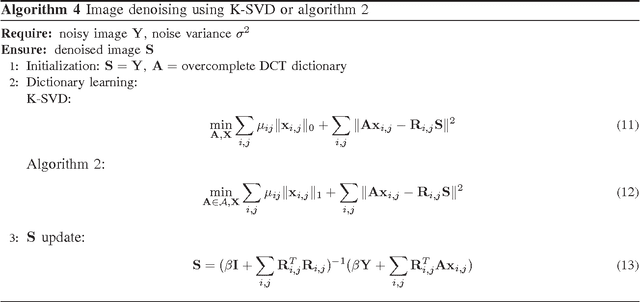

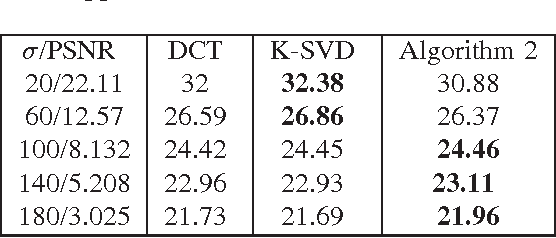

Abstract:In this paper we consider the dictionary learning problem for sparse representation. We first show that this problem is NP-hard by polynomial time reduction of the densest cut problem. Then, using successive convex approximation strategies, we propose efficient dictionary learning schemes to solve several practical formulations of this problem to stationary points. Unlike many existing algorithms in the literature, such as K-SVD, our proposed dictionary learning scheme is theoretically guaranteed to converge to the set of stationary points under certain mild assumptions. For the image denoising application, the performance and the efficiency of the proposed dictionary learning scheme are comparable to that of K-SVD algorithm in simulation.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge