Hiroshi Inazawa

The Method for Storing Patterns in Neural Networks-Memorization and Recall of QR code Patterns-

Apr 09, 2025

Abstract: In this paper, we propose a mechanism for storing complex patterns within a neural network and subsequently recalling them. The model refines and extends our earlier work (Inazawa, 2018). With recent advances in deep learning and large language model (LLM)-based generative AI, methodologies for learning are becoming increasingly well established. We expect further research on memory using Transformer-based models (Vaswani et al., 2017; Rae et al., 2020), but in this paper we propose a simpler and more powerful model of memory and recall in neural networks. The advantage of storing patterns in a neural network lies in its ability to recall the original pattern even when an incomplete version is presented. The patterns used in this study are QR codes (DENSO WAVE, 1994), which have become widely used as an information transmission tool in recent years.

An associative memory model with very high memory rate: Image storage by sequential addition learning

Oct 08, 2022

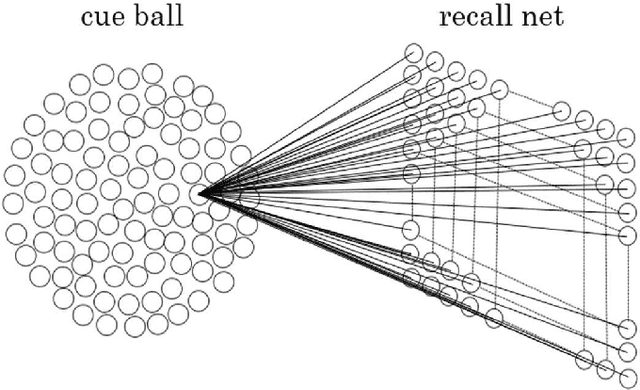

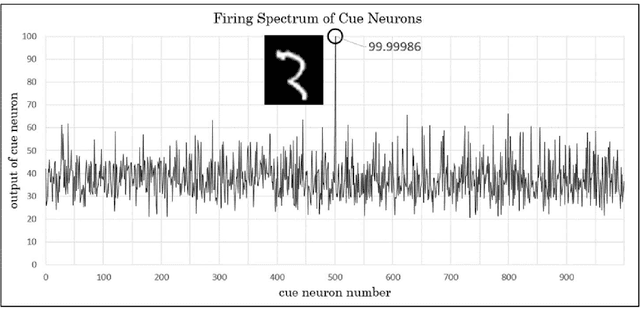

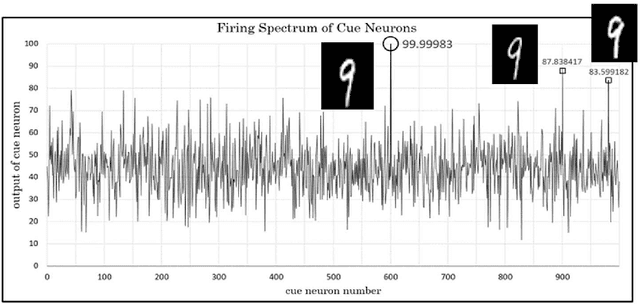

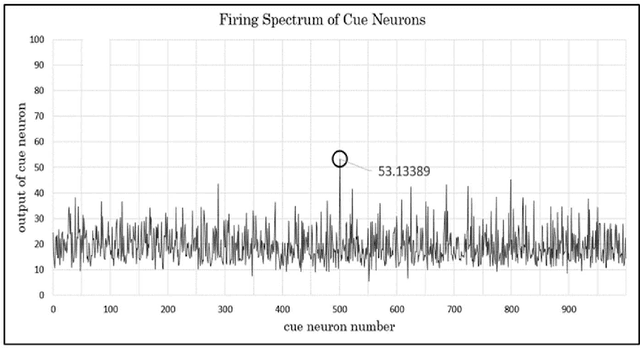

Abstract: In this paper, we present a neural network system for memory and recall that consists of one neuron group (the "cue ball") and a one-layer neural net (the "recall net"). The system realizes bidirectional memorization learning between one cue neuron in the cue ball and the neurons in the recall net. It can memorize many patterns and recall these patterns, or similar ones, at any time. Furthermore, the patterns are recalled at almost the same time. This behavior seems to resemble the way humans recall a variety of similar things almost simultaneously when one thing is recalled. Additional learning can also occur in the system without affecting previously memorized patterns. Moreover, the memory rate (the number of memorized patterns / the total number of neurons) is close to 100%; this system's rate is 0.987. Finally, pattern data constraints become an important aspect of this system.
