Hei Chan

When do Numbers Really Matter?

Aug 07, 2014

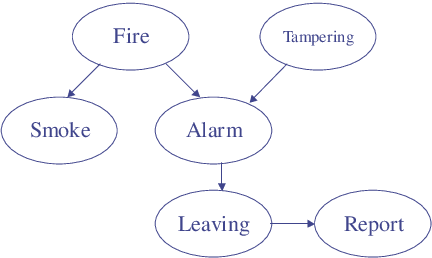

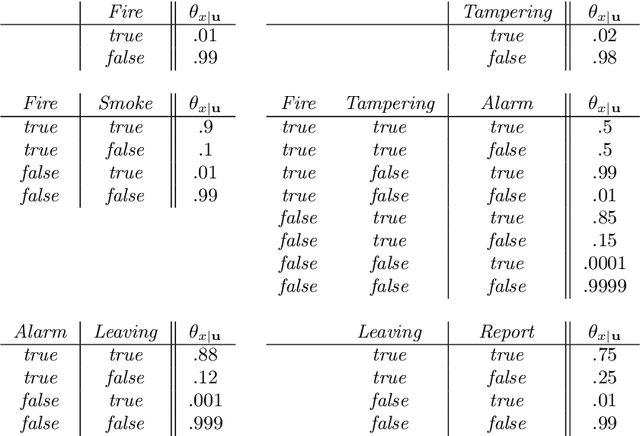

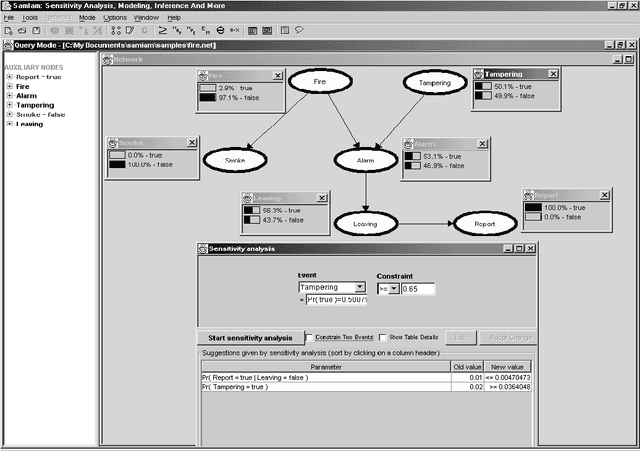

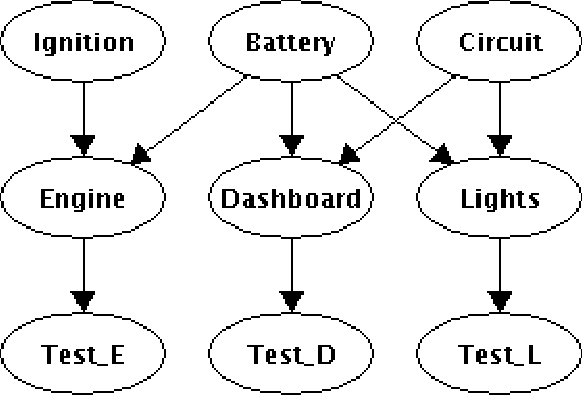

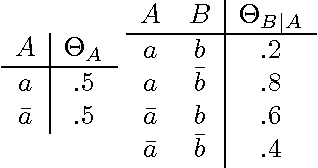

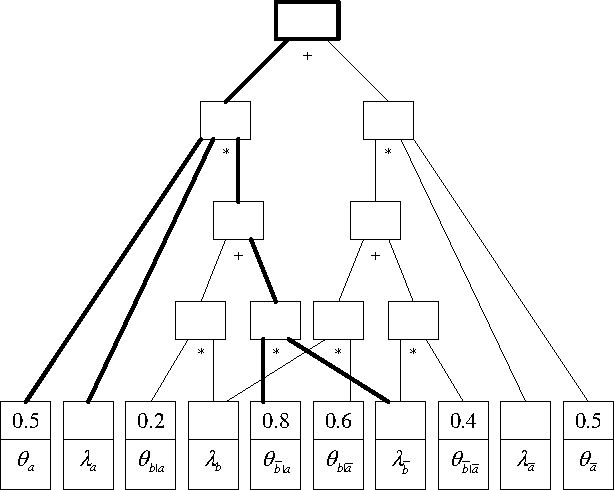

Abstract: Common wisdom has it that small distinctions in the probabilities quantifying a Bayesian network do not matter much for the results of probabilistic queries. However, one can easily develop realistic scenarios under which small variations in network probabilities can lead to significant changes in computed queries. A pending theoretical question is then to analytically characterize parameter changes that do or do not matter. In this paper, we study the sensitivity of probabilistic queries to changes in network parameters and prove some tight bounds on the impact that such parameters can have on queries. Our analytical results pinpoint some interesting situations under which parameter changes do or do not matter. These results are important for knowledge engineers as they help them identify influential network parameters. They are also important for approximate inference algorithms that preprocess network CPTs to eliminate small distinctions in probabilities.
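The claim that a small parameter change can move a query substantially can be illustrated with a two-node network (a rare condition and a test for it). The sketch below is not taken from the paper and does not reproduce its bounds; the function names and all numbers are hypothetical, chosen only to show that the same absolute change of 0.001 matters a great deal near zero and hardly at all in the mid-range.

```python
def posterior(prior, sensitivity, false_positive_rate):
    """P(condition | positive test) by Bayes' rule in a two-node network."""
    joint_pos_condition = prior * sensitivity
    joint_pos_healthy = (1.0 - prior) * false_positive_rate
    return joint_pos_condition / (joint_pos_condition + joint_pos_healthy)


def query_shift(prior, sensitivity, false_positive_rate, delta):
    """Absolute change in the query when one parameter moves by delta."""
    before = posterior(prior, sensitivity, false_positive_rate)
    after = posterior(prior, sensitivity, false_positive_rate + delta)
    return abs(after - before)


# Hypothetical parameters: the same absolute change (0.001) is applied to
# a false-positive rate close to zero and to one in the mid-range.
shift_near_zero = query_shift(0.001, 0.99, 0.001, 0.001)
shift_mid_range = query_shift(0.001, 0.99, 0.5, 0.001)

print(f"shift when the parameter is near 0:   {shift_near_zero:.4f}")
print(f"shift when the parameter is near 0.5: {shift_mid_range:.6f}")
```

In this toy setting, doubling a near-zero parameter changes the posterior by more than 0.15, while the same absolute change at 0.5 is barely visible.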

Reasoning about Bayesian Network Classifiers

Oct 19, 2012

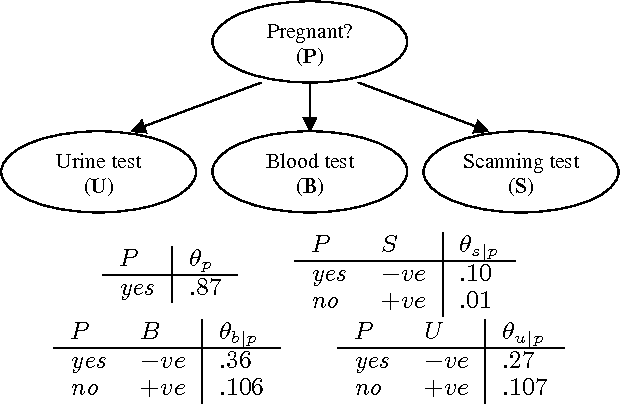

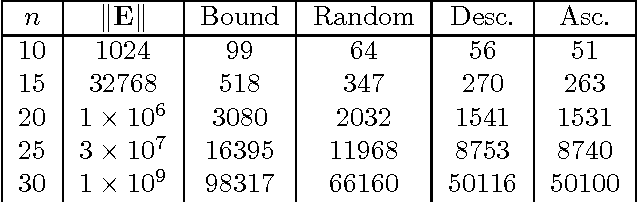

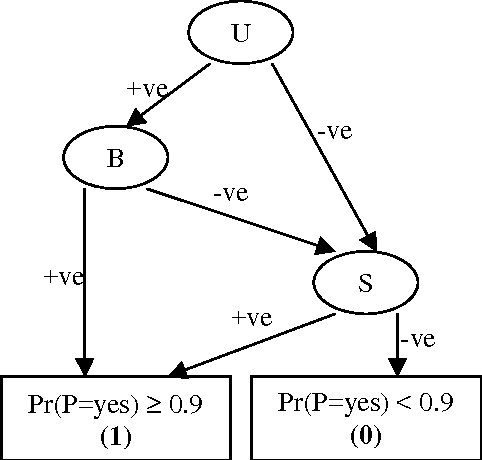

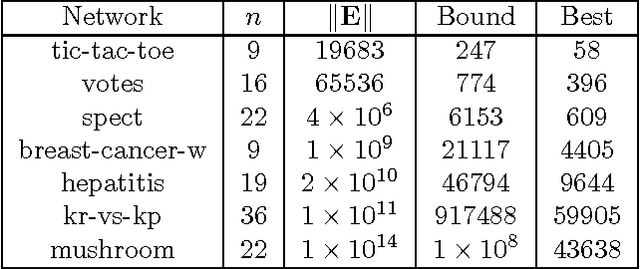

Abstract: Bayesian network classifiers are used in many fields, and one common class of classifiers is the naive Bayes classifier. In this paper, we introduce an approach for reasoning about Bayesian network classifiers in which we explicitly convert them into Ordered Decision Diagrams (ODDs), which are then used to reason about the properties of these classifiers. Specifically, we present an algorithm for converting any naive Bayes classifier into an ODD, and we show theoretically and experimentally that this algorithm can give us an ODD that is tractable in size even given an intractable number of instances. Since ODDs are tractable representations of classifiers, our algorithm allows us to efficiently test the equivalence of two naive Bayes classifiers and characterize discrepancies between them. We also show a number of additional results, including a count of distinct classifiers that can be induced by changing some CPT in a naive Bayes classifier, and the range of allowable changes to a CPT that keeps the current classifier unchanged.
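To make "equivalence" and "discrepancies" concrete, the sketch below compares two naive Bayes classifiers over binary features by brute-force enumeration of all instances. This is deliberately not the ODD conversion the abstract describes: enumeration is exponential in the number of features, which is exactly the cost the ODD approach is meant to avoid. The representation (a prior plus per-feature likelihood pairs), the function names, and the parameter values are all assumptions made for illustration.

```python
from itertools import product


def classify(prior, likelihoods, instance, threshold=0.5):
    """Return True if P(class=1 | instance) >= threshold.

    likelihoods[i] = (P(feature_i=1 | class=1), P(feature_i=1 | class=0)).
    """
    p1, p0 = prior, 1.0 - prior
    for (l1, l0), x in zip(likelihoods, instance):
        p1 *= l1 if x else 1.0 - l1
        p0 *= l0 if x else 1.0 - l0
    return p1 / (p1 + p0) >= threshold


def discrepancies(nb_a, nb_b, n_features):
    """List every instance on which the two classifiers disagree."""
    return [
        inst
        for inst in product((0, 1), repeat=n_features)
        if classify(*nb_a, inst) != classify(*nb_b, inst)
    ]


# Hypothetical classifiers, each given as (prior, likelihoods).
nb_a = (0.5, [(0.9, 0.2), (0.7, 0.4)])
nb_b = (0.5, [(0.9, 0.2), (0.6, 0.5)])  # second CPT changed
nb_c = (0.5, [(0.6, 0.5), (0.7, 0.4)])  # first CPT changed

print(discrepancies(nb_a, nb_b, 2))  # empty: same decision on every instance
print(discrepancies(nb_a, nb_c, 2))  # instances where the decisions differ
```

Here `nb_a` and `nb_b` have different parameters yet induce the same decision function, while `nb_c` disagrees with `nb_a` on two of the four instances.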

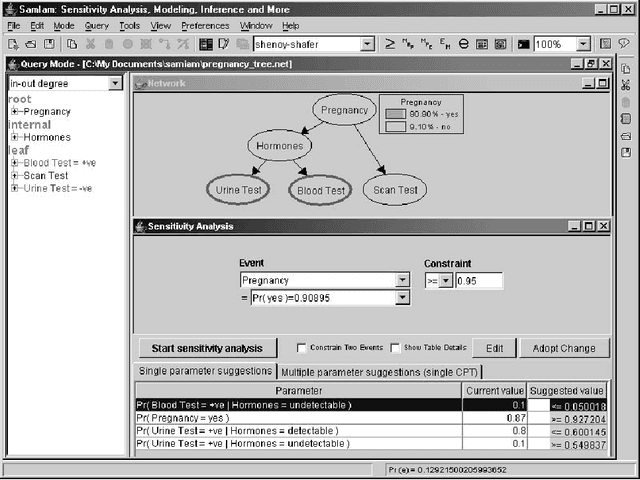

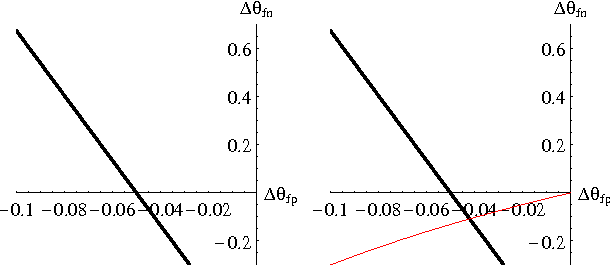

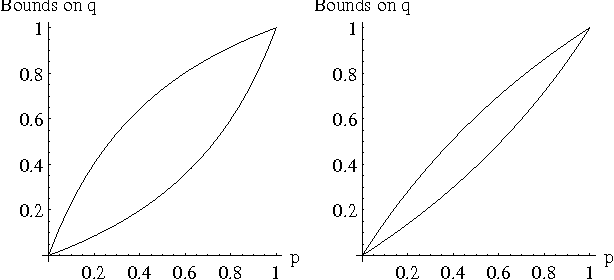

Sensitivity Analysis in Bayesian Networks: From Single to Multiple Parameters

Jul 11, 2012

Abstract: Previous work on sensitivity analysis in Bayesian networks has focused on single parameters, where the goal is to understand the sensitivity of queries to single parameter changes, and to identify single parameter changes that would enforce a certain query constraint. In this paper, we expand the work to multiple parameters which may be in the CPT of a single variable, or the CPTs of multiple variables. Not only do we identify the solution space of multiple parameter changes that would be needed to enforce a query constraint, but we also show how to find the optimal solution, that is, the one which disturbs the current probability distribution the least (with respect to a specific measure of disturbance). We characterize the computational complexity of our new techniques and discuss their applications to developing and debugging Bayesian networks, and to the problem of reasoning about the value (reliability) of new information.

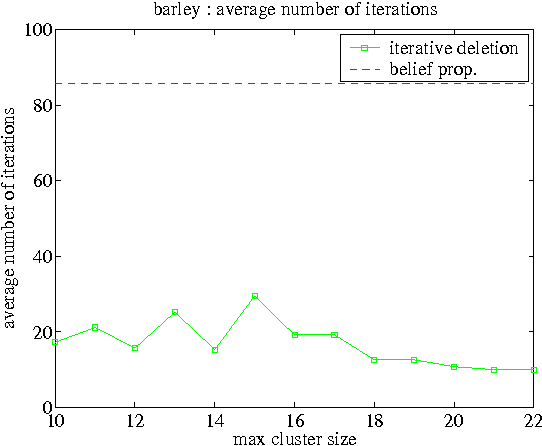

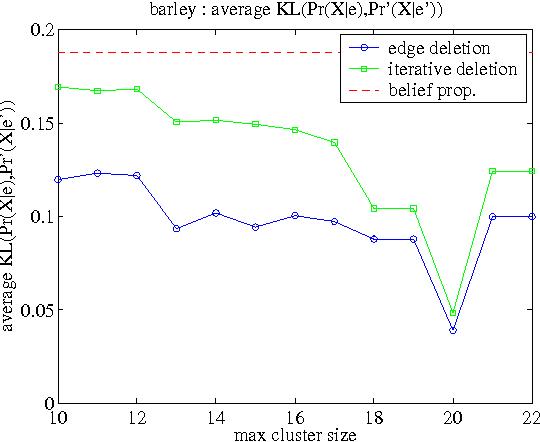

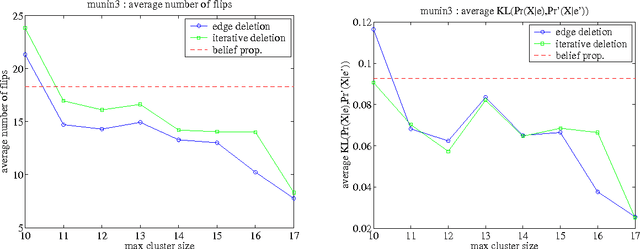

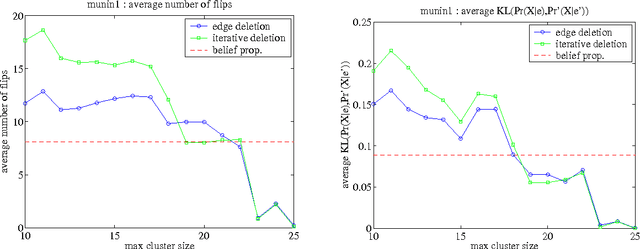

On Bayesian Network Approximation by Edge Deletion

Jul 04, 2012

Abstract: We consider the problem of deleting edges from a Bayesian network for the purpose of simplifying models in probabilistic inference. In particular, we propose a new method for deleting network edges, which is based on the evidence at hand. We provide some interesting bounds on the KL-divergence between original and approximate networks, which highlight the impact of given evidence on the quality of approximation and shed some light on good and bad candidates for edge deletion. We finally demonstrate empirically the promise of the proposed edge deletion technique as a basis for approximate inference.
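As a rough illustration of measuring approximation quality by KL-divergence, the sketch below deletes the single edge of a two-node network A -> B by replacing P(B | A) with the marginal P(B), then computes the divergence between the original and approximate joint distributions. This is a simplification made for illustration: it ignores evidence, so it is not the evidence-based method the abstract proposes, and the function names and numbers are hypothetical.

```python
import math


def kl_divergence(p, q):
    """KL(p || q) for two distributions listed over the same outcomes."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def edge_deletion_kl(p_a, p_b_given_a):
    """KL between the joint of A -> B and the joint with the edge removed.

    p_a is P(A=1); p_b_given_a[a] is P(B=1 | A=a).
    The approximation replaces P(B | A) by the marginal P(B).
    """
    p_b = p_a * p_b_given_a[1] + (1.0 - p_a) * p_b_given_a[0]
    original, approximate = [], []
    for a in (0, 1):
        pa = p_a if a else 1.0 - p_a
        for b in (0, 1):
            original.append(pa * (p_b_given_a[a] if b else 1.0 - p_b_given_a[a]))
            approximate.append(pa * (p_b if b else 1.0 - p_b))
    return kl_divergence(original, approximate)


# Hypothetical CPTs: a strong dependence and a weak one.
kl_strong = edge_deletion_kl(0.3, {0: 0.4, 1: 0.8})
kl_weak = edge_deletion_kl(0.3, {0: 0.50, 1: 0.55})

print(f"KL after deleting a strong edge: {kl_strong:.4f}")
print(f"KL after deleting a weak edge:   {kl_weak:.4f}")
```

In this toy case the weakly dependent edge is the better deletion candidate, since removing it changes the joint distribution far less.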

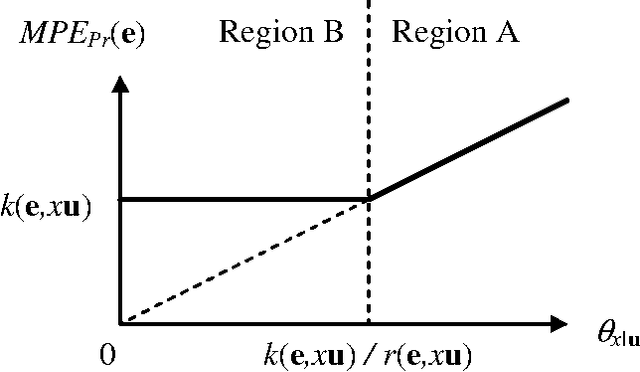

On the Robustness of Most Probable Explanations

Jun 27, 2012

Abstract: In Bayesian networks, a Most Probable Explanation (MPE) is a complete variable instantiation with the highest probability given the current evidence. In this paper, we discuss the problem of finding robustness conditions of the MPE under single parameter changes. Specifically, we ask the question: How much change in a single network parameter can we afford to apply while keeping the MPE unchanged? We will describe a procedure, which is the first of its kind, that computes this answer for each parameter in the Bayesian network in time O(n exp(w)), where n is the number of network variables and w is its treewidth.
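The robustness question can be made concrete on a two-node network A -> B with no evidence. The sketch below finds the MPE by brute force and then scans a grid of values for the single parameter P(A=1) to find the range over which the MPE stays the same. It is a naive illustration under assumed numbers, not the O(n exp(w)) procedure from the abstract, and the function names and the grid resolution are hypothetical.

```python
from itertools import product


def mpe(theta_a, p_b_given_a):
    """Brute-force MPE of A -> B, where theta_a = P(A=1)."""
    def joint(a, b):
        pa = theta_a if a else 1.0 - theta_a
        pb = p_b_given_a[a] if b else 1.0 - p_b_given_a[a]
        return pa * pb

    return max(product((0, 1), repeat=2), key=lambda ab: joint(*ab))


def robust_interval(theta_a, p_b_given_a, steps=1000):
    """Grid-scan the range of P(A=1) around theta_a that keeps the MPE."""
    baseline = mpe(theta_a, p_b_given_a)
    lo = hi = round(theta_a * steps)
    while lo > 0 and mpe((lo - 1) / steps, p_b_given_a) == baseline:
        lo -= 1
    while hi < steps and mpe((hi + 1) / steps, p_b_given_a) == baseline:
        hi += 1
    return lo / steps, hi / steps


# Hypothetical network parameters.
cpt_b = {0: 0.4, 1: 0.8}  # P(B=1 | A=a)
current_mpe = mpe(0.3, cpt_b)
low, high = robust_interval(0.3, cpt_b)

print(f"MPE at P(A=1)=0.3: {current_mpe}")
print(f"MPE unchanged for P(A=1) in [{low}, {high}] (grid resolution 0.001)")
```

The grid scan only approximates the boundary to the chosen resolution; in this example the exact crossover is where 0.6(1 - θ) = 0.8θ, that is, θ = 3/7.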
