Hans Dermot Doran

Workflow for Safe-AI

Mar 20, 2025

Abstract: The development and deployment of safe and dependable AI models is crucial in applications where functional safety is a key concern. Given the rapid advancement in AI research and the relative novelty of the safe-AI domain, there is an increasing need for a workflow that balances stability with adaptability. This work proposes a transparent, complete, yet flexible and lightweight workflow that highlights both reliability and qualifiability. The core idea is that the workflow must be qualifiable, which demands the use of qualified tools. Tool qualification is a resource-intensive process, both in terms of time and cost. We therefore place value on a lightweight workflow featuring a minimal number of tools with limited features. The workflow is built upon an extended ONNX model description allowing for validation of AI algorithms from their generation to runtime deployment. This is essential to ensure that models are validated before being reliably deployed across different runtimes, particularly in mixed-criticality systems.

Keywords: AI workflows, safe-AI, dependable-AI, functional safety, v-model development

Performance Examination of Symbolic Aggregate Approximation in IoT Applications

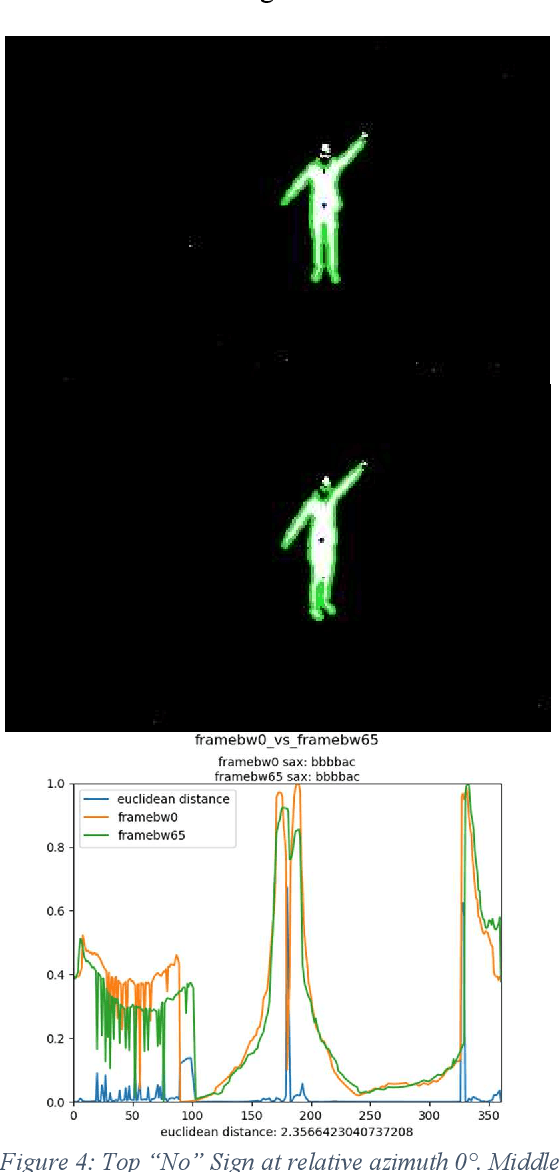

May 30, 2024

Abstract: Symbolic Aggregate approXimation (SAX) is a common dimensionality reduction approach for time-series data which has been employed in a variety of domains, including classification and anomaly detection in time-series data. Domains also include shape recognition where the shape outline is converted into time-series data, for instance epoch classification of archived arrowheads. In this paper we propose a dimensionality reduction and shape recognition approach based on the SAX algorithm, an application which requires responses on cost-efficient, IoT-like platforms. The challenge is largely dealing with the computational expense of the SAX algorithm in IoT-like applications, from simple time-series dimension reduction through shape recognition. The approach is based on lowering the dimensional space while capturing and preserving the most representative features of the shape. We present three scenarios of increasing computational complexity, backing up our statements with measurement of performance characteristics.
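For readers unfamiliar with SAX, the following is a minimal sketch of the textbook algorithm (z-normalisation, piecewise aggregate approximation, then discretisation against equiprobable Gaussian breakpoints). It is not the implementation evaluated in the paper; the function name `sax`, its parameters, and the requirement that the series length be divisible by the number of segments are assumptions made for illustration.

```python
from bisect import bisect_right
from statistics import NormalDist, mean, pstdev


def sax(series, n_segments, alphabet="abcd"):
    """Return a SAX word for `series` (hypothetical helper, textbook SAX)."""
    if len(series) % n_segments != 0:
        # Simplifying assumption: equal-sized segments only.
        raise ValueError("series length must be divisible by n_segments")

    # Step 1: z-normalise so the values are roughly N(0, 1).
    mu, sigma = mean(series), pstdev(series)
    z = [0.0 if sigma == 0 else (x - mu) / sigma for x in series]

    # Step 2: piecewise aggregate approximation (mean of each segment).
    seg = len(z) // n_segments
    paa = [mean(z[i * seg:(i + 1) * seg]) for i in range(n_segments)]

    # Step 3: breakpoints that split N(0, 1) into equiprobable regions,
    # one region per symbol of the alphabet.
    a = len(alphabet)
    breakpoints = [NormalDist().inv_cdf(k / a) for k in range(1, a)]

    # Step 4: map each segment mean to the symbol of its region.
    return "".join(alphabet[bisect_right(breakpoints, v)] for v in paa)


if __name__ == "__main__":
    print(sax([0, 0, 0, 0, 10, 10, 10, 10], n_segments=2))
```

The word length (`n_segments`) and the alphabet size are the two parameters that trade representational detail against computational cost, which is the trade-off the abstract refers to for IoT-like platforms.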

Hybrid Convolutional Neural Networks with Reliability Guarantee

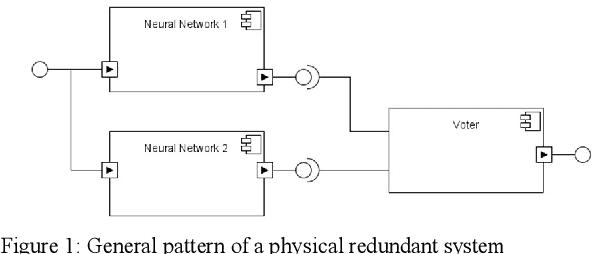

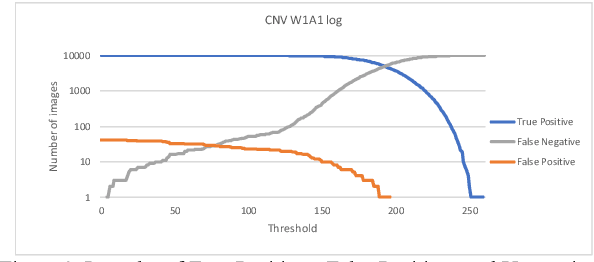

May 08, 2024

Abstract: Making AI safe and dependable requires the generation of dependable models and dependable execution of those models. We propose redundant execution as a well-known technique that can be used to ensure reliable execution of the AI model. This generic technique will extend the application scope of AI-accelerators that do not feature well-documented safety or dependability properties. Typical redundancy techniques incur at least double or triple the computational expense of the original. We adopt a co-design approach, integrating reliable model execution with non-reliable execution, focusing that additional computational expense only where it is strictly necessary. We describe the design, implementation and some preliminary results of a hybrid CNN.
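The idea of spending redundancy only where it is needed can be illustrated with a small sketch. This is not the hybrid CNN described in the paper: the names `execute_redundantly`, `hybrid_forward`, `plain_stage` and `critical_stage` are hypothetical, and majority voting over repeated execution is just one common redundancy scheme, assumed here for illustration. Stage outputs are assumed to be hashable (for example tuples) so that they can be compared.

```python
from collections import Counter


class RedundancyError(RuntimeError):
    """Raised when the redundant copies do not reach a majority."""


def execute_redundantly(fn, x, copies=3):
    """Run `fn(x)` `copies` times and return the strict-majority result.

    With copies=2 any disagreement is detected but cannot be corrected;
    with copies=3 a single faulty result is outvoted.
    """
    results = [fn(x) for _ in range(copies)]
    value, votes = Counter(results).most_common(1)[0]
    if votes <= copies // 2:
        raise RedundancyError(f"no majority among results: {results}")
    return value


def hybrid_forward(x, plain_stage, critical_stage, copies=3):
    """Run the non-critical stage once and only the critical stage redundantly."""
    features = plain_stage(x)  # single execution, no extra cost
    return execute_redundantly(critical_stage, features, copies)


if __name__ == "__main__":
    double = lambda v: tuple(2 * e for e in v)   # stand-in for a plain stage
    top = lambda f: (max(f),)                    # stand-in for a critical stage
    print(hybrid_forward((1, 2, 3), double, top))
```

In this sketch the extra cost is `copies - 1` additional executions of the critical stage only, rather than of the whole model.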

Mixed Criticality Communication within an Unmanned Delivery Rotorcraft

Sep 10, 2022

Abstract: Stand-alone functions additional to a UAV flight-controller, such as safety-relevant flight-path monitoring or payload-monitoring and control, may be SORA-required or advised for specific flight paths of delivery-drones. These functions, articulated as discrete electronic components either internal or external to the main fuselage, can be networked with other on-board electronics systems. Such an integration requires respecting the integrity levels of each component on the network both in terms of function and in terms of power-supply. In this body of work we detail an intra-component communication system for small autonomous and semi-autonomous unmanned aerial vehicles (UAVs). We discuss the context and the (conservative) design decisions before detailing the hardware and software interfaces and reporting on a first implementation. We finish by drawing conclusions and proposing future work.

Security and Safety Aspects of AI in Industry Applications

Jul 16, 2022

Abstract: In this relatively informal discussion-paper we summarise issues in the domains of safety and security in machine learning that will affect industry sectors in the next five to ten years. Various products using neural network classification, most often in vision-related applications but also in predictive maintenance, have been researched and applied in real-world applications in recent years. Nevertheless, reports of underlying problems in both safety and security related domains, for instance adversarial attacks, have unsettled early adopters and are threatening to hinder wider-scale adoption of this technology. The problem for real-world applicability lies in being able to assess the risk of applying these technologies. In this discussion-paper we describe the process of arriving at a machine-learnt neural network classifier, pointing out safety and security vulnerabilities in that workflow and citing relevant research where appropriate.

Dependable Neural Networks Through Redundancy, A Comparison of Redundant Architectures

Jul 30, 2021

Abstract: With edge-AI finding an increasing number of real-world applications, especially in industry, the question of functionally safe applications using AI has begun to be asked. In this body of work, we explore the issue of achieving dependable operation of neural networks. We discuss the issue of dependability in general implementation terms before examining lockstep solutions. We intuit that it is not necessarily a given that two similar neural networks generate results at precisely the same time and that synchronization between the platforms will be required. We perform some preliminary measurements that may support this intuition and introduce some work in implementing lockstep neural network engines.

Examining Redundancy in the Context of Safe Machine Learning

Jul 03, 2020

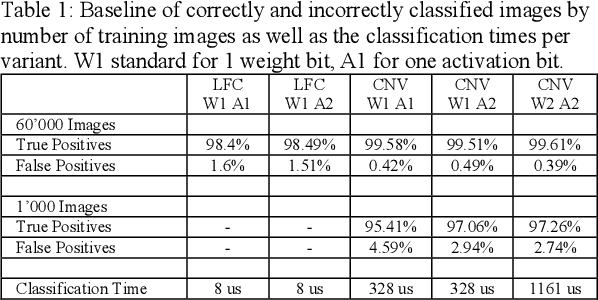

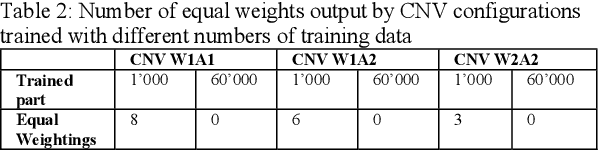

Abstract: This paper describes a set of experiments with neural network classifiers on the MNIST database of digits. The purpose is to investigate naïve implementations of redundant architectures as a first step towards safe and dependable machine learning. We report on a set of measurements using the MNIST database which ultimately serve to underline the expected difficulties in using NN classifiers in safe and dependable systems.

Conceptual Design of Human-Drone Communication in Collaborative Environments

Apr 30, 2020

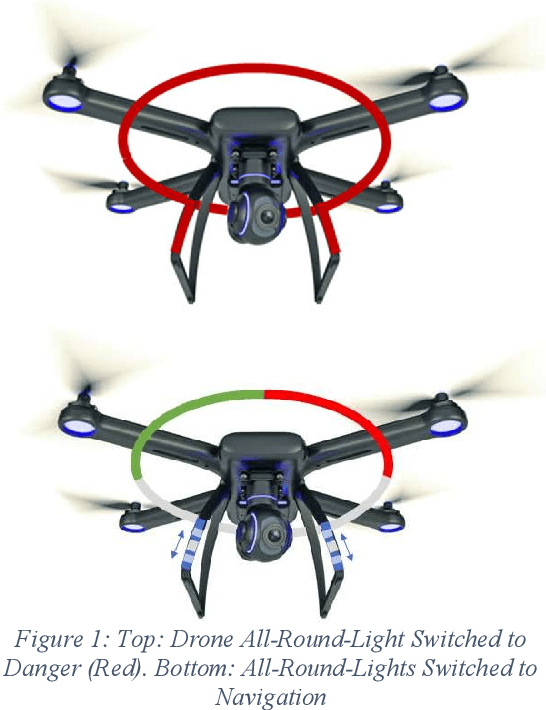

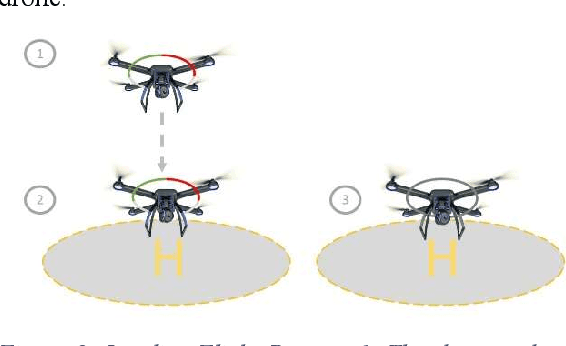

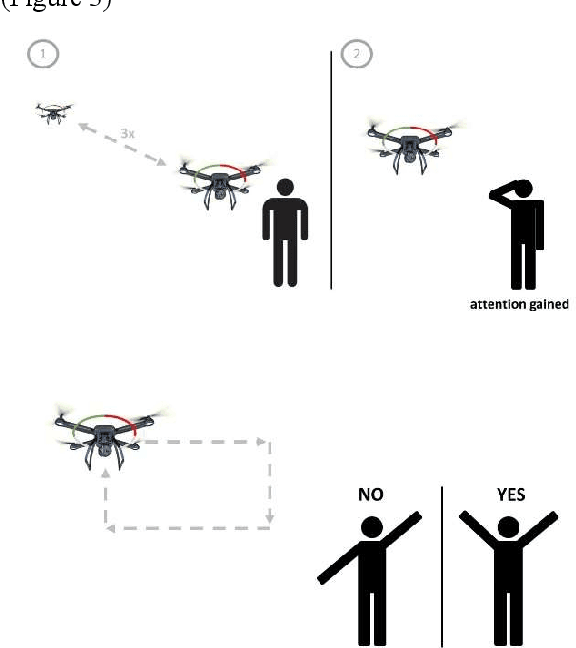

Abstract: Autonomous robots and drones will work collaboratively and cooperatively in tomorrow's industry and agriculture. Before this becomes a reality, some form of standardised communication between man and machine must be established that specifically facilitates communication between autonomous machines and both trained and untrained human actors in the working environment. We present preliminary results on a human-drone and a drone-human language situated in the agricultural industry, where interactions with trained and untrained workers and visitors can be expected. We present basic visual indicators enhanced with flight patterns for drone-human interaction, and human signaling based on aircraft marshaling for human-drone interaction. We discuss preliminary results on image recognition and future work.
