Hamidreza Saffari

More or Less Wrong: A Benchmark for Directional Bias in LLM Comparative Reasoning

Jun 04, 2025

Abstract: Large language models (LLMs) are known to be sensitive to input phrasing, but the mechanisms by which semantic cues shape reasoning remain poorly understood. We investigate this phenomenon in the context of comparative math problems with objective ground truth, revealing a consistent and directional framing bias: logically equivalent questions containing the words "more", "less", or "equal" systematically steer predictions in the direction of the framing term. To study this effect, we introduce MathComp, a controlled benchmark of 300 comparison scenarios, each evaluated under 14 prompt variants across three LLM families. We find that model errors frequently reflect linguistic steering, systematic shifts toward the comparative term present in the prompt. Chain-of-thought prompting reduces these biases, but its effectiveness varies: free-form reasoning is more robust, while structured formats may preserve or reintroduce directional drift. Finally, we show that including demographic identity terms (e.g., "a woman", "a Black person") in input scenarios amplifies directional drift, despite identical underlying quantities, highlighting the interplay between semantic framing and social referents. These findings expose critical blind spots in standard evaluation and motivate framing-aware benchmarks for diagnosing reasoning robustness and fairness in LLMs.

Parkinson's Disease Diagnosis based on Gait Cycle Analysis Through an Interpretable Interval Type-2 Neuro-Fuzzy System

Sep 08, 2021

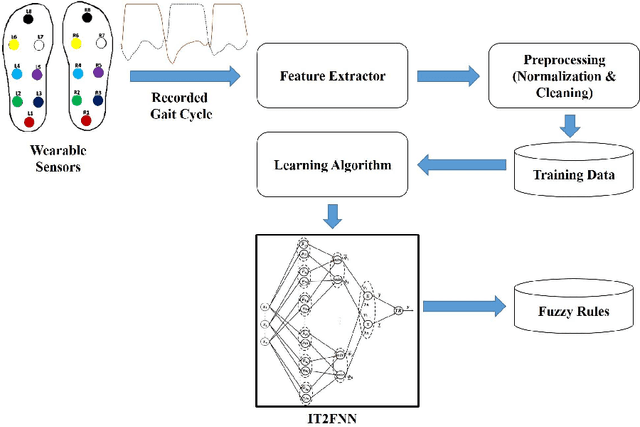

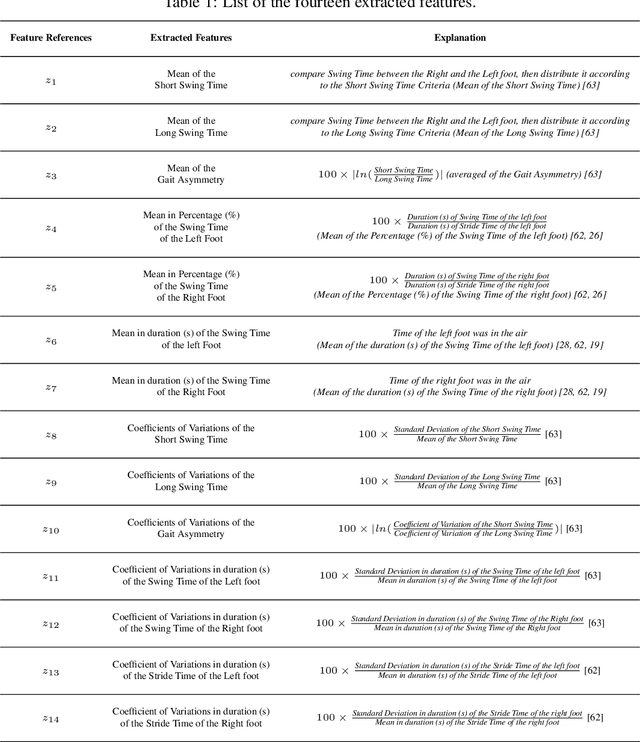

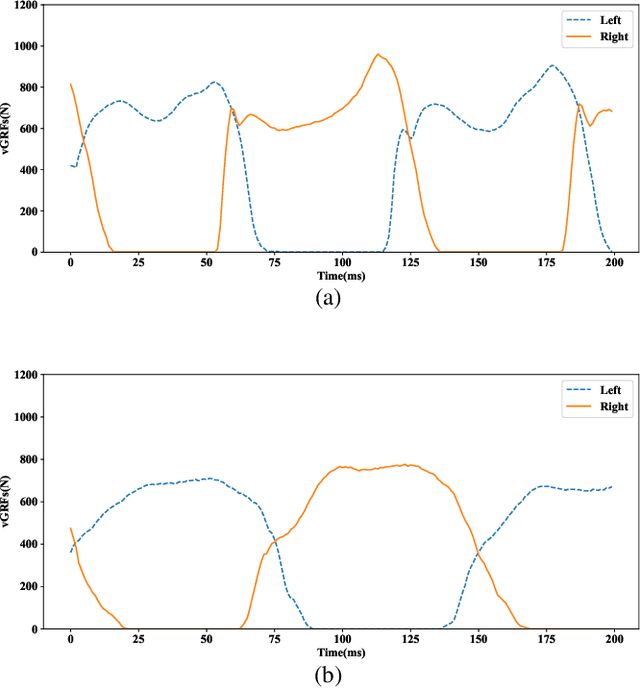

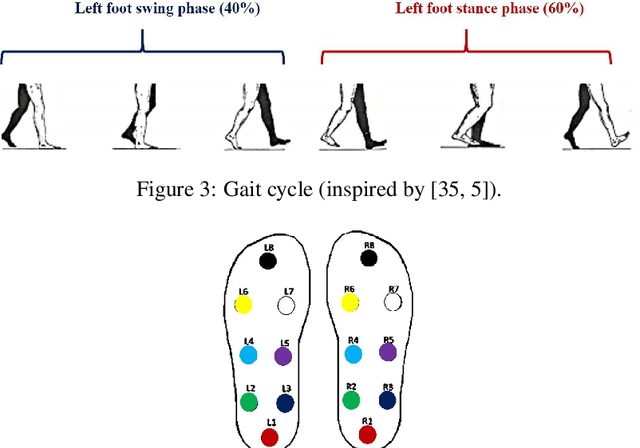

Abstract: In this paper, an interpretable classifier using an interval type-2 fuzzy neural network is presented for detecting patients suffering from Parkinson's Disease (PD) based on gait cycle analysis. The proposed method utilizes clinical features extracted from the vertical Ground Reaction Force (vGRF), measured by 16 wearable sensors placed in the soles of subjects' shoes, and learns interpretable fuzzy rules. Experts can therefore verify the decisions made by the proposed method by investigating the firing strength of the interpretable fuzzy rules. Moreover, experts can use the extracted fuzzy rules for diagnosing patients or adjust them based on their own knowledge. To improve the robustness of the proposed method against uncertainty and noisy sensor measurements, Interval Type-2 Fuzzy Logic is applied. To learn fuzzy rules, two paradigms are proposed: (1) a batch learning approach, based on clustering the available samples, extracts the initial fuzzy rules, and (2) a complementary online learning approach improves the rule base as new labeled samples are encountered. The performance of the method is evaluated for classifying patients and healthy subjects in different conditions, including the presence of noise and the observation of new instances. Moreover, the performance of the model is compared to some previous supervised and unsupervised machine learning approaches. The final Accuracy, Precision, Recall, and F1 Score of the proposed method are 88.74%, 89.41%, 95.10%, and 92.16%, respectively. Finally, the extracted fuzzy sets for each feature are reported.
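As a quick sanity check on the reported metrics, the F1 score can be recomputed from the stated precision and recall using the standard harmonic-mean definition. This is only an illustrative sketch: the function name `f1_score` is our own, and the inputs are the rounded percentages quoted in the abstract, not the authors' raw confusion-matrix counts.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (both given as fractions in [0, 1])."""
    if precision + recall == 0:
        return 0.0  # conventional value when both metrics are zero
    return 2 * precision * recall / (precision + recall)


# Rounded values quoted in the abstract (assumption: used here as fractions).
precision = 0.8941
recall = 0.9510

f1 = f1_score(precision, recall)
print(f"F1 = {100 * f1:.2f}%")
```

The recomputed value comes out at roughly 92.17%, which agrees with the reported 92.16% to within the rounding of the published precision and recall.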
