Giovanni Apruzzese

Concept-based Adversarial Attacks: Tricking Humans and Classifiers Alike

Mar 18, 2022

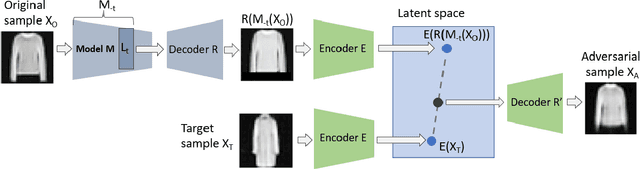

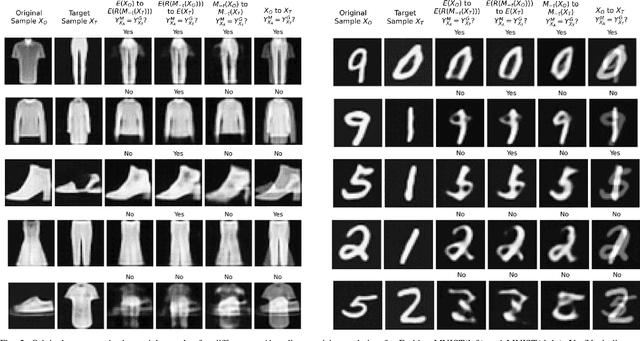

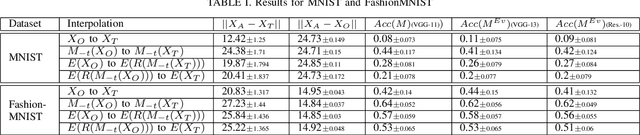

Abstract: We propose to generate adversarial samples by modifying activations of upper layers encoding semantically meaningful concepts. The original sample is shifted towards a target sample, yielding an adversarial sample, by using the modified activations to reconstruct the original sample. A human might (and possibly should) notice differences between the original and the adversarial sample. Depending on the attacker-provided constraints, an adversarial sample can exhibit subtle differences or appear like a "forged" sample from another class. Our approach and goal are in stark contrast to common attacks involving perturbations of single pixels that are not recognizable by humans. Our approach is relevant in, e.g., multi-stage processing of inputs, where both humans and machines are involved in decision-making because invisible perturbations will not fool a human. Our evaluation focuses on deep neural networks. We also show the transferability of our adversarial examples among networks.
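The idea of shifting upper-layer activations towards a target and then reconstructing a sample can be sketched roughly as follows. This is a minimal illustration, not the paper's method: the linear interpolation, the strength parameter `alpha`, and the toy `toy_encode`/`toy_decode` functions are all assumptions standing in for a real network's upper layers and a learned decoder.

```python
from typing import Callable, List

Vector = List[float]


def shift_activations(z_orig: Vector, z_target: Vector, alpha: float) -> Vector:
    """Move the original activations towards the target's by a fraction alpha."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    return [(1.0 - alpha) * o + alpha * t for o, t in zip(z_orig, z_target)]


def concept_attack(
    x_orig: Vector,
    x_target: Vector,
    encode: Callable[[Vector], Vector],
    decode: Callable[[Vector], Vector],
    alpha: float,
) -> Vector:
    """Encode both samples, shift the original's activations, then reconstruct."""
    z_adv = shift_activations(encode(x_orig), encode(x_target), alpha)
    return decode(z_adv)


# Hypothetical stand-ins for a network's upper layers and a decoder.
def toy_encode(x: Vector) -> Vector:
    return [2.0 * v for v in x]


def toy_decode(z: Vector) -> Vector:
    return [0.5 * v for v in z]


x_adv = concept_attack([0.0, 1.0], [1.0, 0.0], toy_encode, toy_decode, alpha=0.25)
x_same = concept_attack([0.0, 1.0], [1.0, 0.0], toy_encode, toy_decode, alpha=0.0)
print(x_adv)
```

In this sketch a small `alpha` corresponds to the "subtle differences" case, while values near 1 approach a "forged" sample resembling the target.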

The Cross-evaluation of Machine Learning-based Network Intrusion Detection Systems

Mar 09, 2022

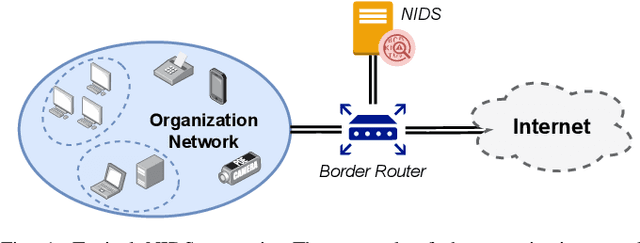

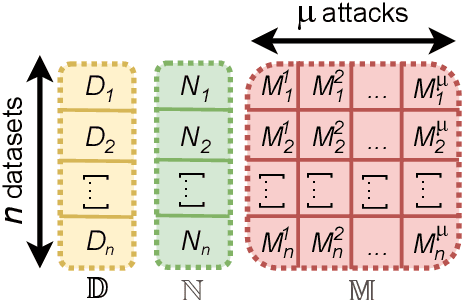

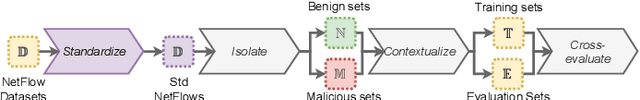

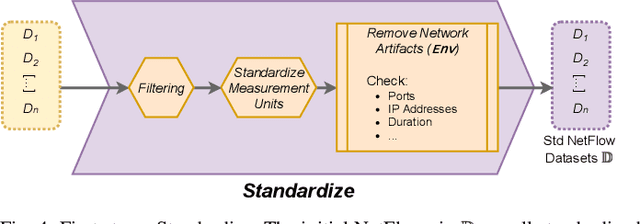

Abstract: Enhancing Network Intrusion Detection Systems (NIDS) with supervised Machine Learning (ML) is tough. ML-NIDS must be trained and evaluated, operations requiring data where benign and malicious samples are clearly labelled. Such labels demand costly expert knowledge, resulting in a lack of real deployments, as well as in papers always relying on the same outdated data. The situation improved recently, as some efforts disclosed their labelled datasets. However, most past works used such datasets as just 'yet another' testbed, overlooking the added potential provided by such availability. In contrast, we promote using such existing labelled data to cross-evaluate ML-NIDS. Such an approach has received only limited attention and, due to its complexity, requires a dedicated treatment. We hence propose the first cross-evaluation model. Our model highlights the broader range of realistic use-cases that can be assessed via cross-evaluations, allowing the discovery of still unknown qualities of state-of-the-art ML-NIDS. For instance, their detection surface can be extended at no additional labelling cost. However, conducting such cross-evaluations is challenging. Hence, we propose the first framework, XeNIDS, for reliable cross-evaluations based on Network Flows. By using XeNIDS on six well-known datasets, we demonstrate the concealed potential, but also the risks, of cross-evaluations of ML-NIDS.
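A cross-evaluation, in its simplest form, trains a detector on one labelled dataset and tests it on every dataset, including the others. The sketch below illustrates only that basic loop and is not XeNIDS: the single-feature threshold detector, the dataset names `net_a` and `net_b`, and all sample values are invented for illustration.

```python
from typing import Dict, List, Tuple

# One sample = (feature value, label), where 1 = malicious and 0 = benign.
Sample = Tuple[float, int]


def fit_threshold(train: List[Sample]) -> float:
    """Toy detector: midpoint between the benign and malicious feature means."""
    benign = [x for x, y in train if y == 0]
    malicious = [x for x, y in train if y == 1]
    return (sum(benign) / len(benign) + sum(malicious) / len(malicious)) / 2.0


def detection_rate(threshold: float, test: List[Sample]) -> float:
    """Fraction of malicious test samples flagged by the threshold."""
    malicious = [x for x, y in test if y == 1]
    detected = sum(1 for x in malicious if x > threshold)
    return detected / len(malicious)


def cross_evaluate(datasets: Dict[str, List[Sample]]) -> Dict[Tuple[str, str], float]:
    """Train on each dataset and test on every dataset (including itself)."""
    results = {}
    for train_name, train in datasets.items():
        threshold = fit_threshold(train)
        for test_name, test in datasets.items():
            results[(train_name, test_name)] = detection_rate(threshold, test)
    return results


# Hypothetical data from two networks.
datasets = {
    "net_a": [(1.0, 0), (2.0, 0), (8.0, 1), (9.0, 1)],
    "net_b": [(1.0, 0), (3.0, 0), (4.0, 1), (6.0, 1)],
}
results = cross_evaluate(datasets)
for pair, rate in results.items():
    print(pair, rate)
```

In this toy data, the detector trained on `net_a` misses half of the attacks in `net_b`, which hints at the kind of risk a cross-evaluation can expose.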

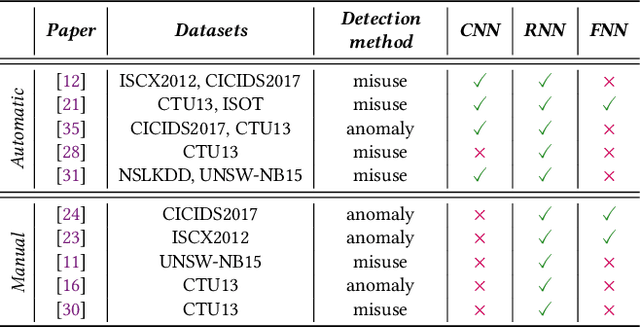

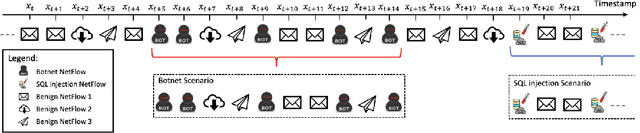

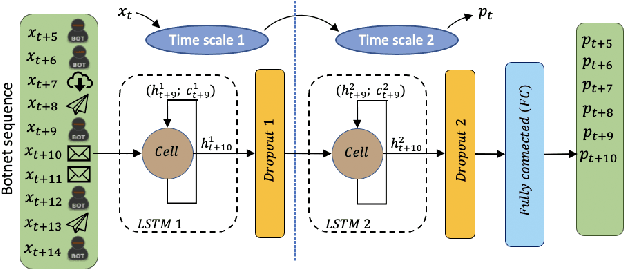

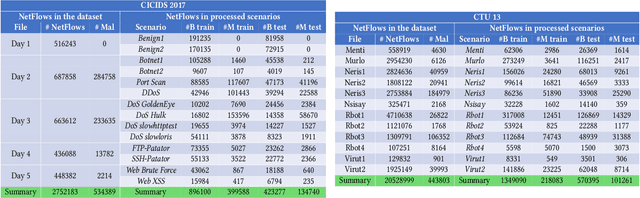

On the Evaluation of Sequential Machine Learning for Network Intrusion Detection

Jun 15, 2021

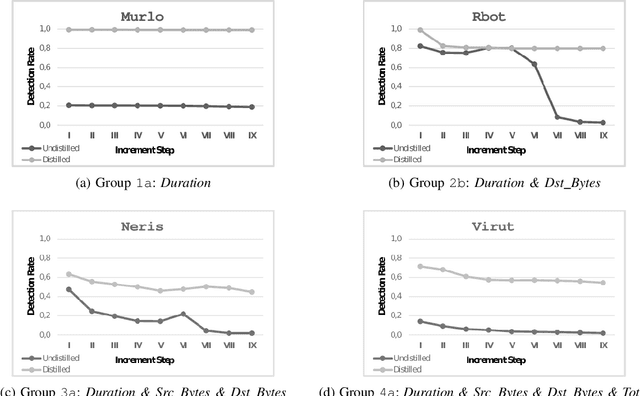

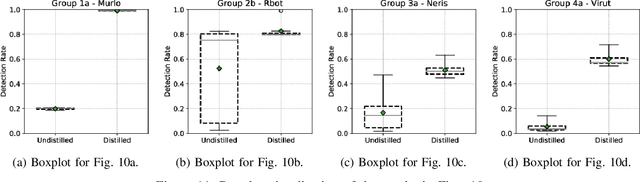

Abstract: Recent advances in deep learning have renewed research interest in machine learning for Network Intrusion Detection Systems (NIDS). Specifically, attention has been given to sequential learning models, due to their ability to extract the temporal characteristics of Network traffic Flows (NetFlows), and use them for NIDS tasks. However, the applications of these sequential models often consist of transferring and adapting methodologies directly from other fields, without an in-depth investigation into how to leverage the specific circumstances of cybersecurity scenarios; moreover, there is a lack of comprehensive studies on sequential models that rely on NetFlow data, which presents significant advantages over traditional full packet captures. We tackle this problem in this paper. We propose a detailed methodology to extract temporal sequences of NetFlows that denote patterns of malicious activities. Then, we apply this methodology to compare the efficacy of sequential learning models against traditional static learning models. In particular, we perform a fair comparison of a 'sequential' Long Short-Term Memory (LSTM) against a 'static' Feedforward Neural Network (FNN) in distinct environments represented by two well-known datasets for NIDS: the CICIDS2017 and the CTU13. Our results highlight that LSTM achieves comparable performance to FNN in the CICIDS2017, with over 99.5% F1-score, while obtaining superior performance in the CTU13, with 95.7% F1-score against 91.5%. This paper thus paves the way for future applications of sequential learning models for NIDS.
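One plausible way to turn NetFlows into temporal sequences for a sequential model is to group flows per host, order them by start time, and slide a fixed-length window over each group. The abstract does not give the paper's actual procedure, so the grouping key (`src_ip`), the `Flow` fields, and the window length below are all assumptions.

```python
from collections import defaultdict
from typing import Dict, List, NamedTuple


class Flow(NamedTuple):
    """Hypothetical minimal NetFlow record."""
    src_ip: str
    start_time: float
    n_bytes: int


def build_sequences(flows: List[Flow], window: int) -> List[List[Flow]]:
    """Group flows by source host, sort by time, and emit sliding windows."""
    by_host: Dict[str, List[Flow]] = defaultdict(list)
    for flow in flows:
        by_host[flow.src_ip].append(flow)

    sequences: List[List[Flow]] = []
    for host_flows in by_host.values():
        host_flows.sort(key=lambda f: f.start_time)
        # Hosts with fewer than `window` flows yield no sequence.
        for i in range(len(host_flows) - window + 1):
            sequences.append(host_flows[i:i + window])
    return sequences


flows = [
    Flow("10.0.0.1", 3.0, 500),
    Flow("10.0.0.1", 1.0, 120),
    Flow("10.0.0.2", 5.0, 900),
    Flow("10.0.0.1", 2.0, 300),
]
sequences = build_sequences(flows, window=2)
times = [[f.start_time for f in seq] for seq in sequences]
print(times)
```

Each resulting window could feed a sequential model such as an LSTM, whereas a static model such as an FNN would see each flow in isolation.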

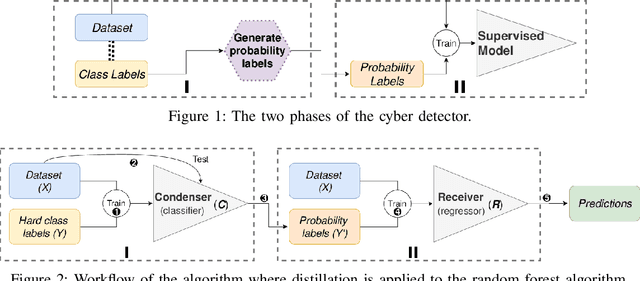

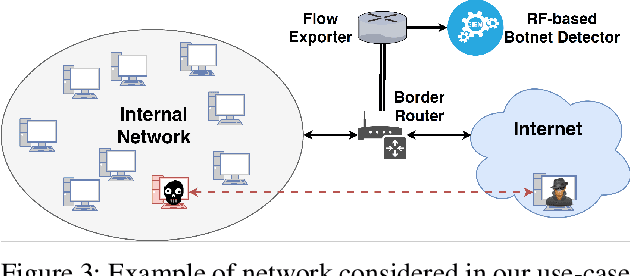

Hardening Random Forest Cyber Detectors Against Adversarial Attacks

Dec 09, 2019

Abstract: Machine learning algorithms are effective in several applications, but they are not as successful when applied to intrusion detection in cyber security. Due to their high sensitivity to training data, cyber detectors based on machine learning are vulnerable to targeted adversarial attacks that involve the perturbation of initial samples. Existing defenses assume unrealistic scenarios; their results are underwhelming in non-adversarial settings; or they can be applied only to machine learning algorithms that perform poorly for cyber security. We present an original methodology for countering adversarial perturbations targeting intrusion detection systems based on random forests. As a practical application, we integrate the proposed defense method into a cyber detector analyzing network traffic. The experimental results on millions of labelled network flows show that the new detector has a twofold value: it outperforms state-of-the-art detectors that are subject to adversarial attacks, and it exhibits robust results in both adversarial and non-adversarial scenarios.
