Gaia Belardinelli

A Logic of General Attention Using Edge-Conditioned Event Models (Extended Version)

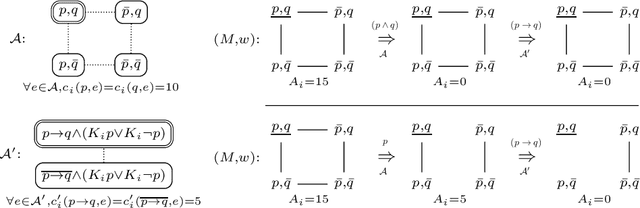

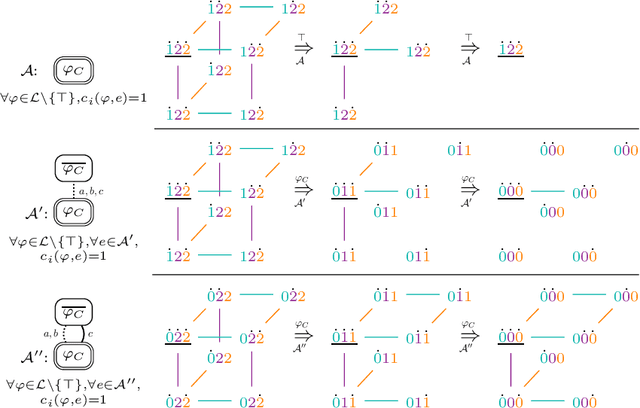

May 20, 2025

Abstract: In this work, we present the first general logic of attention. Attention is a powerful cognitive ability that allows agents to focus on potentially complex information, such as logically structured propositions, higher-order beliefs, or what other agents pay attention to. This ability is a strength, as it helps to ignore what is irrelevant, but it can also introduce biases when some types of information or agents are systematically ignored. Existing dynamic epistemic logics for attention cannot model such complex attention scenarios, as they only model attention to atomic formulas. Additionally, such logics quickly become cumbersome, as their size grows exponentially in the number of agents and announced literals. Here, we introduce a logic that overcomes both limitations. First, we generalize edge-conditioned event models, which we show to be as expressive as standard event models yet exponentially more succinct (generalizing both standard event models and generalized arrow updates). Second, we extend attention to arbitrary formulas, allowing agents to also attend to other agents' beliefs or attention. Our work treats attention as a modality, like belief or awareness. We introduce attention principles that impose closure properties on that modality and that can be used in its axiomatization. Throughout, we illustrate our framework with examples of AI agents reasoning about human attentional biases, demonstrating how such agents can discover attentional biases.

Attention! Dynamic Epistemic Logic Models of (In)attentive Agents

Mar 23, 2023

Abstract: Attention is the crucial cognitive ability that limits and selects what information we observe. Previous work by Bolander et al. (2016) proposes a model of attention based on dynamic epistemic logic (DEL) where agents are either fully attentive or not attentive at all. While introducing the realistic feature that inattentive agents believe nothing happens, the model does not represent the most essential aspect of attention: its selectivity. Here, we propose a generalization that allows for paying attention to subsets of atomic formulas. We introduce the corresponding logic for propositional attention, and show its axiomatization to be sound and complete. We then extend the framework to account for inattentive agents that, instead of assuming nothing happens, may default to a specific truth-value of what they failed to attend to (a sort of prior concerning the unattended atoms). This feature allows for a more cognitively plausible representation of the inattentional blindness phenomenon, where agents end up with false beliefs due to their failure to attend to conspicuous but unexpected events. Both versions of the model define attention-based learning through appropriate DEL event models based on a few clear edge principles. While the size of such event models grows exponentially both with the number of agents and the number of atoms, we introduce a new logical language for describing event models syntactically and show that, using this language, our event models can be represented linearly in the number of agents and atoms. Furthermore, representing our event models using this language is achieved by a straightforward formalisation of the aforementioned edge principles.
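The abstract above describes learning through DEL event models. As a hedged illustration only, the sketch below implements the standard DEL product update (preconditions only, no postconditions). It does not implement the paper's attention atoms or edge principles. It uses the update to reproduce, in simplified form, the all-or-nothing behaviour the abstract attributes to Bolander et al. (2016): an attentive agent learns an announced fact, while an inattentive agent believes nothing happened. The dict encoding, the formula tags (`"top"`, `"atom"`, `"not"`, `"and"`, `"B"`), and the function names `holds` and `product_update` are hypothetical choices made for this example.

```python
"""Minimal sketch of standard DEL product update (no postconditions).

Assumptions: models are plain dicts; formulas are nested tuples using the
hypothetical tags "top", "atom", "not", "and", "B" (belief of an agent).
"""


def holds(model, world, formula):
    """Evaluate a formula at a world of a Kripke model."""
    tag = formula[0]
    if tag == "top":
        return True
    if tag == "atom":
        return formula[1] in model["val"][world]
    if tag == "not":
        return not holds(model, world, formula[1])
    if tag == "and":
        return holds(model, world, formula[1]) and holds(model, world, formula[2])
    if tag == "B":  # ("B", agent, phi): phi holds in every agent-accessible world
        agent, phi = formula[1], formula[2]
        return all(holds(model, v, phi)
                   for (u, v) in model["rel"][agent] if u == world)
    raise ValueError(f"unknown formula tag: {tag!r}")


def product_update(model, event_model):
    """Return the product update of a Kripke model with an event model."""
    # Keep only pairs (w, e) where w satisfies the precondition of e.
    worlds = {(w, e)
              for w in model["worlds"]
              for e in event_model["events"]
              if holds(model, w, event_model["pre"][e])}
    # (w, e) R_a (v, f) iff w R_a v in the model and e Q_a f in the event model.
    rel = {agent: {((w, e), (v, f))
                   for (w, e) in worlds
                   for (v, f) in worlds
                   if (w, v) in model["rel"][agent]
                   and (e, f) in event_model["rel"][agent]}
           for agent in model["rel"]}
    # Valuations are inherited from the original world.
    val = {(w, e): model["val"][w] for (w, e) in worlds}
    return {"worlds": worlds, "rel": rel, "val": val}


# Initial model: p is true at w1, false at w2; neither a nor b knows which.
all_pairs = {(u, v) for u in ("w1", "w2") for v in ("w1", "w2")}
M = {
    "worlds": {"w1", "w2"},
    "rel": {"a": set(all_pairs), "b": set(all_pairs)},
    "val": {"w1": frozenset({"p"}), "w2": frozenset()},
}

# Event model: p is revealed to attentive agent a; inattentive agent b
# believes the trivial event "skip" (nothing happens) took place.
E = {
    "events": {"e", "skip"},
    "rel": {"a": {("e", "e"), ("skip", "skip")},
            "b": {("e", "skip"), ("skip", "skip")}},
    "pre": {"e": ("atom", "p"), "skip": ("top",)},
}

U = product_update(M, E)
actual = ("w1", "e")
p = ("atom", "p")
print(holds(U, actual, ("B", "a", p)))                       # True
print(holds(U, actual, ("B", "b", p)))                       # False
print(holds(U, actual, ("B", "b", ("not", ("B", "a", p)))))  # True
```

At the actual world, a believes p and b does not. In addition, b holds the false higher-order belief that a has not learned p. This two-event model is fixed in size. The exponential growth discussed in the abstract concerns the paper's attention-based event models, which this sketch does not reproduce.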

Awareness Logic: Kripke Lattices as a Middle Ground between Syntactic and Semantic Models

Jun 24, 2021

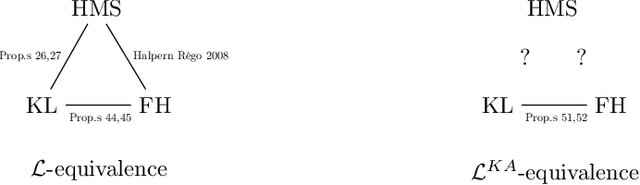

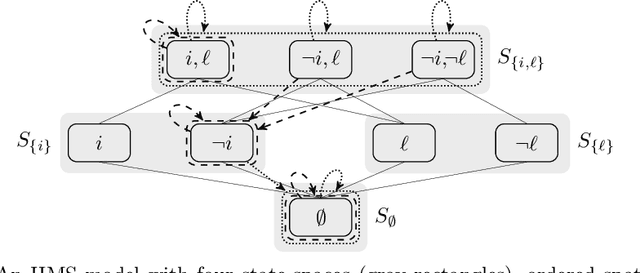

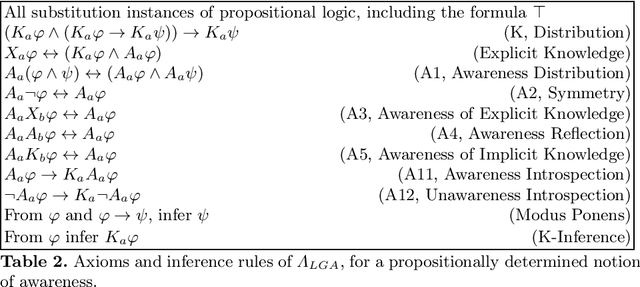

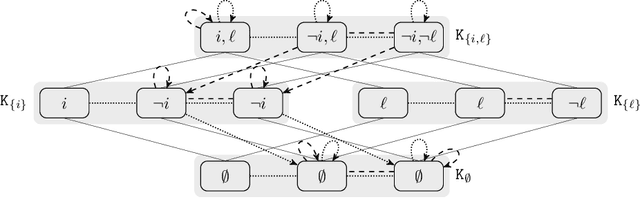

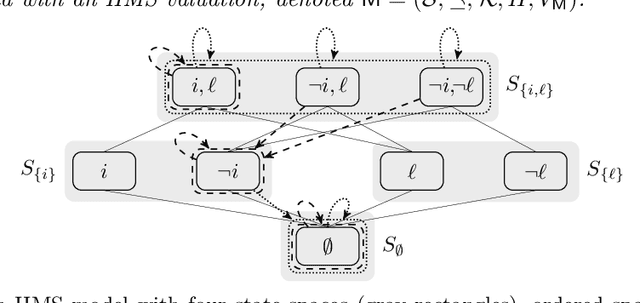

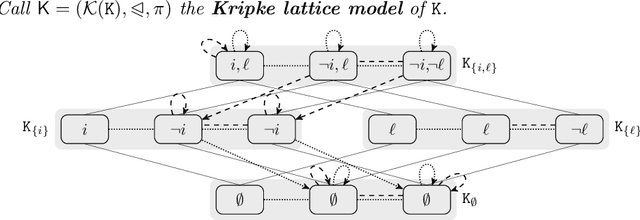

Abstract: The literature on awareness modeling includes both syntax-free and syntax-based frameworks. Heifetz, Meier & Schipper (HMS) propose a lattice model of awareness that is syntax-free. While their lattice approach is elegant and intuitive, it precludes the simple option of relying on formal language to induce lattices, and does not explicitly distinguish uncertainty from unawareness. Contra this, the most prominent syntax-based solution, the Fagin-Halpern (FH) model, accounts for this distinction and offers a simple representation of awareness, but lacks the intuitiveness of the lattice structure. Here, we combine these two approaches by providing a lattice of Kripke models, induced by atom subset inclusion, in which uncertainty and unawareness are separate. We show our model to be equivalent to both the HMS and FH models by defining transformations between them which preserve satisfaction of formulas of a language for explicit knowledge, and obtain completeness through our and HMS' results. Lastly, we prove that the Kripke lattice model is also equivalent to the FH model (when awareness is propositionally determined) with respect to the language of the Logic of General Awareness, for which the FH model was originally proposed.

Epistemic Planning with Attention as a Bounded Resource

May 20, 2021

Abstract: Where information grows abundant, attention becomes a scarce resource. As a result, agents must plan wisely how to allocate their attention in order to achieve epistemic efficiency. Here, we present a framework for multi-agent epistemic planning with attention, based on Dynamic Epistemic Logic (DEL, a powerful formalism for epistemic planning). We identify the framework as a fragment of standard DEL, and consider its plan existence problem. While this problem is undecidable in the general case, we show that all of its instances are decidable when attention is required for learning.

Awareness Logic: A Kripke-based Rendition of the Heifetz-Meier-Schipper Model

Dec 23, 2020

Abstract: Heifetz, Meier and Schipper (HMS) present a lattice model of awareness. The HMS model is syntax-free, which precludes the simple option of relying on formal language to induce lattices, and it represents uncertainty and unawareness with one entangled construct, making it difficult to assess the properties of either. Here, we present a model based on a lattice of Kripke models, induced by atom subset inclusion, in which uncertainty and unawareness are separate. We show the models to be equivalent by defining transformations between them which preserve formula satisfaction, and obtain completeness through our and HMS' results.

* 18 pages, 2 figures, proceedings of DaLi conference 2020
