Eui-Jin Kim

A Large Language Model for Feasible and Diverse Population Synthesis

May 07, 2025

Abstract: Generating a synthetic population that is both feasible and diverse is crucial for ensuring the validity of downstream activity schedule simulation in activity-based models (ABMs). While deep generative models (DGMs), such as variational autoencoders and generative adversarial networks, have been applied to this task, they often struggle to balance the inclusion of rare but plausible combinations (i.e., sampling zeros) with the exclusion of implausible ones (i.e., structural zeros). To improve feasibility while maintaining diversity, we propose a fine-tuning method for large language models (LLMs) that explicitly controls the autoregressive generation process through topological orderings derived from a Bayesian Network (BN). Experimental results show that our hybrid LLM-BN approach outperforms both traditional DGMs and proprietary LLMs (e.g., ChatGPT-4o) with few-shot learning. Specifically, our approach achieves approximately 95% feasibility, significantly higher than the ~80% observed in DGMs, while maintaining comparable diversity, making it well-suited for practical applications. Importantly, the method is based on a lightweight open-source LLM, enabling fine-tuning and inference on standard personal computing environments. This makes the approach cost-effective and scalable for large-scale applications, such as synthesizing populations in megacities, without relying on expensive infrastructure. By initiating the ABM pipeline with high-quality synthetic populations, our method improves overall simulation reliability and reduces downstream error propagation. The source code for these methods is available for research and practical application.
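The abstract does not spell out how the BN ordering is fed to the LLM, so the following is only a minimal sketch of the general idea: derive a topological order from a BN's parent sets and serialize each person's attributes in that order, so that an autoregressive model would generate every attribute after its parents. The attribute names, the `PARENTS` structure, and the `"name is value"` text format are all hypothetical illustrations, not the authors' implementation.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical BN structure (assumption): attribute -> set of parent attributes.
PARENTS = {
    "age": set(),
    "employment": {"age"},
    "license": {"age"},
    "income": {"employment"},
    "car_ownership": {"license", "income"},
}


def attribute_order(parents):
    """Return one topological order: every attribute appears after its parents."""
    return list(TopologicalSorter(parents).static_order())


def serialize(record, order):
    """Render a record as text, listing attributes in the given order.

    The "name is value" template is an illustrative assumption.
    """
    return ", ".join(f"{name} is {record[name]}" for name in order)


ORDER = attribute_order(PARENTS)

person = {
    "car_ownership": "yes",
    "income": "medium",
    "age": "30-39",
    "license": "yes",
    "employment": "full-time",
}
TEXT = serialize(person, ORDER)
print(TEXT)
```

A BN generally admits several valid topological orders; this sketch picks whichever one `static_order()` returns, and only the parent-before-child property should be relied on.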

A Deep Generative Model for Feasible and Diverse Population Synthesis

Aug 01, 2022

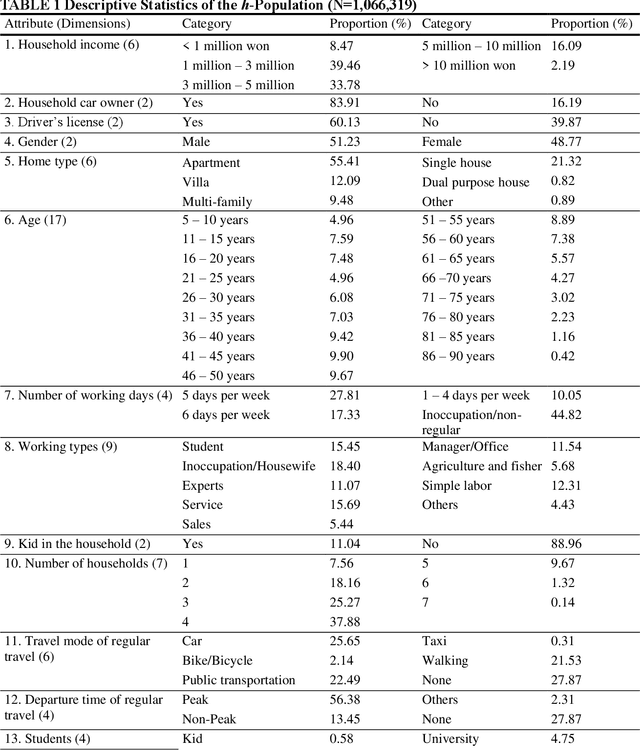

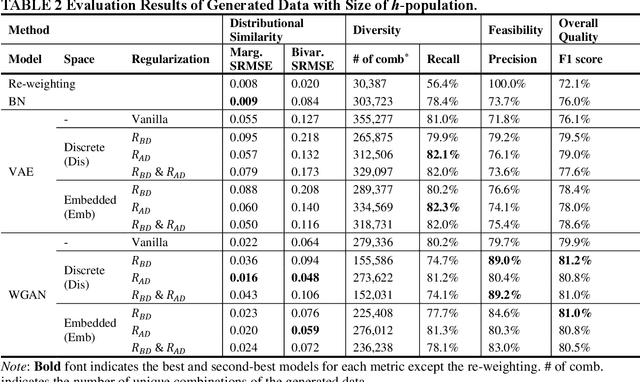

Abstract: An ideal synthetic population, a key input to activity-based models, mimics the distribution of the individual- and household-level attributes in the actual population. Since the entire population's attributes are generally unavailable, household travel survey (HTS) samples are used for population synthesis. Synthesizing a population by directly sampling from the HTS ignores the attribute combinations that are unobserved in the HTS samples but exist in the population, called 'sampling zeros'. A deep generative model (DGM) can potentially synthesize the sampling zeros, but at the expense of generating 'structural zeros' (i.e., infeasible attribute combinations that do not exist in the population). This study proposes a novel method to minimize structural zeros while preserving sampling zeros. Two regularizations are devised to customize the training of the DGM and applied to a generative adversarial network (GAN) and a variational autoencoder (VAE). The adopted metrics for feasibility and diversity of the synthetic population indicate the capability of generating sampling and structural zeros: fewer generated structural zeros indicate higher feasibility, while fewer generated sampling zeros indicate lower diversity. Results show that the proposed regularizations achieve considerable performance improvement in feasibility and diversity of the synthesized population over traditional models. The proposed VAE additionally generated 23.5% of the population ignored by the sample with 79.2% precision (i.e., a 20.8% structural zero rate), while the proposed GAN generated 18.3% of the ignored population with 89.0% precision. The proposed improvement in DGM generates a more feasible and diverse synthetic population, which is critical for the accuracy of an activity-based model.
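To make the two kinds of zeros concrete, here is a small sketch that computes a precision-style feasibility score and a recall-style diversity score over unique attribute combinations. The function name `zero_metrics` and the set-based definitions are assumptions for illustration; the paper's exact metrics (for example, whether they are weighted by record counts) may differ.

```python
def zero_metrics(population, sample, synthetic):
    """Compare unique attribute combinations across three data sets.

    Assumed definitions (illustrative only):
      - sampling zeros: combinations in the population but not in the sample
      - structural zeros: generated combinations absent from the population
      - precision: share of generated combinations that exist in the population
      - recall: share of sampling zeros that the generator recovered
    """
    pop, samp, syn = set(population), set(sample), set(synthetic)

    sampling_zeros = pop - samp        # feasible but unobserved in the sample
    structural_zeros = syn - pop       # generated but infeasible
    recovered = syn & sampling_zeros   # sampling zeros the generator produced

    precision = len(syn & pop) / len(syn) if syn else 0.0
    recall = len(recovered) / len(sampling_zeros) if sampling_zeros else 0.0
    return {
        "precision": precision,
        "structural_zero_rate": len(structural_zeros) / len(syn) if syn else 0.0,
        "sampling_zero_recall": recall,
    }


# Toy data (made up): each tuple is (age group, car ownership).
population = [("child", "none"), ("adult", "car"), ("adult", "none"), ("senior", "car")]
sample = [("adult", "car"), ("child", "none")]
synthetic = [("adult", "car"), ("adult", "none"), ("child", "car")]

METRICS = zero_metrics(population, sample, synthetic)
print(METRICS)
```

In this toy case the generator recovers one of the two sampling zeros, and one of its three unique combinations (a child owning a car) is a structural zero.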
