Dennis H. Murphree

Foundation Models in Dermatopathology: Skin Tissue Classification

Oct 24, 2025

Abstract: The rapid generation of whole-slide images (WSIs) in dermatopathology necessitates automated methods for efficient processing and accurate classification. This study evaluates the performance of two foundation models, UNI and Virchow2, as feature extractors for classifying WSIs into three diagnostic categories: melanocytic, basaloid, and squamous lesions. Patch-level embeddings were aggregated into slide-level features using a mean-aggregation strategy and subsequently used to train multiple machine learning classifiers, including logistic regression, gradient-boosted trees, and random forest models. Performance was assessed using precision, recall, true positive rate, false positive rate, and the area under the receiver operating characteristic curve (AUROC) on the test set. Results demonstrate that patch-level features extracted using Virchow2 outperformed those extracted via UNI across most slide-level classifiers, with logistic regression achieving the highest accuracy (90%) for Virchow2, though the difference was not statistically significant. The study also explored data augmentation techniques and image normalization to enhance model robustness and generalizability. The mean-aggregation approach provided reliable slide-level feature representations. All experimental results and metrics were tracked and visualized using WandB.ai, facilitating reproducibility and interpretability. This research highlights the potential of foundation models for automated WSI classification, providing a scalable and effective approach for dermatopathological diagnosis while paving the way for future advancements in slide-level representation learning.
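
The mean-aggregation step described in this abstract can be illustrated with a minimal sketch. It is not the authors' code: the function name `mean_aggregate`, the 4-dimensional toy embeddings, and the use of plain Python lists are all assumptions made for illustration. Real UNI or Virchow2 embeddings are much higher-dimensional and would normally be held in arrays or tensors.

```python
from statistics import fmean
from typing import List, Sequence


def mean_aggregate(patch_embeddings: Sequence[Sequence[float]]) -> List[float]:
    """Average patch-level embeddings into one slide-level feature vector.

    Hypothetical helper for illustration; not taken from the paper.
    """
    if not patch_embeddings:
        raise ValueError("a slide needs at least one patch embedding")
    dim = len(patch_embeddings[0])
    if any(len(patch) != dim for patch in patch_embeddings):
        raise ValueError("all patch embeddings must share one dimension")
    # zip(*...) groups values by embedding dimension, so each column
    # holds one dimension across all patches; fmean averages it.
    return [fmean(column) for column in zip(*patch_embeddings)]


# Toy slide: three patches with made-up 4-dimensional embeddings.
slide_patches = [
    [1.0, 0.0, 2.0, 4.0],
    [3.0, 2.0, 2.0, 0.0],
    [2.0, 4.0, 2.0, 2.0],
]
slide_feature = mean_aggregate(slide_patches)
print(slide_feature)
```

The resulting vector has the same length as a single patch embedding regardless of how many patches the slide contains, which is what lets fixed-input classifiers such as logistic regression be trained on slides of different sizes.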

Transfer Learning for Melanoma Detection: Participation in ISIC 2017 Skin Lesion Classification Challenge

Mar 15, 2017

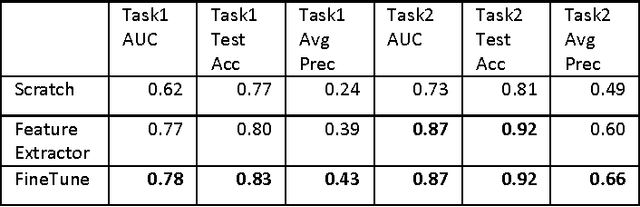

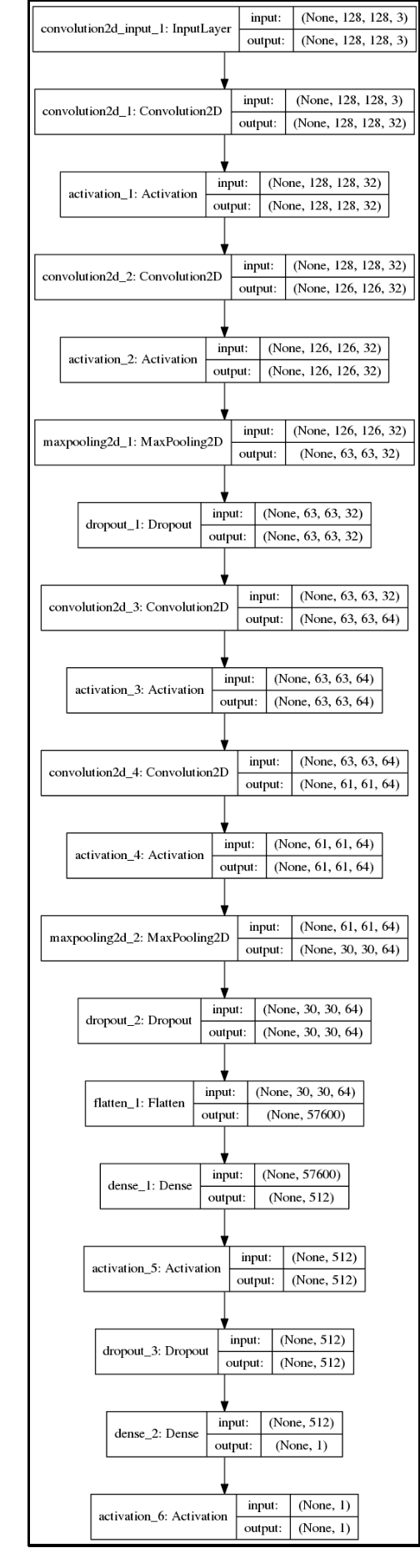

Abstract: This manuscript describes our participation in the International Skin Imaging Collaboration's 2017 Skin Lesion Analysis Towards Melanoma Detection competition. We participated in Part 3: Lesion Classification. The two stated goals of this binary image classification challenge were to distinguish between (a) melanoma and (b) nevus and seborrheic keratosis, followed by distinguishing between (a) seborrheic keratosis and (b) nevus and melanoma. We chose a deep neural network approach with a transfer learning strategy, using a pre-trained Inception V3 network both as a feature extractor to provide input for a multi-layer perceptron and as the basis for fine-tuning an augmented Inception network. This approach yielded validation set AUCs of 0.84 on the second task and 0.76 on the first task, for an average AUC of 0.80. Unfortunately, we joined the competition late, and we look forward to improving on these results.
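
To make the reported metric concrete, the sketch below computes a per-task AUC from its pairwise-ranking definition and averages it over two binary tasks. It is an illustration only, not the challenge's scoring code: the function name `auroc` and the labels and scores are invented, and only the last line uses the values reported in the abstract.

```python
from statistics import fmean
from typing import Sequence


def auroc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """AUC as the probability that a random positive outranks a random negative.

    Ties count as half a win. Hypothetical helper for illustration.
    """
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("both classes must be present")
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in positives
        for n in negatives
    )
    return wins / (len(positives) * len(negatives))


# Made-up predictions for two binary tasks (not challenge data).
task1_auc = auroc([1, 1, 0, 0], [0.9, 0.4, 0.5, 0.1])
task2_auc = auroc([0, 1, 0, 1], [0.2, 0.8, 0.3, 0.6])
toy_mean_auc = fmean([task1_auc, task2_auc])

# Averaging the two values reported in the abstract.
reported_mean_auc = fmean([0.76, 0.84])
print(task1_auc, task2_auc, toy_mean_auc, round(reported_mean_auc, 2))
```

The pairwise form is quadratic in the number of samples, so it suits small illustrations; library implementations typically use a sort-based computation instead.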
