David Zucker

Deeply Explainable Artificial Neural Network

May 10, 2025

Abstract: While deep learning models have demonstrated remarkable success in numerous domains, their black-box nature remains a significant limitation, especially in critical fields such as medical image analysis and inference. Existing explainability methods, such as SHAP, LIME, and Grad-CAM, are typically applied post hoc, adding computational overhead and sometimes producing inconsistent or ambiguous results. In this paper, we present the Deeply Explainable Artificial Neural Network (DxANN), a novel deep learning architecture that embeds explainability ante hoc, directly into the training process. Unlike conventional models that require external interpretation methods, DxANN is designed to produce per-sample, per-feature explanations as part of the forward pass. Built on a flow-based framework, it enables both accurate predictions and transparent decision-making, and is particularly well-suited for image-based tasks. While our focus is on medical imaging, the DxANN architecture is readily adaptable to other data modalities, including tabular and sequential data. DxANN marks a step forward toward intrinsically interpretable deep learning, offering a practical solution for applications where trust and accountability are essential.

Does Your Phone Know Your Touch?

Sep 10, 2018

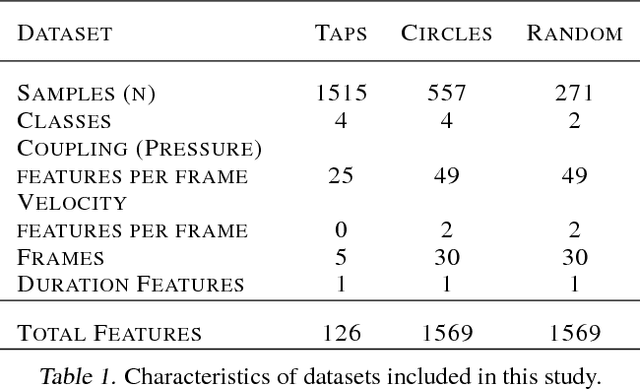

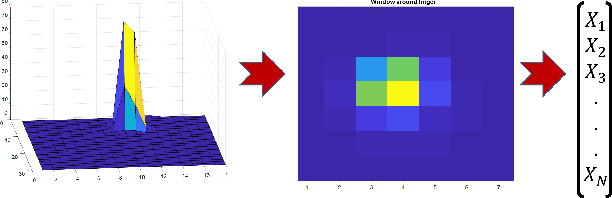

Abstract: This paper explores supervised techniques for continuous anomaly detection from biometric touch screen data. A capacitive sensor array mimicking a touch screen was used to collect touch and swipe gestures from participants. The gestures are recorded over fixed segments of time, with position and force measured for each gesture. Support Vector Machine, Logistic Regression, and Gaussian mixture models were tested to learn individual touch patterns. Test results showed true negative and true positive rates above 95% for all gesture types, with logistic regression models far outperforming the other methods. A more expansive and varied data collection over longer periods of time is needed to determine the practical usefulness of these results.
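The evaluation described in this abstract, training a per-user classifier on gesture features and scoring it by true positive and true negative rates, can be sketched roughly as follows. This is a minimal, dependency-free illustration under stated assumptions, not the paper's pipeline: the two standardized gesture features, the synthetic Gaussian data, the label convention (1 = device owner, 0 = another user) and every helper name (`train_logistic`, `tp_tn_rates`, `synthetic_gestures`, `demo`) are hypothetical, and the rates it prints say nothing about the reported results.

```python
import math
import random


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def score(w, b, x):
    # Linear score w.x + b for one gesture feature vector.
    return sum(wj * xj for wj, xj in zip(w, x)) + b


def train_logistic(X, y, lr=0.1, epochs=300):
    # Batch gradient descent on the mean logistic (cross-entropy) loss.
    w = [0.0] * len(X[0])
    b = 0.0
    m = len(X)
    for _ in range(epochs):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(score(w, b, xi)) - yi
            for j, xj in enumerate(xi):
                grad_w[j] += err * xj
            grad_b += err
        w = [wj - lr * gj / m for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / m
    return w, b


def predict(w, b, X, threshold=0.5):
    # Assumed convention: 1 = accepted as the owner, 0 = flagged as anomalous.
    return [1 if sigmoid(score(w, b, x)) >= threshold else 0 for x in X]


def tp_tn_rates(y_true, y_pred):
    # True positive rate: fraction of owner gestures accepted.
    # True negative rate: fraction of other users' gestures rejected.
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    pos = sum(y_true)
    neg = len(y_true) - pos
    return tp / pos, tn / neg


def synthetic_gestures(rng, n, center, spread=0.2):
    # Hypothetical standardized per-gesture features (e.g. mean force, duration).
    return [[rng.gauss(c, spread) for c in center] for _ in range(n)]


def demo(seed=0, n=50):
    # Illustrative synthetic data only; not data from the study.
    rng = random.Random(seed)
    owner, others = (1.0, 1.0), (-1.0, -1.0)
    X_train = synthetic_gestures(rng, n, owner) + synthetic_gestures(rng, n, others)
    X_test = synthetic_gestures(rng, n, owner) + synthetic_gestures(rng, n, others)
    y = [1] * n + [0] * n
    w, b = train_logistic(X_train, y)
    return tp_tn_rates(y, predict(w, b, X_test))


if __name__ == "__main__":
    tpr, tnr = demo()
    print(f"true positive rate: {tpr:.2f}, true negative rate: {tnr:.2f}")
```

Only logistic regression is sketched because the abstract reports it performed best; an SVM, or a per-user Gaussian mixture density with a rejection threshold, would slot in where `train_logistic` and `predict` sit, but those are omitted to keep the sketch free of third-party dependencies.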
