Dario Izzo

Symbolic Regression for Space Applications: Differentiable Cartesian Genetic Programming Powered by Multi-objective Memetic Algorithms

Jun 13, 2022

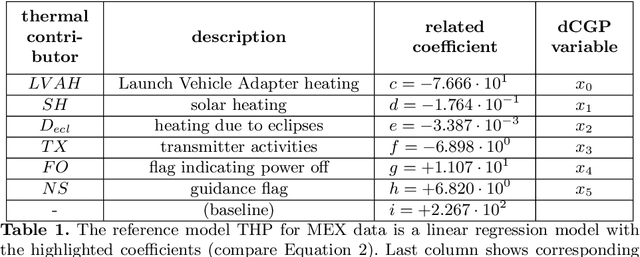

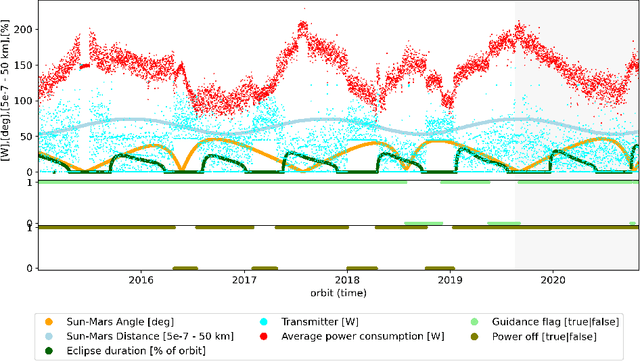

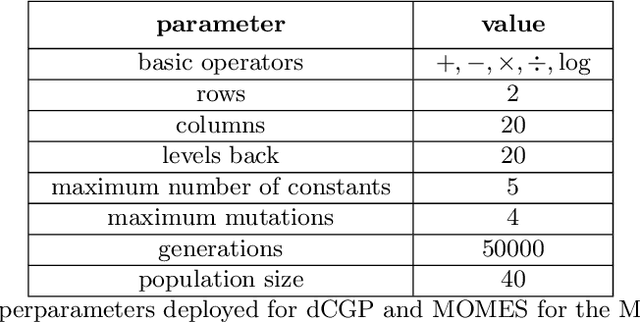

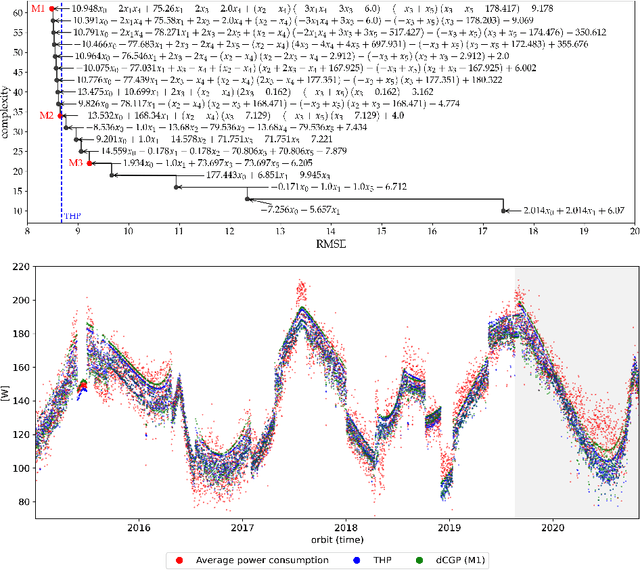

Abstract: Interpretable regression models are important for many application domains, as they allow experts to understand relations between variables from sparse data. Symbolic regression addresses this issue by searching the space of all possible free-form equations that can be constructed from elementary algebraic functions. While explicit mathematical functions can be rediscovered this way, the determination of unknown numerical constants during search has been an often neglected issue. We propose a new multi-objective memetic algorithm that exploits a differentiable Cartesian Genetic Programming encoding to learn constants during evolutionary loops. We show that this approach is competitive with or outperforms machine-learned black-box regression models or hand-engineered fits for two applications from space: the Mars Express thermal power estimation and the determination of the age of stars by gyrochronology.
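As a rough illustration of what "learning constants" inside a symbolic expression can mean, the sketch below refines the two constants of a fixed, hypothetical expression `a*sin(x) + b` by Newton steps on the mean squared error. The expression, the function name `fit_constants_newton`, and the choice of a Newton update are assumptions made for this example; the derivatives are written by hand here, whereas a differentiable encoding would supply them automatically. It is not the algorithm from the paper.

```python
import numpy as np

def fit_constants_newton(x, y, n_steps=5):
    """Refine constants (a, b) of the fixed expression a*sin(x) + b.

    Hypothetical example: the expression structure is assumed to be given
    (e.g. by an evolutionary search); only the constants are tuned here.
    """
    c = np.zeros(2)  # initial guess for (a, b)
    # Partial derivatives of the model with respect to a and b.
    basis = np.stack([np.sin(x), np.ones_like(x)], axis=1)
    for _ in range(n_steps):
        residual = basis @ c - y
        grad = 2.0 * basis.T @ residual / len(x)   # gradient of the MSE
        hess = 2.0 * basis.T @ basis / len(x)      # Hessian of the MSE
        c = c - np.linalg.solve(hess, grad)        # Newton update
    return c

# Synthetic data generated from known constants.
x = np.linspace(0.0, 6.0, 50)
y = 2.5 * np.sin(x) - 1.0
constants = fit_constants_newton(x, y)
```

Because this toy model is linear in its constants, a single Newton step already lands on the least-squares solution; expressions that are nonlinear in their constants would generally need several steps and a reasonable starting point.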

The Fellowship of the Dyson Ring: ACT&Friends' Results and Methods for GTOC 11

May 23, 2022

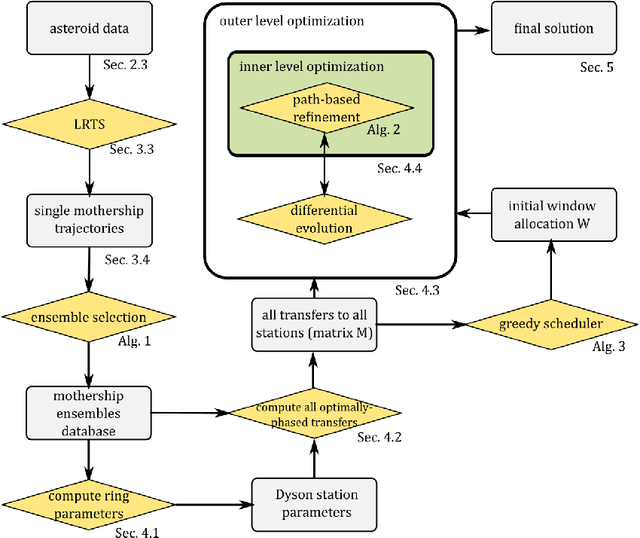

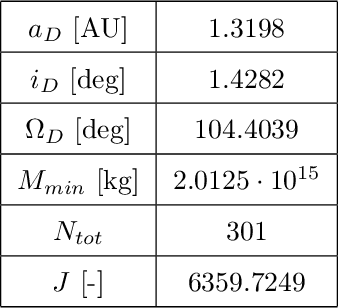

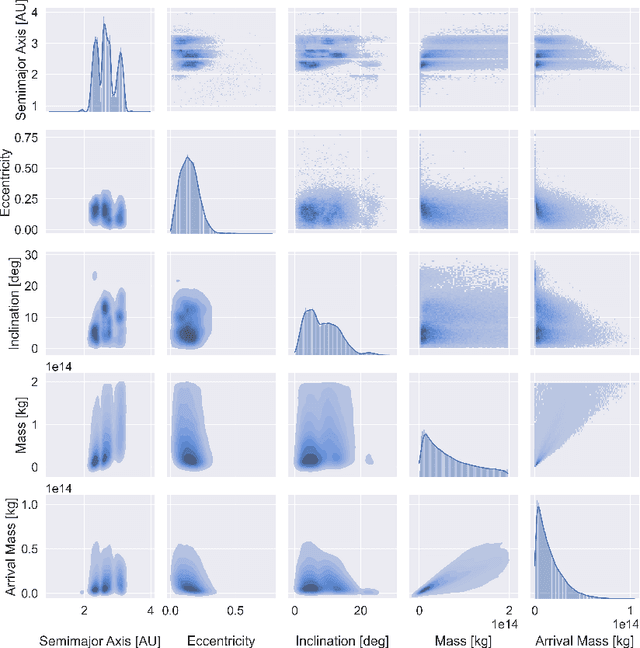

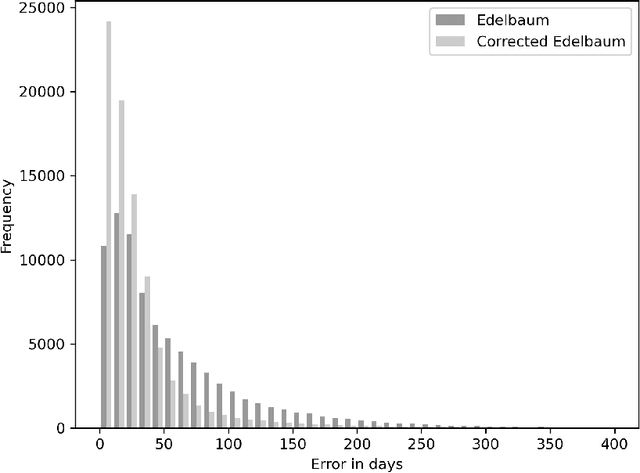

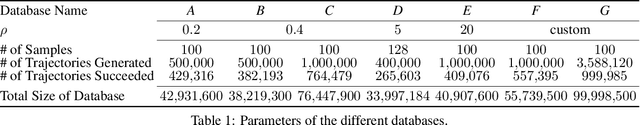

Abstract: Dyson spheres are hypothetical megastructures encircling stars in order to harvest most of their energy output. During the 11th edition of the GTOC challenge, participants were tasked with a complex trajectory planning problem related to the construction of a precursor Dyson structure, a heliocentric ring made of twelve stations. To this purpose, we developed several new approaches that synthesize techniques from machine learning, combinatorial optimization, planning and scheduling, and evolutionary optimization, effectively integrated into a fully automated pipeline. These include a machine-learned transfer time estimator, improving the established Edelbaum approximation and thus better informing a Lazy Race Tree Search to identify and collect asteroids with high arrival mass for the stations; a series of optimally phased low-thrust transfers to all stations computed by indirect optimization techniques, exploiting the synodic periodicity of the system; and a modified Hungarian scheduling algorithm, which utilizes evolutionary techniques to arrange a mass-balanced arrival schedule out of all transfer possibilities. We describe the steps of our pipeline in detail with a special focus on how our approaches mutually benefit from each other. Lastly, we outline and analyze the final solution of our team, ACT&Friends, which ranked second at the GTOC 11 challenge.
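For readers unfamiliar with the Edelbaum approximation mentioned above, here is a minimal sketch of its classical textbook form for a low-thrust transfer between two circular orbits with an inclination change. The function names and the example numbers are hypothetical, and this is only the baseline formula, not the machine-learned estimator described in the abstract.

```python
import math

def edelbaum_delta_v(v0, v1, delta_i):
    """Edelbaum delta-V between two circular orbits.

    v0, v1  : circular velocities of the initial and final orbit (same units)
    delta_i : inclination change in radians
    """
    return math.sqrt(
        v0**2 + v1**2 - 2.0 * v0 * v1 * math.cos(0.5 * math.pi * delta_i)
    )

def edelbaum_transfer_time(v0, v1, delta_i, acceleration):
    """Transfer time assuming a constant thrust acceleration (assumption)."""
    return edelbaum_delta_v(v0, v1, delta_i) / acceleration

# Coplanar case: the delta-V reduces to the difference of circular velocities.
coplanar_dv = edelbaum_delta_v(30.0, 25.0, 0.0)
```

With no inclination change the expression collapses to `|v0 - v1|`, which is a quick sanity check on any implementation.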

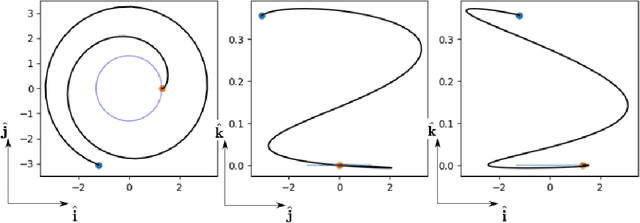

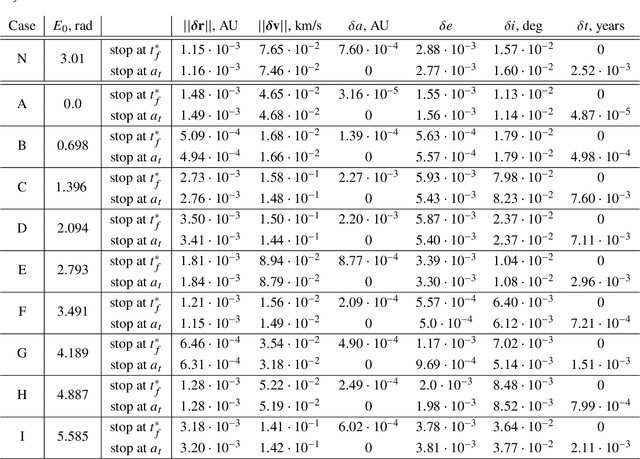

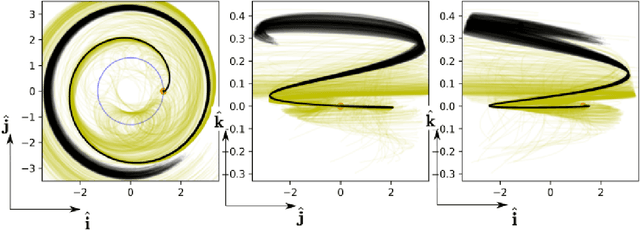

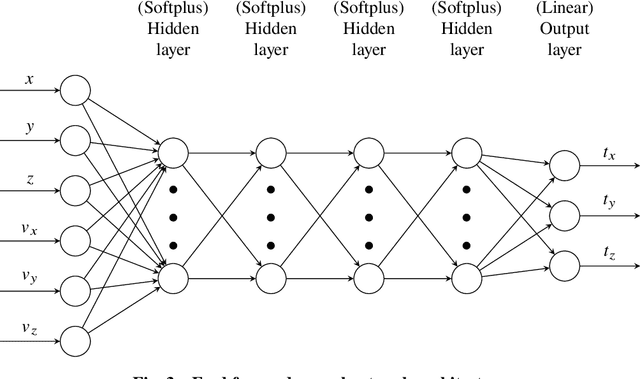

Neural representation of a time optimal, constant acceleration rendezvous

Mar 29, 2022

Abstract: We train neural models to represent both the optimal policy (i.e. the optimal thrust direction) and the value function (i.e. the time of flight) for a time optimal, constant acceleration low-thrust rendezvous. In both cases we develop and make use of the data augmentation technique we call backward generation of optimal examples. We are thus able to produce and work with large datasets and to fully exploit the benefit of employing a deep learning framework. We achieve, in all cases, accuracies resulting in successful rendezvous (simulated following the learned policy) and time of flight predictions (using the learned value function). We find that residuals as small as a few m/s, thus well within the possibility of a spacecraft navigation $\Delta V$ budget, are achievable for the velocity at rendezvous. We also find that, on average, the absolute error in predicting the optimal time of flight to rendezvous from any orbit in the asteroid belt to an Earth-like orbit is small (less than 4%) and thus also of interest for practical uses, for example, during preliminary mission design phases.

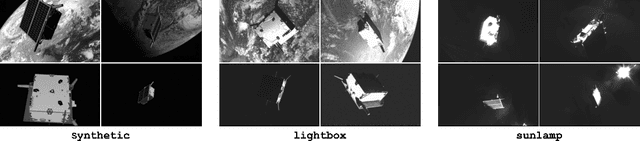

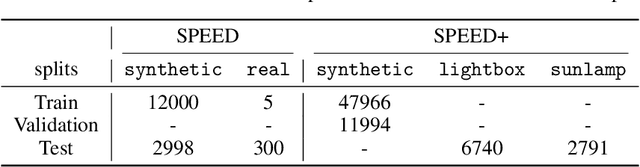

SPEED+: Next Generation Dataset for Spacecraft Pose Estimation across Domain Gap

Oct 06, 2021

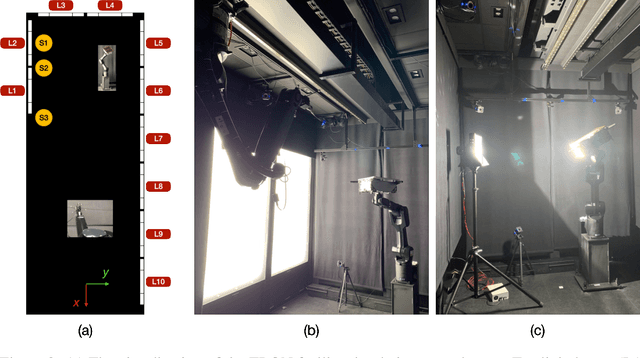

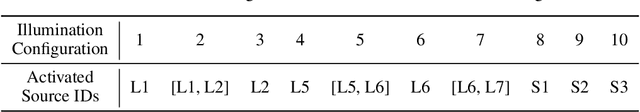

Abstract: Autonomous vision-based spaceborne navigation is an enabling technology for future on-orbit servicing and space logistics missions. While computer vision in general has benefited from Machine Learning (ML), training and validating spaceborne ML models are extremely challenging due to the impracticality of acquiring a large-scale labeled dataset of images of the intended target in the space environment. Existing datasets, such as Spacecraft PosE Estimation Dataset (SPEED), have so far mostly relied on synthetic images for both training and validation, which are easy to mass-produce but fail to resemble the visual features and illumination variability inherent to the target spaceborne images. In order to bridge the gap between the current practices and the intended applications in future space missions, this paper introduces SPEED+: the next generation spacecraft pose estimation dataset with specific emphasis on domain gap. In addition to 60,000 synthetic images for training, SPEED+ includes 9,531 simulated images of a spacecraft mockup model captured from the Testbed for Rendezvous and Optical Navigation (TRON) facility. TRON is a first-of-a-kind robotic testbed capable of capturing an arbitrary number of target images with accurate and maximally diverse pose labels and high-fidelity spaceborne illumination conditions. SPEED+ will be used in the upcoming international Satellite Pose Estimation Challenge co-hosted with the Advanced Concepts Team of the European Space Agency to evaluate and compare the robustness of spaceborne ML models trained on synthetic images.

Geodesy of irregular small bodies via neural density fields: geodesyNets

May 27, 2021

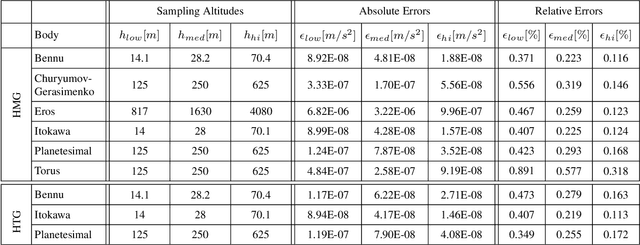

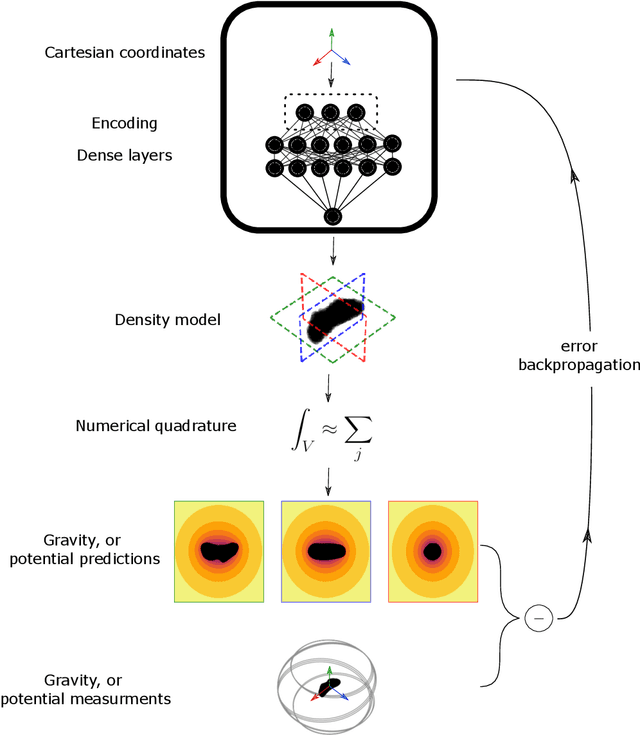

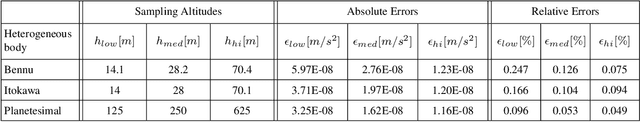

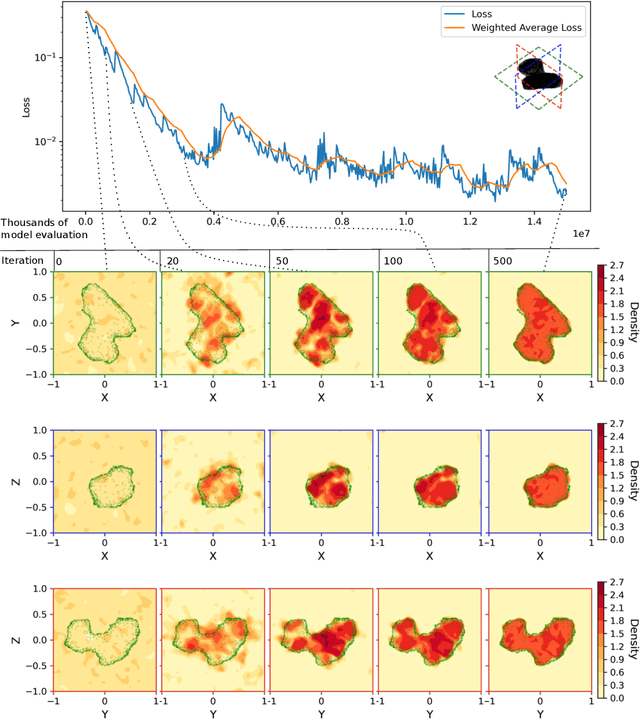

Abstract: We present a novel approach based on artificial neural networks, so-called geodesyNets, and present compelling evidence of their ability to serve as accurate geodetic models of highly irregular bodies using minimal prior information on the body. The approach does not rely on the body shape information but, if available, can harness it. GeodesyNets learn a three-dimensional, differentiable function representing the body density, which we call a neural density field. The body shape, as well as other geodetic properties, can easily be recovered. We investigate six different shapes including the bodies 101955 Bennu, 67P Churyumov-Gerasimenko, 433 Eros and 25143 Itokawa for which shape models developed during close proximity surveys are available. Both heterogeneous and homogeneous mass distributions are considered. The gravitational acceleration computed from the trained geodesyNets models, as well as the inferred body shape, show great accuracy in all cases with a relative error on the predicted acceleration smaller than 1% even close to the asteroid surface. When the body shape information is available, geodesyNets can seamlessly exploit it and be trained to represent a high-fidelity neural density field able to give insights into the internal structure of the body. This work introduces a new, unexplored approach to geodesy, adding a powerful tool to consolidated ones based on spherical harmonics, mascon models and polyhedral gravity.

Vision-based Neural Scene Representations for Spacecraft

May 11, 2021

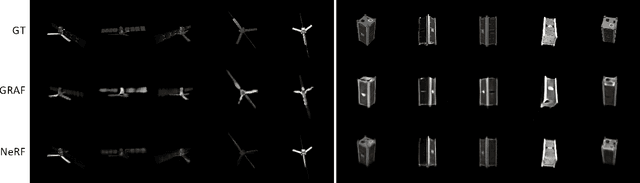

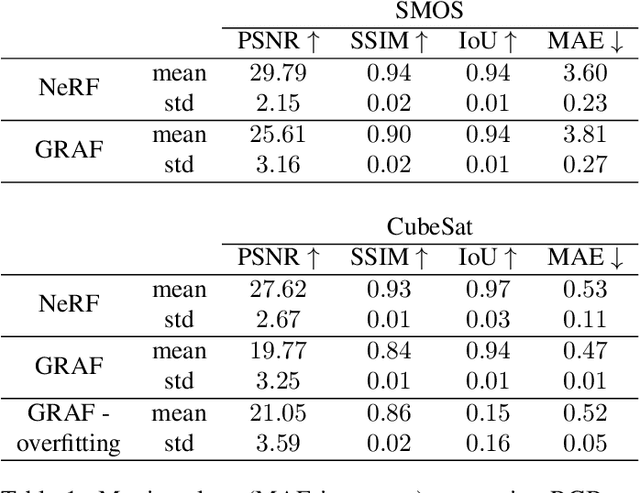

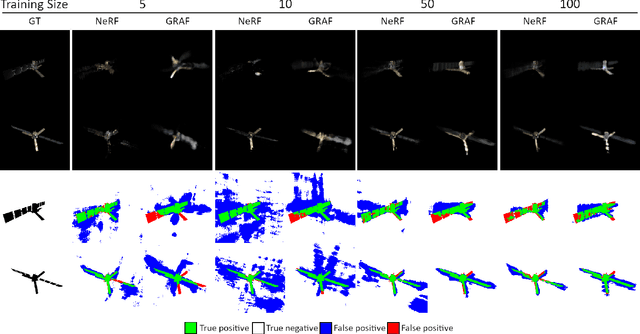

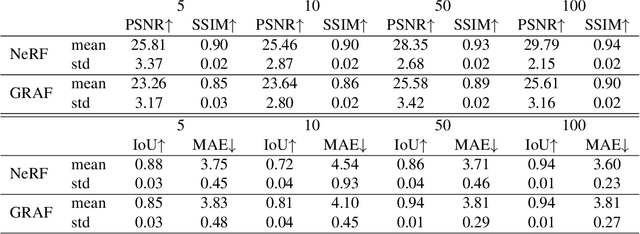

Abstract: In advanced mission concepts with high levels of autonomy, spacecraft need to internally model the pose and shape of nearby orbiting objects. Recent works in neural scene representations show promising results for inferring generic three-dimensional scenes from optical images. Neural Radiance Fields (NeRF) have shown success in rendering highly specular surfaces using a large number of images and their poses. More recently, Generative Radiance Fields (GRAF) achieved full volumetric reconstruction of a scene from unposed images only, thanks to the use of an adversarial framework to train a NeRF. In this paper, we compare and evaluate the potential of NeRF and GRAF to render novel views and extract the 3D shape of two different spacecraft, the Soil Moisture and Ocean Salinity satellite of ESA's Living Planet Programme and a generic CubeSat. Considering the best performances of both models, we observe that NeRF has the ability to render more accurate images regarding the material specularity of the spacecraft and its pose. For its part, GRAF generates precise novel views with accurate details even when parts of the satellites are shadowed while having the significant advantage of not needing any information about the relative pose.

Shadow Neural Radiance Fields for Multi-view Satellite Photogrammetry

Apr 20, 2021

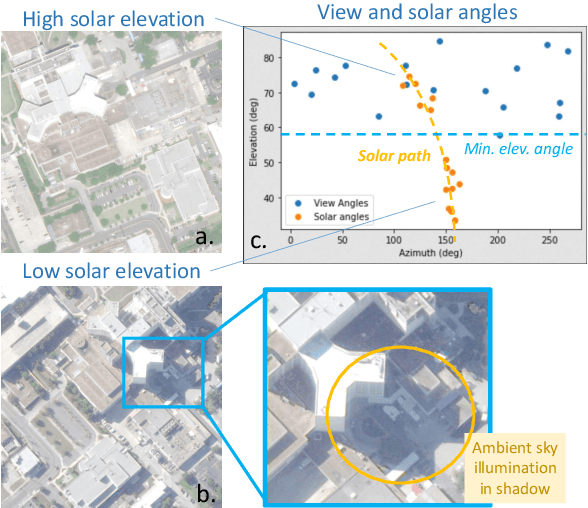

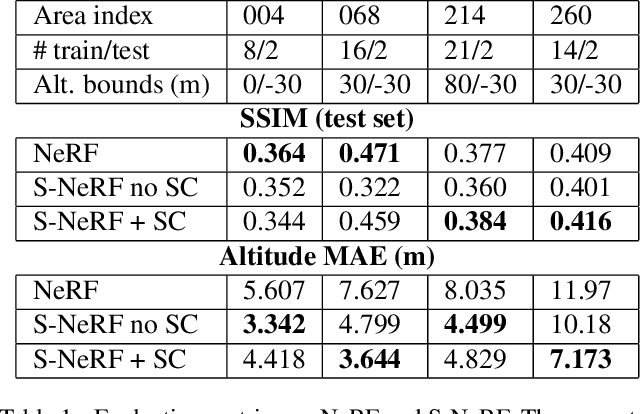

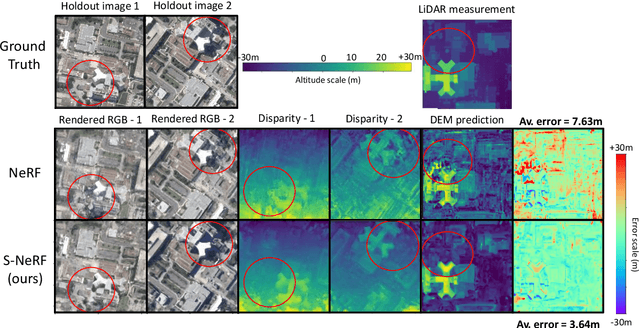

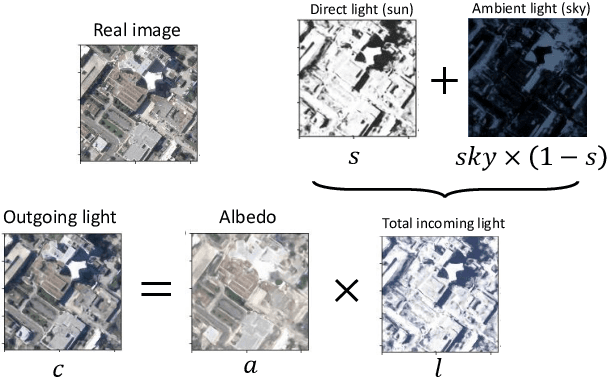

Abstract: We present a new generic method for shadow-aware multi-view satellite photogrammetry of Earth Observation scenes. Our proposed method, the Shadow Neural Radiance Field (S-NeRF), follows recent advances in implicit volumetric representation learning. For each scene, we train S-NeRF using very high spatial resolution optical images taken from known viewing angles. The learning requires no labels or shape priors: it is self-supervised by an image reconstruction loss. To accommodate changing light source conditions both from a directional light source (the Sun) and a diffuse light source (the sky), we extend the NeRF approach in two ways. First, direct illumination from the Sun is modeled via a local light source visibility field. Second, indirect illumination from a diffuse light source is learned as a non-local color field as a function of the position of the Sun. Quantitatively, the combination of these factors reduces the altitude and color errors in shaded areas, compared to NeRF. The S-NeRF methodology not only performs novel view synthesis and full 3D shape estimation, it also enables shadow detection, albedo synthesis, and transient object filtering, without any explicit shape supervision.

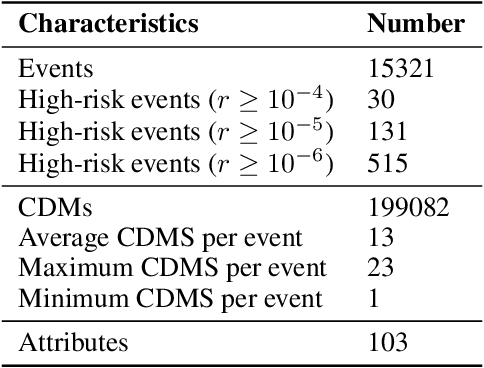

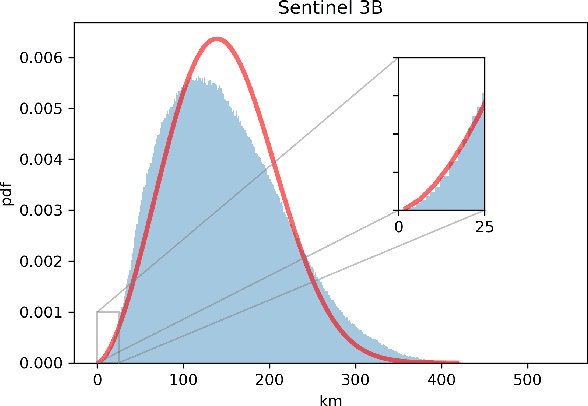

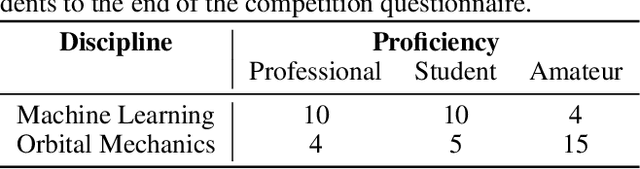

Spacecraft Collision Avoidance Challenge: design and results of a machine learning competition

Aug 07, 2020

Abstract: Spacecraft collision avoidance procedures have become an essential part of satellite operations. Complex and constantly updated estimates of the collision risk between orbiting objects inform the various operators who can then plan risk mitigation measures. Such measures could be aided by the development of suitable machine learning models predicting, for example, the evolution of the collision risk in time. In an attempt to study this opportunity, the European Space Agency released, in October 2019, a large curated dataset containing information about close approach events, in the form of Conjunction Data Messages (CDMs), collected from 2015 to 2019. This dataset was used in the Spacecraft Collision Avoidance Challenge, a machine learning competition where participants had to build models to predict the final collision risk between orbiting objects. This paper describes the design and results of the competition and discusses the challenges and lessons learned when applying machine learning methods to this problem domain.

Safe Crossover of Neural Networks Through Neuron Alignment

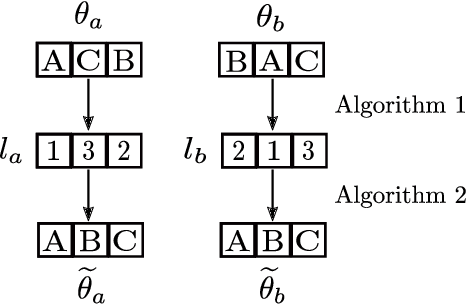

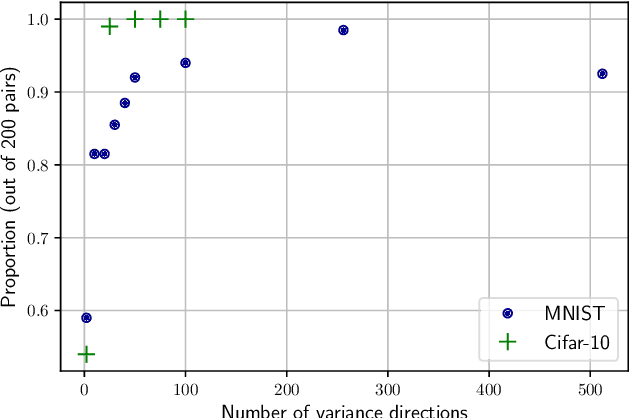

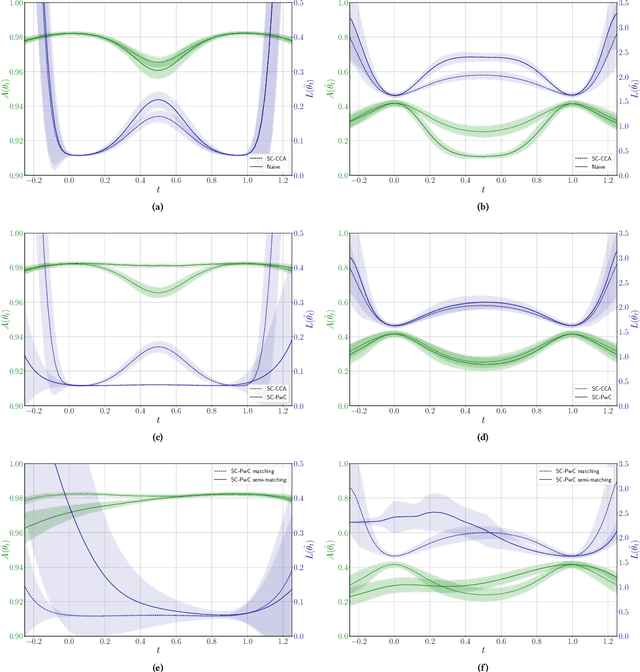

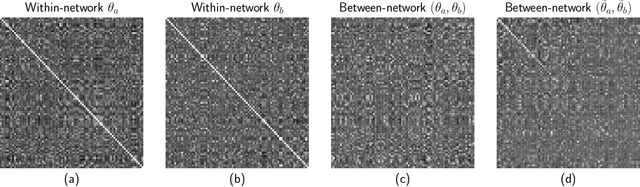

Mar 24, 2020

Abstract: One of the main and largely unexplored challenges in evolving the weights of neural networks using genetic algorithms is to find a sensible crossover operation between parent networks. Indeed, naive crossover leads to functionally damaged offspring that do not retain information from the parents. This is because neural networks are invariant to permutations of neurons, giving rise to multiple ways of representing the same solution. This is often referred to as the competing conventions problem. In this paper, we propose a two-step safe crossover (SC) operator. First, the neurons of the parents are functionally aligned by computing how well they correlate, and only then are the parents recombined. We compare two ways of measuring relationships between neurons: Pairwise Correlation (PwC) and Canonical Correlation Analysis (CCA). We test our safe crossover operators (SC-PwC and SC-CCA) on MNIST and CIFAR-10 by performing arithmetic crossover on the weights of feed-forward neural network pairs. We show that it effectively transmits information from parents to offspring and significantly improves upon naive crossover. Our method is computationally fast, can serve as a way to explore the fitness landscape more efficiently and makes safe crossover a potentially promising operator in future neuroevolution research and applications.
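The two-step idea described above (align neurons by correlation, then recombine) can be sketched for a single hidden layer as follows. This is a simplified illustration under stated assumptions: a `tanh` network with one hidden layer, a greedy matching on the pairwise correlation matrix, and hypothetical function names. The paper's actual matching procedure and network setup may differ.

```python
import numpy as np

def pairwise_correlation(act_a, act_b):
    """Correlation between each hidden neuron of A (rows) and of B (columns)."""
    za = (act_a - act_a.mean(0)) / (act_a.std(0) + 1e-12)
    zb = (act_b - act_b.mean(0)) / (act_b.std(0) + 1e-12)
    return za.T @ zb / act_a.shape[0]

def greedy_alignment(corr):
    """perm[i] = neuron of B matched to neuron i of A (greedy; an assumption)."""
    n = corr.shape[0]
    perm = np.full(n, -1)
    c = corr.copy()
    for _ in range(n):
        i, j = np.unravel_index(np.argmax(c), c.shape)
        perm[i] = j
        c[i, :] = -np.inf  # neuron i of A is now taken
        c[:, j] = -np.inf  # neuron j of B is now taken
    return perm

def safe_crossover(parent_a, parent_b, inputs, t=0.5):
    """Align B's hidden neurons to A's, then do arithmetic crossover."""
    (w1a, w2a), (w1b, w2b) = parent_a, parent_b
    corr = pairwise_correlation(np.tanh(inputs @ w1a), np.tanh(inputs @ w1b))
    perm = greedy_alignment(corr)
    # Reordering columns of W1 and rows of W2 leaves B's function unchanged.
    w1b, w2b = w1b[:, perm], w2b[perm, :]
    return (1 - t) * w1a + t * w1b, (1 - t) * w2a + t * w2b

# Demo: parent B is parent A with its hidden neurons shuffled.
rng = np.random.default_rng(0)
w1, w2 = rng.normal(size=(4, 6)), rng.normal(size=(6, 3))
shuffle = rng.permutation(6)
parent_a = (w1, w2)
parent_b = (w1[:, shuffle], w2[shuffle, :])
inputs = rng.normal(size=(200, 4))
child_w1, child_w2 = safe_crossover(parent_a, parent_b, inputs)
```

In the demo the two parents compute the same function under different neuron orderings, so after alignment the child's weights coincide with parent A's; a naive average of the unaligned weights would not.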

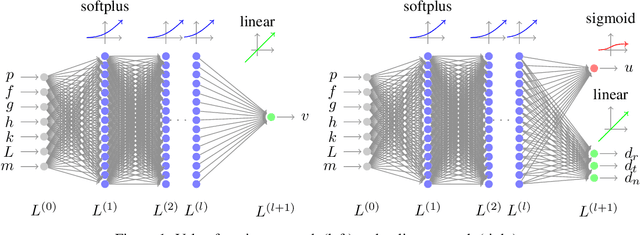

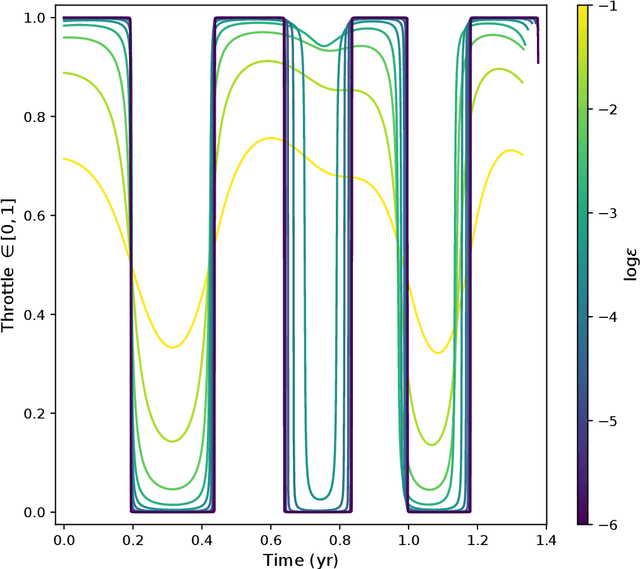

Real-Time Optimal Guidance and Control for Interplanetary Transfers Using Deep Networks

Feb 20, 2020

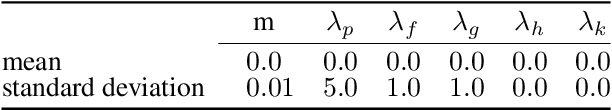

Abstract: We consider the Earth-Venus mass-optimal interplanetary transfer of a low-thrust spacecraft and show how the optimal guidance can be represented by deep networks in a large portion of the state space and to a high degree of accuracy. Imitation (supervised) learning of optimal examples is used as a network training paradigm. The resulting models are suitable for an on-board, real-time implementation of the optimal guidance and control system of the spacecraft and are called G&CNETs. A new general methodology called Backward Generation of Optimal Examples is introduced and shown to be able to efficiently create all the optimal state-action pairs necessary to train G&CNETs without solving optimal control problems. With respect to previous works, we are able to produce datasets containing a few orders of magnitude more optimal trajectories and obtain network performances compatible with real mission requirements. Several schemes able to train representations of either the optimal policy (thrust profile) or the value function (optimal mass) are proposed and tested. We find that both policy learning and value function learning successfully and accurately learn the optimal thrust and that a spacecraft employing the learned thrust is able to reach the target conditions spending only 2 per mille more propellant than in the corresponding mathematically optimal transfer. Moreover, the optimal propellant mass can be predicted (in case of value function learning) with an error well within 1%. All G&CNETs produced are tested during simulations of interplanetary transfers with respect to their ability to reach the target conditions optimally starting from nominal and off-nominal conditions.
