Daniel Berio

Neural Image Abstraction Using Long Smoothing B-Splines

Nov 07, 2025

Abstract: We integrate smoothing B-splines into a standard differentiable vector graphics (DiffVG) pipeline through linear mapping, and show how this can be used to generate smooth and arbitrarily long paths within image-based deep learning systems. We take advantage of derivative-based smoothing costs for parametric control of fidelity vs. simplicity tradeoffs, while also enabling stylization control in geometric and image spaces. The proposed pipeline is compatible with recent vector graphics generation and vectorization methods. We demonstrate the versatility of our approach with four applications aimed at the generation of stylized vector graphics: stylized space-filling path generation, stroke-based image abstraction, closed-area image abstraction, and stylized text generation.

Word-As-Image for Semantic Typography

Mar 06, 2023

Abstract: A word-as-image is a semantic typography technique where a word illustration presents a visualization of the meaning of the word, while also preserving its readability. We present a method to create word-as-image illustrations automatically. This task is highly challenging as it requires semantic understanding of the word and a creative idea of where and how to depict these semantics in a visually pleasing and legible manner. We rely on the remarkable ability of recent large pretrained language-vision models to distill textual concepts visually. We target simple, concise, black-and-white designs that convey the semantics clearly. We deliberately do not change the color or texture of the letters and do not use embellishments. Our method optimizes the outline of each letter to convey the desired concept, guided by a pretrained Stable Diffusion model. We incorporate additional loss terms to ensure the legibility of the text and the preservation of the style of the font. We show high quality and engaging results on numerous examples and compare to alternative techniques.

Calligraphic Stylisation Learning with a Physiologically Plausible Model of Movement and Recurrent Neural Networks

Sep 24, 2017

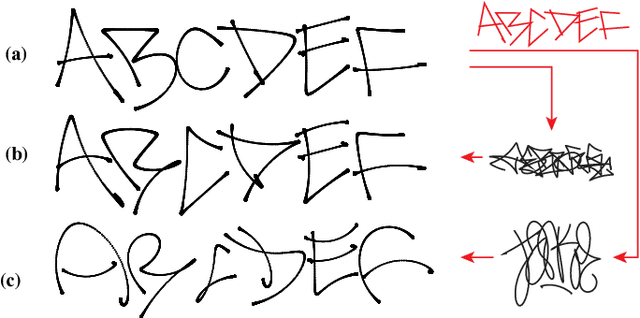

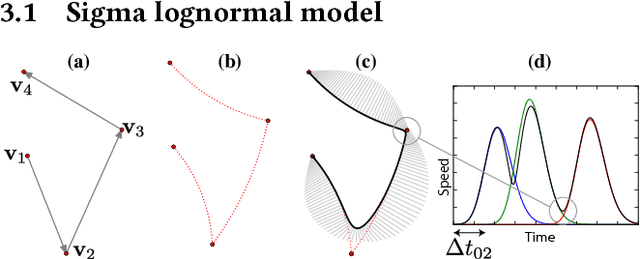

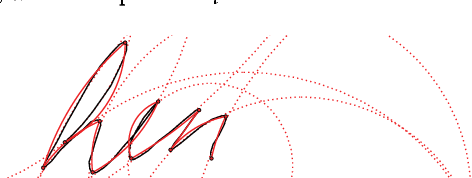

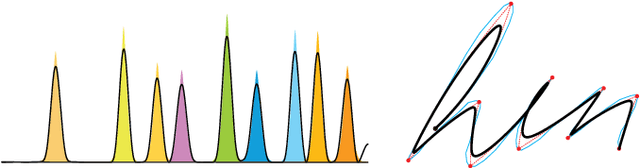

Abstract: We propose a computational framework to learn stylisation patterns from example drawings or writings, and then generate new trajectories that possess similar stylistic qualities. We particularly focus on the generation and stylisation of trajectories that are similar to the ones that can be seen in calligraphy and graffiti art. Our system is able to extract and learn dynamic and visual qualities from a small number of user-defined examples, which can be recorded with a digitiser device such as a tablet, mouse or motion capture sensors. Our system is then able to transform new user-drawn traces to be kinematically and stylistically similar to the training examples. We implement the system using a Recurrent Mixture Density Network (RMDN) combined with a representation given by the parameters of the Sigma Lognormal model, a physiologically plausible model of movement that has been shown to closely reproduce the velocity and trace of human handwriting gestures.
