Cristina Puente

GOFAI meets Generative AI: Development of Expert Systems by means of Large Language Models

Jul 17, 2025

Abstract: The development of large language models (LLMs) has successfully transformed knowledge-based systems such as open-domain question answering, which can automatically produce vast amounts of seemingly coherent information. Yet, those models have several disadvantages, such as hallucinations or the confident generation of incorrect or unverifiable facts. In this paper, we introduce a new approach to the development of expert systems using LLMs in a controlled and transparent way. By limiting the domain and employing a well-structured prompt-based extraction approach, we produce a symbolic representation of knowledge in Prolog, which can be validated and corrected by human experts. This approach also guarantees interpretability, scalability and reliability of the developed expert systems. Via quantitative and qualitative experiments with Claude Sonnet 3.7 and GPT-4.1, we show strong adherence to facts and semantic coherence in our generated knowledge bases. We present a transparent hybrid solution that combines the recall capacity of LLMs with the precision of symbolic systems, thereby laying the foundation for dependable AI applications in sensitive domains.
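As a rough illustration of the idea of turning extracted knowledge into Prolog that a human expert can review, the following minimal sketch renders (predicate, arguments) tuples as Prolog facts. It is not the authors' pipeline: the function name `to_prolog_fact`, the tuple format, and the example relations are all hypothetical, and the LLM extraction step itself is not shown.

```python
import re

def to_prolog_fact(predicate, *args):
    """Render one extracted relation as a Prolog fact (hypothetical helper)."""
    # A Prolog predicate name must be an unquoted atom: lowercase start,
    # then letters, digits or underscores.
    if not re.fullmatch(r"[a-z][A-Za-z0-9_]*", predicate):
        raise ValueError(f"invalid predicate name: {predicate!r}")

    def quoted_atom(value):
        # Quote every argument so spaces and capitals are safe;
        # backslashes and single quotes are escaped by doubling.
        text = str(value).replace("\\", "\\\\").replace("'", "''")
        return f"'{text}'"

    return f"{predicate}({', '.join(quoted_atom(a) for a in args)})."

# Hypothetical relations, standing in for what an LLM prompt might return.
extracted = [
    ("capital_of", "Paris", "France"),
    ("part_of", "France", "Europe"),
]
knowledge_base = "\n".join(to_prolog_fact(p, *a) for p, *a in extracted)
print(knowledge_base)
```

Emitting plain text facts keeps the knowledge base readable line by line, which is what makes expert validation and correction practical.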

Fuzzy Stochastic Timed Petri Nets for Causal properties representation

Nov 24, 2020

Abstract: Imagery is frequently used to model, represent and communicate knowledge. In particular, graphs are one of the most powerful tools, being able to represent relations between objects. Causal relations are frequently represented by directed graphs, with nodes denoting causes and links denoting causal influence. A causal graph is a skeletal picture, showing causal associations and impact between entities. Common methods used for graphically representing causal scenarios are neurons, truth tables, causal Bayesian networks, cognitive maps and Petri Nets. Causality is often defined in terms of precedence (the cause precedes the effect), concurrency (often, an effect is provoked simultaneously by two or more causes), circularity (a cause provokes the effect and the effect reinforces the cause) and imprecision (the presence of the cause favors the effect, but does not necessarily cause it). We will show that, even though the traditional graphical models are able to represent some of the aforementioned properties separately, they fail to represent all of them together. To address that gap, we will introduce Fuzzy Stochastic Timed Petri Nets as a graphical tool able to represent time, co-occurrence, looping and imprecision in causal flow.

Fake News Detection by means of Uncertainty Weighted Causal Graphs

Feb 04, 2020

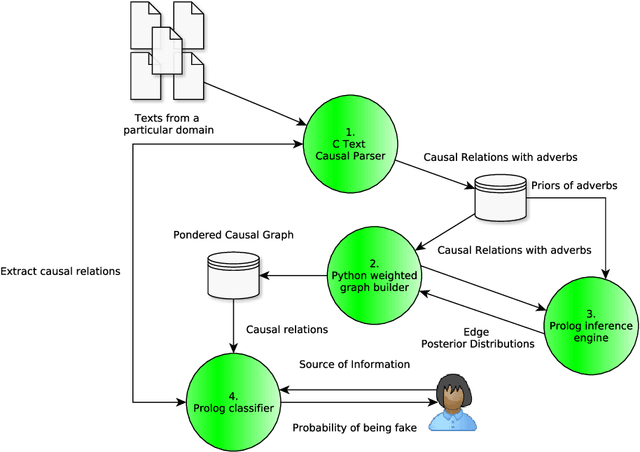

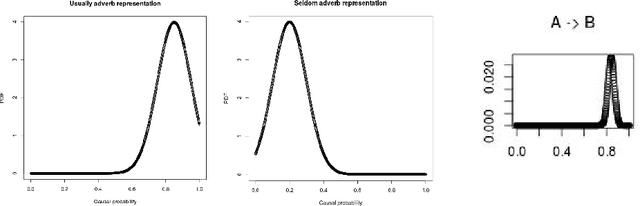

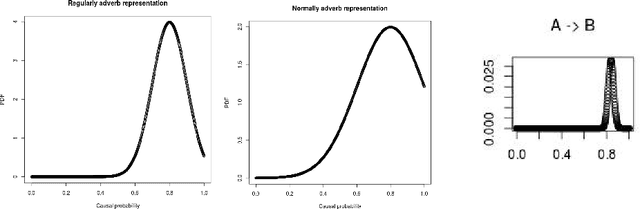

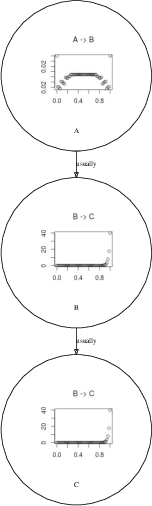

Abstract: Society is experiencing changes in information consumption, as new information channels such as social networks let people share news that is not necessarily trustworthy. Sometimes, these sources of information deliberately produce fake news with doubtful purposes, and the consumers of that information share it with other users, thinking that the information is accurate. This transmission of information represents an issue in our society, as it can negatively influence people's opinion about certain figures, groups or ideas. Hence, it is desirable to design a system that is able to detect and classify information as fake and categorize a source of information as trustworthy or not. Current systems experience difficulties performing this task, as it is complicated to design an automatic procedure that can classify this information independently of the context. In this work, we propose a mechanism to detect fake news through a classifier based on weighted causal graphs. These graphs are specific hybrid models that are built through causal relations retrieved from texts and consider the uncertainty of causal relations. We take advantage of this representation to use the probability distributions of this graph and build a fake news classifier based on the entropy and KL divergence of learned and new information. We believe that the problem of fake news is accurately tackled by this model due to its hybrid nature between a symbolic and quantitative methodology. We describe the methodology of this classifier and add empirical evidence of the usefulness of our proposed approach in the form of synthetic experiments and a real experiment involving lung cancer.
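To make the entropy and KL divergence quantities concrete, here is a minimal sketch over discrete distributions. It is not the classifier described in the paper: the function `looks_inconsistent`, the threshold value, and the example distributions are assumptions chosen purely for illustration, and the abstract does not specify how the graph's distributions are compared.

```python
import math

def entropy(p):
    """Shannon entropy in bits of a discrete distribution p."""
    return -sum(x * math.log2(x) for x in p if x > 0)

def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) in bits; eps guards against zero entries in q."""
    return sum(
        pi * math.log2(pi / max(qi, eps))
        for pi, qi in zip(p, q)
        if pi > 0
    )

def looks_inconsistent(learned, new, threshold=0.5):
    """Hypothetical decision rule: flag new information whose distribution
    diverges from the learned one by more than `threshold` bits."""
    return kl_divergence(new, learned) > threshold

# Assumed distributions over three outcomes of one causal relation.
learned = [0.7, 0.2, 0.1]
consistent_claim = [0.65, 0.25, 0.10]
contradicting_claim = [0.1, 0.2, 0.7]

print(entropy(learned))
print(looks_inconsistent(learned, consistent_claim))
print(looks_inconsistent(learned, contradicting_claim))
```

A claim close to the learned distribution yields a divergence near zero, while one that reverses the learned weights yields a large divergence and is flagged.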
