Cristian Alb

Accuracy Convergent Field Predictors

May 07, 2022

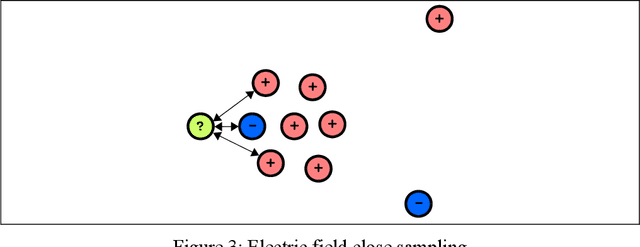

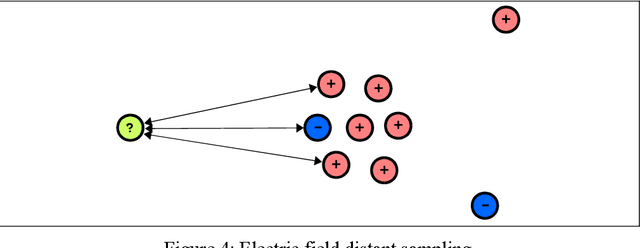

Abstract: Several predictive algorithms are described, with emphasis on variants that make predictions by superposing fields associated with the training data instances. These variants operate seamlessly with categorical, continuous, and mixed data. Predictive accuracy convergence is also discussed as a criterion for evaluating predictive algorithms, and methods are described for adapting algorithms so that they achieve it.

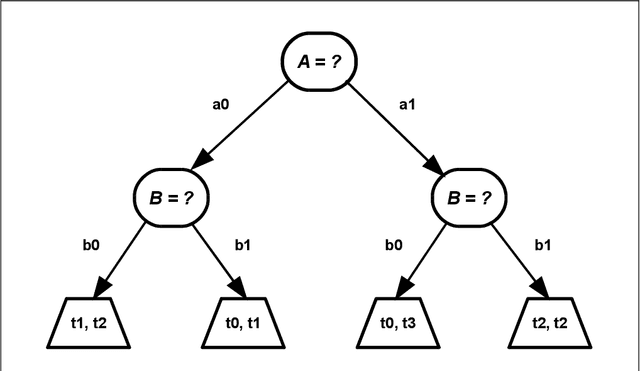

Interfering Paths in Decision Trees: A Note on Deodata Predictors

Feb 24, 2022

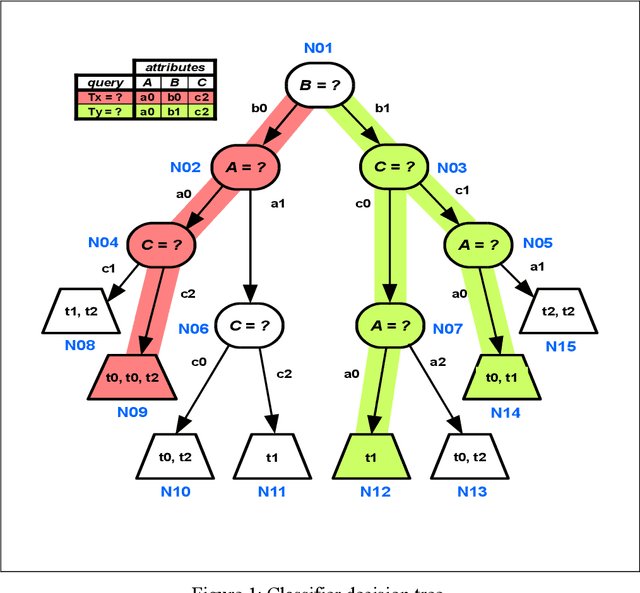

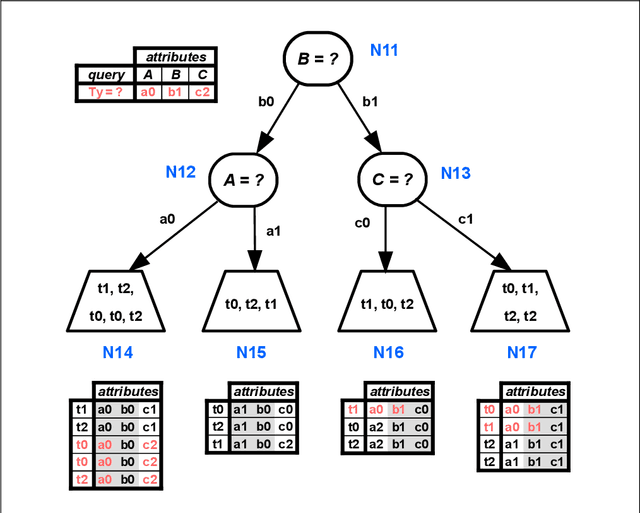

Abstract: A technique for improving the prediction accuracy of decision trees is proposed. It consists of evaluating the tree's branches in parallel over multiple paths. The technique yields predictions that are more closely aligned with those generated by the nearest neighborhood variant of the deodata algorithms. It also enables the hybridization of the decision tree algorithm with the nearest neighborhood variant.

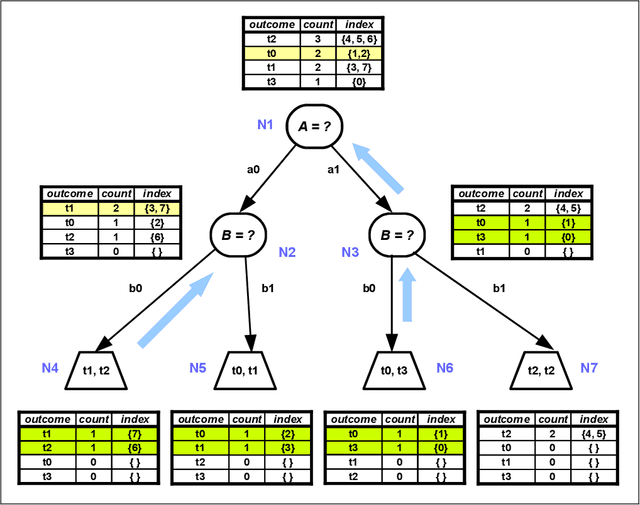

Backtrack Tie-Breaking for Decision Trees: A Note on Deodata Predictors

Feb 09, 2022

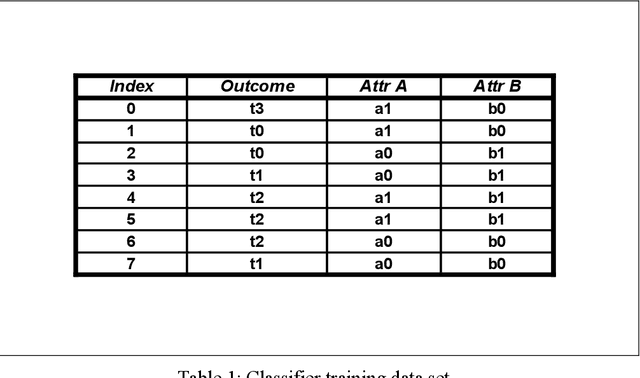

Abstract: A tie-breaking method is proposed for choosing the predicted class, or outcome, in a decision tree. The method is an adaptation of a similar technique used for deodata predictors.

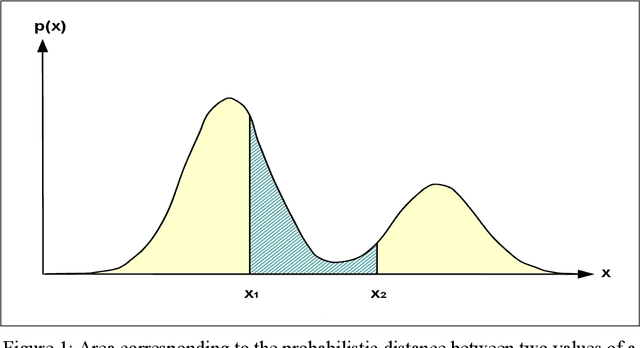

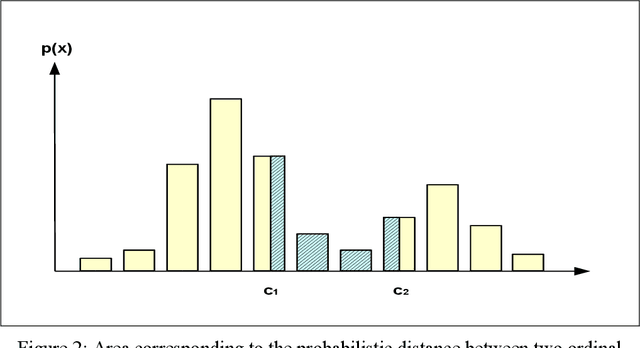

Probabilistic Alternatives to the Gower Distance: A Note on Deodata Predictors

Jan 17, 2022

Abstract: A probabilistic alternative to the Gower distance is proposed. The probabilistic distance enables the realization of a generic deodata predictor.
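
For context, the standard Gower distance that the abstract takes as its baseline can be sketched as follows. This is a minimal illustration of the conventional definition only, not the probabilistic alternative proposed in the paper; the function name `gower_distance` and the `ranges` convention (`None` marks a categorical feature) are assumptions made for this example.

```python
from typing import Optional, Sequence, Union

Value = Union[float, str]


def gower_distance(
    a: Sequence[Value],
    b: Sequence[Value],
    ranges: Sequence[Optional[float]],
) -> float:
    """Standard Gower distance between two mixed-type records.

    ranges[i] is the observed range (max - min) of numeric feature i,
    or None when feature i is categorical (hypothetical convention).
    """
    if not (len(a) == len(b) == len(ranges)) or not ranges:
        raise ValueError("records and ranges must be non-empty and equal length")

    total = 0.0
    for x, y, r in zip(a, b, ranges):
        if r is None:
            # Categorical feature: 0 on a match, 1 on a mismatch.
            total += 0.0 if x == y else 1.0
        elif r > 0:
            # Numeric feature: absolute difference scaled by the range.
            total += abs(float(x) - float(y)) / r
        # A numeric feature with zero range contributes nothing.
    # Unweighted average of the per-feature dissimilarities.
    return total / len(ranges)


# Example: one numeric feature (range 50) and one categorical feature.
d = gower_distance((30.0, "red"), (40.0, "blue"), (50.0, None))
```

Each feature contributes a dissimilarity in [0, 1], which is what allows categorical and continuous attributes to be averaged into a single distance.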

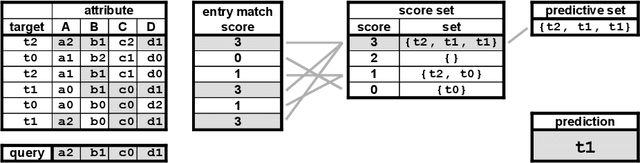

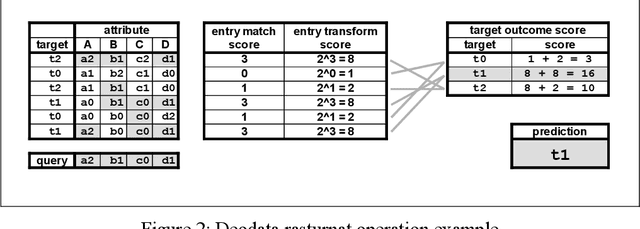

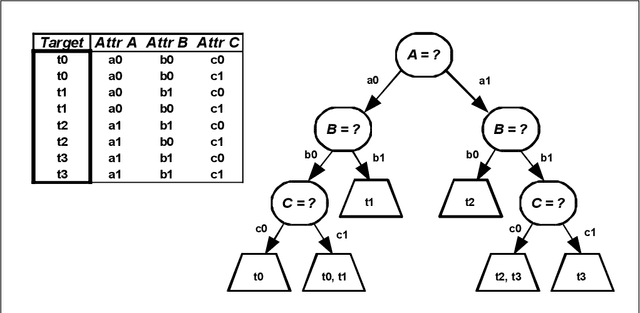

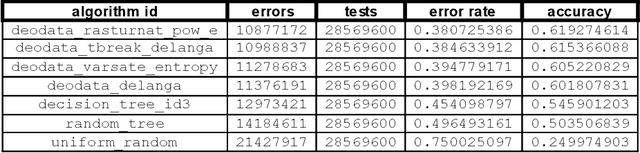

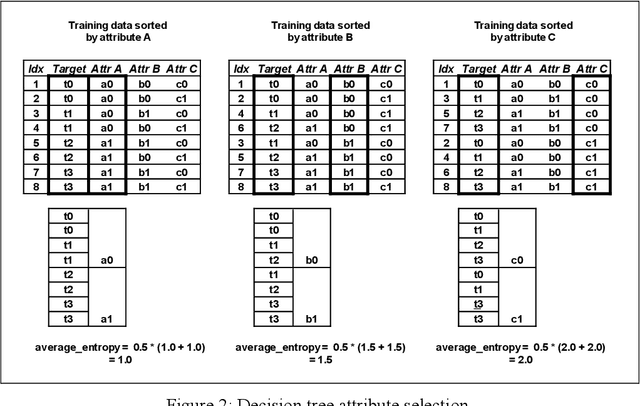

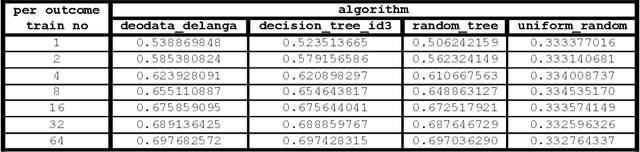

Collapsing the Decision Tree: the Concurrent Data Predictor

Aug 09, 2021

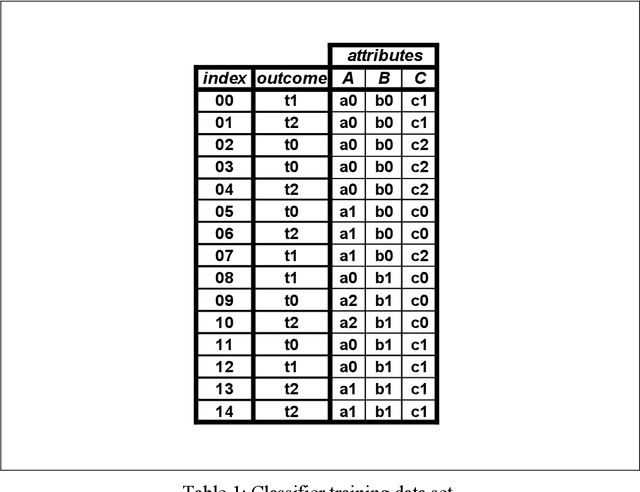

Abstract: A family of concurrent data predictors is derived from the decision tree classifier by removing the limitation of sequentially evaluating attributes. By evaluating attributes concurrently, the decision tree collapses into a flat structure. Experiments indicate improvements in prediction accuracy.
