Claudio Gheller

Radio U-Net: a convolutional neural network to detect diffuse radio sources in galaxy clusters and beyond

Aug 20, 2024

Abstract: The forthcoming generation of radio telescope arrays promises significant advancements in sensitivity and resolution, enabling the identification and characterization of many new faint and diffuse radio sources. Conventional manual cataloging methodologies are anticipated to be insufficient to exploit the capabilities of new radio surveys. Radio interferometric images of diffuse sources present a challenge for image segmentation tasks due to noise, artifacts, and embedded radio sources. In response to these challenges, we introduce Radio U-Net, a fully convolutional neural network based on the U-Net architecture. Radio U-Net is designed to detect faint and extended sources in radio surveys, such as radio halos, relics, and cosmic web filaments. Radio U-Net was trained on synthetic radio observations built upon cosmological simulations and then tested on a sample of galaxy clusters, where the detection of cluster diffuse radio sources relied on customized data reduction and visual inspection of LOFAR Two Metre Sky Survey (LoTSS) data. Of the clusters exhibiting diffuse radio emission, 83% were accurately identified, and the segmentation successfully recovered the morphology of the sources even in low-quality images. In a test sample comprising 246 galaxy clusters, we achieved a 73% accuracy rate in distinguishing between clusters with and without diffuse radio emission. Our results establish the applicability of Radio U-Net to extensive radio survey datasets, probing its efficiency on cutting-edge high-performance computing systems. This approach represents an advancement in optimizing the exploitation of forthcoming large radio surveys for scientific exploration.

Convolutional Deep Denoising Autoencoders for Radio Astronomical Images

Oct 16, 2021

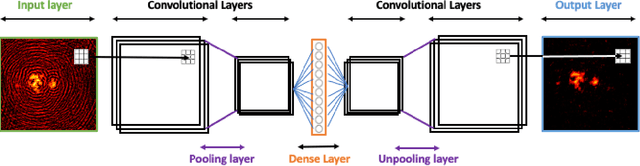

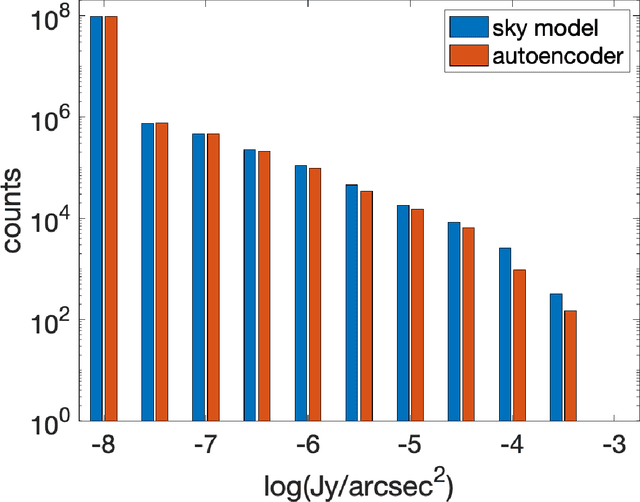

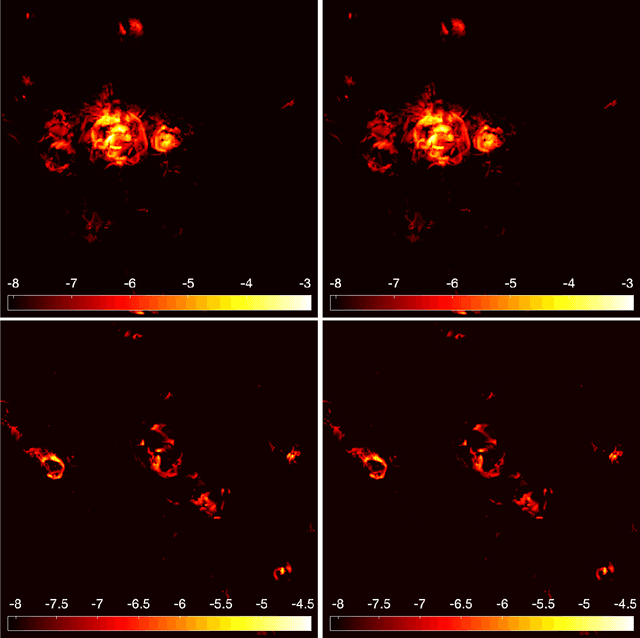

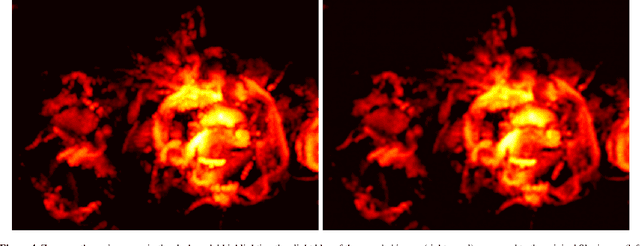

Abstract: We apply a Machine Learning technique known as Convolutional Denoising Autoencoder to denoise synthetic images of state-of-the-art radio telescopes, with the goal of detecting the faint, diffuse radio sources predicted to characterise the radio cosmic web. In our application, denoising is intended to address both the reduction of random instrumental noise and the minimisation of additional spurious artefacts, such as sidelobes, resulting from the aperture synthesis technique. The effectiveness and accuracy of the method are analysed for different kinds of corrupted input images, together with its computational performance. Specific attention has been devoted to creating realistic mock observations for the training, exploiting the outcomes of cosmological numerical simulations to generate images corresponding to 8-hour LOFAR HBA observations at 150 MHz. Our autoencoder can effectively denoise complex images, identifying and extracting faint objects at the limits of the instrumental sensitivity. The method can scale efficiently to large datasets, exploiting high-performance computing solutions, in a fully automated way (i.e. no human supervision is required after training). It can accurately perform image segmentation, identifying the low-brightness outskirts of diffuse sources, proving to be a viable solution for detecting challenging extended objects hidden in noisy radio observations.
