Chuadhry Mujeeb Ahmed

Newcastle University UK

ProDER: A Continual Learning Approach for Fault Prediction in Evolving Smart Grids

Nov 07, 2025

Abstract:As smart grids evolve to meet growing energy demands and modern operational challenges, the ability to accurately predict faults becomes increasingly critical. However, existing AI-based fault prediction models struggle to ensure reliability in evolving environments where they are required to adapt to new fault types and operational zones. In this paper, we propose a continual learning (CL) framework in the smart grid context to evolve the model together with the environment. We design four realistic evaluation scenarios grounded in class-incremental and domain-incremental learning to emulate evolving grid conditions. We further introduce Prototype-based Dark Experience Replay (ProDER), a unified replay-based approach that integrates prototype-based feature regularization, logit distillation, and a prototype-guided replay memory. ProDER achieves the best performance among tested CL techniques, with only a 0.045 accuracy drop for fault type prediction and 0.015 for fault zone prediction. These results demonstrate the practicality of CL for scalable, real-world fault prediction in smart grids.

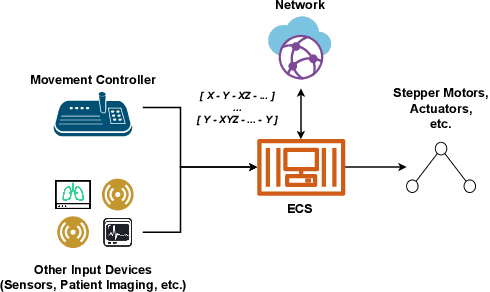

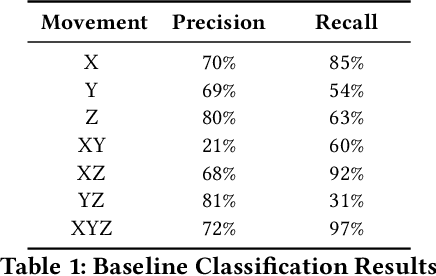

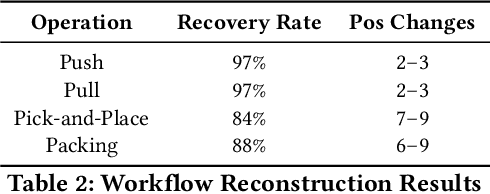

WaveVerif: Acoustic Side-Channel based Verification of Robotic Workflows

Oct 29, 2025

Abstract:In this paper, we present a framework that uses acoustic side-channel analysis (ASCA) to monitor and verify whether a robot correctly executes its intended commands. We develop and evaluate a machine-learning-based workflow verification system that uses acoustic emissions generated by robotic movements. The system can determine whether real-time behavior is consistent with expected commands. The evaluation takes into account movement speed, direction, and microphone distance. The results show that individual robot movements can be validated with over 80% accuracy under baseline conditions using four different classifiers: Support Vector Machine (SVM), Deep Neural Network (DNN), Recurrent Neural Network (RNN), and Convolutional Neural Network (CNN). Additionally, workflows such as pick-and-place and packing could be identified with similarly high confidence. Our findings demonstrate that acoustic signals can support real-time, low-cost, passive verification in sensitive robotic environments without requiring hardware modifications.

Attack Pattern Mining to Discover Hidden Threats to Industrial Control Systems

Aug 06, 2025

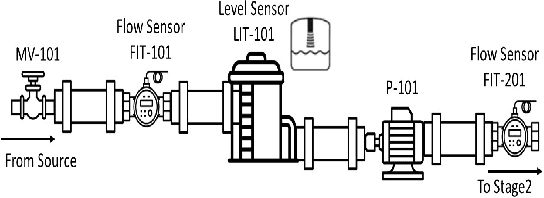

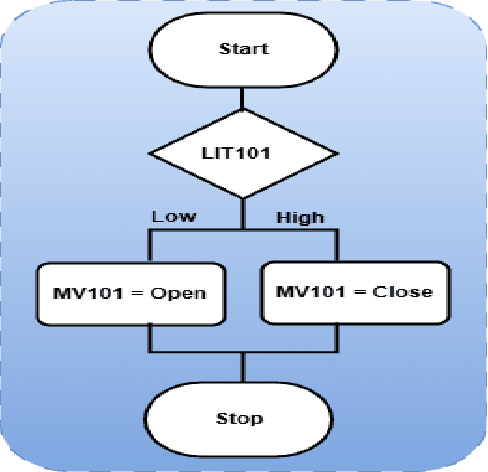

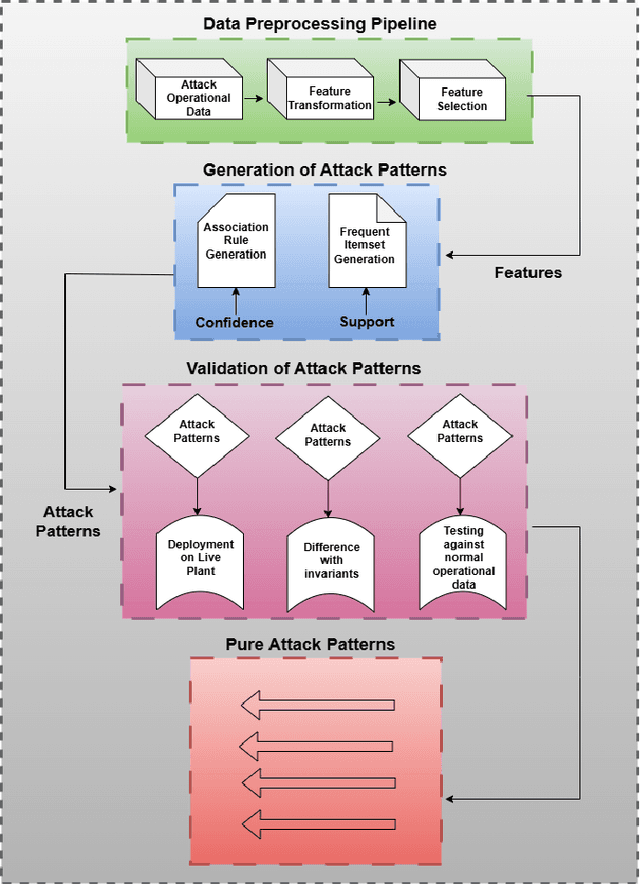

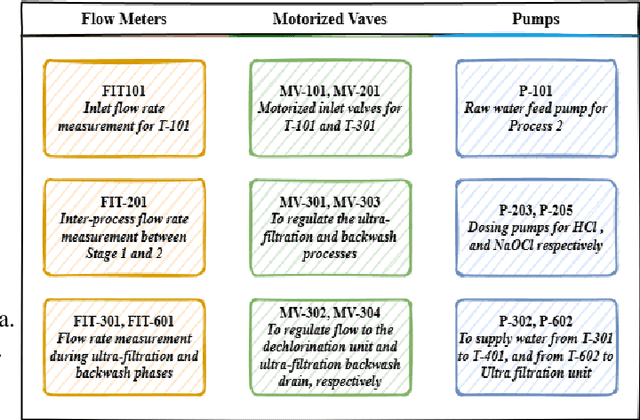

Abstract:This work focuses on the validation of attack pattern mining in the context of Industrial Control System (ICS) security. A comprehensive security assessment of an ICS requires generating a large and varied set of attack patterns. For this purpose, we have proposed a data-driven technique to generate attack patterns for an ICS. The proposed technique has been used to generate over 100,000 attack patterns from data gathered from an operational water treatment plant. In this work, we present a detailed case study to validate the attack patterns.

Adversarial Sample Generation for Anomaly Detection in Industrial Control Systems

May 06, 2025

Abstract:Machine learning (ML)-based intrusion detection systems (IDS) are vulnerable to adversarial attacks. It is crucial for an IDS to learn to recognize adversarial examples before malicious entities exploit them. In this paper, we generate adversarial samples using the Jacobian Saliency Map Attack (JSMA). We validate the generalization and scalability of the adversarial samples to tackle a broad range of real attacks on Industrial Control Systems (ICS). We evaluate the impact by assessing multiple attacks generated using the proposed method. The model trained with adversarial samples detected attacks with 95% accuracy on real-world attack data not used during training. The study was conducted using an operational Secure Water Treatment (SWaT) testbed.

AttackLLM: LLM-based Attack Pattern Generation for an Industrial Control System

Apr 05, 2025

Abstract:Malicious examples are crucial for evaluating the robustness of machine learning algorithms under attack, particularly in Industrial Control Systems (ICS). However, collecting normal and attack data in ICS environments is challenging due to the scarcity of testbeds and the high cost of human expertise. Existing datasets are often limited by the domain expertise of practitioners, making the process costly and inefficient. The lack of comprehensive attack pattern data poses a significant problem for developing robust anomaly detection methods. In this paper, we propose a novel approach that combines data-centric and design-centric methodologies to generate attack patterns using large language models (LLMs). Our results demonstrate that the attack patterns generated by LLMs not only surpass the quality and quantity of those created by human experts but also offer a scalable solution that does not rely on expensive testbeds or pre-existing attack examples. This multi-agent based approach presents a promising avenue for enhancing the security and resilience of ICS environments.

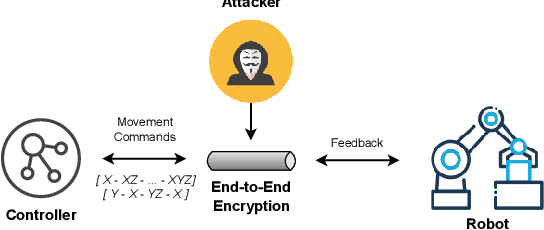

Can You Still See Me?: Reconstructing Robot Operations Over End-to-End Encrypted Channels

May 17, 2022

Abstract:Connected robots play a key role in Industry 4.0, providing automation and higher efficiency for many industrial workflows. Unfortunately, these robots can leak sensitive information regarding these operational workflows to remote adversaries. While there exist mandates for the use of end-to-end encryption for data transmission in such settings, it is entirely possible for passive adversaries to fingerprint and reconstruct entire workflows being carried out, establishing an understanding of how facilities operate. In this paper, we investigate whether a remote attacker can accurately fingerprint robot movements and ultimately reconstruct operational workflows. Using a neural network approach to traffic analysis, we find that one can predict TLS-encrypted movements with around 60% accuracy, increasing to near-perfect accuracy under realistic network conditions. Further, we also find that attackers can reconstruct warehousing workflows with similar success. Ultimately, simply adopting best cybersecurity practices is clearly not enough to stop even weak (passive) adversaries.

Attack Rules: An Adversarial Approach to Generate Attacks for Industrial Control Systems using Machine Learning

Jul 11, 2021

Abstract:Adversarial learning is used to test the robustness of machine learning algorithms under attack and to create attacks that deceive the anomaly detection methods in Industrial Control Systems (ICS). Given that the security assessment of an ICS demands that an exhaustive set of possible attack patterns be studied, in this work we propose an association rule mining-based attack generation technique. The technique has been implemented using data from a Secure Water Treatment plant. The proposed technique was able to generate more than 300,000 attack patterns, the vast majority of which are new attack vectors not seen before. Automatically generated attacks improve our understanding of potential attacks and enable the design of robust attack detection techniques.
