Christoph Müller

NeuralCrop: Combining physics and machine learning for improved crop yield predictions

Dec 23, 2025

Abstract: Global gridded crop models (GGCMs) simulate daily crop growth by explicitly representing key biophysical processes and project end-of-season yield time series. They are a primary tool to quantify the impacts of climate change on agricultural productivity and assess associated risks for food security. Despite decades of development, state-of-the-art GGCMs still have substantial uncertainties in simulating complex biophysical processes due to limited process understanding. Recently, machine learning approaches trained on observational data have shown great potential in crop yield predictions. However, these models have not demonstrated improved performance over classical GGCMs and are not suitable for simulating crop yields under changing climate conditions due to problems in generalizing outside their training distributions. Here we introduce NeuralCrop, a hybrid GGCM that combines the strengths of an advanced process-based GGCM, resolving important processes explicitly, with data-driven machine learning components. The model is first trained to emulate a competitive GGCM before it is fine-tuned on observational data. We show that NeuralCrop outperforms state-of-the-art GGCMs across site-level and large-scale cropping regions. Across moisture conditions, NeuralCrop reproduces the interannual yield anomalies in European wheat regions and the US Corn Belt more accurately during the period from 2000 to 2019 with particularly strong improvements under drought extremes. When generalizing to conditions unseen during training, NeuralCrop continues to make robust projections, while pure machine learning models exhibit substantial performance degradation. Our results show that our hybrid crop modelling approach offers overall improved crop modeling and more reliable yield projections under climate change and intensifying extreme weather conditions.

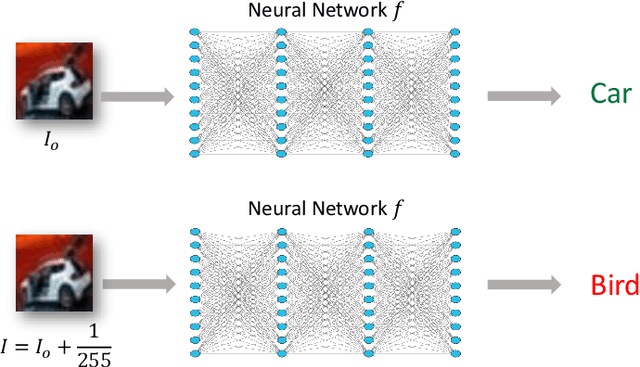

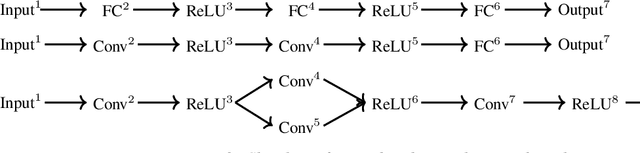

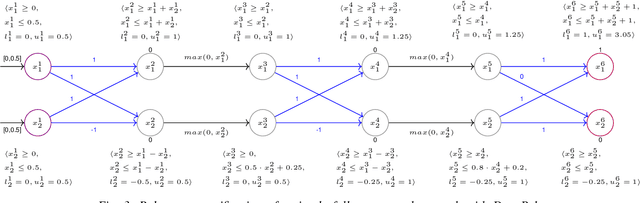

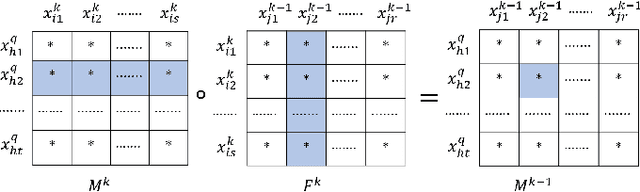

Neural Network Robustness Verification on GPUs

Jul 20, 2020

Abstract: Certifying the robustness of neural networks against adversarial attacks is critical to their reliable adoption in real-world systems including autonomous driving and medical diagnosis. Unfortunately, state-of-the-art verifiers either do not scale to larger networks or are too imprecise to prove robustness, which limits their practical adoption. In this work, we introduce GPUPoly, a scalable verifier that can prove the robustness of significantly larger deep neural networks than possible with prior work. The key insight behind GPUPoly is the design of custom, sound polyhedra algorithms for neural network verification on a GPU. Our algorithms leverage the available GPU parallelism and the inherent sparsity of the underlying neural network verification task. GPUPoly scales to very large networks: for example, it can prove the robustness of a 1M neuron, 34-layer deep residual network in about 1 minute. We believe GPUPoly is a promising step towards the practical verification of large real-world networks.
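To make the notion of a sound robustness certificate concrete, the following is a minimal sketch of interval bound propagation on a tiny ReLU network. This is not GPUPoly's polyhedra algorithm and it does not run on a GPU: interval analysis is a simpler and less precise sound relaxation, used here only for illustration. The names `affine_bounds`, `relu_bounds`, `certify`, and the toy weights are hypothetical and do not come from the paper.

```python
from typing import List, Tuple

Interval = Tuple[float, float]
Layer = Tuple[List[List[float]], List[float]]  # (weight rows, biases)


def affine_bounds(W: List[List[float]], b: List[float],
                  box: List[Interval]) -> List[Interval]:
    """Sound output bounds of y = W x + b when each x_i lies in box[i]."""
    out = []
    for row, bias in zip(W, b):
        lo = hi = bias
        for w, (l, u) in zip(row, box):
            # A positive weight maps lower to lower; a negative weight swaps them.
            if w >= 0:
                lo += w * l
                hi += w * u
            else:
                lo += w * u
                hi += w * l
        out.append((lo, hi))
    return out


def relu_bounds(box: List[Interval]) -> List[Interval]:
    """ReLU is monotone, so it can be applied to both interval ends."""
    return [(max(0.0, l), max(0.0, u)) for l, u in box]


def certify(layers: List[Layer], x: List[float], eps: float, label: int) -> bool:
    """Return True only if every input within an L-infinity ball of radius
    eps around x is provably classified as `label`. A False result means
    "could not prove", not "an adversarial example exists"."""
    box = [(v - eps, v + eps) for v in x]
    for i, (W, b) in enumerate(layers):
        box = affine_bounds(W, b, box)
        if i < len(layers) - 1:  # no ReLU after the output layer
            box = relu_bounds(box)
    target_lo = box[label][0]
    return all(target_lo > hi for j, (_, hi) in enumerate(box) if j != label)


# Hypothetical two-layer toy network (assumption, for illustration only).
toy_layers: List[Layer] = [
    ([[1.0, -1.0], [-1.0, 1.0]], [0.0, 0.0]),
    ([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0]),
]
small_ball = certify(toy_layers, [1.0, 0.0], 0.1, 0)
large_ball = certify(toy_layers, [1.0, 0.0], 0.6, 0)
```

On this toy network the small perturbation radius is certified and the large one is not. The imprecision mentioned in the abstract shows up as exactly this kind of failure to prove: coarser relaxations lose more information per layer, which is one reason more precise relaxations such as polyhedra are of interest.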
