Cenk Bircanoglu

Benchmarking Image Embeddings for E-Commerce: Evaluating Off-the-Shelf Foundation Models, Fine-Tuning Strategies and Practical Trade-offs

Apr 10, 2025

Abstract: We benchmark image embeddings from foundation models for classification and retrieval in e-Commerce, evaluating their suitability for real-world applications. Our study spans embeddings from pre-trained convolutional and transformer models trained via supervised, self-supervised (SSL), and text-image contrastive learning. We assess full fine-tuning and transfer learning (top-tuning) on six diverse e-Commerce datasets covering fashion, consumer goods, cars, food, and retail. Results show that full fine-tuning consistently performs well, while text-image and self-supervised embeddings can match its performance with less training. While supervised embeddings remain stable across architectures, SSL and contrastive embeddings vary significantly and often benefit from top-tuning. Top-tuning emerges as an efficient alternative to full fine-tuning, reducing computational costs. We also explore cross-tuning, noting that its impact depends on dataset characteristics. Our findings offer practical guidelines for choosing embeddings and fine-tuning strategies that balance efficiency and performance.
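To make the top-tuning and retrieval settings concrete: top-tuning is understood here as keeping the backbone frozen and training only a lightweight head on precomputed embeddings, while retrieval ranks a gallery by embedding similarity. The following is a minimal NumPy sketch under stated assumptions, not the paper's code. It assumes the embeddings are already extracted and L2-normalized, uses a plain softmax linear classifier trained by gradient descent as the head, and relies on hypothetical helper names (`top_tune`, `predict`, `retrieve`) and synthetic data standing in for real backbone outputs.

```python
import numpy as np

def l2_normalize(x):
    """Scale each row to unit length so dot products equal cosine similarity."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)

def top_tune(embeddings, labels, num_classes, lr=1.0, epochs=300):
    """Fit a softmax linear head on frozen embeddings; the backbone is never updated."""
    n, d = embeddings.shape
    W = np.zeros((d, num_classes))
    b = np.zeros(num_classes)
    onehot = np.eye(num_classes)[labels]
    for _ in range(epochs):
        logits = embeddings @ W + b
        logits -= logits.max(axis=1, keepdims=True)  # numerical stability
        probs = np.exp(logits)
        probs /= probs.sum(axis=1, keepdims=True)
        grad = (probs - onehot) / n  # gradient of mean cross-entropy w.r.t. logits
        W -= lr * embeddings.T @ grad
        b -= lr * grad.sum(axis=0)
    return W, b

def predict(embeddings, W, b):
    """Class index with the highest logit for each embedding."""
    return np.argmax(embeddings @ W + b, axis=1)

def retrieve(query, gallery, k=5):
    """Indices of the k gallery items most cosine-similar to the query."""
    scores = l2_normalize(gallery) @ l2_normalize(query)
    return np.argsort(-scores)[:k]

# Hypothetical stand-in for frozen backbone outputs: 3 classes, 50 items each.
rng = np.random.default_rng(0)
centers = rng.normal(size=(3, 16)) * 3.0
labels = np.repeat(np.arange(3), 50)
emb = l2_normalize(centers[labels] + rng.normal(size=(150, 16)))

W, b = top_tune(emb, labels, num_classes=3)
accuracy = (predict(emb, W, b) == labels).mean()
neighbours = retrieve(emb[0], emb, k=5)
```

Because only `W` and `b` are trained, each epoch costs a couple of matrix products over cached embeddings rather than a forward and backward pass through the backbone, which is why top-tuning can reduce computational cost relative to full fine-tuning.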

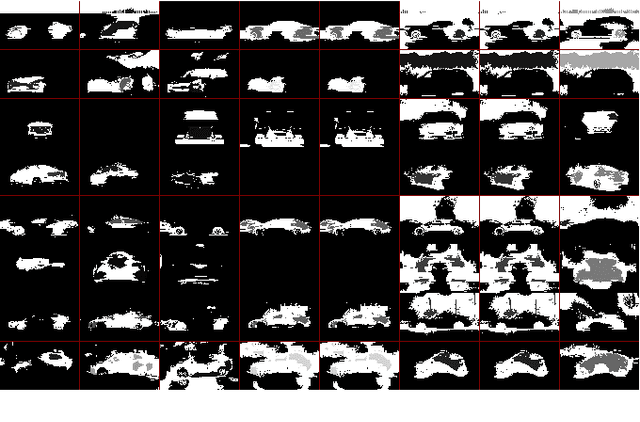

ISIM: Iterative Self-Improved Model for Weakly Supervised Segmentation

Nov 23, 2022

Abstract: Weakly Supervised Semantic Segmentation (WSSS) is a challenging task that aims to learn segmentation labels from class-level labels. In the literature, information obtained from Class Activation Maps (CAMs) is widely exploited in WSSS studies. However, because CAMs are obtained from a classification network, they focus on the most discriminative parts of objects and therefore provide incomplete prior information for segmentation tasks. In this study, to obtain CAMs that are more coherent with segmentation labels, we propose a framework that employs an iterative approach in a modified encoder-decoder-based segmentation model that simultaneously supports classification and segmentation tasks. As no ground-truth segmentation labels are given, the same model also generates pseudo-segmentation labels with the help of dense Conditional Random Fields (dCRF). As a result, the proposed framework becomes an iterative self-improved model. Experiments performed with DeepLabv3 and UNet models show a significant gain on the Pascal VOC12 dataset, and the DeepLabv3 application improves on the current state-of-the-art metric by 2.5%. The implementation associated with the experiments can be found at https://github.com/cenkbircanoglu/isim.
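For readers unfamiliar with CAMs: the CAM for a class is commonly computed by applying that class's classifier weights as a weighted sum over the final convolutional feature maps, then rectifying and normalizing the result. The minimal NumPy sketch below shows that computation and one simple way to turn CAMs into pseudo-segmentation labels. It is an illustration under assumptions, not ISIM's implementation: the fixed `threshold` rule is a deliberately simplified stand-in for the dCRF refinement described above, and all function names and toy arrays are hypothetical.

```python
import numpy as np

def class_activation_map(features, fc_weights, class_idx):
    """CAM for one class.

    features:   (C, H, W) output of the last convolutional layer.
    fc_weights: (num_classes, C) weights of a global-average-pooling classifier.
    """
    cam = np.tensordot(fc_weights[class_idx], features, axes=([0], [0]))  # (H, W)
    cam = np.maximum(cam, 0.0)  # keep only positive evidence for the class
    peak = cam.max()
    return cam / peak if peak > 0 else cam  # scale to [0, 1]

def cams_to_pseudo_labels(cams_by_label, threshold=0.3):
    """Per-pixel argmax over CAMs; weakly activated pixels become background (0).

    Simplified stand-in for dCRF refinement (an assumption, not ISIM's method).
    """
    ids = np.array(list(cams_by_label.keys()))
    stacked = np.stack(list(cams_by_label.values()))  # (K, H, W)
    labels = ids[stacked.argmax(axis=0)]
    labels[stacked.max(axis=0) < threshold] = 0
    return labels

# Toy example: channel 0 fires in the top-left, channel 1 in the bottom-right.
features = np.zeros((2, 4, 4))
features[0, :2, :2] = 1.0
features[1, 2:, 2:] = 1.0
fc_weights = np.eye(2)  # class 0 reads channel 0, class 1 reads channel 1

cams = {
    1: class_activation_map(features, fc_weights, 0),  # segmentation label 1
    2: class_activation_map(features, fc_weights, 1),  # segmentation label 2
}
pseudo = cams_to_pseudo_labels(cams)
```

Per the abstract, ISIM refines such pseudo-labels with dCRF and uses them to supervise the segmentation branch of the same model, whose improved outputs then yield the next round of pseudo-labels.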

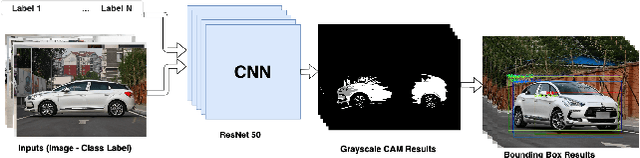

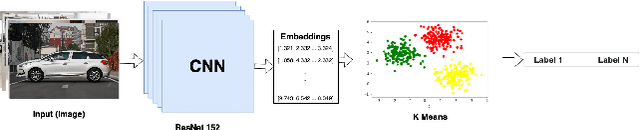

A Comparative Study on Effects of Original and Pseudo Labels for Weakly Supervised Learning for Car Localization Problem

Oct 08, 2020

Abstract: In this study, we present, on a car dataset, the effects on Weakly Supervised localization of different class labels created as a result of multiple conceptual meanings. In addition, generated labels are included in the comparison, which turns the solution into Unsupervised Learning. This paper investigates multiple setups for car localization in images using approaches other than Supervised Learning. To predict localization labels, Class Activation Mapping (CAM) is implemented, and bounding boxes are extracted from its results using morphological edge detection. Besides the original class labels, generated class labels are also employed to train CAM, which turns the problem into an Unsupervised Learning example. In the experiments, we first analyze the effects of class labels on Weakly Supervised localization on the CompCars dataset. We then show that the proposed Unsupervised approach outperforms the Weakly Supervised method on this particular dataset by approximately 6%.
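The abstract extracts bounding boxes from CAM results using morphological edge detection. One plausible reading is sketched below in NumPy under assumptions; it is not the paper's code. It binarizes the CAM at a fraction of its peak, takes the morphological internal gradient (mask pixels removed by a 3x3 erosion) as the object edges, and reports the tight box around those edge pixels. The `threshold` value and all function names are hypothetical choices.

```python
import numpy as np

def _neighbourhoods(mask):
    """Stack the 9 shifted copies of a boolean mask covering each 3x3 neighbourhood."""
    h, w = mask.shape
    padded = np.pad(mask, 1, mode="constant", constant_values=False)
    return np.stack([padded[i:i + h, j:j + w] for i in range(3) for j in range(3)])

def morphological_edges(mask):
    """Internal morphological gradient: mask pixels removed by a 3x3 erosion."""
    eroded = _neighbourhoods(mask).all(axis=0)
    return mask & ~eroded

def cam_to_bbox(cam, threshold=0.5):
    """Return (x_min, y_min, x_max, y_max) around the CAM's edge pixels, or None."""
    peak = cam.max()
    if peak <= 0:
        return None  # no positive activation, nothing to localize
    mask = cam >= threshold * peak
    ys, xs = np.nonzero(morphological_edges(mask))
    if ys.size == 0:
        return None
    return int(xs.min()), int(ys.min()), int(xs.max()), int(ys.max())

# Toy CAM with a single activated rectangle (rows 2-4, columns 3-6).
cam = np.zeros((8, 8))
cam[2:5, 3:7] = 1.0
box = cam_to_bbox(cam)
```

With several disconnected activated regions, this sketch returns one box enclosing all of them; separating regions (for example with connected-component labeling) would be an additional, assumed step.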
