Catherine Soladié

Real-Time Cleaning and Refinement of Facial Animation Signals

Aug 04, 2020

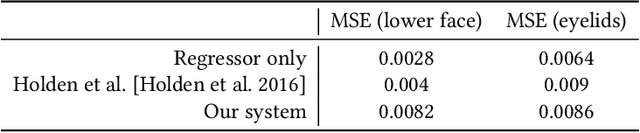

Abstract:With the increasing demand for real-time animated 3D content in the entertainment industry and beyond, performance-based animation has garnered interest among both academic and industrial communities. While recent solutions for motion-capture animation have achieved impressive results, handmade post-processing is often needed, as the generated animations often contain artifacts. Existing real-time motion capture solutions have opted for standard signal processing methods to strengthen temporal coherence of the resulting animations and remove inaccuracies. While these methods produce smooth results, they inherently filter-out part of the dynamics of facial motion, such as high frequency transient movements. In this work, we propose a real-time animation refining system that preserves -- or even restores -- the natural dynamics of facial motions. To do so, we leverage an off-the-shelf recurrent neural network architecture that learns proper facial dynamics patterns on clean animation data. We parametrize our system using the temporal derivatives of the signal, enabling our network to process animations at any framerate. Qualitative results show that our system is able to retrieve natural motion signals from noisy or degraded input animation.

A Robust Interactive Facial Animation Editing System

Jul 18, 2020

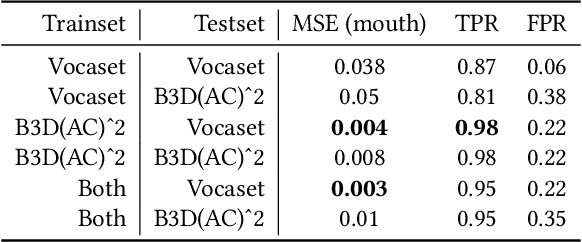

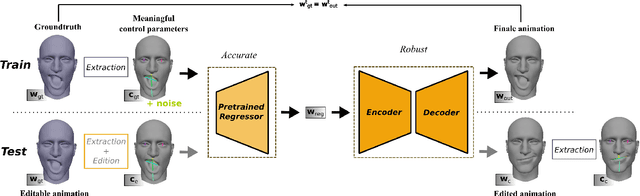

Abstract:Over the past few years, the automatic generation of facial animation for virtual characters has garnered interest among the animation research and industry communities. Recent research contributions leverage machine-learning approaches to enable impressive capabilities at generating plausible facial animation from audio and/or video signals. However, these approaches do not address the problem of animation edition, meaning the need for correcting an unsatisfactory baseline animation or modifying the animation content itself. In facial animation pipelines, the process of editing an existing animation is just as important and time-consuming as producing a baseline. In this work, we propose a new learning-based approach to easily edit a facial animation from a set of intuitive control parameters. To cope with high-frequency components in facial movements and preserve a temporal coherency in the animation, we use a resolution-preserving fully convolutional neural network that maps control parameters to blendshapes coefficients sequences. We stack an additional resolution-preserving animation autoencoder after the regressor to ensure that the system outputs natural-looking animation. The proposed system is robust and can handle coarse, exaggerated edits from non-specialist users. It also retains the high-frequency motion of the facial animation.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge